The Big WHS was originally supposed to house approximately 30TB of storage when the plans were first detailed in an Excel spreadsheet BOM in December 2009. That was a big upgrade from my first DIY Windows Home Server box, which had well under 20TB. About five months later, storage capacity has crested 60TB, with further room to expand. The Big WHS now spans two 4U Norco cases (8U of rackspace in total, with another 4U chassis in the works) and requires well over a dozen ports on the gigabit switch.

The current hardware list is as follows:

- CPU: Intel Core i7 920

- Motherboard: Supermicro X8ST3-F

- Memory: Patriot Viper 12GB DDR3 1600

- Case (1): Norco RPC-4020

- Case (2): Norco RPC-4220

- Drives: 12x Seagate 7200rpm 1.5TB, 2x 7200.11 1TB, 12x Hitachi 7200rpm 2TB and 2x 1TB, 8x Western Digital Green 1.5TB EADS, 2x Western Digital Green 2TB EARS.

- SSD: 2x Intel X25-V 40GB

- Controller: Areca ARC-1680LP

- SAS Expanders: 2x HP SAS Expander (one in each enclosure)

- NIC (additional): 2x Intel Pro/1000 PT Quad, Intel Pro/1000 GT (PCI)

- Host OS: Windows Server 2008 R2 with Hyper-V installed

- Fan Controllers: Various

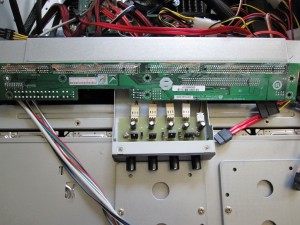

- PCMIG board to power the HP SAS Expander in the Norco RPC-4220

- Main switch – Dell PowerConnect 2724
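As a quick sanity check on the "over 60TB" figure, the drive line from the list above can be tallied. This is a minimal sketch: the (count, size) pairs are transcribed from the list, the two 1TB drives listed after the Hitachis are assumed to be separate from the 2TB Hitachis, and it counts raw capacity only (decimal TB as marketed, no RAID overhead).

```python
# Drive inventory transcribed from the hardware list: (label, count, size in TB).
DRIVES = [
    ("Seagate 7200rpm 1.5TB", 12, 1.5),
    ("Seagate 7200.11 1TB", 2, 1.0),
    ("Hitachi 7200rpm 2TB", 12, 2.0),
    ("1TB (listed after the Hitachis)", 2, 1.0),
    ("WD Green 1.5TB EADS", 8, 1.5),
    ("WD Green 2TB EARS", 2, 2.0),
]


def raw_totals(drives):
    """Return (drive_count, raw_tb) summed over the inventory."""
    count = sum(n for _, n, _ in drives)
    raw_tb = sum(n * size for _, n, size in drives)
    return count, raw_tb


if __name__ == "__main__":
    count, raw_tb = raw_totals(DRIVES)
    print(f"{count} hard drives, {raw_tb:.1f}TB raw")
```

The same tally also gives the spindle count behind the "almost 40 drives spinning" remark later in the post.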

Changes and Future Plans

The configuration changes have had a few drivers:

- I have been using many more Hyper-V virtual machines on the server lately; 8-10 VMs run simultaneously on the box these days.

- Storage growth is approximately 1.2TB/month due to additional witness preparation footage being shot, all in HD. The acceleration of storage growth has required space for additional drives.

- The two Norco cases are LOUD with the stock fans, and the RPC-4220 is significantly louder than the RPC-4020. I found that running the stock fans at 75% provided enough cooling while significantly reducing noise.

Bottom of modified PCMIG board and simple fan controller in RPC-4220 DAS Enclosure

- Since I generally like to give each Hyper-V VM its own gigabit NIC, I added the second Intel Pro/1000 PT Quad and an Intel Pro/1000 GT. The GT is a PCI card, so it works fine in the Supermicro motherboard.

- The second enclosure required SAS connectivity, so I am using the Areca 1680LP’s external port to connect to the HP SAS Expander’s external port. In the second enclosure, a PCMIG board powers the HP SAS Expander, so I can house a second server based on an Intel Xeon X3440 and a Supermicro X8SIL-F.
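The 75% fan setting can be put in rough numbers with the standard fan affinity laws: airflow scales linearly with fan speed, static pressure with its square, and shaft power with its cube. This is only a sketch; it assumes the controller's 75% setting maps directly to 75% of rated RPM, which depends on the fan controller, and it says nothing about actual drive temperatures.

```python
def affinity(speed_ratio):
    """Scale factors for a fan run at speed_ratio of its rated RPM (fan affinity laws)."""
    if not 0 < speed_ratio <= 1:
        raise ValueError("speed_ratio must be in (0, 1]")
    return {
        "airflow": speed_ratio,        # Q2/Q1 = N2/N1
        "pressure": speed_ratio ** 2,  # P2/P1 = (N2/N1)^2
        "power": speed_ratio ** 3,     # W2/W1 = (N2/N1)^3
    }


if __name__ == "__main__":
    for name, factor in affinity(0.75).items():
        print(f"{name}: {factor:.0%} of stock")
```

At 75% speed the fans still move three quarters of their rated airflow while drawing well under half the power, which is consistent with cooling remaining adequate in a case with this much airflow headroom.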

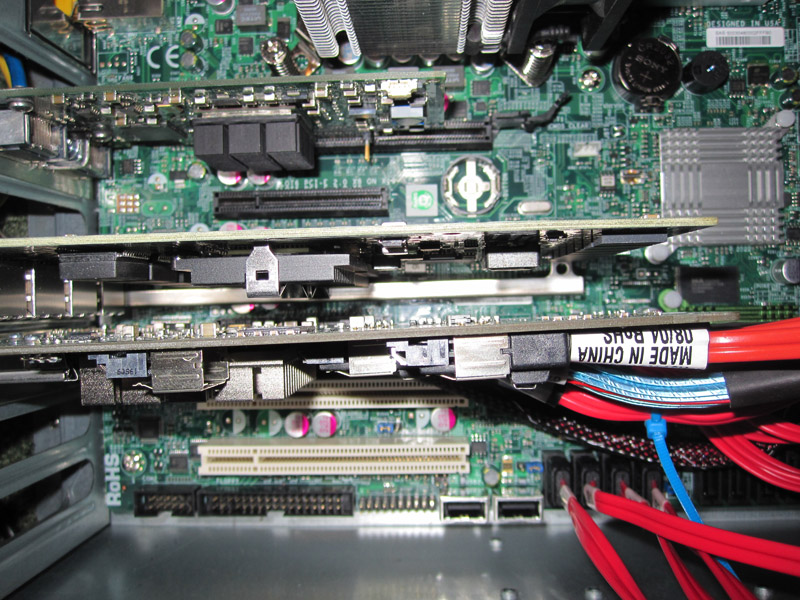

Install SFF-8088 cable between the Norco RPC-4220 DAS/SAS Expander Enclosure and main server

The second 4U houses a Supermicro X8SIL-F with an Intel Xeon X3430. Most of the drives in the chassis and the HP SAS Expander run on one power supply, while this secondary server is powered by a second PSU. This second mini server gives me a test bed for Hyper-V VM migration, VMware ESXi appliances, and bare-metal installs of things like WHS V2 codename VAIL, so I can test configurations and changes before running them on the Big WHS. Since it sits in the secondary DAS Enclosure of the Big WHS, I can also re-allocate a row of disks to it just by changing where one cable terminates.

- SSDs were added as basic cache drives, either for higher-I/O VMs (my SQL Server) or just for benchmarking other VMs. I can imagine adding more SSDs in the future, and they may go into the third 4U that is in progress.

So those are the basic changes at this point. Nothing fancy, but I am probably done buying drives until the 3TB hard drives come to market later in 2010.
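At roughly 1.2TB/month of new HD footage, whatever headroom the pool has translates directly into months before more drives are needed. A minimal sketch; the free-space figure in the usage lines is a hypothetical placeholder, since the post does not say how much of the 60TB is already in use.

```python
GROWTH_TB_PER_MONTH = 1.2  # growth rate quoted in the post


def months_of_headroom(free_tb, growth_tb_per_month=GROWTH_TB_PER_MONTH):
    """Months until free space runs out at a constant growth rate."""
    if growth_tb_per_month <= 0:
        raise ValueError("growth rate must be positive")
    return free_tb / growth_tb_per_month


if __name__ == "__main__":
    hypothetical_free_tb = 12.0  # HYPOTHETICAL: not a figure from the post
    print(f"{months_of_headroom(hypothetical_free_tb):.1f} months of headroom")
```

Plugging in the real free space after RAID overhead gives a rough answer to whether the pool can wait for 3TB drives.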

I am not done yet though. There are a few things I have been meaning to add:

- Supermicro X8SIL-F plus a Core i3 530 or Xeon X3440 in the second 4U (I also ordered an X3460 today). It will need a separate power supply, but I would like to house a second CPU and more RAM in the second Norco enclosure. This will let me migrate some VMs and use the few unused hot-swap slots in the Norco RPC-4220. I also want a platform to test WHS V2 codename VAIL on a bare-metal installation, and a VMware ESXi machine. Finally, I want something that can host client virtual machines before putting them on the Big WHS.

- 10 Gigabit Ethernet (10GBASE-T)… at some point I want to upgrade the network. A big factor here is that both the switches and the cards are very expensive. Even 2x SSDs in RAID 0 on SATA II read at below 600MB/s, so there is very little opportunity to use the full 10 gigabit bandwidth between a single client and the server, even over a direct CAT 6 run.
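The 10 gigabit argument is simple unit conversion: a read rate in MB/s times 8 gives the bits per second it can put on the wire. A sketch that ignores Ethernet and TCP framing overhead, so real link utilization would be slightly lower.

```python
def link_utilization(read_mb_per_s, link_gbit_per_s):
    """Fraction of a link's raw bit rate a given read speed could fill (no protocol overhead)."""
    read_gbit_per_s = read_mb_per_s * 8 / 1000  # decimal MB/s -> Gb/s
    return min(read_gbit_per_s / link_gbit_per_s, 1.0)


if __name__ == "__main__":
    ssd_raid0_mb_s = 600  # upper bound quoted for 2x SSD RAID 0 on SATA II
    for link in (1, 10):
        print(f"{link} Gb/s link: {link_utilization(ssd_raid0_mb_s, link):.0%} utilized")
```

Even the fastest local storage in the box fills less than half of a 10 gigabit link, while it would saturate a single gigabit port several times over.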

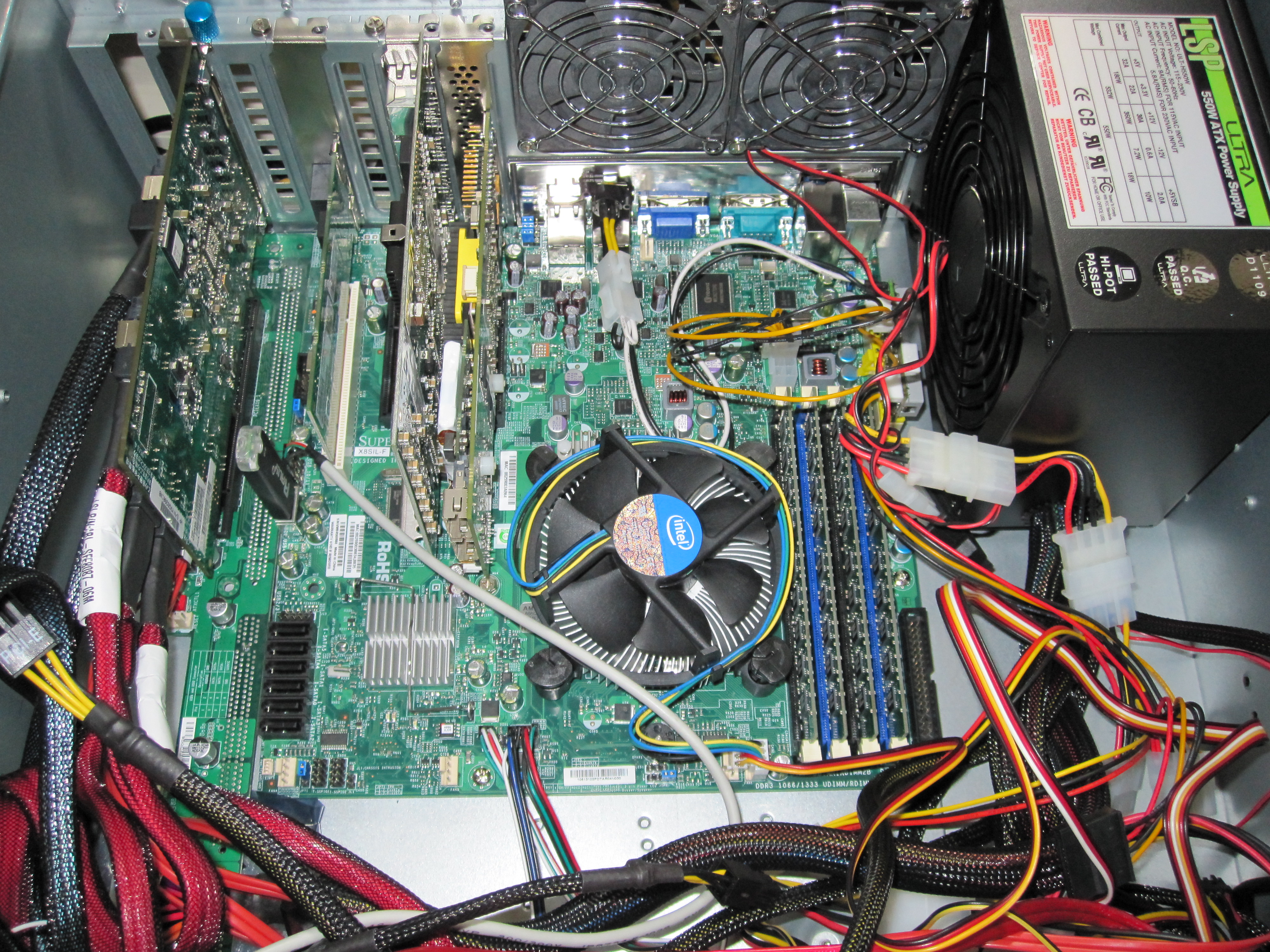

- I am thinking of using the Norco RPC-470 from the first WHS as a third 4U enclosure for holding optical drives, SSDs, and another virtual machine farm. I am more sold on it being an optical media expansion/SSD case than on it being yet another virtual machine box. Then again, I am biased at the moment because I lost an entire physical stack of installation DVDs two weeks ago, and verifying that those discs no longer work has been time consuming. I’ve started burning in the new 4U and am actually connecting it to a Silicon Image-based 4-port eSATA card (I just wanted to see what that would be like).

Third 4U to be more disks plus SSDs and/or optical drives

- Brace for the impending drive failures. With almost 40 drives spinning, I know that failures in the next few months are inevitable, so I need to start planning today for replacing disks in the near future. That is actually why I am running more spare capacity than normal.
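The "inevitable failures" point can be made concrete with a simple independence model: if each drive has an annualized failure rate (AFR) p, the chance that at least one of n drives fails within t months is 1 - (1 - p)^(n·t/12). This is a rough sketch; the AFR values in the usage lines are hypothetical placeholders rather than figures for these particular models, and real drive failures are neither fully independent nor constant over a drive's life.

```python
def p_any_failure(n_drives, afr, months):
    """P(at least one of n_drives fails within `months`), assuming independent
    drives with a constant hazard matching the annualized failure rate `afr`."""
    if not 0 <= afr < 1:
        raise ValueError("afr must be in [0, 1)")
    survive_one = (1 - afr) ** (months / 12)  # one drive survives the window
    return 1 - survive_one ** n_drives        # complement of "every drive survives"


if __name__ == "__main__":
    n = 38  # spinning hard drives in the hardware list
    for afr in (0.02, 0.05):  # HYPOTHETICAL AFRs, not measured values
        p = p_any_failure(n, afr, 6)
        print(f"AFR {afr:.0%}: {p:.0%} chance of at least one failure in 6 months")
```

Even with optimistic per-drive rates, the probability for the whole array over a few months is large, which is the reasoning behind keeping spare capacity on hand.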

Finally, the first WHS was dismantled this weekend. Its Norco RPC-470 is being used as a test server (see above) and will most likely become a third 4U for the Big WHS. The Core 2 Duo E6420, Gigabyte motherboard, DDR2 memory, and Adaptec controllers will most likely be sold or given away.

That wraps up this edition of the Big WHS update. Stay tuned to see what happens next! It should be exciting as WHS V2 codename VAIL gets closer to release.

Damn, that first picture is the money shot. Why so many VMs though? I thought you mainly use Server 2008 for storage and the VMs are just to play around with, not really important. I forgot what kind of RAID you’re running… RAID 6 would be my first guess. Also, how’s the power consumption on that thing?

Sorry for so many questions at once, haha

Last night (after I finished that post) I added the RPC-470 with an Intel board and an i3-530… so it is constantly growing.

One big reason for multiple VMs is that I’ve been playing with basically all of the open source NAS distributions, and some Microsoft products.

The RAID is a mix: RAID 6, RAID 1, RAID 0, and some straight pass-through disks.
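With that many RAID levels in one box, it helps to recall how much of each array's raw space is actually usable. A minimal sketch for equal-size drives; it treats RAID 1 as a mirror set with the capacity of one drive, ignores controller metadata, and the example groupings are hypothetical, since the reply does not say which drives sit in which array.

```python
def usable_tb(level, n_drives, drive_tb):
    """Return (usable TB, drive failures tolerated) for an array of equal-size drives."""
    minimum = {"raid0": 2, "raid1": 2, "raid6": 4, "passthrough": 1}
    if level not in minimum:
        raise ValueError(f"unknown level: {level}")
    if n_drives < minimum[level]:
        raise ValueError(f"{level} needs at least {minimum[level]} drives")
    if level == "raid0":  # striping: all capacity, no redundancy
        return n_drives * drive_tb, 0
    if level == "raid1":  # mirroring: capacity of one drive
        return drive_tb, n_drives - 1
    if level == "raid6":  # dual parity: two drives' worth of space goes to parity
        return (n_drives - 2) * drive_tb, 2
    if n_drives != 1:     # pass-through exposes a single disk as-is
        raise ValueError("pass-through is one disk at a time")
    return drive_tb, 0


if __name__ == "__main__":
    # HYPOTHETICAL groupings for illustration only.
    examples = [("raid6", 12, 2.0), ("raid1", 2, 1.0), ("raid0", 2, 0.04), ("passthrough", 1, 1.5)]
    for level, n, size in examples:
        cap, tolerated = usable_tb(level, n, size)
        print(f"{level}: {n} x {size}TB -> {cap:.2f}TB usable, survives {tolerated} failure(s)")
```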

Power consumption isn’t great, but it is much better than you would think. I haven’t tested the full setup, but I would be surprised if it averaged over 500W or peaked above 1kW. Then again, I have not hooked up a Kill-A-Watt between the IBM UPS and the wall in several months.
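A 500W average turns into energy and running cost simply by multiplying by the hours in a year. A sketch; the electricity price is a hypothetical placeholder, and the 500W figure is an upper-bound guess from the reply above, not a measurement.

```python
HOURS_PER_YEAR = 24 * 365  # non-leap year


def annual_energy_kwh(avg_watts):
    """Energy used in a year at a constant average draw."""
    return avg_watts * HOURS_PER_YEAR / 1000


def annual_cost(avg_watts, price_per_kwh):
    """Yearly electricity cost at a flat per-kWh rate."""
    return annual_energy_kwh(avg_watts) * price_per_kwh


if __name__ == "__main__":
    avg_w = 500   # upper-bound average guessed in the reply
    price = 0.12  # HYPOTHETICAL $/kWh; substitute your utility's rate
    print(f"{annual_energy_kwh(avg_w):,.0f} kWh/year, about ${annual_cost(avg_w, price):,.0f}/year")
```

A Kill-A-Watt reading between the UPS and the wall would replace the guessed average with a measured one.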

Which NAS do you like the most?

For pure NAS, I think OpenSolaris/EON ZFS Store (based on OpenSolaris) is the best.

FreeNAS has a nice WebGUI and is based on FreeBSD. It has an older revision of ZFS, but it is much more user friendly.

Windows Home Server is super easy to configure and maintain, and has by far the best 3rd party ecosystem of them all. VAIL is going to be a change, but it is, in many ways, an improvement.

Openfiler has a nice WebGUI, but it is a bit more powerful and more complex to set up than FreeNAS, and it does not have ZFS.

I guess my answer is, it depends :-)

So what do you think about parallel vs. serial (SAS) SCSI?

Some people say parallel is dead, but SCSI in general was killed by SATA because of its high prices. Usually when I get my hands on SCSI equipment (right now I have some Xfire servers with Ultra320), it is way too obsolete.

There is an Ultra-640 SCSI standard too, though I personally have never seen a card of that kind. Some Ultra320 SCSI controllers and hard drives still sell at insane prices, but they are nothing more than a pile of junk when you can buy 2TB 10K RPM SATA drives for $60…

SAS is a huge improvement over prior SCSI implementations. I still remember having to terminate the huge SCSI ribbon chains. Realistically, the only reason to buy pre-SAS SCSI hardware these days is if you have some mission-critical server that needs a spare/replacement.

With that being said, SAS is still quite a bit better than SATA, both in terms of interface and drive performance.

Hi,

I’ve been amazed by your build, and I want to share some info on a scalable, high-performance, platform-independent storage solution you may want to explore.

Please check the link below:

http://www.compellent.com/

BTW… what type of data do you store on those drives?

Are you into video?

Please check out this one as well:

http://www.schange.com/

If it’s petabyte storage that would satisfy your ever-growing needs, then SeaChange has it all for you.

I have friends working there

I could have worked for them in 2008, but I declined their offer, and I am now working here in Singapore as an IT professional.

Personally, I have a humble 4TB (2 x 2TB Hitachi Deskstar 7200) Windows Home Server 2003 hosted on a humble Shuttle K45SE running 24 hours a day.

I also recycled my original DIY Windows Home Server (Intel Atom 945GCLF2D) with 2 x 1.5TB WD Green drives, and it now runs FreeNAS.

For my web server, I have Windows Server 2008 Standard running IIS, hosting iWeb, WordPress, Joomla, Moodle and phpBB.

Lastly… on the cheap, you may want to explore SATA hardware port multipliers from Addonics.

How would you handle RAID on your server with two JBODs? If I remember right, most RAID cards only have one external SFF-8088 port.

Currently (July) I do have more than one RAID card in there, but you can easily convert an SFF-8087 port to an SFF-8088 port. I have a bunch of two- and four-port converters in there for when I do things like testing an LSI 1068e-based add-in card, which has only internal ports, with the SAS Expander enclosures.

So two RAID cards will still work with the software RAID that Openfiler/OpenSolaris use. Perfect.

Exactly :-) The other thing it works for is something like WHS and Vail, where you can just connect drives.

You also have the option of doing RAID 6 + hot spare(s) in each enclosure (I don’t like spanning arrays across enclosures.)
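The "RAID 6 plus hot spares per enclosure, no spanning" layout is easy to budget one chassis at a time. A sketch assuming every bay in an enclosure holds a same-size drive; the 20-bay count matches the Norco RPC-4020/4220, but the 2TB drive size and the spare counts in the usage lines are illustrative choices, not the author's configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Enclosure:
    name: str
    bays: int
    drive_tb: float
    hot_spares: int = 1


def plan(enclosure):
    """One RAID 6 array per enclosure, with its hot spares in the same chassis,
    so no array (or rebuild) ever depends on a disk in another enclosure."""
    members = enclosure.bays - enclosure.hot_spares
    if members < 4:
        raise ValueError(f"{enclosure.name}: RAID 6 needs at least 4 member drives")
    return {"members": members, "usable_tb": (members - 2) * enclosure.drive_tb}


if __name__ == "__main__":
    for enc in (Enclosure("RPC-4020", 20, 2.0, 1), Enclosure("RPC-4220", 20, 2.0, 2)):
        print(enc.name, plan(enc))
```

Keeping each array inside one chassis means losing an enclosure's power or expander link degrades only that enclosure's array.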

144 Toshiba MBF2600RC Hard Drives: $72,000

6 Supermicro 2U Chassis w/ 24 2.5″ Hot swap bays: $8,300

Other Components (CPUs, motherboard, etc.): $8,000

Cost for beating EMC in price and performance: Priceless.

Estimated IOPS: 19000
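The tally above reduces to a few ratios. A sketch that only re-adds the quoted prices and divides by the quoted IOPS estimate; it does not verify the 19,000 IOPS figure itself.

```python
# Prices and IOPS estimate as quoted in the comment.
DRIVE_KEY = "144x Toshiba MBF2600RC"
COMPONENTS_USD = {
    DRIVE_KEY: 72_000,
    "6x Supermicro 2U 24-bay chassis": 8_300,
    "Other components (CPUs, motherboard, etc.)": 8_000,
}


def summarize(components_usd, drives, chassis, est_iops):
    """Derive totals and per-unit ratios from a parts list and an IOPS estimate."""
    total = sum(components_usd.values())
    return {
        "total_usd": total,
        "usd_per_drive": components_usd[DRIVE_KEY] / drives,
        "usd_per_iops": total / est_iops,
        "iops_per_drive": est_iops / drives,
        "drives_per_chassis": drives / chassis,
    }


if __name__ == "__main__":
    s = summarize(COMPONENTS_USD, drives=144, chassis=6, est_iops=19_000)
    print(f"Total ${s['total_usd']:,}; ${s['usd_per_drive']:,.0f}/drive; "
          f"${s['usd_per_iops']:.2f}/IOPS; {s['iops_per_drive']:.0f} IOPS/drive; "
          f"{s['drives_per_chassis']:.0f} drives/chassis")
```

The quoted parts add up to $88,300, or $500 per drive, and 144 drives across 6 chassis fills exactly the 24 bays in each.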

Only one more question. Could you use a RAID card instead of a SAS expander?

Great question. You can; however, that would basically require going from SFF-8087 on the RAID card to external SFF-8088, then doing the SFF-8088 -> SFF-8087 conversion in the second enclosure. The net result is a LOT of external wiring, but you do get more performance. Also, connecting more than 1-2 chassis will be very difficult without expanders.
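The trade-off comes down to lane arithmetic. SFF-8087 (internal) and SFF-8088 (external) connectors each carry four SAS lanes, so direct attach needs one controller lane per drive, while an expander shares one 4-lane uplink among every drive behind it. A sketch; the 3Gb/s per-lane rate is an assumption (set it to your controller's actual link rate), and the figures ignore protocol overhead and what the drives can really sustain.

```python
import math

LANES_PER_CONNECTOR = 4  # SFF-8087 and SFF-8088 are both x4 connectors


def direct_attach_connectors(drives):
    """x4 cables needed when every drive gets its own controller lane (no expander)."""
    return math.ceil(drives / LANES_PER_CONNECTOR)


def expander_gbit_per_drive(drives, uplink_connectors=1, lane_gbit=3.0):
    """Worst-case share of the expander uplink per drive when all drives stream at once.
    lane_gbit is an ASSUMED per-lane rate; adjust for your hardware."""
    return uplink_connectors * LANES_PER_CONNECTOR * lane_gbit / drives


if __name__ == "__main__":
    bays = 20  # one 20-bay Norco enclosure
    print(f"Direct attach: {direct_attach_connectors(bays)} x4 cables per enclosure")
    print(f"Single-link expander: {expander_gbit_per_drive(bays):.2f} Gb/s per drive if all are busy")
```

Direct attach gives every drive a full lane but needs five cables per 20-bay chassis, each of which has to be converted to SFF-8088 to leave the case; the expander needs one cable but splits its bandwidth.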

Also, as noted in the comments on the Build Your Own SAS Expander Part 1 post (see the 19 Aug 2010 post by Doug), Supermicro just released a 4U enclosure with 72 hot-swap 2.5″ drive bays. When the 25nm Intel SSDs come to market, I am thinking of building something like this.

I did find this: http://www.lsi.com/DistributionSystem/AssetDocument/LSI_620J_JBOD_PB_031610.pdf

Seems like it will do the job really well… I just have to figure out how to hook it up to a single server using both host ports. Most RAID controllers I’ve seen only have one external SFF-8088 port.

The Supermicro SC216 is like the 620J, but it has better power supplies and is US$700 cheaper.

Yes, but the SC216 doesn’t come with the SAS components already built in, and it isn’t quite as modular.

Check the E1/E2 variants, for example; in Supermicro terms, that usually denotes single or dual onboard SAS expanders.