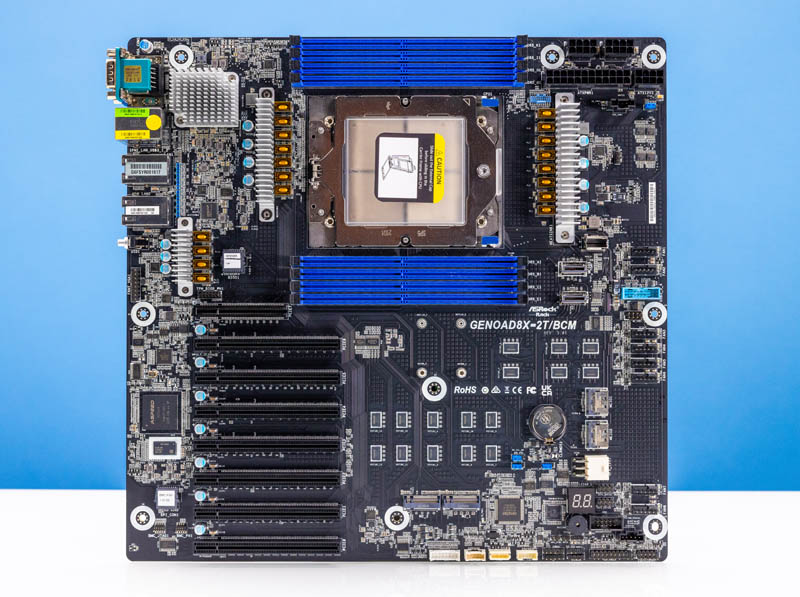

The ASRock Rack GENOAD8X-2T/BCM might be an uncomfortably good motherboard. It has a huge number of features that those looking to build an AMD EPYC-based system will want, exposing the platform’s huge I/O capabilities to builders. At the same time, this is a platform with some quirks to it, so it is a fun one to review. Let us get to it.

ASRock Rack GENOAD8X-2T/BCM

Let us start off with the most important spec of the motherboard: it is EEB (12.63″ x 13″) in size. That means it will fit many larger cases on the market today, but it will require looking for EEB support. Even at a size much larger than a standard ATX motherboard, this board is absolutely packed with functionality, to the point that it almost feels like ASRock Rack ran out of space in places. Many server motherboards today use proprietary form factors, but boards like this help those who wish to build their own servers in specific chassis.

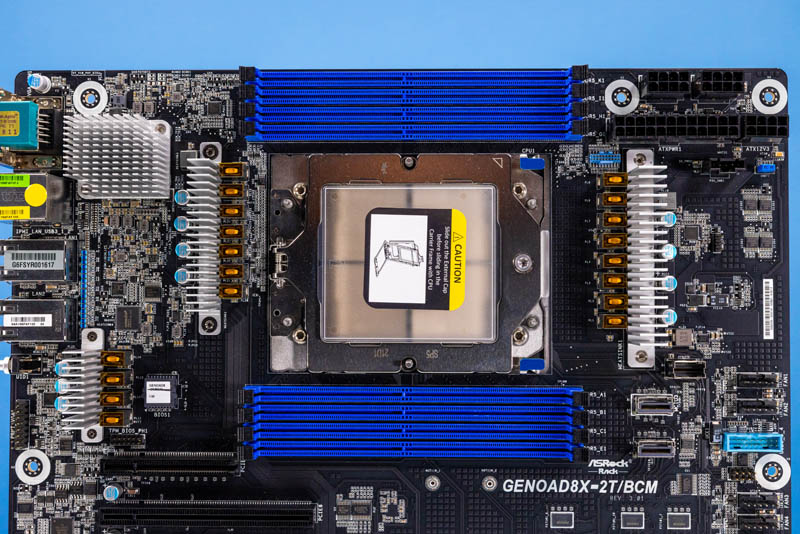

Let us start with a prime example of what makes this platform cool, but also the constraints it is under. We get an AMD socket SP5. Just for some sense, that allows for up to a 96-core AMD EPYC 9004 “Genoa”, a 96-core with 1.1GB of L3 cache Genoa-X, or a 128-core Bergamo. Putting it succinctly, one can get roughly the performance of a dual-socket previous-generation server in this single socket.

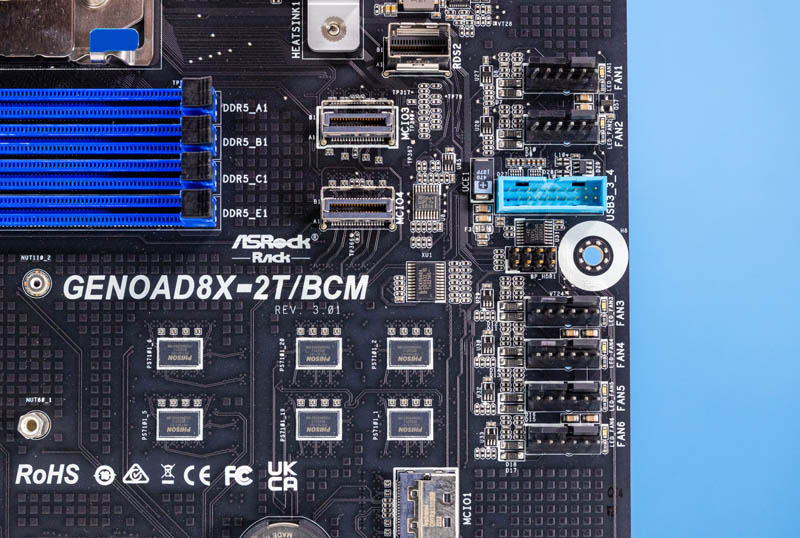

There are trade-offs to fit these massive CPUs. One of the most prominent is memory. This motherboard only supports 8-channel DDR5 memory in one DIMM per channel (1DPC) mode. We recently explained Why 2 DIMMs Per Channel Will Matter Less in Servers, but SP5 is a 12-channel platform, so we are losing a third of the memory channels (8 of 12) and roughly 66% of the maximum capacity (8 of a possible 24 DIMM slots) with this design. On the other hand, with DDR5 we get roughly 50% more memory bandwidth than the previous generation’s 8-channel DDR4 designs. Still, it is less than the CPUs are capable of in terms of performance and capacity. Putting 24 DDR5 DIMM slots next to an SP5 socket would have taken up an enormous amount of motherboard space, so the trade-off was made.
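As a rough back-of-the-envelope check on the bandwidth comparison, here is a minimal Python sketch of theoretical peak memory bandwidth. It assumes DDR5-4800 for this platform and DDR4-3200 for the previous generation (neither speed is stated above) and a 64-bit (8-byte) data path per channel. The function name is ours for illustration, and real-world throughput will be lower than these peak figures.

```python
def peak_bandwidth_gbs(channels: int, mega_transfers: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s: channels x MT/s x bytes per transfer."""
    return channels * mega_transfers * bytes_per_transfer / 1000


# Assumed memory speeds; these are not taken from the review text.
ddr4_8ch = peak_bandwidth_gbs(8, 3200)    # previous generation, 8-channel DDR4-3200
ddr5_8ch = peak_bandwidth_gbs(8, 4800)    # this board, 8-channel DDR5-4800
ddr5_12ch = peak_bandwidth_gbs(12, 4800)  # full 12-channel SP5 configuration

print(f"8-channel DDR4-3200:  {ddr4_8ch:.1f} GB/s")
print(f"8-channel DDR5-4800:  {ddr5_8ch:.1f} GB/s")
print(f"12-channel DDR5-4800: {ddr5_12ch:.1f} GB/s")
print(f"Gain over DDR4 at 8 channels: {ddr5_8ch / ddr4_8ch - 1:.0%}")
print(f"Share of 12-channel peak available here: {ddr5_8ch / ddr5_12ch:.0%}")
```

Under those assumptions, the 8-channel layout lands about halfway between the older 8-channel DDR4 platforms and what a full 12-channel SP5 board could deliver.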

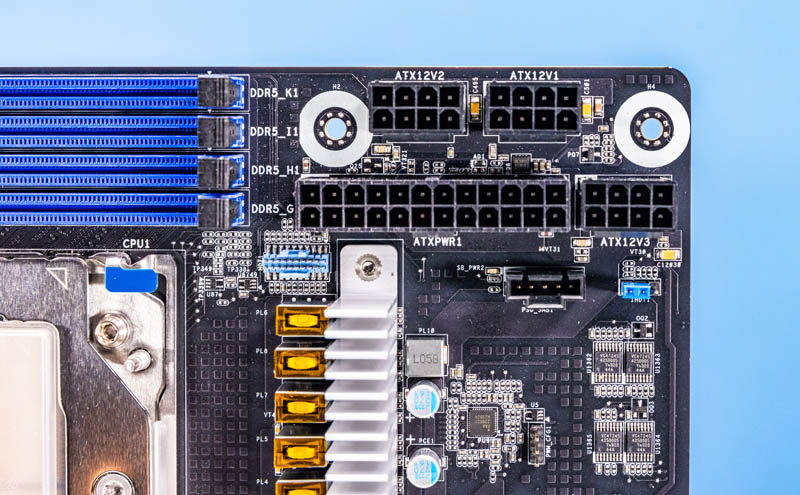

Something else that is a bit different with this platform is the power input. There are three ATX12V CPU power inputs along with a standard ATX power input. Having CPUs that can use 350W or more plus a huge set of components means these systems need more power. We started STH in the era when it was common to have a single ATX12V power connector onboard.

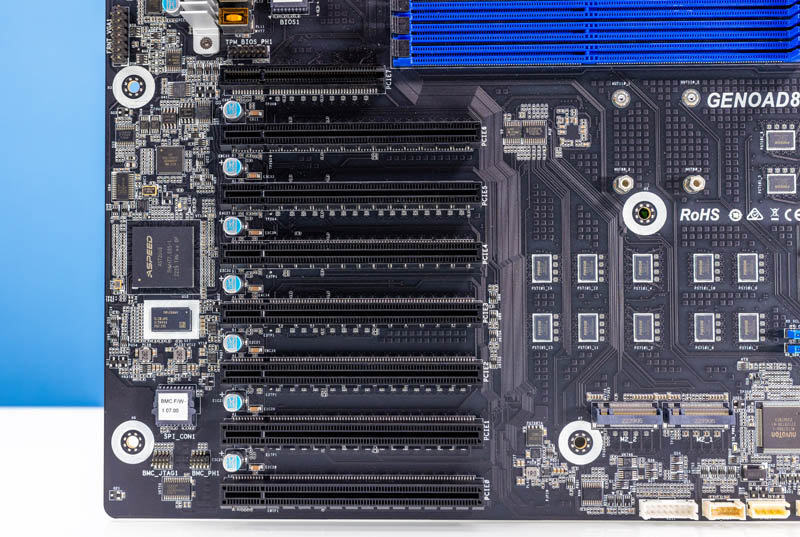

Then comes the expansion slot array that many will be excited to see. There are seven PCIe Gen5 x16 slots and one x8 slot. Four of those x16 slots can run in CXL 1.1 mode.
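To put that slot count in perspective, here is a small Python sketch that tallies the slot lanes and estimates per-slot bandwidth. It uses the PCIe Gen5 raw rate of 32 GT/s per lane with 128b/130b encoding and ignores protocol overhead, so treat the results as upper bounds per direction. The variable and function names are ours for illustration.

```python
GEN5_GT_PER_LANE = 32.0  # PCIe Gen5 raw signaling rate per lane, in GT/s
ENCODING = 128 / 130     # 128b/130b line encoding efficiency


def pcie_gen5_gbs(lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, before protocol overhead."""
    return GEN5_GT_PER_LANE * ENCODING * lanes / 8


# Seven x16 slots and one x8 slot, as described in the review.
slots = [16] * 7 + [8]
total_lanes = sum(slots)

print(f"Total slot lanes: {total_lanes}")
print(f"Gen5 x16: ~{pcie_gen5_gbs(16):.1f} GB/s per direction")
print(f"Gen5 x8:  ~{pcie_gen5_gbs(8):.1f} GB/s per direction")
```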

Seven PCIe Gen5 x16 and one x8 slot would be a lot of I/O alone, but there is more, a lot more. An example is the dual M.2 slots onboard.

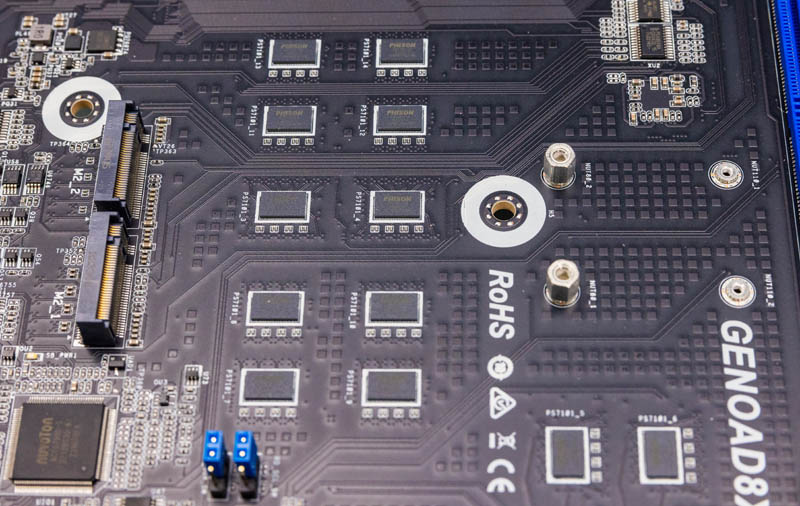

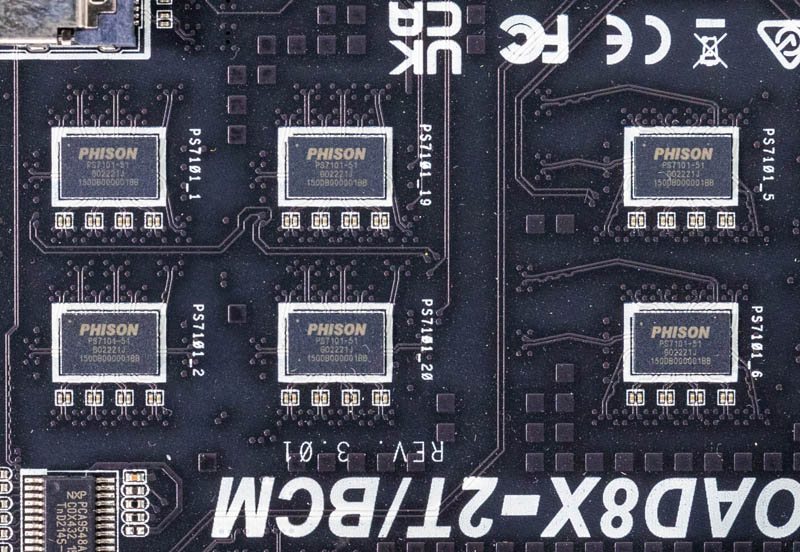

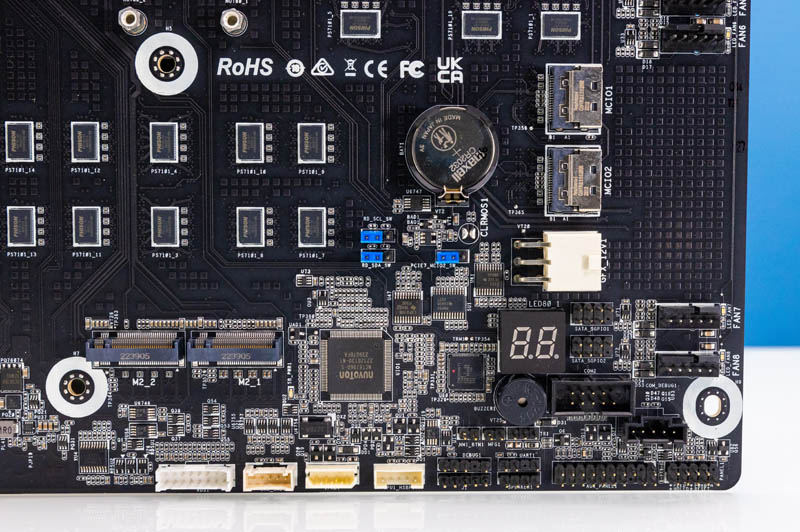

Before we get to the rest of the I/O, we should probably address the chips found all over this motherboard. These are Phison PS7101 chips, which are PCIe Gen5 signal redrivers with mux/demux. With PCIe Gen5, even routing signals from one side of the motherboard to the other can be challenging from a signal integrity standpoint. That is even more so on a motherboard designed like this one, where the two PCIe x8 segments on the AMD Green2 side can either drive one PCIe Gen5 x16 slot, or send x8 to the slot, x4 to an M.2, and x4 to an MCIO connector. Those different distances were not as big of a challenge in the PCIe Gen3 era, but with PCIe Gen5 they are, which is why chips like the Phison PS7101 are being used.
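The mux/demux idea can be sketched as a simple lane-routing table. This is only an illustrative Python model of the two options described above for one pair of x8 segments; the mode names and dictionary layout are hypothetical and do not reflect how the firmware or the PS7101 is actually configured.

```python
# Two x8 segments feed this group of connectors, so 16 lanes are available.
SEGMENT_LANES = 16

# Hypothetical routing modes, mirroring the two options described in the text.
ROUTING_MODES = {
    "full_slot": {"slot": 16},                 # both x8 segments go to the x16 slot
    "split": {"slot": 8, "m2": 4, "mcio": 4},  # x8 to slot, x4 to M.2, x4 to MCIO
}


def validate(modes: dict) -> bool:
    """Check that every routing mode uses exactly the available lanes."""
    for name, routing in modes.items():
        used = sum(routing.values())
        if used != SEGMENT_LANES:
            raise ValueError(f"{name} routes {used} lanes, expected {SEGMENT_LANES}")
    return True


validate(ROUTING_MODES)
for name, routing in ROUTING_MODES.items():
    layout = ", ".join(f"{dest} x{lanes}" for dest, lanes in routing.items())
    print(f"{name}: {layout}")
```

The point of the model is that the same 16 lanes end up at different physical destinations depending on the mode, which is why the board needs switching and redriving hardware along each path.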

As one can see, there are two MCIO connectors on the opposite side of the motherboard from the PCIe slots with the M.2 slots in the middle.

That white 6-pin connector is a graphics 12V power connector.

There are two more MCIO x4 connectors above the PS7101 array and between the DIMM slots and the front panel USB 3 header and fan header array.

These MCIO connectors are the options for connecting not just slotted PCIe cards but also NVMe SSDs and, with some of the connectors, things like SATA SSDs. We are going to put the block diagram in this review because it is a very complex setup.

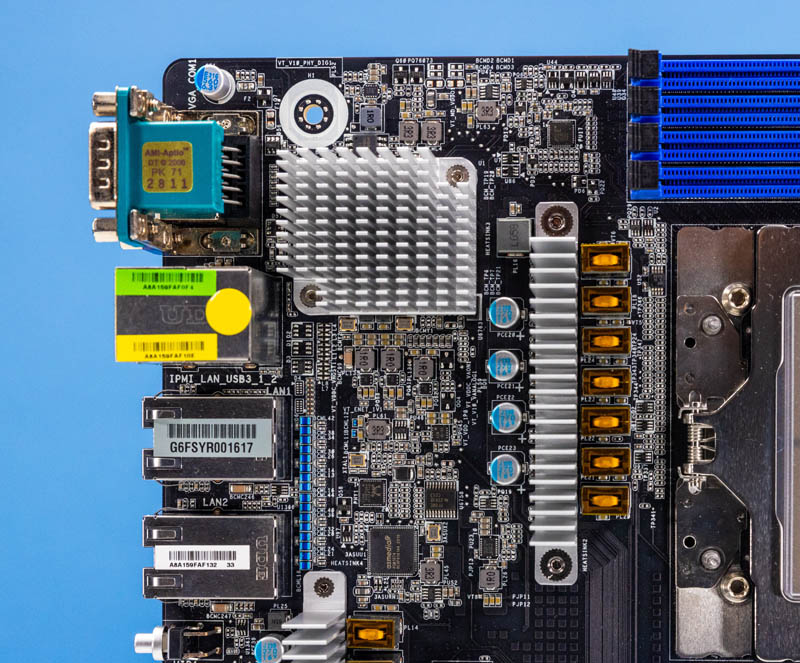

On the subject of the rear I/O something we wanted to quickly mention is that the airflow of this motherboard is standard server front-to-back. Some will make pedestal servers out of this platform, but we strongly advise that if you are looking to use the top vents for CPU exhaust, that you do so with liquid cooling to not interfere with the system airflow.

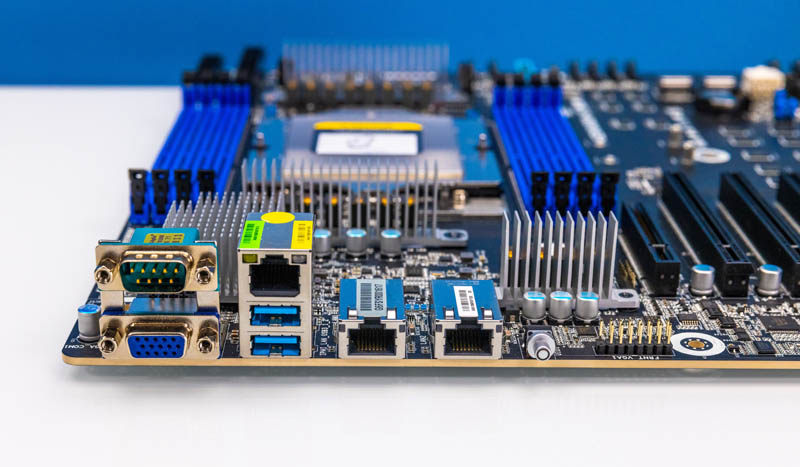

At this point, we probably have more than a handful of STH readers thinking that this would make an amazing workstation platform. Those are excellent thoughts, however, one of the big challenges is the enormous gap between the server and workstation rear I/O. This platform has a decidedly server rear I/O with legacy serial, VGA, and two USB ports for local KVM cart access in the data center.

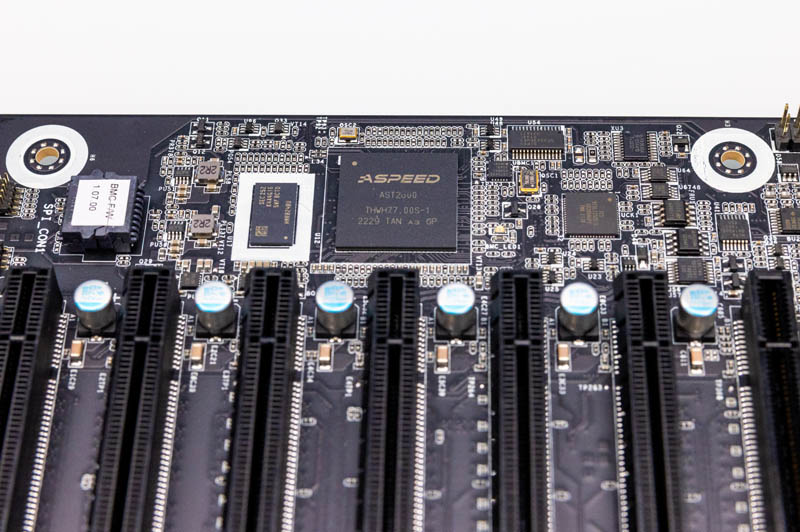

The single network port stacked atop the USB ports is for out-of-band management. The motherboard has an ASPEED AST2600 Baseboard Management Controller or BMC onboard. This runs an industry-standard management stack for IPMI and functionality like iKVM access.

The two network ports are 10Gbase-T ports powered by the Broadcom BCM57416 controller that sits under a heatsink behind the VGA and serial ports. This is also the reason for “BCM” in the GENOAD8X-2T/BCM product name.

This is a complex motherboard, so next, let us get to the topology.

Please re-read and re-write the paragraph about memory slots/ bandwidth, then you can delete this comment.

“Putting 24 DDR5 DIMM slots next to a SP5 socket would have taken up an enormous amount of motherboard space so the trade-off was made.” LOL

Dope… SP5 is twelve channel… harhar. It’s been a long week!

It is time for the PCIe x16 slot to DIE. We don’t have room on the mobo and I challenge anyone to find 7 useful single slot cards to plug into this thing.

We should have moved to cabled PCIe expansion a long time ago.

With this amount of PCIe, I don’t really find it a challenge to use this as a workstation TBO. We do have insane USB cards that convert PCIe Gen3 x8 into 8 USB 3.2 10G ports. This would add cost to the system, but if you don’t need this amount of full-speed USB, then you would have a lot of cheaper solutions giving you 4 ports or so.

@emerth it exists. I have the Deep MATX version of this mobo and it has quite a lot of PCIE over MCIO cables as well as some PCIE x16 slots

What’s the idle power usage?

I love large dense boards like this! This is awesome. Rear I/O isn’t a big issue since there’s plenty of slots of I/O addon cards.

@Lance Longreen – can you post the full name & maker of your board?

Ahah – you mean this, right?

https://www.asrockrack.com/general/productdetail.asp?Model=ROMED4ID-2T#Specifications

Perhaps a stupid thing to ask…but with silicon photonics and all that jazz in vogue. I’m not sure why PCIE hasn’t shifted future development to a fiber optic for days + copper for power generation. I feel like manufacturing and tooling might make that fiscally impossible … but otherwise I’m confused.

All of these additional active redrivers and complexity to keep signal integrity really makes you wonder about the future…

@emerth

I’m assuming it’s the ASRock Rack GENOAD8UD-2T/X550:

https://www.asrockrack.com/general/productdetail.asp?Model=GENOAD8UD-2T/X550#Specifications

@emerth did you check Asrock Rack homepage at all ? ;-)

Anyway here it is:

It has a mix of PCIE over the usual ×16 slots as well as MCIO connectors (Mini cooledge)

https://www.asrockrack.com/general/productdetail.asp?Model=GENOAD8UD-2T/X550#Specifications

@Nick, it is because normal ppl are not driving the market. PCIe5 exists because hyperscalers and such need it, not because normal ppl and businesses do. I think AMD has begun to accept this with some of its chipsets and mobo designs staying with reasonable-cost PCIe4.

@PatrickKennedy, is there any chance of producing a full-length PDF version of this review?

For my review later when I go to think about possible upgrade paths for my present system.

When will there be server motherboards with USB-C (DisplayPort)?

Nothing wrong with VGA, just getting harder to buy monitors with VGA.

Never is the price mentioned…

@Gusty, when checking the webz for pricing I see pricing ranging from just over $1000 to close to $1200. So it will probably depend on reseller but it is likely somewhere in that price range.

Is there an option for bifurcation on the slots?

I have this board with an 84c Genoa. It’s a great board. I love the IPMI, it’s very extensive, as is the BIOS. My main problem is that I can’t install XCP-NG on it. The problem is the software: it refuses to find my NVMe drive. Proxmox etc. installs without a problem. The location of the NVMe drives could be a bit better. This board is huge; it barely fit into my Meshify2XL. It would be nice if it had a USB 3.2 header, but for the rest I can’t complain.

1) Can anyone share their builds? What components are you using, actually working in the board?

I want to understand which M.2, DDR5, and PCIe boards are working. It would be a plus if you are running Linux. I am asking because I want to build a system with the 9355P and I have some spare M.2 drives, an NVIDIA 3090, an Adaptec 8805, etc.