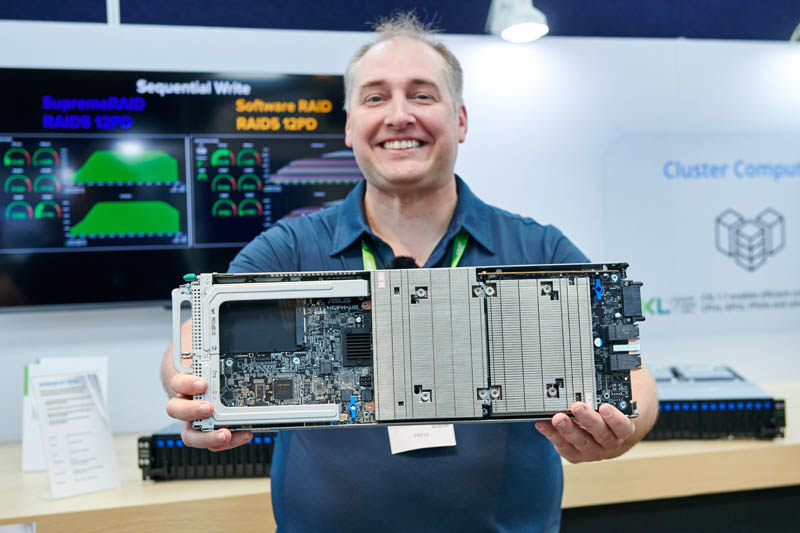

At Computex 2023, we saw a 2U 4-node NVIDIA Grace Superchip server from ASUS. Dubbed the ASUS RS720QN-E11-RS24U, this combines the 24-bay 2.5″ 2U chassis we have seen in a number of ASUS reviews with four NVIDIA Grace Superchip nodes.

NVIDIA Grace Superchip

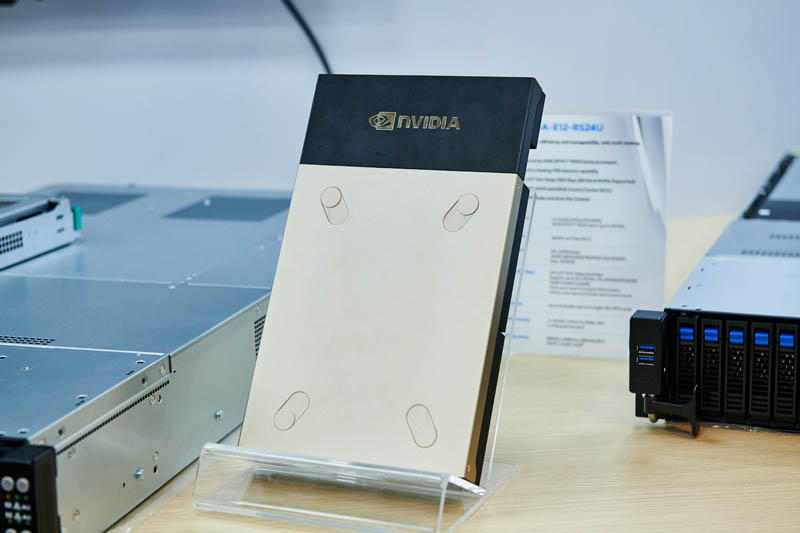

Interestingly, the NVIDIA Grace Superchip module looks stunning, but this is not the form we are seeing deployed in many servers. Still, here is the module in its beauty skin.

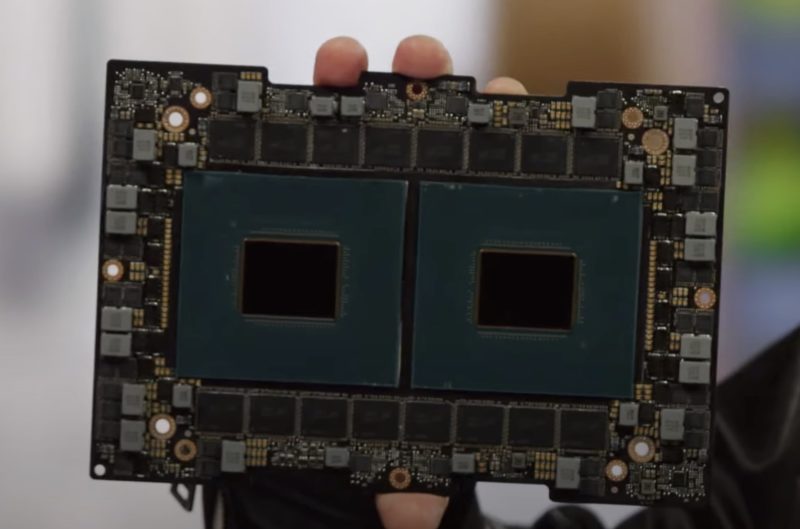

As a quick refresher, an NVIDIA Grace CPU is a 72-core Arm Neoverse V2 design. NVIDIA says that two of these 72-core CPUs, in the configuration it calls the “Grace Superchip,” should reach a SPECint_rate_2017 of around 740. That is an estimated number, since it is not an officially submitted result. Some publications have said this dual-chip solution with 144 cores is the fastest CPU (or dual CPU configuration) out there. As a counterexample, ASUS has a result of 898 with a single AMD EPYC 9654. Given NVIDIA’s estimated numbers, the dual Grace solution should be competitive with 84-96 core single socket AMD EPYC “Genoa” parts on the integer side.

What this solution does have is a high-speed chip-to-chip interconnect as well as LPDDR5X memory with ECC onboard.

At 500W, with memory included in that figure, it is fairly power efficient. The AMD EPYC 9654 has a 360W TDP, but it also has 12 memory channels whose DIMMs can use another 60W+. What NVIDIA gets with this, however, is up to 960GB of ECC memory with around 1TB/s of memory bandwidth. So the NVIDIA Grace Superchip is really designed for high memory bandwidth without using HBM, as the Intel Xeon Max CPU does.
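To make the comparison above concrete, here is a minimal Python sketch that runs the arithmetic on the figures quoted in this article. The constant and function names are ours, chosen for illustration. The Grace score is NVIDIA's estimate rather than a submitted result, and 60W is used as a floor for the EPYC memory power since the text says "60W+". TDP is also not measured wall power, so treat this as a rough back-of-the-envelope check, not a benchmark.

```python
# Figures quoted in the article. The Grace score is an NVIDIA estimate,
# not an officially submitted SPEC result.
GRACE_SPECINT_RATE_EST = 740   # dual 72-core Grace Superchip
GRACE_POWER_W = 500            # module power, LPDDR5X included
EPYC_SPECINT_RATE = 898        # single AMD EPYC 9654 result cited above
EPYC_TDP_W = 360               # CPU TDP only
EPYC_MEMORY_W = 60             # assumed floor for 12 channels of DIMMs ("60W+")


def score_per_watt(score: float, watts: float) -> float:
    """Return benchmark score divided by power, a crude efficiency figure."""
    return score / watts


grace_eff = score_per_watt(GRACE_SPECINT_RATE_EST, GRACE_POWER_W)
epyc_eff = score_per_watt(EPYC_SPECINT_RATE, EPYC_TDP_W + EPYC_MEMORY_W)
throughput_ratio = GRACE_SPECINT_RATE_EST / EPYC_SPECINT_RATE

print(f"Grace Superchip: {grace_eff:.2f} SPECint_rate per watt")
print(f"EPYC 9654:       {epyc_eff:.2f} SPECint_rate per watt")
print(f"Grace / EPYC integer throughput: {throughput_ratio:.0%}")
```

On these inputs, the integer score per watt comes out lower for Grace than for the EPYC 9654 plus its memory. That fits the point that the part is aimed at memory bandwidth rather than at leading on integer throughput. This sketch does not capture the roughly 1TB/s of bandwidth at all.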

Since this is our first piece on a system with the NVIDIA Grace Superchip, we wanted to clear up a few points about the chips. NVIDIA has become popular, and consumer sites are saying this is the fastest chip without explaining in what sense it is the fastest.

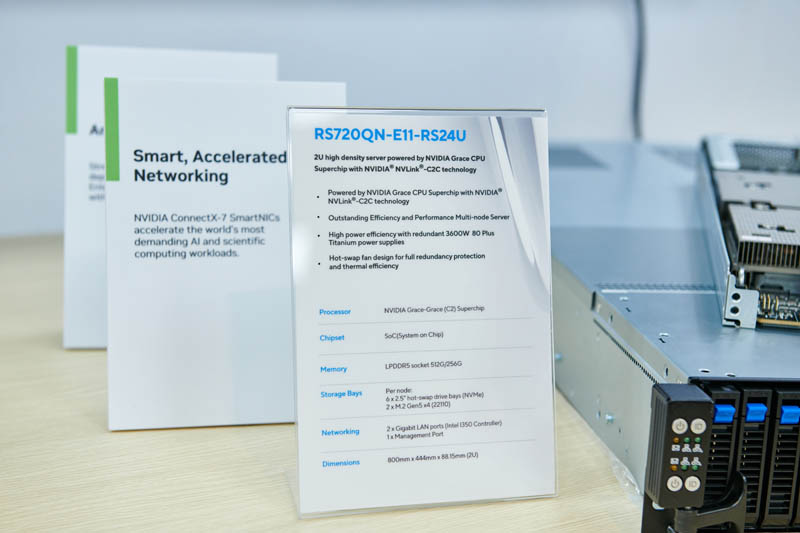

The ASUS RS720QN-E11-RS24U 2U 4-Node NVIDIA Grace System

Here are the quick specs of the ASUS RS720QN-E11-RS24U. Interestingly, ASUS lists LPDDR5 at 512G/256G, while the modules are slated to come with LPDDR5X in 240GB, 480GB, and 960GB configurations (with ECC).

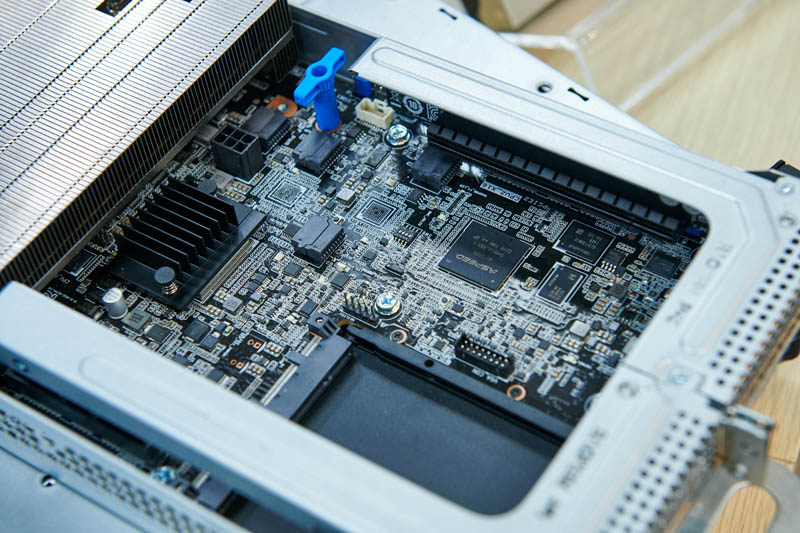

The system looks like most ASUS nodes, with rear I/O and management. One big difference is that we do not get the POST code LED here.

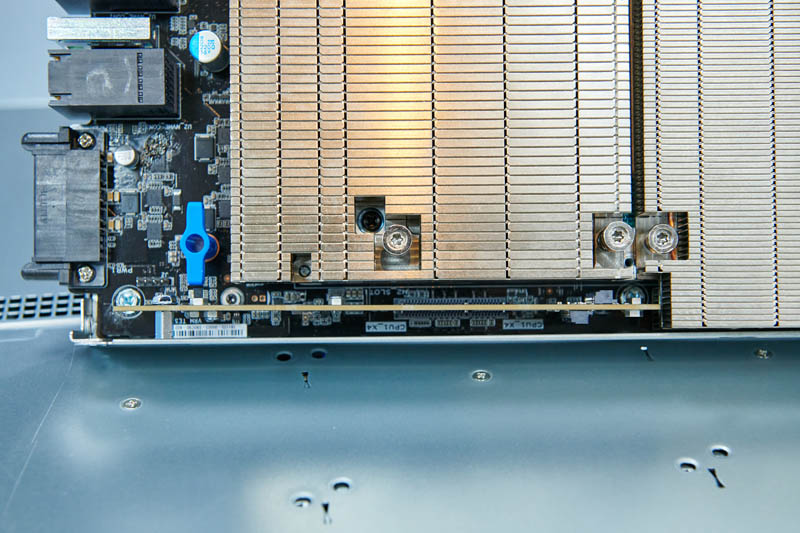

Inside the system, we get two PCIe Gen5 slots as well as an OCP NIC 3.0 slot. We can also see the ASPEED BMC onboard.

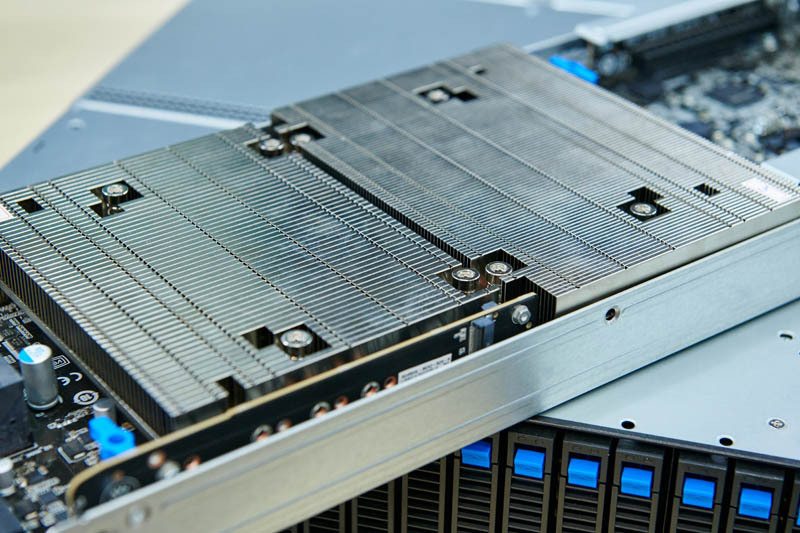

The NVIDIA Grace Superchip has a huge heatsink that is customized for the chassis and runs the entire width of the node. That is actually a big differentiator over current x86 servers, where heatsinks are confined to the area around the socket between the DIMM slots. From a cooling perspective, Grace makes a lot of sense.

Another cool feature is that aside from the six front 2.5″ bays per node, there is a dual M.2 module for boot drives.
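For a sense of the density, here is a quick Python tally of what a full 2U chassis adds up to, using only numbers from this article. The names are ours. The memory total assumes every node is fitted with the largest 960GB module, which may not be how systems actually ship.

```python
# Per-node figures from the article. The 960GB memory option is the largest
# of the three listed module configurations and is assumed here for all nodes.
NODES_PER_CHASSIS = 4
CORES_PER_NODE = 144            # two 72-core Grace CPUs per Superchip
FRONT_BAYS_PER_NODE = 6         # 2.5" front bays
MAX_MEMORY_GB_PER_NODE = 960    # assumes the top LPDDR5X configuration

chassis_totals = {
    "cores": NODES_PER_CHASSIS * CORES_PER_NODE,
    "front_bays": NODES_PER_CHASSIS * FRONT_BAYS_PER_NODE,
    "max_memory_gb": NODES_PER_CHASSIS * MAX_MEMORY_GB_PER_NODE,
}

for name, value in chassis_totals.items():
    print(f"{name}: {value}")
```

The bay count works out to the 24 front bays of the chassis, and the core count to 576 Arm Neoverse V2 cores in 2U.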

The node connects to the chassis via high-density connectors.

We are not going to focus too extensively on the chassis since that is something we have seen before.

Final Words

Overall, it was great to see the NVIDIA Grace Superchip system in person. The onboard LPDDR5X memory gives this solution a lot of memory bandwidth to go along with the 144 Arm Neoverse V2 cores. What we probably under-appreciated before seeing these systems is just how much onboard memory changes how cooling solutions can be built for these servers, since they do not have to take the height of DIMM slots into account.

Hopefully we will get to see more of these systems in the STH lab soon.

Hmmm…I wonder how the price / performance / availability / TCO of this beast would compare to the 32-core Epyc (Rome/Milan, Genoa was past my data center TTL) dual-socket (SMT on so 128 threads) some servers 512/ some 1024-TB RAM 2U/4-node beasts, used in the ~3K server financial research cluster I used to “server sit” for a living.

Said cluster moved from Xeon to Epyc at the advent of Rome, and that entailed a lot of application testing (some slight FP differences iirc)…Moving to Arm would have to give a big uptick in perf and/or lower TCO for such a move to be considered.

What is interesting about this is that, even very early in the Grace lifecycle, this is an unapologetic pure CPU server with nothing in the design suggesting it is meant to be paired with GPUs.