Something we get questions on rather regularly is the NVIDIA DGX versus the NVIDIA HGX platform, and what makes them different. While the names sound similar, they are different ways that NVIDIA sells its 8x GPU systems with NVLink. NVIDIA’s business model changed between the NVIDIA P100 “Pascal” and V100 “Volta” generations, and that is when the HGX model started to take off, leading to where it is today with the A100 “Ampere” and H100 “Hopper” generations.

NVIDIA DGX versus NVIDIA HGX: What is the Difference?

First off, the current NVIDIA DGX and HGX lines are for the 8x GPU platforms connected via NVLink. NVIDIA has other boards, such as the 4x GPU assemblies called Redstone and Redstone Next, but the main DGX/HGX platforms are 8x GPU platforms using SXM.

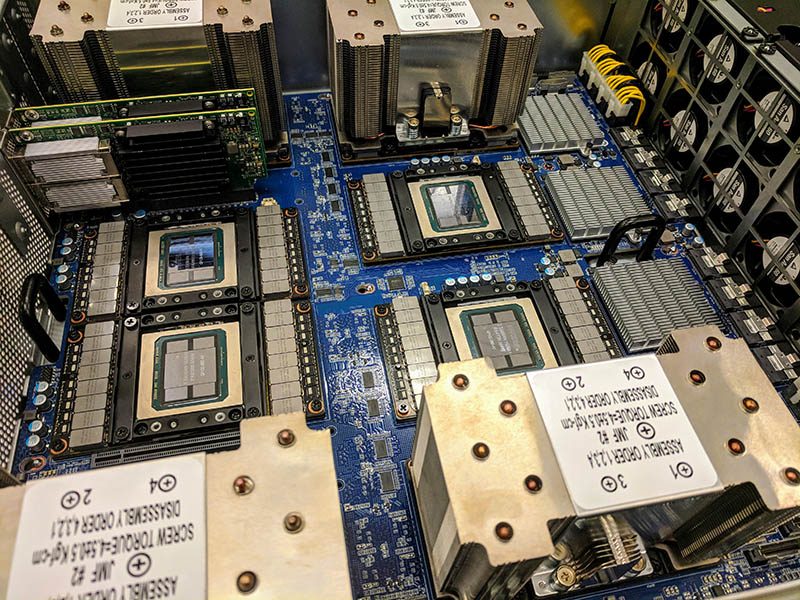

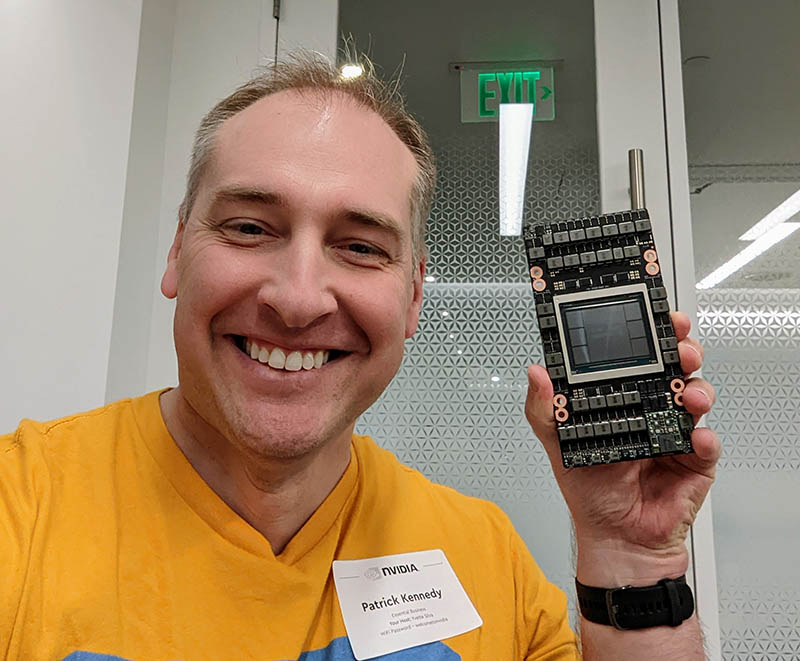

Taking a step back, we need to look at assembling an NVIDIA Tesla P100 8x SXM2 GPU system. This was our conference room table as we prepared our “DeepLearning12” build.

The process was that each manufacturer would build its own baseboard for the GPUs. NVIDIA would then sell the SXM form factor GPUs, and then the server manufacturer (or STH) would install the GPUs into the server.

This was not an easy process. A large server manufacturer in Texas allegedly applied a layer of thermal paste that was too thick on its heatsinks, and many trays of GPUs were cracked. We titled the video, one of our first, “Why SXM2 Installation Sucks!” for good reason. Installing the GPUs was tough because of the torque requirements.

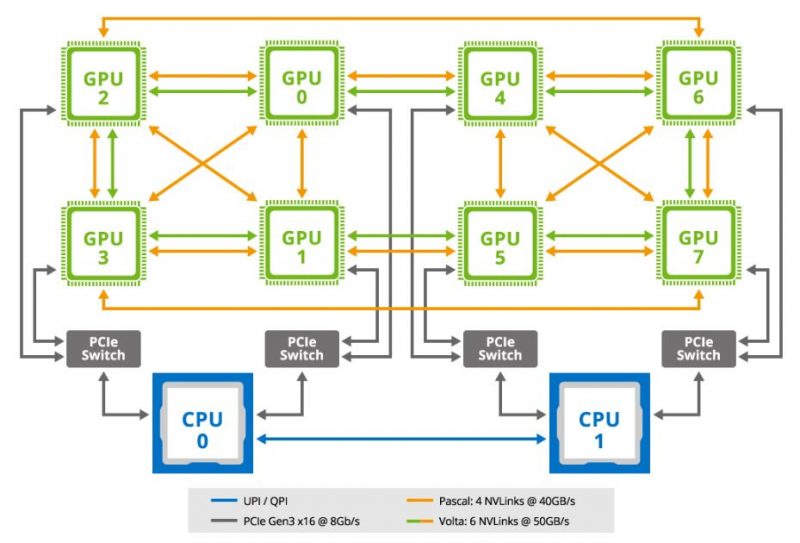

Moving to the Volta generation with the NVIDIA Tesla V100, NVIDIA added more NVLinks.

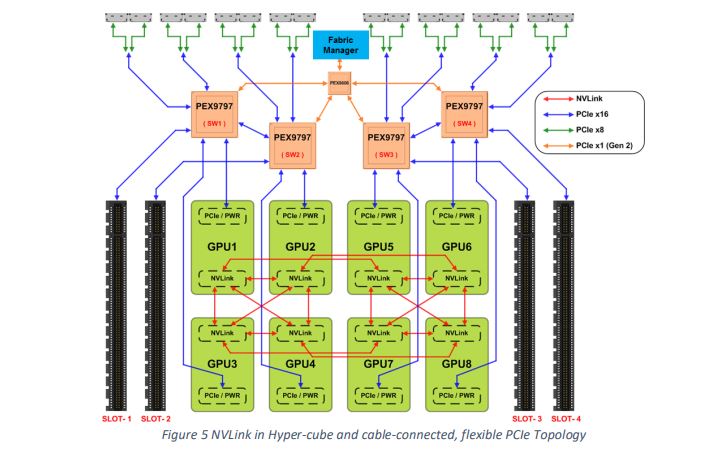

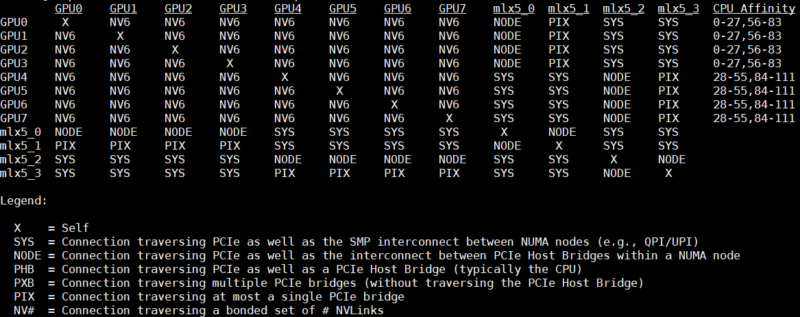

As part of this process, NVIDIA standardized the entire 8x SXM GPU platform. That included Broadcom PCIe switches for host connectivity (and later InfiniBand connectivity).

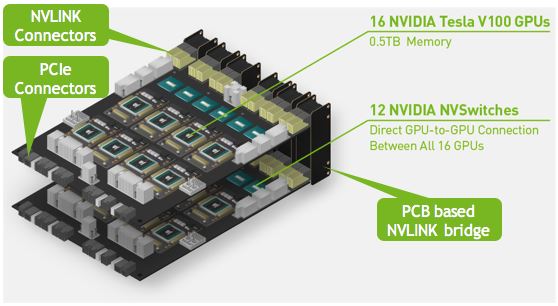

It also added NVSwitch, a switch for the NVLink fabric that allowed higher-performance communication between GPUs. Originally, NVIDIA had the idea that it could take two of these standardized boards and join them with this larger switch fabric. The impact, though, was that GPU-to-GPU communication now occurred on NVIDIA NVSwitch silicon, while PCIe had a standardized topology. HGX was born.
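To make the switched-fabric point more concrete, here is a minimal Python sketch built on loudly stated assumptions. It compares a hypothetical point-to-point NVLink wiring (each GPU with four direct links, arranged as two fully connected groups of four plus one cross link per GPU) against a switched fabric where every GPU attaches to one shared switch. Neither graph is meant as NVIDIA's documented wiring, and all function names are our own illustration. The script counts how many GPU pairs have to relay traffic through a third GPU.

```python
from collections import deque
from itertools import combinations

NUM_GPUS = 8


def point_to_point_links():
    """Hypothetical point-to-point wiring (illustrative only): two fully
    connected groups of four GPUs, plus one link from each GPU to its
    partner in the other group, for four links per GPU."""
    links = set()
    for group in (range(0, 4), range(4, 8)):
        for a, b in combinations(group, 2):
            links.add((a, b))
    for gpu in range(4):
        links.add((gpu, gpu + 4))
    return links


def switched_links():
    """Switched fabric: every GPU attaches to one shared switch node."""
    switch = NUM_GPUS  # extra node id standing in for the switch fabric
    return {(gpu, switch) for gpu in range(NUM_GPUS)}


def build_adjacency(links):
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj


def gpus_relayed(adj, src, dst):
    """Find a shortest path (fewest links) with BFS, then count how many
    intermediate GPUs (not switch nodes) that path passes through."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for nxt in adj[node]:
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    # Walk back from dst toward src, counting intermediate GPU nodes only.
    count, node = 0, prev[dst]
    while node is not None and node != src:
        if node < NUM_GPUS:
            count += 1
        node = prev[node]
    return count


def relay_summary(links):
    """Return (total GPU pairs, pairs that must relay through another GPU)."""
    adj = build_adjacency(links)
    pairs = list(combinations(range(NUM_GPUS), 2))
    relayed = [p for p in pairs if gpus_relayed(adj, *p) > 0]
    return len(pairs), len(relayed)


if __name__ == "__main__":
    for name, links in (("point-to-point", point_to_point_links()),
                        ("switched", switched_links())):
        total, relayed = relay_summary(links)
        print(f"{name}: {relayed} of {total} GPU pairs relay through another GPU")
```

In this toy model, 12 of the 28 GPU pairs in the point-to-point graph need another GPU to forward their traffic, while none do in the switched graph. That uniformity is the property that lets a vendor treat the GPU portion as a fixed, predictable topology.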

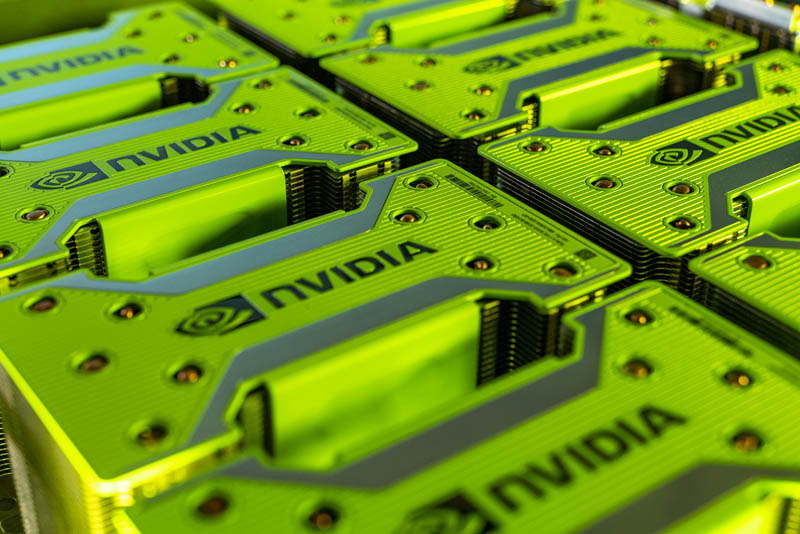

Here is a look at 8x NVIDIA V100s from a 2020-era server review. It was also the best color scheme for the NVIDIA SXM coolers. If you look at the P100 version above, you can see the Gigabyte server had unbranded SXM2 heatsinks. That is because NVIDIA went a step further. Instead of just making the NVSwitch baseboard with SXM3 sockets, it would also install the GPUs and coolers.

Here is a review of one of those systems.

The impact of this was huge. Now server vendors could purchase an 8x GPU assembly directly from NVIDIA and not risk cracking GPUs with overly thick layers of thermal paste. It also meant that the NVIDIA HGX topology was established. Server vendors could put whatever metal around it that they wanted. They could configure the RAM, CPUs, storage, and more. All of this came with the understanding that the GPU portion was a fixed topology defined by the NVIDIA HGX baseboard.

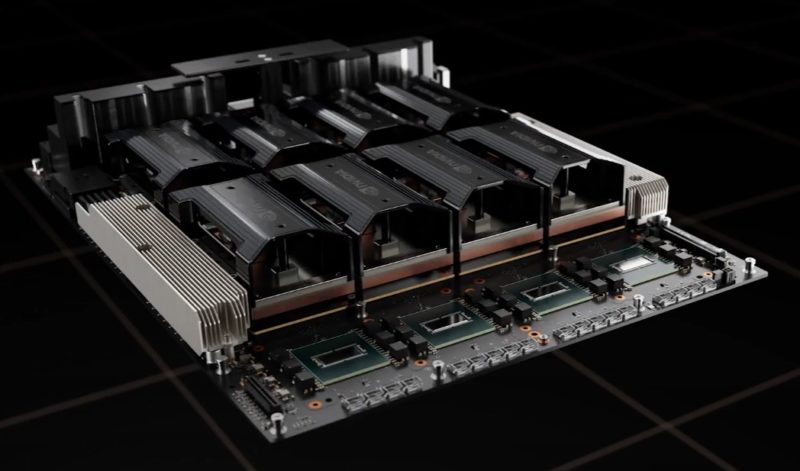

This was very successful. In the next generation, the NVSwitch heatsinks got larger, the GPUs lost a great paint job, but we got the NVIDIA A100.

The codename for this baseboard is “Delta”.

Officially, this board was called the NVIDIA HGX.

You can read a review of an A100 system and see more in our Inspur NF5488A5 8x NVIDIA A100 HGX Platform Review.

At this point, NVIDIA, its OEMs, and customers realized that with more power, the same number of GPUs could do more work. There was a catch. More power meant more heat. That is why we started to see liquid-cooled NVIDIA HGX A100 “Delta” platforms.

This was a challenge since the HGX A100 assembly initially launched with NVIDIA-branded air coolers included.

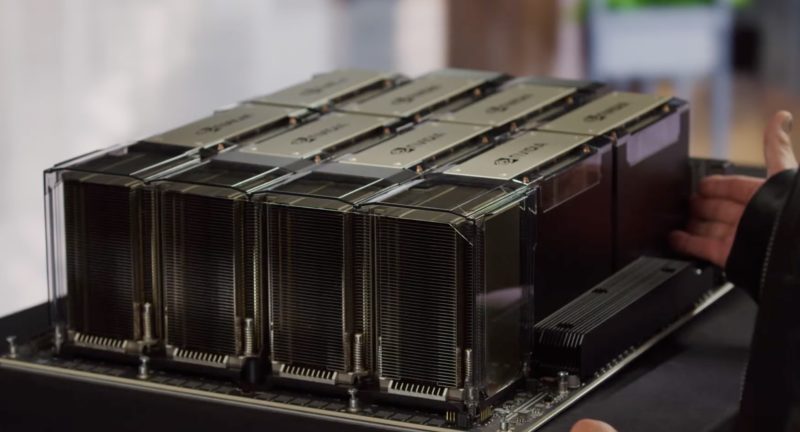

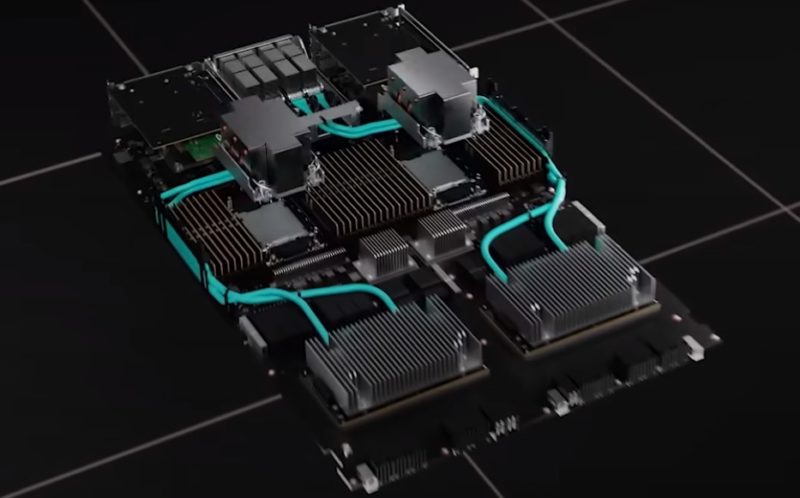

With the latest “Hopper” generation, the heatsinks had to grow taller to accommodate the higher-power GPUs as well as the higher-performance NVSwitch architecture. Here is the NVIDIA HGX H100 platform “Delta Next”.

NVIDIA also has liquid-cooled options for the HGX H100.

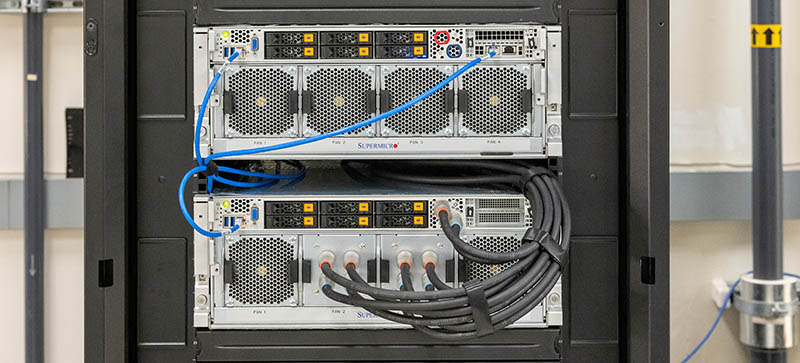

You can see some new Supermicro HGX H100 systems in this video.

What you cannot do is what we did in the NVIDIA Tesla P100 generation earlier: just buy H100 GPUs and install them. NVIDIA makes the SXM baseboards now.

At this point, we have gone through a number of NVIDIA HGX platforms and different vendors, so it is worth noting what the NVIDIA DGX is. NVIDIA has had DGX versions since the P100 days, but the NVIDIA DGX V100 and DGX A100 generations used the HGX baseboards, with NVIDIA building a server around them. NVIDIA has been rotating the OEMs it uses for each generation of DGX, but these are largely fixed configurations.

With the NVIDIA DGX H100, NVIDIA has gone a step further. It uses new NVIDIA “Cedar” 1.6Tbps InfiniBand modules, each with four NVIDIA ConnectX-7 controllers. With the Mellanox acquisition, NVIDIA is leaning into InfiniBand, and this is a good example of how.
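The 1.6Tbps figure lines up with simple arithmetic if we assume each ConnectX-7 controller runs one 400Gbps (NDR) InfiniBand port, which is ConnectX-7's top rate. That per-controller assumption is ours, not from the article. The sketch below just multiplies it out; the two-module call is only an illustration of scaling, not a statement of a specific system's configuration.

```python
# Assumption: each ConnectX-7 controller drives one 400 Gb/s (NDR) InfiniBand port.
GBPS_PER_CONNECTX7 = 400
# From the article: four ConnectX-7 controllers per Cedar module.
CONTROLLERS_PER_CEDAR = 4


def cedar_bandwidth_tbps(modules: int = 1) -> float:
    """Aggregate InfiniBand bandwidth, in Tb/s, for a number of Cedar modules."""
    gbps = modules * CONTROLLERS_PER_CEDAR * GBPS_PER_CONNECTX7
    return gbps / 1000  # convert Gb/s to Tb/s


print(cedar_bandwidth_tbps())   # 1.6
print(cedar_bandwidth_tbps(2))  # 3.2 (illustrative two-module case)
```

Four 400Gbps controllers give 1,600Gbps, or 1.6Tbps per module, matching the module rating quoted above.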

While the NVIDIA DGX H100 is something of a gold standard among GPU designs, some customers want more. That is why NVIDIA has one platform, the DGX, that it can bundle with things like professional services, and then the HGX H100 platform so OEMs can customize. We have seen a number of different designs. Those include denser solutions, AMD- or Arm-based CPU solutions for more cores, different Xeon SKU levels, different RAM configurations, different storage configurations, and even different NICs.

Perhaps the easiest way to think about it is that the NVIDIA DGX series is NVIDIA’s standard. It is still built around the NVIDIA HGX 8x GPU and NVSwitch baseboard, but it is an NVIDIA-specific design. The DGX is trending toward a higher level of networking integration from NVIDIA, so that systems can be installed into things like DGX SuperPODs that cluster DGX systems together.

Final Words

With the NVIDIA HGX baseboards, the company removed much of the engineering effort required to link 8x GPUs on a high-speed NVLink and PCIe switched fabric. That allows its OEM partners to build custom configurations while NVIDIA can sell an HGX board at a higher margin. NVIDIA’s goal with the DGX differs from that of many of its OEMs, since the DGX is being used to go after high-value AI clusters and the ecosystems around those clusters.

Hopefully that helps, but the easiest way to think of it is:

- NVIDIA HGX is the 8x GPU and NVSwitch baseboard

- NVIDIA DGX is NVIDIA’s systems brand (we are not talking about the DGX Station here).

The NVIDIA HGX A100 and HGX H100 have been hot commodities ever since it was revealed that OpenAI’s ChatGPT is running on these platforms. If you want to learn more about the different HGX A100 platforms, you can see ChatGPT Hardware: a Look at 8x NVIDIA A100 Powering the Tool.

Is there a separate name for the 4x SXM “Redstone” assemblies? Will/is there a H100 version?

@Y0s The Dell PowerEdge XE8640 is a notable example of an OEM Redstone Next system with 4x H100 SXM5 GPUs available.

NVIDIA says that with its DGX platform you can connect up to 256 GPUs at 900 GB/s via the 4th-generation NVLink Switch. Is this also possible on the HGX H100 platform?

Hi, what does DGX stand for?
