We are going to be covering the NVIDIA GTC 2023 keynote. We already have had a preview of a lot of the content today. In the next few hours/ days we are going to go into many of the new products. There will be a lot here given the popularity of AI topics over the past quarters.

Since this is being done live, please excuse the typos.

NVIDIA GTC 2023 Keynote Product Announcements

Here is the keynote link. As always, we suggest opening this link in a new browser, tab, or app for a better viewing experience. This page will refresh with updates as they come out.

Now onto our coverage. NVIDIA is starting the keynote by showing how it is updating many of its frameworks to improve installed base performance.

NVIDIA cuLitho is a HUGE Step Forward

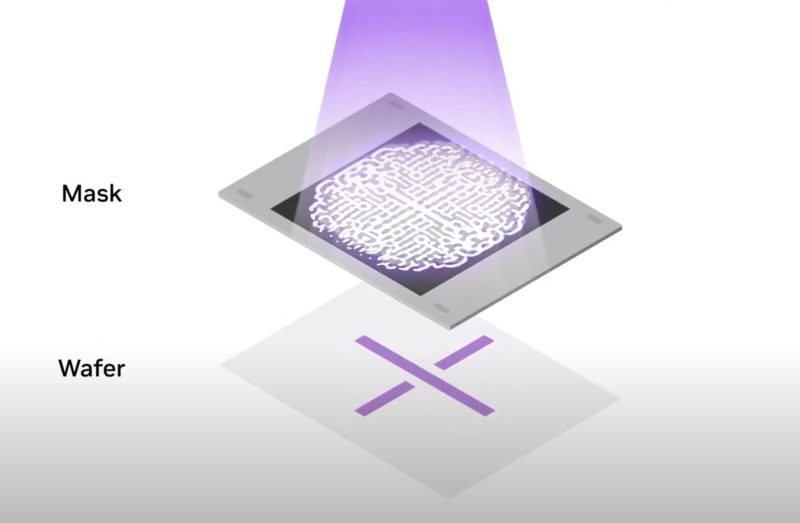

NVIDIA cuLitho is designed to help make masks faster. The patterns on the masks used for things like EUV lithography, no longer resemble the patterns etched into wafers. Designing what these masks should look like is a computationally intensive problem. NVIDIA says cuLitho can reduce the power needed to make these masks from 40,000 CPU systems to 500 DGX systems or 4000 GPUs, lowering costs and increasing speed. This is the largest computational workload in chip design and manufacturing.

ASML, TSMC, and Synopsys are all initial customers. These masks will continue getting more complex, so it is a problem that scales more than in a linear fashion. As a result, this is seen as a big one and is expected to be qualified for production in June 2023.

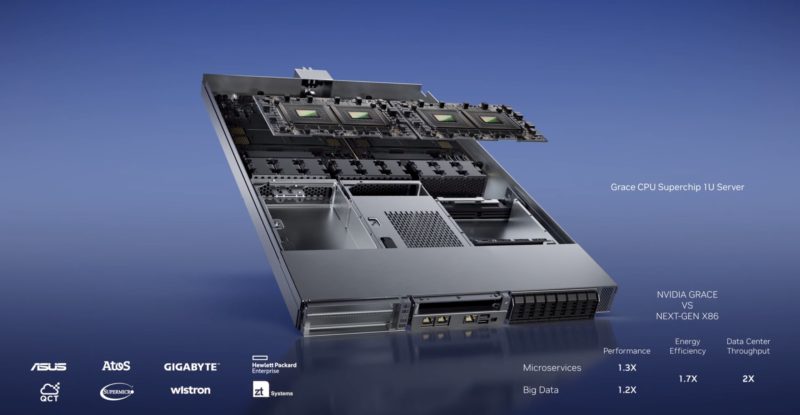

NVIDIA Grace CPU

NVIDIA says that the role of CPUs will be for things like web RTC and database queries as more workloads get accelerated. NVIDIA designed its Arm-based Grace CPU for AI first cloud workloads. The GPU accelerates AI workloads, and the Arm CPU does other processing.

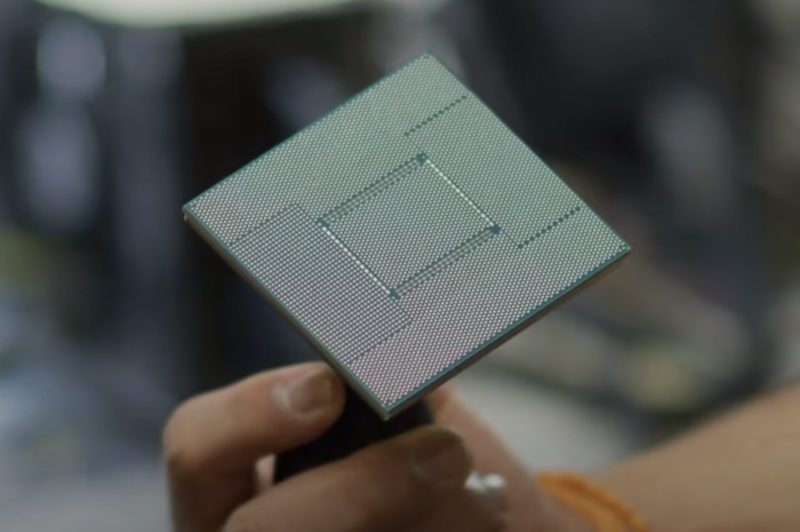

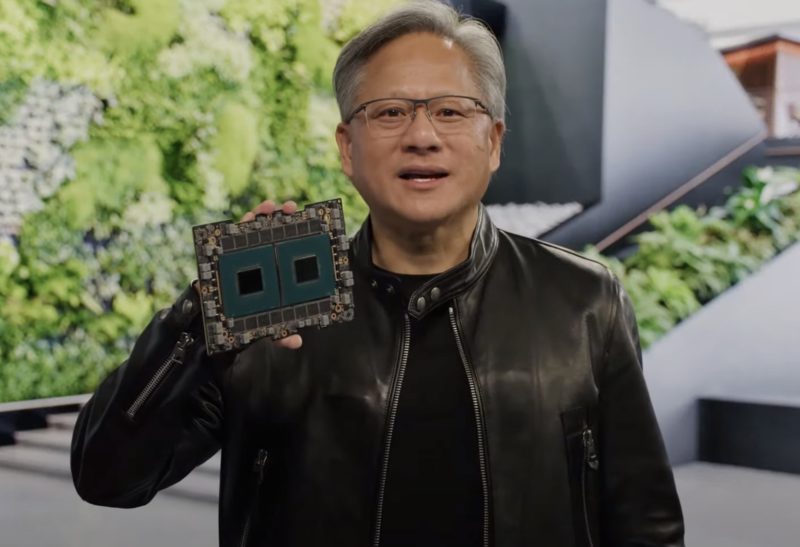

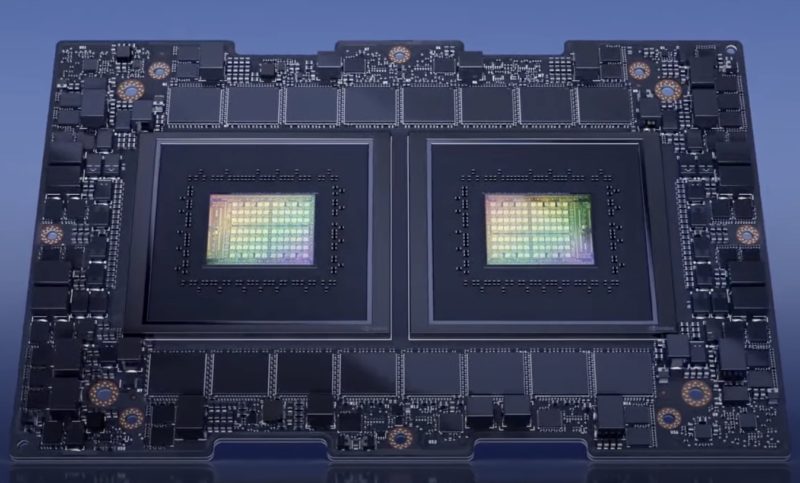

Jensen had a Grace Superchip assembly combining two of these Arm CPUs onto a single assembly co-packaged with memory. It looks huge.

Here is the close-up:

Here is the rendering of what is being held. One can see the LPDDR memory sitting outside the CPU packages.

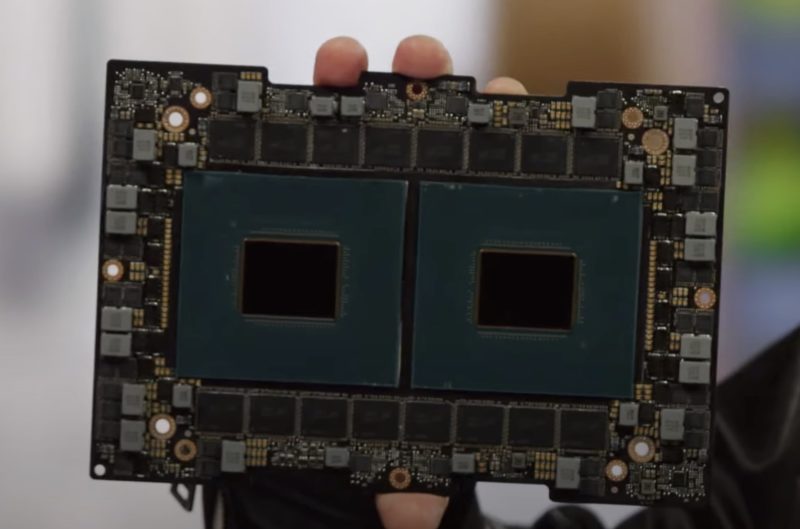

Here is what the packaged module looks like:

Here is the close-up. Jensen says this entire module is 5″ x 8″.

NVIDIA says it is 1.2-1.3x the performance of the average performance of new x86 chips at lower power. That claim sounds to us like NVIDIA is saying that modern x86 servers are much faster (otherwise it would be top-bin to top-bin.)

We hopefully will see these servers later this year.

NVIDIA BlueField-3

The NVIDIA BlueField-3 update is that it is in production and shipping to major cloud providers.

The Oracle Cloud and Microsoft Cloud are interesting since we would expect those to be AMD Pensando DPU customers.

NVIDIA DGX H100 Update

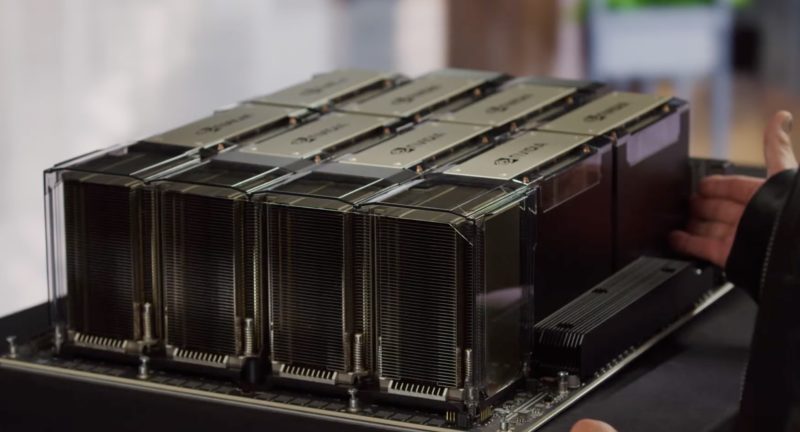

NVIDIA showed the air-cooled DGX H100.

Also that Microsoft Azure has previews of the H100 systems.

We are going to just show this here because it looks cool.

NVIDIA DGX Cloud

This is perhaps the least surprising one. NVIDIA is bringing DGX Supercomputers to the cloud as a service for enterprises.

The NVIDIA DGX Cloud offering is going to be optimized for NVIDIA AI Enterprise. Oracle will use ConnectX-7 for Infiniband and BlueField-3 for its DPUs in these. To us, this is the obvious move. NVIDIA can move up the stack and start to take cloud services revenue and charge more for each GPU it produces. Perhaps the only question is why has this taken so long.

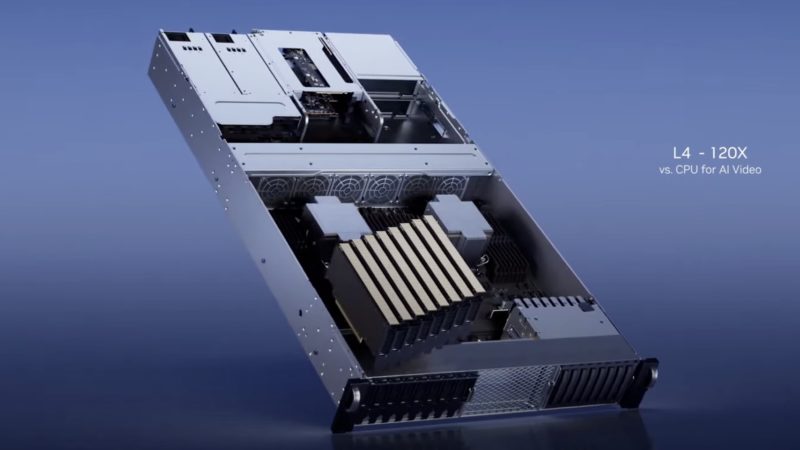

NVIDIA L4 and H100 NVL Announcement in NVIDIA AI Inference Portfolio

Here is NVIDIA’s new data center inference lineup.

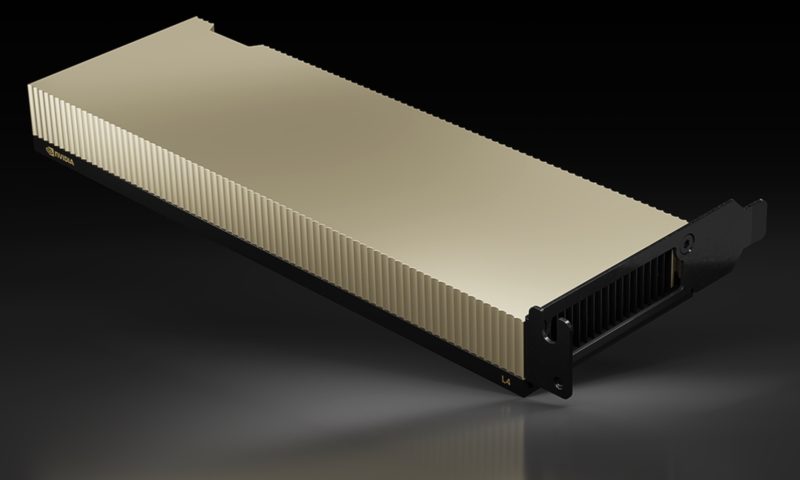

The first new product is the NVIDIA L4. This is the successor to the NVIDIA T4 and is a low-profile card that does not require a power connector. It also now includes AV1 support which will be big for cloud services that use these for video going forward.

NVIDIA says that Google Cloud is deploying L4 instances.

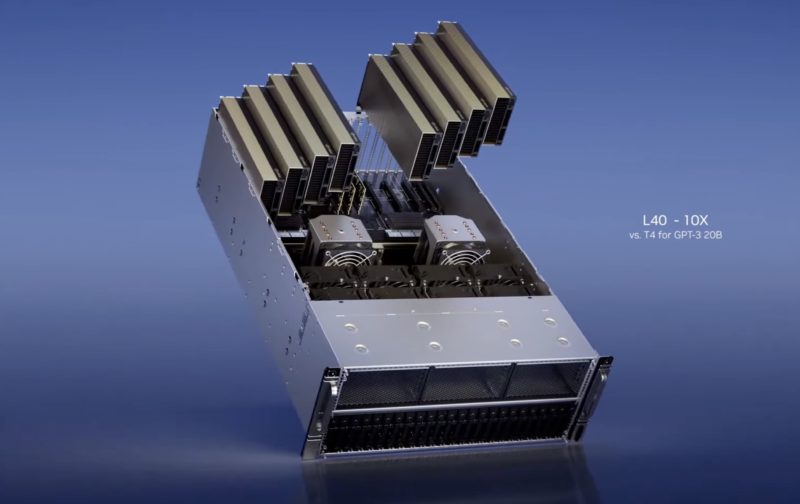

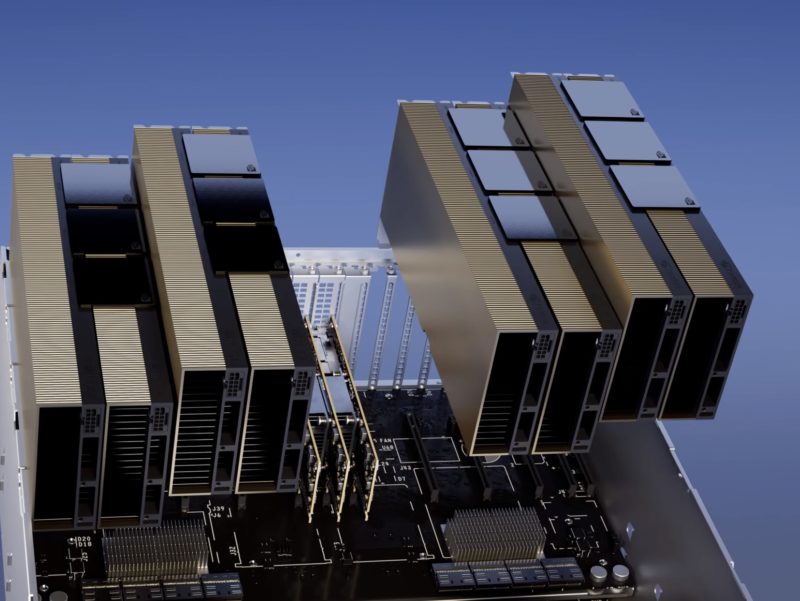

The NVIDIA L40 is the existing solution. This is the Omniverse GPU. It is also significantly faster than the T4/ L4 given its larger footprint. NVIDIA says that the L40 is being launched today, but we already covered the NVIDIA L40 Omniverse GPU Launch.

The NVIDIA H100 NVL is really interesting. These are two updated 94GB of HBM3 NVIDIA H100 GPUs connected via NVLink. NVIDIA said on a pre-brief call that these are 2x 350W cards.

NVIDIA says that four NVIDIA H100 HVL sets are up to 10x the performance of a HGX A100 for ChatGPT.

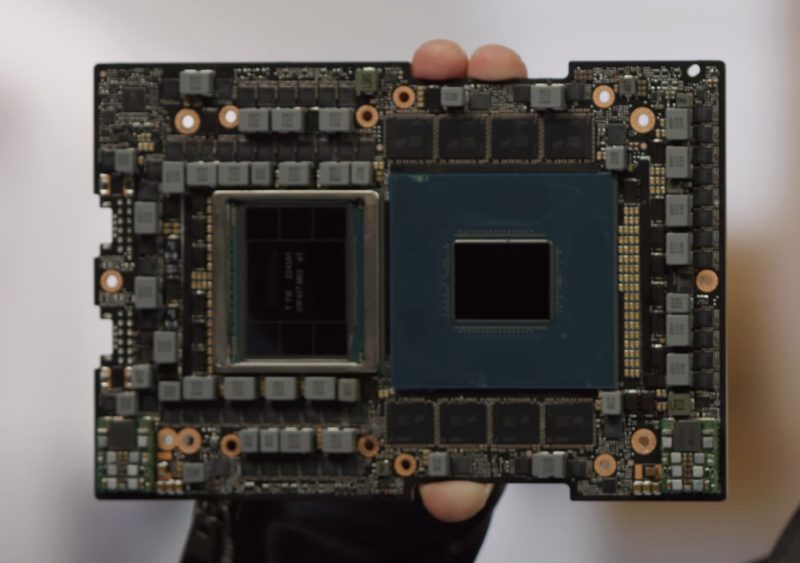

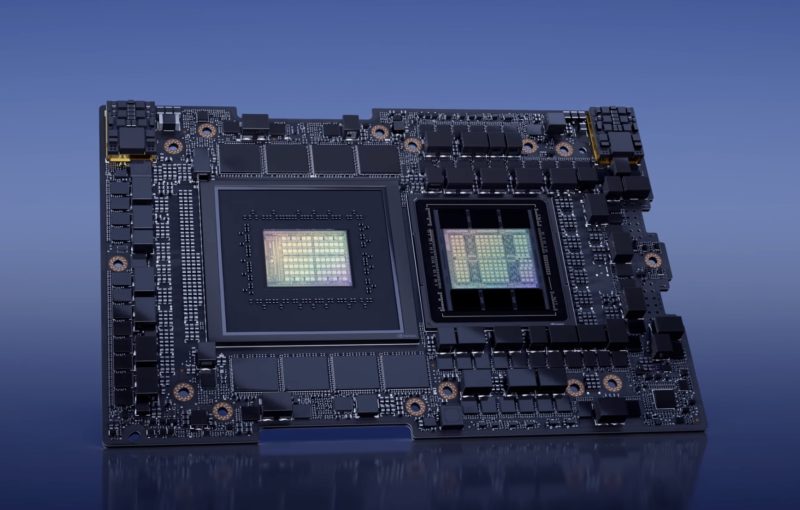

We also get a look at the new Grace Hopper Superchip ( with Hopper on the left and Grace on the right.)

Here is the new GTC 2023 render of the new platform, oriented with Grace to the left and Hopper to the right.

Stay tuned for more to come.

NVIDIA RTX 4000 SFF Professional GPUs

These are not getting time, but since we are past the embargo, there is a new GPU, the NVIDIA RTX 4000 SFF. This is a dual-width but low-profile GPU meant for compact workstations.

This is meant for SFF workstations. After we reviewed the Lenovo ThinkStation P360 Ultra with the NVIDIA RTX A5000, we are going to be reviewing other professional workstations in this class.

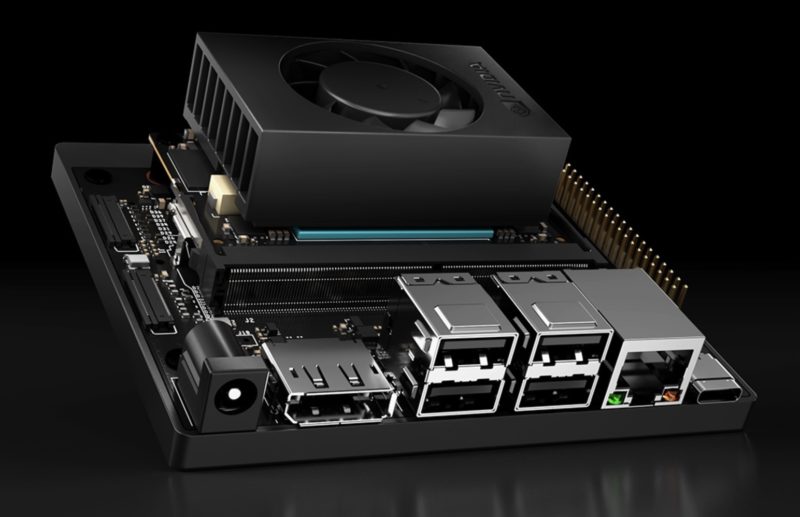

NVIDIA Jetson Orin Nano

These are not getting a lot of time either, but since we are past the embargo, there is a new NVIDIA Jetson out. This is the NVIDIA Jetson Orin Nano. This is a lower-cost option that we covered in the NVIDIA Jetson Orin Nano Launched Cheaper Arm and Ampere.

New for GTC 2023 is the developer kit. We have one of these kits that will have a review coming on STH.

Final Words

Stay tuned for more updates from GTC 2023. We are going to go into many of these announcements in more detail after the keynote is over. We are also reviewing several of these new products at STH right now like the RTX 6000 Ada, ConnectX-7, Jetson Orin, and more. Those reviews will be online over the next few weeks. We also just showed Using DPUs Hands-on Lab with the NVIDIA BlueField-2 DPU and VMware vSphere Demo.

We also did a piece some time ago on ChatGPT Hardware a Look at 8x NVIDIA A100 Powering the Tool and a recent short on the same. Today we expect OpenAI will confirm that it is using the NVIDIA A100 as we showed.

On the H100 side, Dell will announce it is shipping the H100-powered Dell PowerEdge XE9680 8x NVIDIA H100 system. We also expect other OEMs like Supermicro to show off its H100 systems like we did for the X13 launch:

BlueField-3 is not really shipping in numbers yet. It’s ramping in H2 2023 according to their GTC analyst presentation:

https://s201.q4cdn.com/141608511/files/doc_presentations/2023/03/GTC-2023-Financial-Analyst-Presentation_FINAL.pdf

———–

I don’t think Bluefield/Pensando (or intel or Marvell) have exclusivity at any cloud vendor. They might use different DPUs for different servers.

Confirmed for OpenAI

OpenAI used H100’s predecessor — NVIDIA A100 GPUs — to train and run ChatGPT, an AI system optimized for dialogue, which has been used by hundreds of millions of people worldwide in record time. OpenAI will be using H100 on its Azure supercomputer to power its continuing AI research.

https://nvidianews.nvidia.com/news/nvidia-hopper-gpus-expand-reach-as-demand-for-ai-grows