We have been creating a few cheat sheets lately to track how systems change from generation to generation. A big part of the reason is to have them for STH’s own reference, but instead of keeping them internal, we figured we would share them as well. In this one, we quickly list the DDR, DDR2, DDR3, DDR4, and DDR5 MT/s and GB/s numbers by generation.

Guide: DDR, DDR2, DDR3, DDR4, and DDR5 GB/s Bandwidth by Generation

Here is a (hopefully) simple table of the theoretical peak memory bandwidth of a single DIMM on one memory channel, by generation:

| Generation | Common Name | MT/s | GB/s |
|---|---|---|---|
| DDR | DDR-266 | 266 | 2.1 |
| DDR | DDR-333 | 333 | 2.6 |
| DDR | DDR-400 | 400 | 3.2 |
| DDR2 | DDR2-533 | 533 | 4.2 |
| DDR2 | DDR2-667 | 667 | 5.3 |
| DDR2 | DDR2-800 | 800 | 6.4 |
| DDR3 | DDR3-1066 | 1066 | 8.5 |
| DDR3 | DDR3-1333 | 1333 | 10.6 |
| DDR3 | DDR3-1600 | 1600 | 12.8 |
| DDR3 | DDR3-1866 | 1866 | 14.9 |
| DDR4 | DDR4-2133 | 2133 | 17.0 |
| DDR4 | DDR4-2400 | 2400 | 19.2 |
| DDR4 | DDR4-2666 | 2666 | 21.3 |
| DDR4 | DDR4-2933 | 2933 | 23.4 |
| DDR4 | DDR4-3200 | 3200 | 25.6 |
| DDR5 | DDR5-4800 | 4800 | 38.4 |
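Every row follows from one piece of arithmetic: a DIMM moves 64 data bits (8 bytes) per transfer (for DDR5, split across two 32-bit subchannels), so peak GB/s = MT/s × 8 / 1000. The Python sketch below is a minimal illustration, not STH's own tooling; the names `peak_gb_s` and `table_value` are hypothetical. Computed exactly, some rows come out slightly above the listed figures (DDR4-2933 is 23.464 GB/s, for example) because the table cuts values off at one decimal place rather than rounding them.

```python
# Minimal sketch: reproduce the per-DIMM bandwidth table from MT/s alone.
# Assumption: one DIMM moves 64 data bits (8 bytes) per transfer.
# ECC bits carry no user data and are ignored.
BYTES_PER_TRANSFER = 8

SPEEDS_MT_S = {
    "DDR-266": 266, "DDR-333": 333, "DDR-400": 400,
    "DDR2-533": 533, "DDR2-667": 667, "DDR2-800": 800,
    "DDR3-1066": 1066, "DDR3-1333": 1333, "DDR3-1600": 1600, "DDR3-1866": 1866,
    "DDR4-2133": 2133, "DDR4-2400": 2400, "DDR4-2666": 2666,
    "DDR4-2933": 2933, "DDR4-3200": 3200,
    "DDR5-4800": 4800,
}


def peak_gb_s(mt_s: int) -> float:
    """Theoretical peak in GB/s (10^9 bytes/s): MT/s x 8 bytes / 1000."""
    return mt_s * BYTES_PER_TRANSFER / 1000


def table_value(mt_s: int) -> float:
    """The same figure cut off (not rounded) at one decimal place.

    Integer math avoids floating-point surprises: MT/s x 8 is MB/s,
    and // 100 keeps whole tenths of a GB/s.
    """
    return (mt_s * BYTES_PER_TRANSFER) // 100 / 10


for name, mt_s in SPEEDS_MT_S.items():
    print(f"{name:<10} {mt_s:>5} MT/s  {peak_gb_s(mt_s):7.3f} GB/s"
          f"  (table: {table_value(mt_s)})")
```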

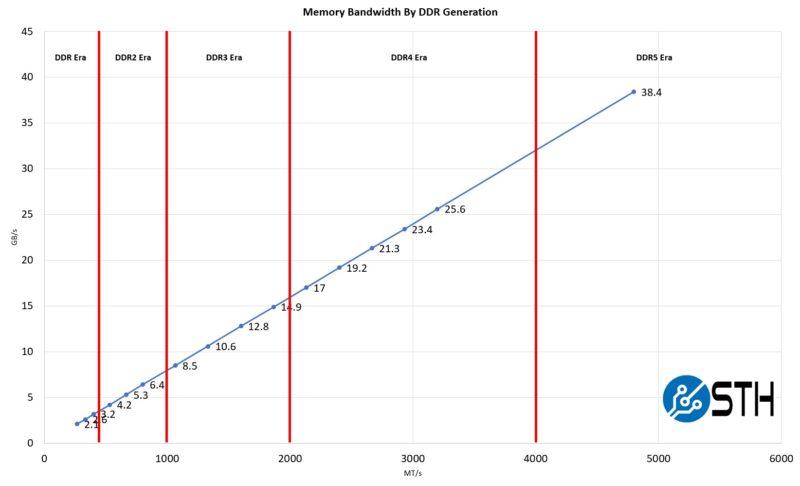

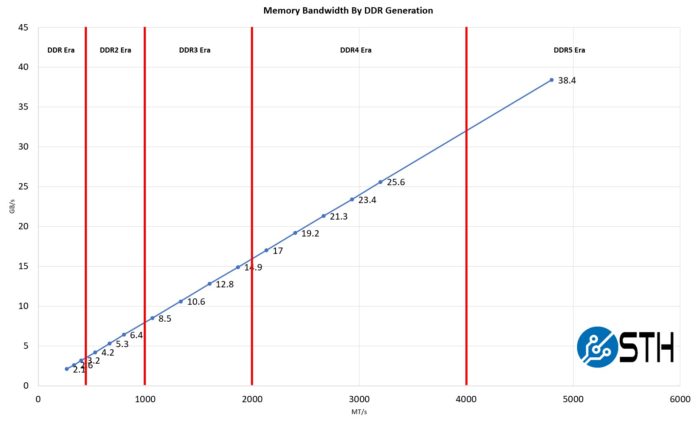

In a system, there are often many memory channels, and one does not achieve peak bandwidth on all platforms and scenarios, but it is still interesting to look at. For some sense, here is what the above looks like charted:

When folks in the industry say that the DDR5-4800 found in AMD EPYC 9004 Genoa and 4th Gen Intel Xeon Scalable Sapphire Rapids is a big deal, this is why: memory bandwidth per DIMM gets a ~50% boost (38.4 GB/s versus 25.6 GB/s for DDR4-3200). That is why we say that AMD EPYC Genoa, with 50% more memory channels, can deliver more than 2x the bandwidth of the DDR4-3200 “Rome/Milan” generation. Likewise, although Intel Xeon Sapphire Rapids keeps eight memory channels, it has 50% more theoretical bandwidth than the previous-gen Ice Lake Xeons. (This sets aside that, technically, a DDR5 DIMM has two 32+8-bit subchannels, while a DDR4 DIMM has a single 64+8-bit channel.)
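The per-socket arithmetic behind those claims can be sketched the same way. This assumes eight DDR4-3200 channels for EPYC Rome/Milan and Ice Lake Xeon, twelve DDR5-4800 channels for Genoa, and eight DDR5-4800 channels for Sapphire Rapids; `socket_gb_s` is a hypothetical helper, and the results are theoretical peaks only, not sustained bandwidth on real systems.

```python
# Theoretical peak socket bandwidth for the platform comparisons above.
# Assumed configurations: channel count and DDR speed per socket, with
# 64 data bits (8 bytes) per channel per transfer.


def socket_gb_s(channels: int, mt_s: int, bytes_per_transfer: int = 8) -> float:
    """Peak GB/s for a socket: channels x MT/s x bytes per transfer / 1000."""
    return channels * mt_s * bytes_per_transfer / 1000


milan = socket_gb_s(channels=8, mt_s=3200)      # AMD EPYC Rome/Milan, DDR4-3200
genoa = socket_gb_s(channels=12, mt_s=4800)     # AMD EPYC 9004 Genoa, DDR5-4800
ice_lake = socket_gb_s(channels=8, mt_s=3200)   # 3rd Gen Xeon Ice Lake, DDR4-3200
sapphire = socket_gb_s(channels=8, mt_s=4800)   # 4th Gen Xeon Sapphire Rapids, DDR5-4800

print(f"Genoa vs. Milan: {genoa:.1f} vs. {milan:.1f} GB/s ({genoa / milan:.2f}x)")
print(f"Sapphire Rapids vs. Ice Lake: {sapphire:.1f} vs. {ice_lake:.1f} GB/s "
      f"({sapphire / ice_lake:.2f}x)")
```

This works out to 460.8 GB/s versus 204.8 GB/s (2.25x) for Genoa over Milan, and 307.2 GB/s versus 204.8 GB/s (1.5x) for Sapphire Rapids over Ice Lake.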

Final Words

If folks think we should add more, we can look into it. We will probably update this when servers adopt faster DDR5 memory later in 2023 or in 2024. We mainly made the chart as a bookmark for our own team, but we hope our readers find it useful as well.

It would be nice to create a generational CPU-to-RAM memory latency chart as well. My suspicion is that 1/latency has not increased in the same way as bandwidth.

DDR5 is two 32+8-bit channels and DDR4 is one 64+8-bit channel. Maybe you meant the same, but it was confusing.

DDR5 also has more banks, allowing lower latency random access and thus higher real-world throughput compared to DDR4 even at the same frequency. This is especially important for servers with lots of VMs accessing different memory addresses at the same time.

I’d love to see a performance comparison of Xeon processors across generations of the same SKU family, like the Xeon E5-2650, v2, v3, v4, or similar, to see the performance gains over time.

Great article summarizing the bandwidths of the various memory generations – Thank You!

@Eric – DDR5 has slightly higher latency, but the difference is very small in this generation. The two channels per DIMM also help a lot.

@Lasertoe – Clarified. Good point.

@Epwich Something like these? https://www.servethehome.com/a-look-at-7-years-of-advancement-leading-to-the-xeon-bronze-3204/ and https://www.servethehome.com/intel-xeon-bronze-3104-v-intel-xeon-e5-2603-v3-v4-three-generations-compared/

I’m bookmarking this one.

Hi Patrick

Would you be so kind as to include DDR4-2933?

I’d also be more than grateful if you could include a table comparing whole-socket bandwidth for all the x86 server platforms of the last 8–10 years or so.

Having such a table on your page would make it easier to explain to the C-suite why servers are getting more expensive each generation.

Zibi – added

First thought: “Wow, that’s a remarkably linear relationship!” Second thought: “Wait…”

Hey, does anyone know where to find a nice chart explaining DDR5 CLs, i.e., what the timings actually are? While the CL numbers for DDR5 are higher, AFAIK the actual latency should be the same.
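On the CL question, absolute CAS latency depends on both the CL count and the clock: DDR moves data twice per clock, so one cycle lasts 2000 / MT/s nanoseconds, and CAS latency in ns is CL × 2000 / MT/s. A minimal sketch, assuming DDR4-3200 CL22 and DDR5-4800 CL40 as example timings (common JEDEC bins; other bins and enthusiast kits differ), with `cas_latency_ns` as a hypothetical helper. This covers CAS latency only; full load-to-use latency also includes the memory controller and other DRAM timings.

```python
def cas_latency_ns(cl: int, mt_s: int) -> float:
    """CAS latency in nanoseconds: CL cycles x clock period.

    DDR transfers on both clock edges, so the clock runs at MT/s / 2 MHz
    and one cycle lasts 2000 / MT/s ns.
    """
    return cl * 2000 / mt_s


# Example timings, assumed for illustration (JEDEC bins; other kits differ).
for name, cl, mt_s in [("DDR4-3200 CL22", 22, 3200), ("DDR5-4800 CL40", 40, 4800)]:
    print(f"{name}: {cas_latency_ns(cl, mt_s):.2f} ns")
```

With these example timings, DDR4-3200 CL22 comes to 13.75 ns and DDR5-4800 CL40 to about 16.67 ns, so the higher DDR5 CL number is mostly, though not entirely, offset by the faster clock.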

Low-key, I’d say this is the most valuable post on STH in 2023.

It would be informative to add SDRAM DIMMs at 50, 66, 100, and 133 MHz to the list.

According to Memtest86, my old Compaq ProLiant 575 gets 16 MB/s of bandwidth with dual-channel fast-page SIMMs at a 50 MHz clock.

“Faster memory is faster.” Wow. What a pointless article. You could have at least included latency or something.

Great guide, I’d say. @jpuroila, this article isn’t saying faster is faster. It’s a table showing the bandwidth for a given MT/s speed. I also like that chart.

I think the graph would be more interesting if the x-axis were time, maybe with a blue line for the Intel generations and a red line for the AMD generations.

Thanks for sharing. More like this. This is useful. Regards.

@jpuroila “Faster memory is faster.” describes a general qualitative tendency, but the graph above shows quantitative data. It has much more info than that.

It would be interesting to also overlay latency there, using standard server memory timings.

And what would be extremely interesting is a test that shows the practical number of random 8-byte accesses per channel. For example, allocate a big array that fills the whole memory, then use multiple threads to read 8 bytes at random locations in the array. This should defeat the caches and show how many memory accesses per channel are possible on average. Due to efficiency improvements from one generation to the next, I’d expect this number to improve slightly with every generation. The tests would not be 100% comparable because of differing CPU core counts and clock speeds, but they would clearly show that memory accesses per second can be quite a heavy bottleneck. Another dimension of this test would be to see how many threads are needed to saturate the memory controller. Normally this value should coincide with the number of memory channels, but optimizations in the memory controller might give different results.
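Before running a test like the one proposed above, a back-of-envelope ceiling helps frame the results. Assuming every random 8-byte read misses the caches and fetches a full 64-byte line (DDR4: burst length 8 × 8 bytes; DDR5: burst length 16 × 4 bytes per subchannel), one DIMM can serve at most MT/s × 8 / 64 million reads per second. This minimal sketch ignores row activations, precharges, bank conflicts, and refresh, which typically keep real results well below the bound; `max_random_reads_per_s` is a hypothetical name, and the sketch is not the benchmark itself.

```python
# Back-of-envelope ceiling for random 8-byte reads on one DIMM (64 data bits).
# Assumption: each read misses every cache level and transfers a full 64-byte
# line. Row activations, precharges, bank conflicts, and refresh are ignored,
# so real results will land below these numbers.
CACHE_LINE_BYTES = 64
USEFUL_BYTES_PER_READ = 8


def max_random_reads_per_s(mt_s: int, bytes_per_transfer: int = 8) -> float:
    """Upper bound on cache-line reads per second: peak bytes/s divided by 64."""
    return mt_s * 1e6 * bytes_per_transfer / CACHE_LINE_BYTES


for name, mt_s in [("DDR4-3200", 3200), ("DDR5-4800", 4800)]:
    reads = max_random_reads_per_s(mt_s)
    useful_gb_s = reads * USEFUL_BYTES_PER_READ / 1e9
    print(f"{name}: <= {reads / 1e6:.0f}M reads/s, "
          f"<= {useful_gb_s:.1f} GB/s of useful 8-byte payload")
```

That gives at most 400M reads/s for DDR4-3200 and 600M reads/s for DDR5-4800 per DIMM, so only one-eighth of peak bandwidth arrives as useful 8-byte payload even in this idealized case.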