Today I am taking a look at the HighPoint SSD6204 PCIe Gen3 M.2 RAID card. This is a full-height PCIe 3.0 x8 card that hosts four PCIe 3.0 M.2-22110 slots and supports RAID 0 and RAID 1. This card is like the Gen 3 baby brother to the HighPoint SSD7540, which we previously reviewed. I have used several SSD6204 cards in builds over the past year, and since I was building a server with one anyway, I decided to take a quick look at the card for STH.

HighPoint SSD6204 M.2 PCIe RAID Card

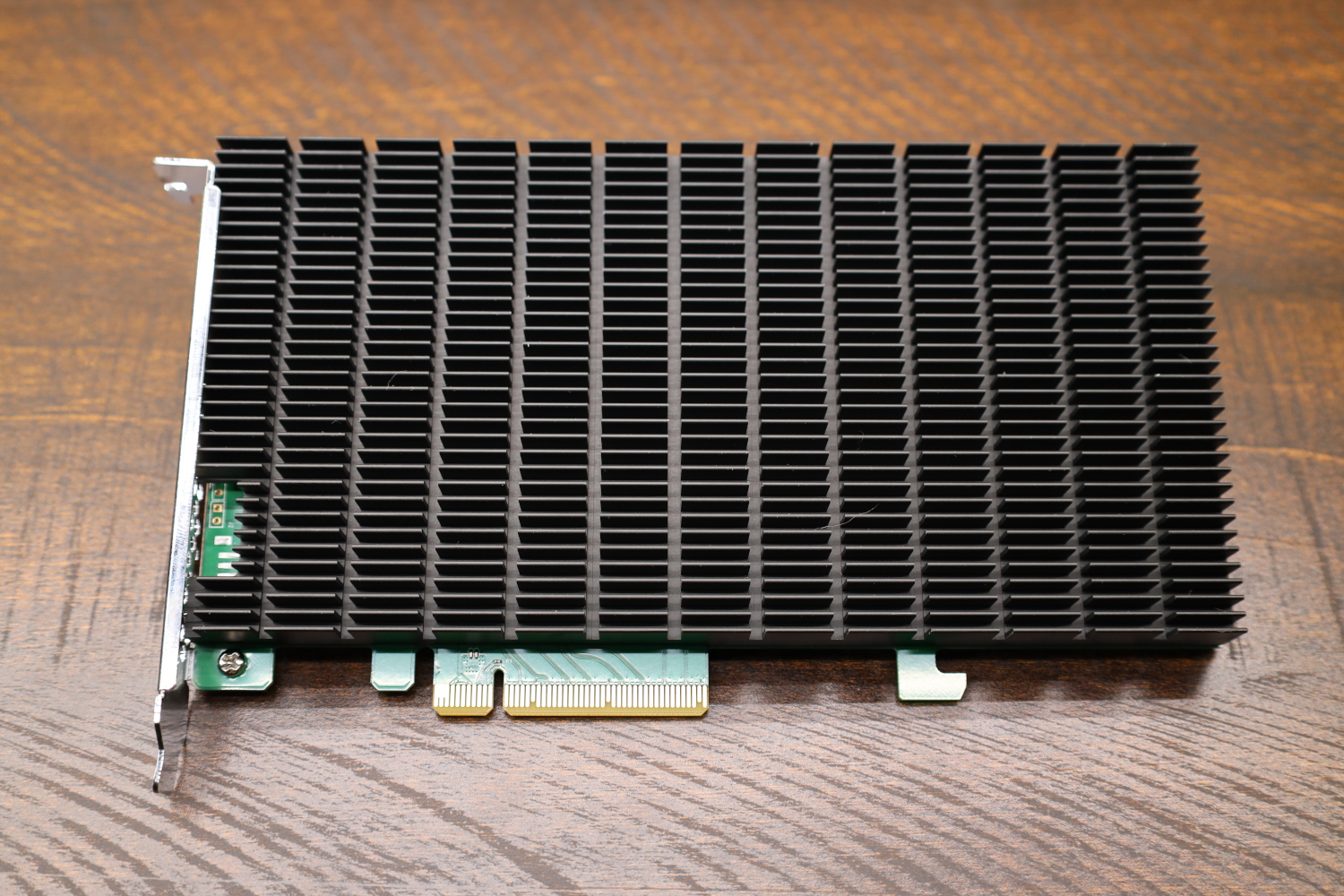

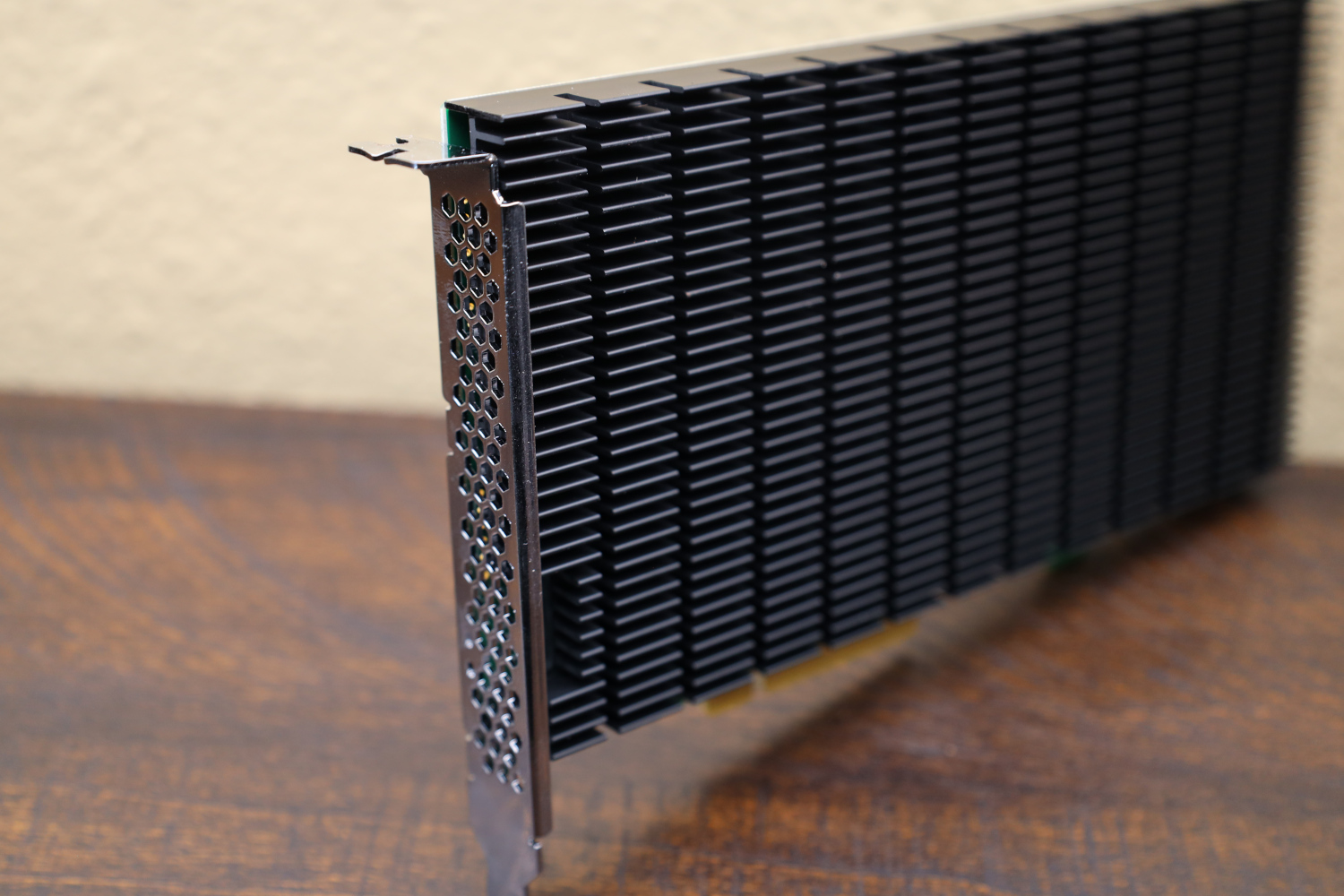

The HighPoint SSD6204 is a full-height PCIe x8 card dominated by its large, passively-cooled heatsink.

The 8-slot HighPoint SSD7540 was actively cooled, while the SSD6204 is passive. The heatsink is still impressively large and with even a moderate amount of airflow should be able to keep most drives under control.

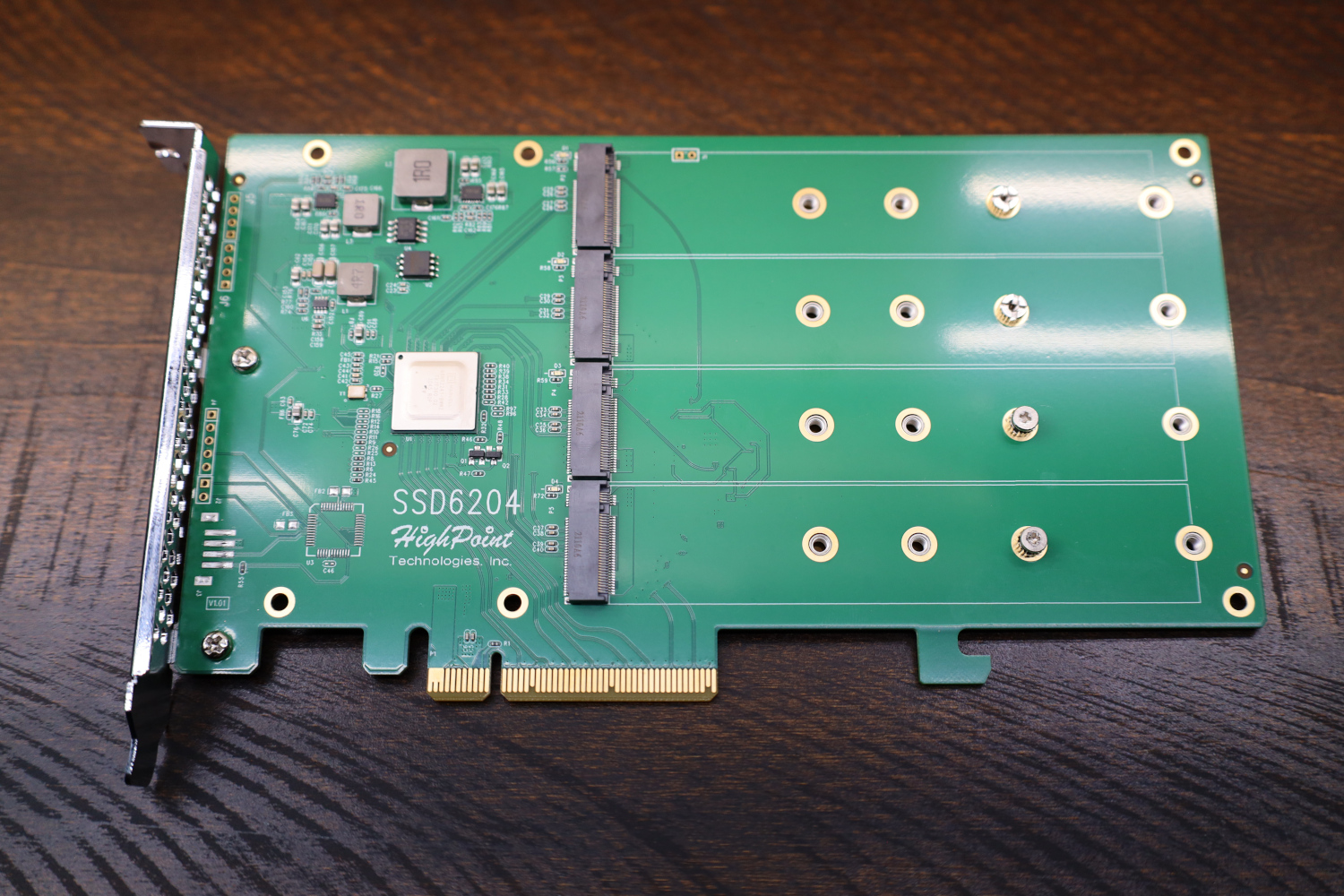

Beneath the heatsink are the four M.2 slots, each accepting SSDs up to M.2 22110 (110mm) in size. This card is only a PCIe 3.0 x8 design, which means that a fully populated card has zero chance of delivering full-bandwidth 4-drive RAID 0; four Gen 3 x4 SSDs would need 16 host lanes to run flat out. These SSDs are connected to a Marvell 88NR2241 NVMe switch.

The 88NR2241 switch handles the RAID 0 and RAID 1 functionality, as well as the host PCIe connectivity. As a result, this card does not require bifurcation to operate. Unfortunately, while the 88NR2241 supports PCIe x8 for the host interface, each individual M.2 drive is only supported at x2 in a 4-port configuration. This will have consequences for performance down the line, which we will get to.
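For a rough sense of what those lane counts mean, here is a back-of-the-envelope sketch in Python. It assumes only the standard PCIe line rates (8 GT/s per lane for Gen 3, 16 GT/s for Gen 4, both using 128b/130b encoding) and ignores packet and protocol overhead, so real-world results will land somewhat lower. The function and constant names are my own for illustration and do not come from HighPoint or Marvell.

```python
# Theoretical one-direction PCIe link bandwidth, before protocol overhead.
# Gen 3 and Gen 4 both use 128b/130b line encoding.
GIGATRANSFERS_PER_SEC = {3: 8.0, 4: 16.0}
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_per_s(gen: int, lanes: int) -> float:
    """Return the theoretical bandwidth of a PCIe link in gigabytes per second."""
    # Each transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    per_lane = GIGATRANSFERS_PER_SEC[gen] * ENCODING_EFFICIENCY / 8
    return per_lane * lanes


if __name__ == "__main__":
    host_x8 = link_bandwidth_gb_per_s(3, 8)
    drive_x2 = link_bandwidth_gb_per_s(3, 2)
    four_drives_x4 = 4 * link_bandwidth_gb_per_s(3, 4)

    print(f"Host link, Gen 3 x8:            {host_x8:.2f} GB/s")
    print(f"One drive behind the switch x2: {drive_x2:.2f} GB/s")
    print(f"Four drives if each had x4:     {four_drives_x4:.2f} GB/s")
```

Under these assumptions, each drive tops out at a little under 2 GB/s on its x2 link, the host link at a little under 8 GB/s, and four drives at a full x4 each would want about twice what the host link can carry.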

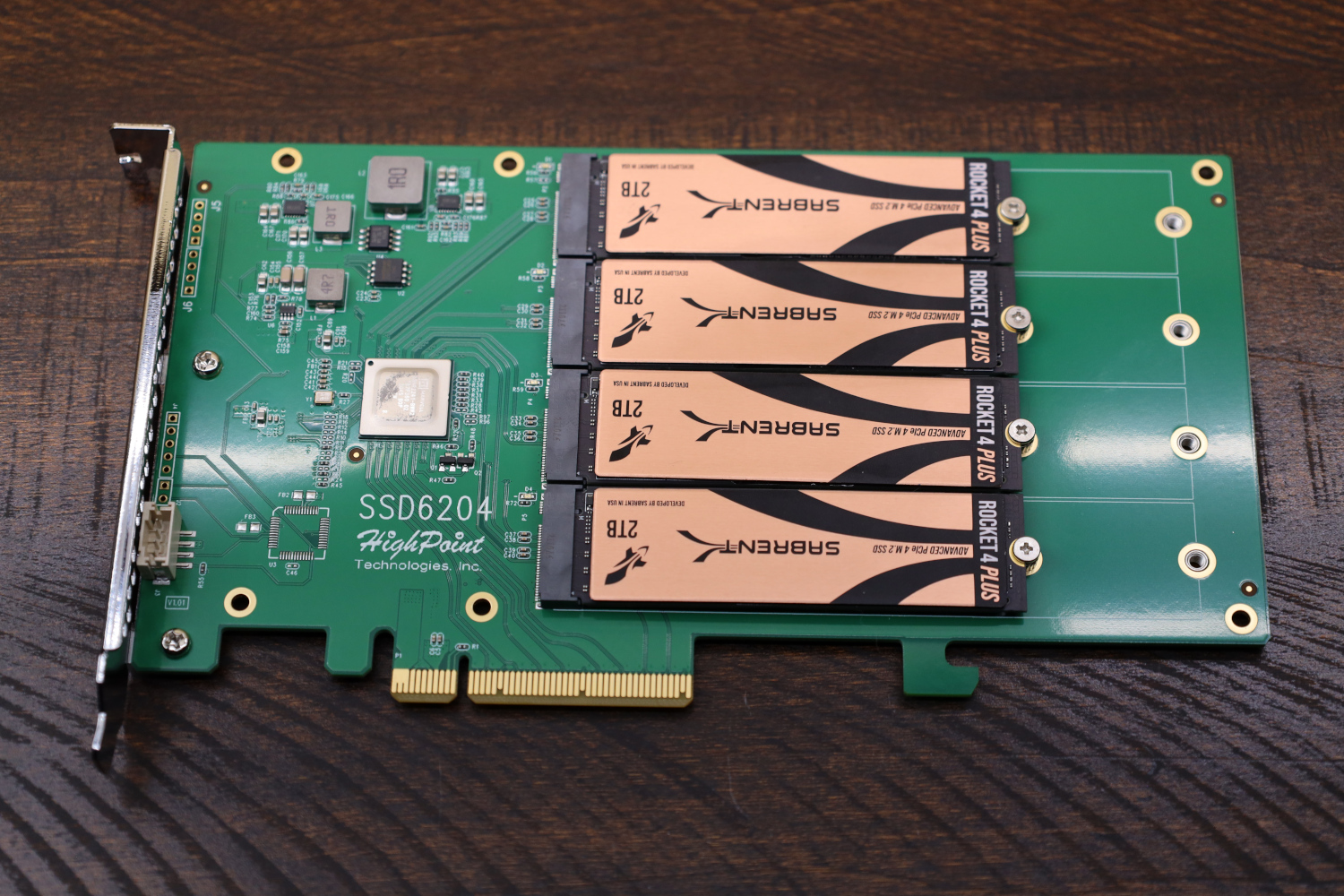

For my testing, I once again used the Sabrent Rocket 4 Plus drives provided by Sabrent. These drives are over-spec for the SSD6204, since they are Gen 4 drives, and the SSD6204 tops out at Gen 3, but for the purposes of my quick testing, they will work just fine.

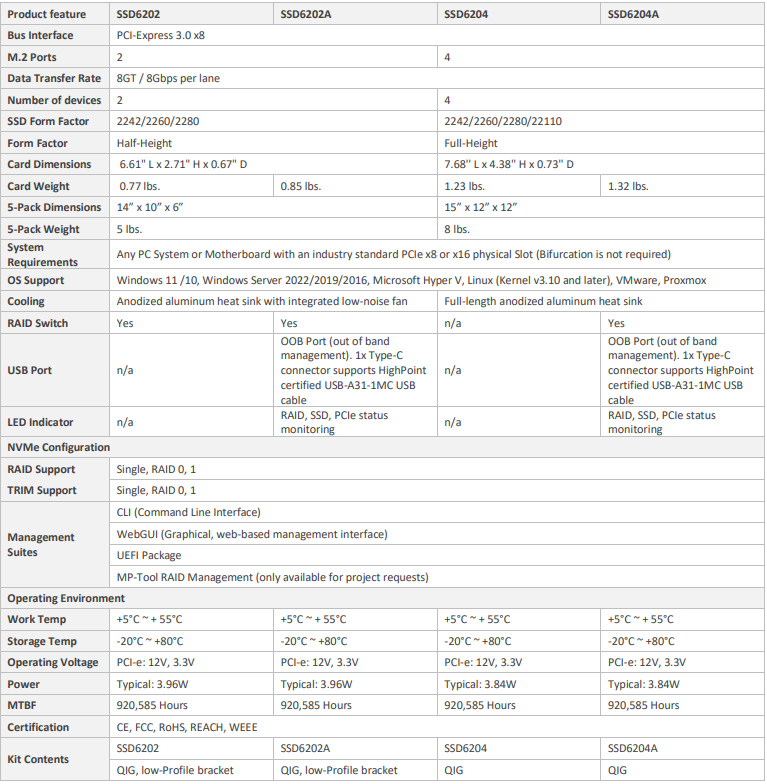

HighPoint SSD6200 Series specs

The SSD6204 I have today is part of the SSD6200 series cards, available in 2 or 4-slot designs.

The 2-slot variants, the SSD6202 and SSD6202A, only support M.2 2280 size drives. With that said, they are half-height models. In addition, it is possible that per-drive performance would improve on the SSD6202 and SSD6202A because the 88NR2241 controller supports x4 connections in a 2-port deployment, but I have not personally tested an SSD6202 model, so I cannot verify that is the case.

The model I have today is the basic SSD6204. The A-suffix models include an out-of-band management port in the form of a Type-C connector, plus onboard LEDs for indicating RAID health. My basic SSD6204 omits both of those features.

SSD6204 Management

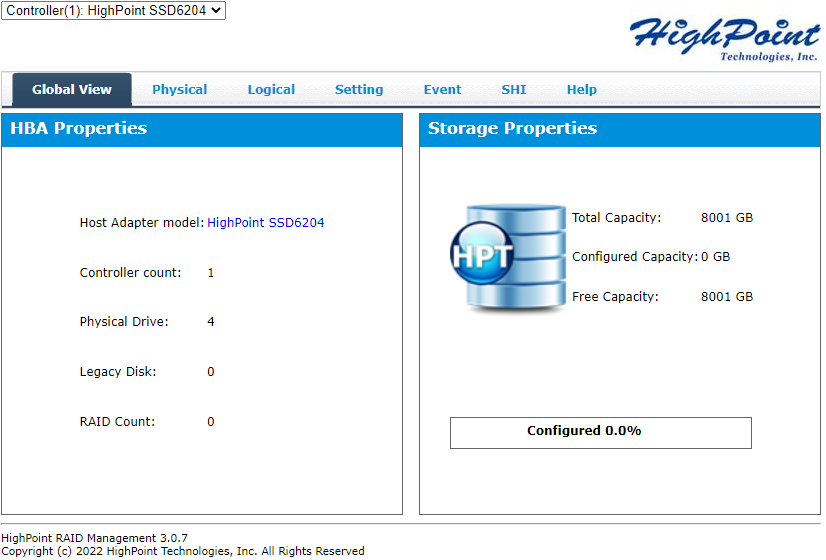

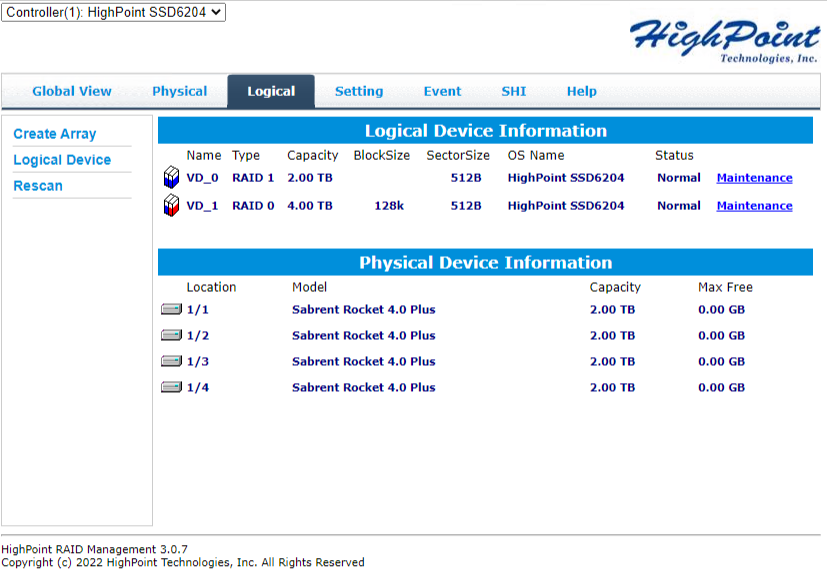

On Windows, the management of the SSD6204 is handled through HighPoint’s RAID Management GUI.

From this GUI, RAID arrays can be created and monitored. This is an interface that I have seen many times over the years, and it seems almost unchanged from my time with a HighPoint RocketRAID 2720SGL that I owned many years ago.

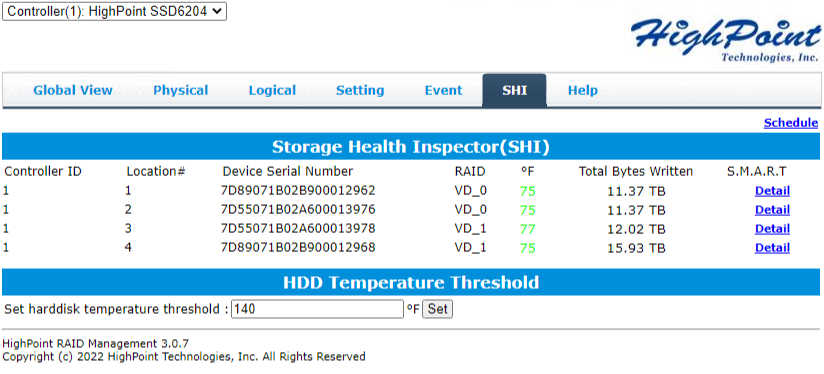

One important part of this interface is the health inspector interface. Individual drive SMART reporting is not passed through to the operating system, so the SHI tab on the RAID interface is where that data can be accessed.

The RAID management utility is also capable of sending alerts in the case of a failure or pending failure detected by SMART. There is also a physical beeper on this card, which I always appreciate.

Test System Configuration

My basic benchmarks were run using my standard SSD test bench.

- Motherboard: ASRock X670E Steel Legend

- CPU: AMD Ryzen 9 7900X (12C/24T)

- RAM: 2x 16GB DDR5-6000 UDIMMs

HighPoint SSD6204 Performance Testing

The HighPoint SSD6204 is equipped with 4x Sabrent Rocket 4 Plus 2TB SSDs and put through a small set of basic tests. As mentioned in the title, this is a bit of a mini-review and I did not have time to run the full test suite, but I wanted to give a general idea of the performance you might encounter. In addition, please keep in mind the SSD6204 card is not heavily marketed for its performance, and you will see why shortly.

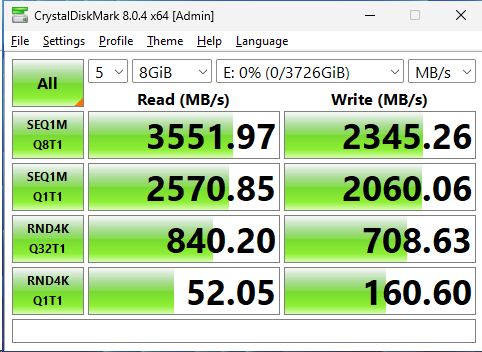

The first test I ran was a single drive connected to the SSD6204. As I mentioned, individual drives are only connected at PCIe Gen 3 x2, a fact which is borne out in the sequential performance results. The sequential results here indicate a fully saturated PCIe Gen 3 x2 interface, and this is the best you will get given those limitations.

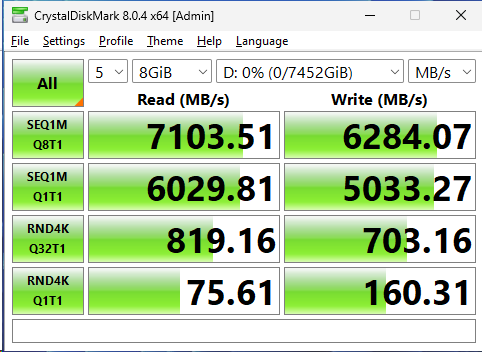

Next, I did a 2-drive RAID 0 array run. Performance has doubled relative to the single drive performance, which is in line with expectations. As a result, performance is now in line with a single PCIe Gen 3 x4 drive, which is not exactly exciting.

Lastly, I did test a 4-drive RAID 0 array and achieved the best performance to date. These results are in line with expectations of a saturated PCIe Gen 3 x8 interface.

That speed is closer to a single PCIe Gen4 x4 NVMe drive.

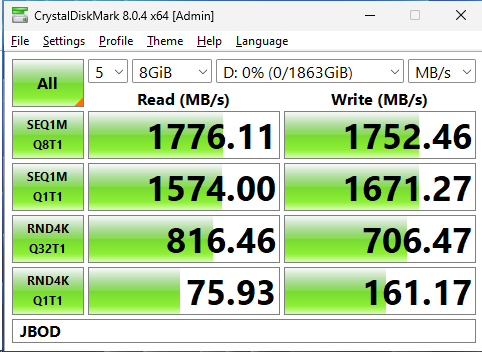

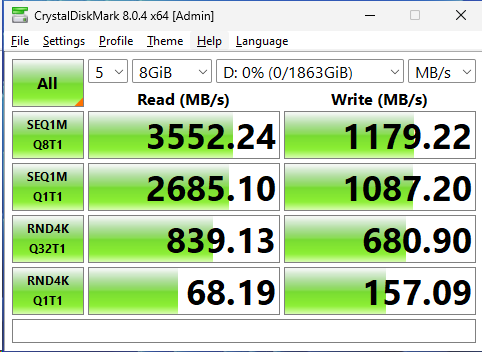

With all of that said, the SSD6204 was never going to be a performance superstar given its specs. What I am interested in it for is the RAID 1 support, so I tested that.

The performance here is actually pretty encouraging. Write speed in RAID 1 is limited to the performance of a single disk as you might expect, but read speed is pulling data from both drives and performs well. This is the configuration I will be using in my server build, and these results are fully acceptable for my deployment.
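The pattern across these runs can be sketched with a very simple idealized model. This is only my approximation, not how the Marvell controller is documented to behave: it assumes each drive can saturate its Gen 3 x2 link, that RAID 0 stripes perfectly, that RAID 1 reads are split evenly across mirrors while every write goes to every mirror, and that the Gen 3 x8 host link is the only other ceiling. All names below are hypothetical.

```python
# Idealized sequential-throughput ceilings for RAID 0 and RAID 1 behind a
# PCIe switch. Figures are theoretical link rates, not measured results.
LANE_GB_PER_S = 8.0 * (128 / 130) / 8   # PCIe Gen 3, one lane, one direction
DRIVE_LINK = 2 * LANE_GB_PER_S          # each M.2 slot runs at x2
HOST_LINK = 8 * LANE_GB_PER_S           # card-to-host link runs at x8


def ideal_array_ceiling(level: int, drives: int,
                        per_drive: float = DRIVE_LINK,
                        host_cap: float = HOST_LINK) -> tuple[float, float]:
    """Return (read, write) ceilings in GB/s for an idealized array."""
    if drives < 1:
        raise ValueError("an array needs at least one drive")
    if level == 0:
        # Striping: reads and writes are spread across every member.
        read = write = drives * per_drive
    elif level == 1:
        # Mirroring: reads can be served by any member, but each write
        # must land on all members, so writes run at single-drive speed.
        read = drives * per_drive
        write = per_drive
    else:
        raise ValueError("only RAID 0 and RAID 1 are modeled")
    # The host link caps whatever the drive side could deliver.
    return min(read, host_cap), min(write, host_cap)


if __name__ == "__main__":
    for level, drives in [(0, 1), (0, 2), (0, 4), (1, 2)]:
        read, write = ideal_array_ceiling(level, drives)
        print(f"RAID {level}, {drives} drive(s): "
              f"read {read:.2f} GB/s, write {write:.2f} GB/s")
```

In this model the 2-drive RAID 1 case reads at the rate of two x2 links and writes at the rate of one, which matches the shape of the results described above even though actual numbers will be lower once overhead is included.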

VMware OS Support

The HighPoint SSD6204 has a trick up its sleeve; it works with VMware ESXi. If you have read my build articles in the past, you might remember that most of the servers I build run VMware. The SSD6204 is a relatively inexpensive path to a RAID 1 NVMe array on an ESX host, and that is exactly what I am using it for. The pictures I showed earlier and the benchmarks I ran were under Windows, but the SSD6204 can also be configured via an ESX command-line utility or even pre-boot in an EFI environment. If you have read this whole article and wondered “But why?” then this is my answer; because this card works in ESX.

Final Words

The HighPoint SSD6204, to me, is a means to an end. I want RAID 1 support on inexpensive ESX hosts, and the SSD6204 does that. My card was purchased for around $360, which to me is an acceptable price to pay for RAID 1.

This solution is not for everyone, but it happens to be the perfect solution for my particular scenario. STH still has at least one more M.2 accelerator card review coming; we are going to delve into some of the cards that require bifurcation next. Until then, if you just need RAID 1 out of your M.2 NVMe drives and you are not too concerned with top-end performance, then the SSD6200 series might work well for you.

As it is the article is a bit light on useful information. Perhaps an idea for future expansion is to compare the performance to vSAN with and without RAID to see if the RAID1 capabilities are actually needed, or if storing bytes in multiple hosts is a better approach in 2023.

David,

I’m sure storing in multiple hosts is a better approach. Unfortunately for me, that requires multiple hosts, which I will not have available in (most of) the places where this card gets used.

In my opinion, the main point of RAID is redundant reliability. For this reason, one would expect a hardware RAID card to include some sort of power loss protection to avoid a resilver when something goes wrong with the host.

I didn’t see any testing that concerns what happens when there is a power loss or when one of the drives is missing from the array.

Is a resilver needed after power loss?

How long does a resilver take?

What is performance like during a resilver?

In case the RAID card fails, is it possible to recover the data without buying another card?

If an SSD is removed from the array, does the array assemble automatically in degraded mode?

How fast is it while running in degraded mode?

How long does it take to rebuild the array after replacing a failed SSD?

One more question. You mentioned SMART data is not passed on to the host. What about TRIM information?

Can the host issue TRIM commands to the RAID array to indicate which blocks are not being used by the filesystem?

Are TRIM commands passed on to the underlying SSDs?

Eric,

I can answer a few of your questions.

Is a resilver/rebuild needed after a power loss? Nope.

In the case the RAID card fails, can you recover the data without the card? In a RAID 1 array, yes. Not sure in a RAID 0 setup, but RAID 0 isn’t for reliability obviously.

If an SSD is removed and then you replace it, does the array auto-rebuild? Yep.

Several of your performance questions will be entirely dependent upon which drives you populate into the array. Performance during rebuild, time to rebuild, performance while degraded, etc. In general terms, since this is just RAID 1, performance drops to the level of a single drive when running degraded. I did not run any benchmarks while I had it rebuilding. The rebuild was moving fairly quickly, but I will be honest that I did not actually wait for it to complete and instead just deleted and recreated the array after confirming the auto-rebuild.

TRIM does pass through, though I do not know if the cards auto-issue TRIM or perform any other form of garbage collection type management without the OS initiating the process.

Have you tried creating a RAID 1 array, then taking out one SSD to find out whether the data is accessible on bare metal (with the NVMe drive in the PC, not in the card)?

I am asking for disaster recovery if the card dies.

It feels to me so disturbing being tied to the absurd hardware compatibility matrix of a product this much.

The fact a producer can use “it’s compatible with XXX” as a value proposition claim just breaks the whole paradigm of virtualisation.

That being said, I would be interested in this product. If I am not mistaken, in a RAID 1 configuration the bandwidth should be high enough to take full advantage of the drives (given that the mirroring “streams” shouldn’t need to leave the controller).

The price seems reasonable as well!

Greetings

Thanks Patrick for the great info. I have two concerns. On the spec sheet it is rated at 0.92M hours, while most enterprise SSDs are rated at 2M hours. Is that a concern?

It is in the $360 price range, which is almost the same as the LSI 9400-16i. That is a Gen3 x8 Tri-Mode card that supports 4 NVMe drives as a direct connection. How does it compare?

Again thanks for everything

Yacoub,

The LSI 9400-16i is an interesting comparison point, and would obviously be the model you should lean towards if you have room for 2.5″ NVMe drives in your chassis. The SSD6204 has the advantage of operating with M.2 format drives, which are a bit more common in the consumer/prosumer space. In other words, I can slap an SSD6204 into a Dell workstation or off-the-shelf server, where that might not be true for an LSI 9400-16i.

Hello. Can you please test if the card works in electrically 1x and 4x slots? This is very important info that is usually impossible to find.

I’d like to add to this as it’s the only review I could find and was quite useful, and it’s difficult to work out exactly what this card does from the HighPoint documentation.

I recently bought the SSD6204A and set it up in RAID 1/Mirror with two Samsung 970 EVO Plus 2TB drives. I got it because all 3 of the SSD drives I’ve bought in the last 5 years have failed and I don’t want to waste any more time. I got the A model only because of the audible beeper alarm – I didn’t want to rely on email notifications, and if you don’t know when a drive fails there’s not a lot of point having RAID. I’ve no use for the OOB port functionality.

I’d have preferred to move the existing SSD from the motherboard to the card, add a second empty drive, then have it rebuild the RAID array from the existing data. That probably can’t work as the manual states that the existing data on the drives will be deleted when you configure the DIP switches for RAID 1. Instead I used two new drives and the data was indeed deleted.

I installed it in the PC, cloned the existing boot SSD onto it with Macrium Reflect, removed the boot SSD, then used the SSD6204A card from that point on. Now I have an extra SSD that I don’t need.

A few issues.

There’s no way to test the beeper alarm, at least without removing one of the drives. I don’t want to do that because it’s too much hassle, and it may degrade the thermal pads unnecessarily. I don’t know if it does or not because the manual doesn’t say, nor do I know where to get replacements.

I tried setting up the failure email notifications on the card, but it’s too difficult. When you get the settings wrong it deletes all the settings you typed in. If you save the settings first it appears you can’t then test it (!), nor can you see the settings or edit them (!!!). Lucky I got the beeper, assuming it works.

I can’t easily see the indicator LEDs once it’s all set up. It would have been nice if there was at least an alarm LED somewhere along the internal edge of the card as well. I could see that.

The web interface displays temperature and has a temperature threshold that triggers… something. I don’t know what, perhaps the email? It doesn’t seem possible to send notifications to Windows like most applications would, and where I’d easily be able to see them.

I could find no other alarm notifications.

As the card deletes existing data when setting up the RAID array I assume it’s writing some additional data to the drives (because otherwise that’s very unfriendly), and it seems unlikely that you could then take a working drive from a failed card and extract the data from it – i.e. you’d need to buy a new card. Both expensive and not necessarily possible in the long term. I can’t find any documentation covering this.

These issues are a bit disappointing as they are all very small design (or documentation) issues and the entire point of the card for me is uptime. It is still worth buying because it does actually appear to work, and I expect it’s going to be more reliable than consumer-grade SSDs. However, this all ends up being quite expensive and I may take a look at an enterprise-grade SSD next time instead.

Hello Will, thanks for the review.

I’m also running ESXi, version 8.

Are there any new NVMe RAID cards on the market now?

You said ‘STH still has at least one more M.2 accelerator card review’ to come. Is this available? Is it also a RAID card?

Thanks

Michael