At Intel Innovation 2022 this week, we saw what might be one of the most interesting pieces of technology for servers, storage, and networking gear. It can re-define the entire space. Specifically, this is the new Intel Silicon Photonics Connector. Let us get into what Intel is doing with the new solution and why it is such a big deal.

This Intel Silicon Photonics Connector is a HUGE Deal

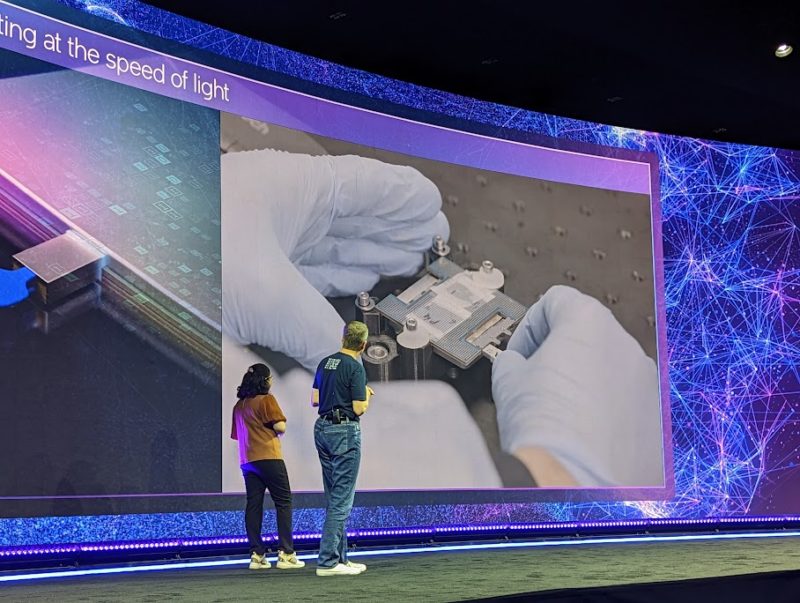

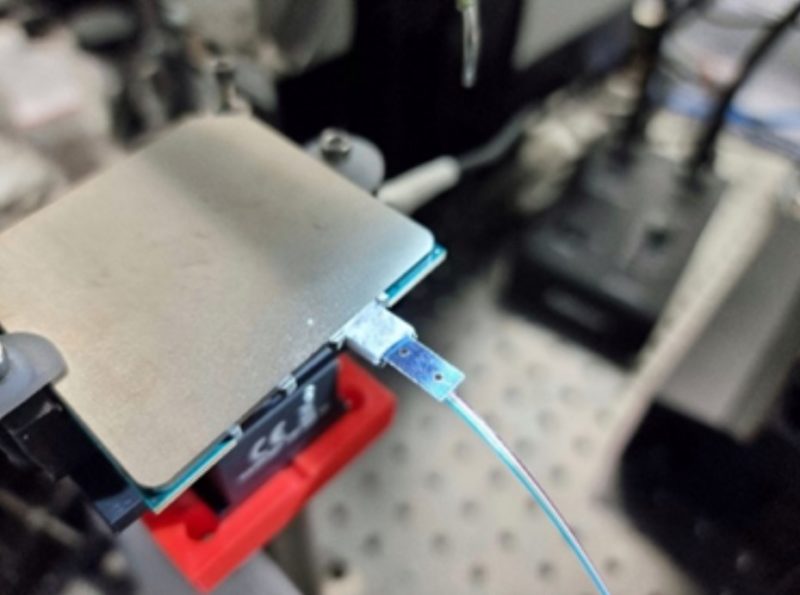

At Intel Innovation 2022’s Day 1 keynote, Pat Gelsinger called an Intel lab in Scotland, and we witnessed a magnificent demo of a new technology: a pluggable form factor for silicon photonics. Part of what made it magnificent is that the video and audio actually worked, even though that is still not a given in 2022. The real magic is that the Intel team showed a live demonstration of plugging in a new pluggable connector for co-packaged optics.

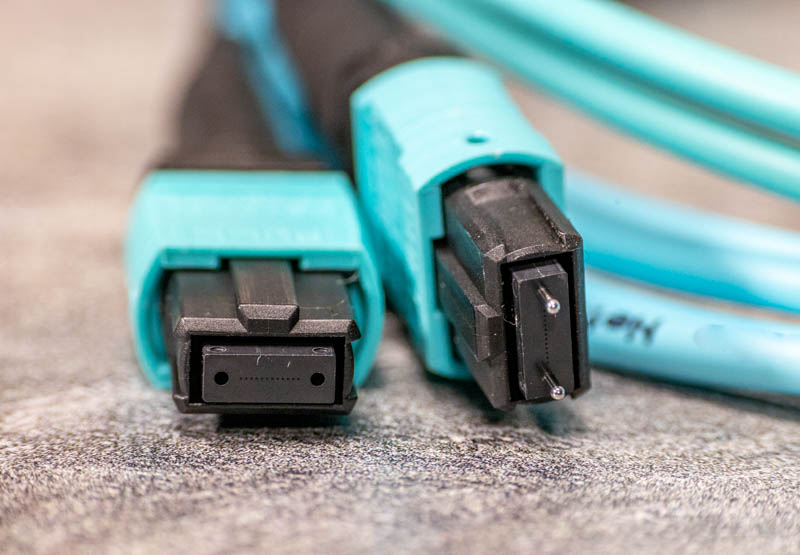

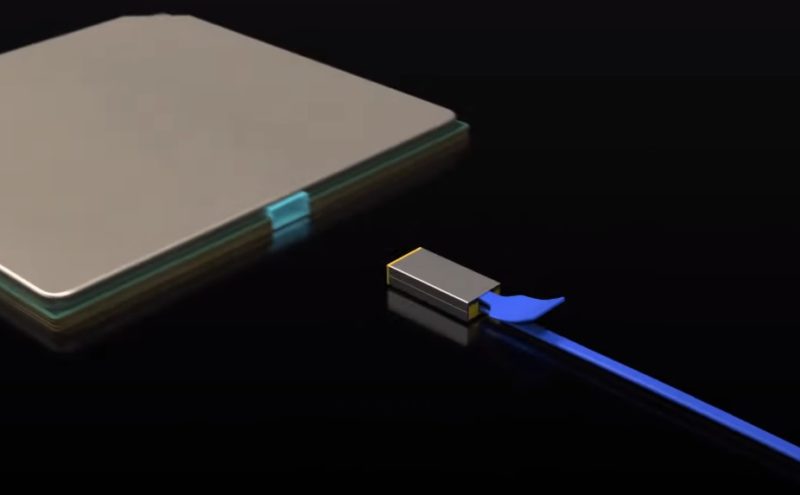

The way to think about this pluggable optic module is that it is a smaller version of an MPO/MTP connector that is designed to connect directly to chip packages.

Just to visualize what this plugs into on your switch or NIC, the 100GBASE-SR4 QSFP28 module on the left is the other end for this cable. You will notice that, unlike the CWDM module (right), multiple dense fibers are being connected along this MTP-12 link. For the 100GBASE-SR4 there are four pairs, so eight of the 12 fibers are being used, but these are the images we had on hand. In some of Intel’s animations, they actually start with an MTP-12 cable and then show re-packaging it into the new silicon photonics connector.
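To make the fiber math above concrete, here is a minimal sketch of the count for a parallel-optic link such as 100GBASE-SR4 on an MTP-12 connector. The class and field names are our own for illustration, and the 25 Gb/s figure is the nominal per-lane data rate rather than the exact line rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParallelOpticLink:
    """Illustrative model of a parallel-fiber link (names are hypothetical)."""
    lanes: int             # lanes in each direction
    gbps_per_lane: float   # nominal data rate per lane
    connector_fibers: int  # fiber positions in the connector

    def fibers_used(self) -> int:
        # One transmit fiber and one receive fiber per lane.
        return 2 * self.lanes

    def fibers_unused(self) -> int:
        return self.connector_fibers - self.fibers_used()

    def total_gbps(self) -> float:
        return self.lanes * self.gbps_per_lane


# 100GBASE-SR4: four lanes each way over a 12-fiber MTP/MPO connector.
sr4 = ParallelOpticLink(lanes=4, gbps_per_lane=25.0, connector_fibers=12)
print(sr4.fibers_used(), sr4.fibers_unused(), sr4.total_gbps())  # 8 4 100.0
```

Four fiber positions sit dark in this case, which is part of why a denser, purpose-built connector is attractive when the fibers terminate at a chip package.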

This is delicate work. Here is an example of the careful lab work happening on this early unit.

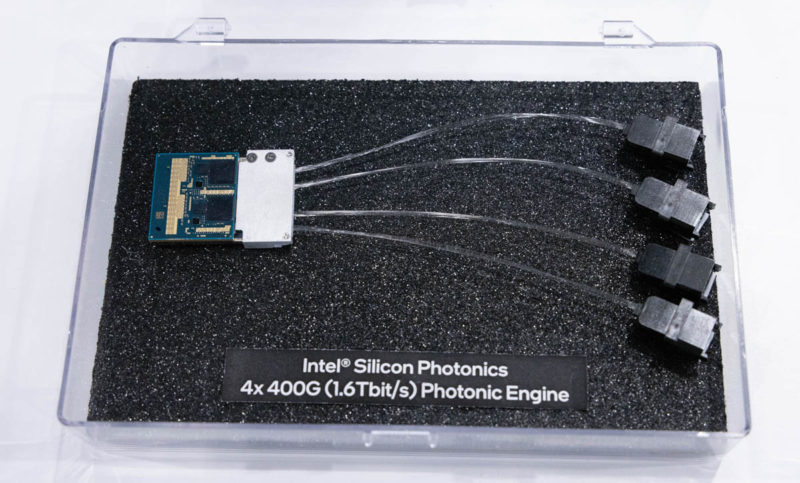

While many have seen networking pluggable optics, the current world of silicon photonics has a very physical challenge. Intel knows how to manufacture silicon photonics modules, but putting them onto a chip is a challenge because one needs to connect fiber to the chip directly. Most of the current approaches involve “pigtails” where short fiber runs go from the chip to a secondary connector.

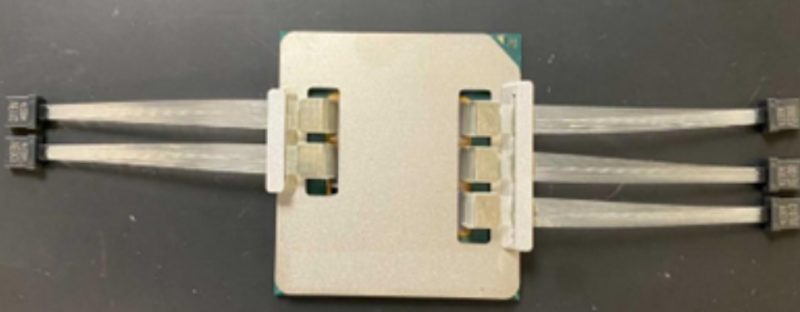

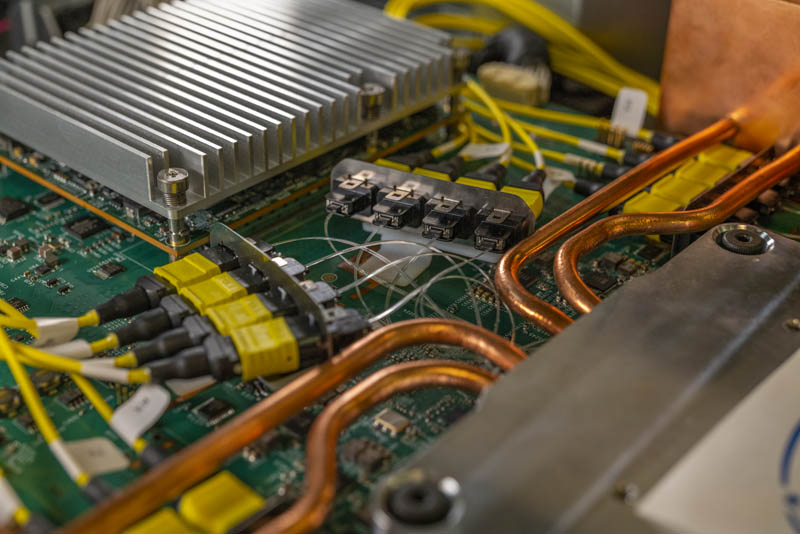

We have seen these pigtails recently. One example is the 4x 400G version we saw at Intel Vision 2022.

We have seen these pigtails as well in our pre-pandemic Barefoot co-packaged optics piece. Here you can see the co-packaged optics pigtails that look like the ones above connected to MPO/MTP connectors in yellow.

For more on that, here is the video from when STH YouTube had 10,000 subscribers, pre-pandemic. STH YouTube just hit 100,000 a few hours ago.

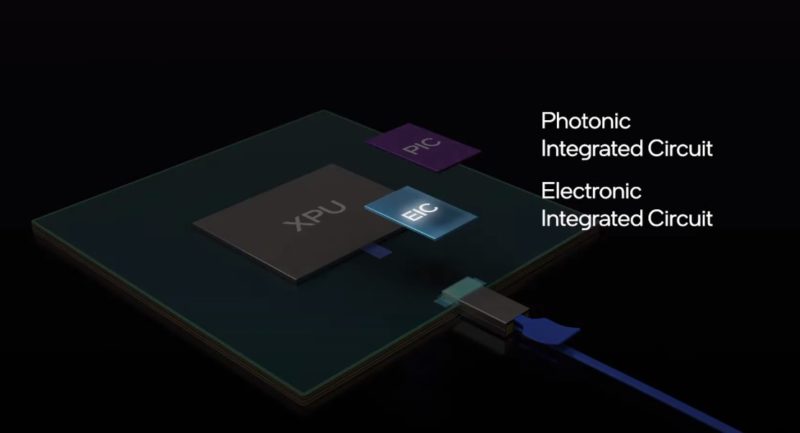

Getting a bit into the demo we saw on stage, Intel has a Photonic Integrated Circuit (PIC) and an Electronic Integrated Circuit (EIC) together on the XPU package, and that pair handles the on-package electrical signaling to the photonics. Keeping electrical signaling on a package takes significantly less power than driving signals off-package. Given I/O power demands, this is going to be a huge deal in the future. Network switches are already starting to run into this challenge just given how much I/O they have. That will be one of the first segments to transition chips to co-packaged optics. It will likely just happen a generation or two later than what we showed in that 2020 piece.
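For a rough feel of why I/O power matters, I/O power is simply bandwidth multiplied by energy per bit. The sketch below uses placeholder numbers that are purely hypothetical and not Intel figures: the switch bandwidth and both pJ/bit values are assumptions chosen only to show the arithmetic.

```python
def io_power_watts(bandwidth_tbps: float, picojoules_per_bit: float) -> float:
    """I/O power = bits per second * joules per bit."""
    bits_per_second = bandwidth_tbps * 1e12
    joules_per_bit = picojoules_per_bit * 1e-12
    return bits_per_second * joules_per_bit


# Hypothetical placeholders, NOT measured or vendor-published values.
HYPOTHETICAL_SWITCH_TBPS = 51.2
HYPOTHETICAL_OFF_PACKAGE_PJ_PER_BIT = 15.0  # long electrical reach to front-panel optics
HYPOTHETICAL_ON_PACKAGE_PJ_PER_BIT = 5.0    # short electrical reach to co-packaged optics

off_pkg = io_power_watts(HYPOTHETICAL_SWITCH_TBPS, HYPOTHETICAL_OFF_PACKAGE_PJ_PER_BIT)
on_pkg = io_power_watts(HYPOTHETICAL_SWITCH_TBPS, HYPOTHETICAL_ON_PACKAGE_PJ_PER_BIT)
print(f"off-package: {off_pkg:.0f} W, on-package: {on_pkg:.0f} W")
```

Because Tb/s times pJ/bit works out directly to watts, every picojoule per bit saved is worth one watt for each terabit per second of I/O, whatever the real figures turn out to be.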

Intel’s innovation is something that looks like an AOC, or active optical cable, but is apparently more akin to a specialized multi-fiber connector like an MTP connector.

If you look closely at Intel’s test package, there is something else happening. We can see one connector installed, but there appear to be spaces for two more that have black covers on in this photo.

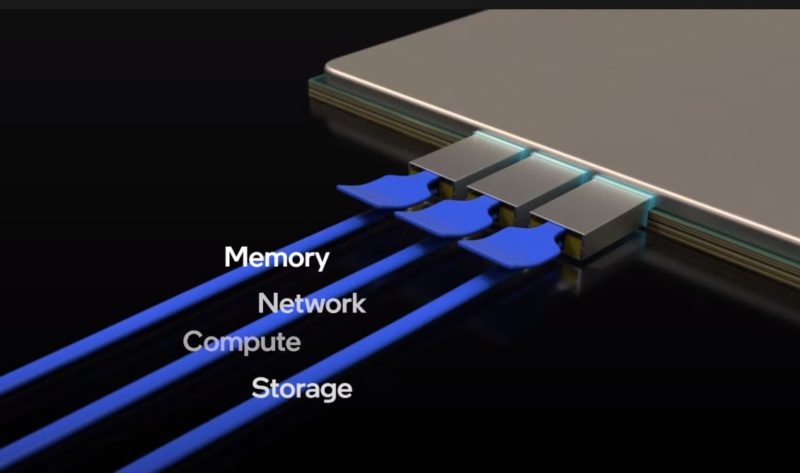

While that may seem far-fetched at first, consider that Intel’s own renderings depict a very similar package with three connections. It looks like not only is Intel delivering co-packaged silicon photonics with a pluggable connector form factor, but it is already working on multi-connector solutions with tons of bandwidth.

Of course, with multiple fibers, one does not need to have specific fibers for memory, network, compute, and storage, but the point is this is the vision. Intel can connect devices through optical networking cables.

Final Words

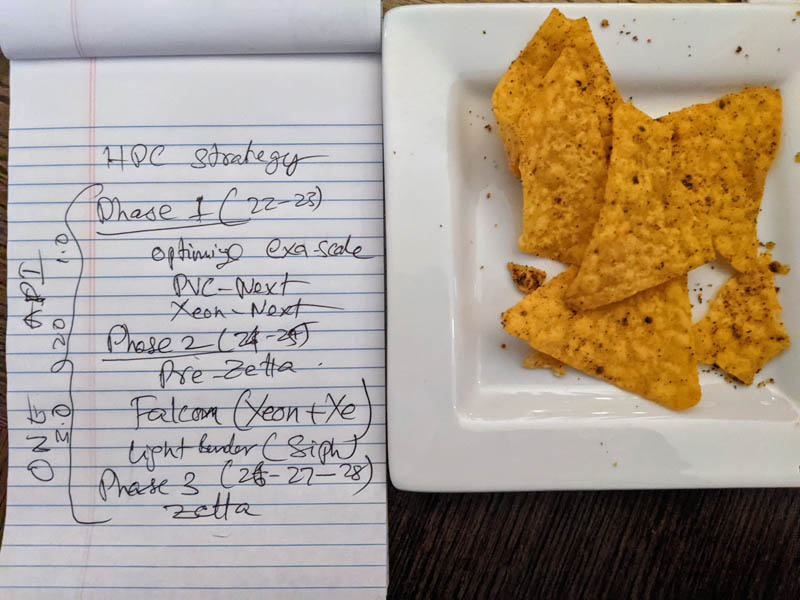

The “so what” of this is truly awesome. Reading the tea leaves a bit, here were Raja Koduri’s notes (with Cool Ranch Doritos) from the Raja’s Chip Notes Lay Out Intel’s Path to Zettascale piece:

You will see something called “Lightbender” or “Light bender” as a codename and “SiPh” shorthand for silicon photonics in the 2024-2025 era along with Falcon Shores.

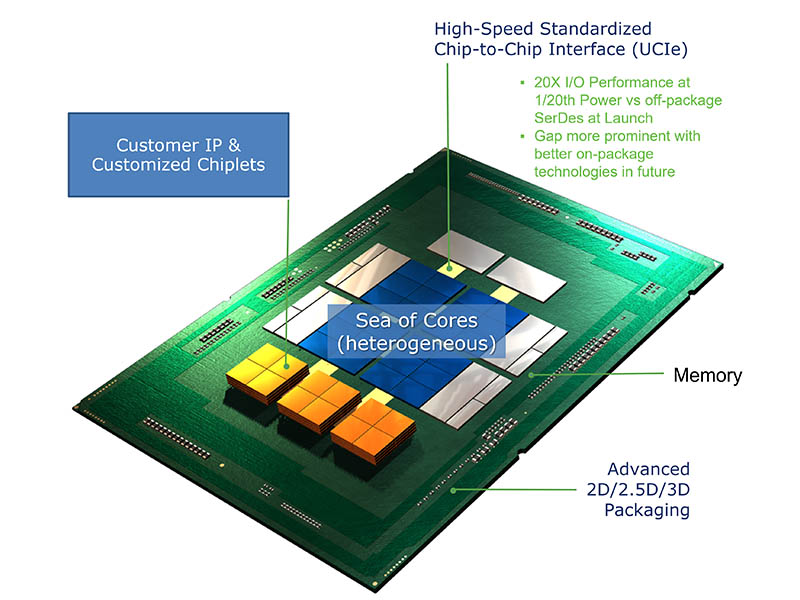

Intel’s strategy is to have a silicon photonics tile that it can co-package with other resources and expand chips beyond current packaging technology using optical connections. Imagine, instead of being limited to 6-8 HBM packages due to packaging considerations, that the HBM could sit off-package and be connected via optics, thereby allowing larger HBM footprints.

We know chips are going to multiple tiles as we see from UCIe, so this will be a silicon photonics tile that will have to incorporate some form of EIC and PIC as shown in the Innovation 2022 demo.

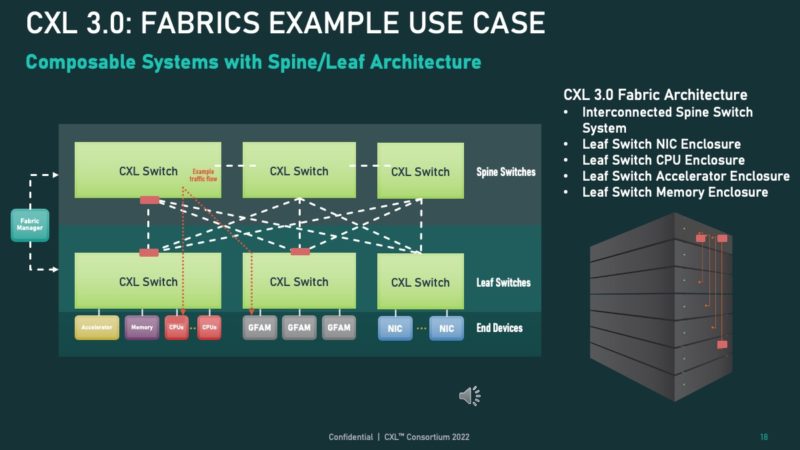

Where this also gets exciting is that in CXL 3.0 we can have fabric topologies. If you want to enable larger rack-scale high-performance CXL communication, this would be a very useful technology.

The innovation shown at Innovation (yes that just happened) 2022 is not that Intel can do silicon photonics. Hyper-scalers already buy millions of silicon photonics modules in pluggable form factors from Intel. One of the biggest challenges to co-packaged optics and the adoption of silicon photonics in chips is physically how to connect them. The pigtail approach is one that is often seen as challenging for real-world service. Intel showed its vision of going beyond optical pigtail bundles to a familiar pluggable connector-based approach. While we may not be seeing the final mechanical design, this is one of those small innovations where we expect to see some form factor standardized over the next few years, and that should help adoption. Between UCIe, CXL, and the next generation of network switches, this is an area that needs a common path forward. That is what makes the Intel Silicon Photonics Connector a huge deal.

Sorry but it just reminds me of the constant failure mode of Intel operations and how Thunderbolt was promoted before it was born and adopted by Apple to its own benefit. We don’t need tech previews.. we know your labs are a decade away from what we poor simple people are given. Make it happen or stop talking. Same sh*t as the 1g to 2.5g to 5.0g to 10g that would take 3 decades to complete. We will all probably be dead by that time.

Despite Daniel having reservations regarding the value of this piece, I personally find this crazy exciting. Advances in optics really are one of the missing links for connecting systems at scale, at distance and most importantly to reduce cost so that multi TB/s bandwidth connections eventually become the norm, rather than just for the organizations with very deep pockets.

Daniel, I can tell you in the enterprise space we’ve been at 100g since about 2016. It has been very slow to go to 400 and beyond.

I echo your sentiments that everything didn’t just go 10g – this 2.5 and 5g nonsense they are doing in the consumer space is heinous. You’re far better off getting an older managed 10g copper switch and using it than getting some consumer grade “current-gen” 2.5g garbage.

Thanks Patrick! This is good enabling tech. RJ45 in its day was a breakthrough, eh? Let’s hope Intel takes this to market and licenses it as promiscuously as possible. High speed switches are too hot, this ought to help with that. Perhaps a trade off situation between even more dense switches or less heat.

@Daniel, I agree about 2.5G, 5G. It’s a scam AFAICT, much like the way mobos have become dollar for dollar less flexible and capable over the past 5 years or so. But… if you are adventurous and your systems have a free slot each, look into running used QDR Infiniband or 10GbE gear bought off ebay. I can tell you that QDR IB will not be a bottleneck for most home/non-hyperscaler systems.

How easy is it to terminate? Someone has to run it throughout your home and/or business.

Also what about POE – with so much POE these days, where does it leave that?

Excellent. I have been waiting 20+ yrs for such progress. About 8 yrs ago, I wrote up, for prototype development, a multi spectral, micro grating tuned, free space version, e.g. multi channel free space laser comm, but with all the interfacing micro optics, planar waveguides…, embedded between CPU and MB, optically interfacing point to point (socket to slot), memory, PCIe, network.

TbE is coming soooooooooon