Today Arm announced its next-generation cores for applications that need higher per-core performance. Dubbed the Arm Neoverse V2, or “Demeter,” this is the core design that will be used in the NVIDIA Grace CPU when it launches in 2023.

Arm Neoverse V2 Cores Launched for NVIDIA Grace and CXL 2.0 PCIe Gen5 CPUs

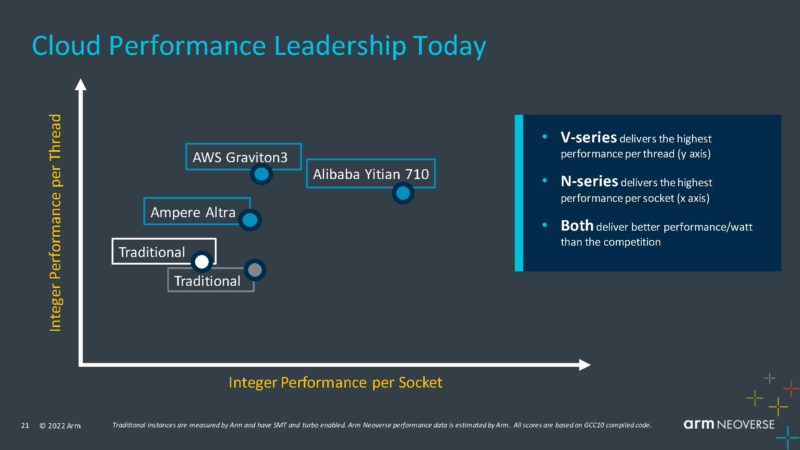

During the event today, Arm recapped its data center CPUs built on Arm Neoverse cores, such as the A64FX powering Fugaku, the Ampere Altra / Altra Max, AWS Graviton3, and Alibaba Yitian 710. For some reason, this slide leaves out the Huawei HiSilicon Kunpeng 920 Arm server CPU.

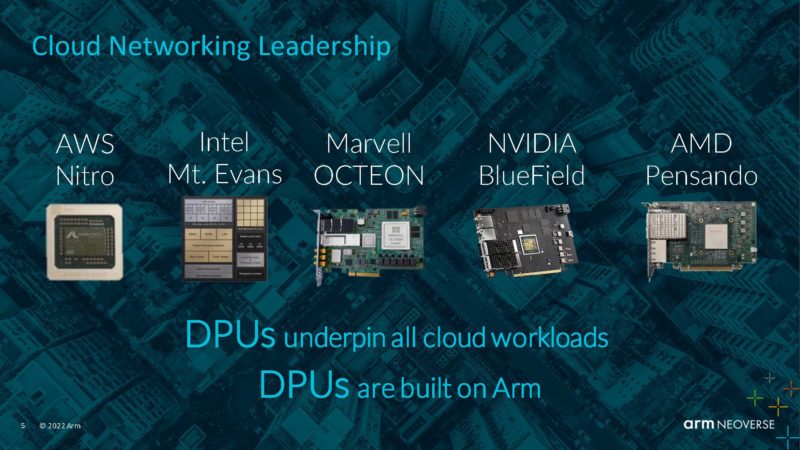

Arm also says that it is leading in the DPU space, with designs such as AWS Nitro, Intel Mt. Evans, Marvell Octeon 10, NVIDIA BlueField, and AMD Pensando. Companies like Fungible have struggled, partly because their chips are built on architectures like MIPS instead of Arm.

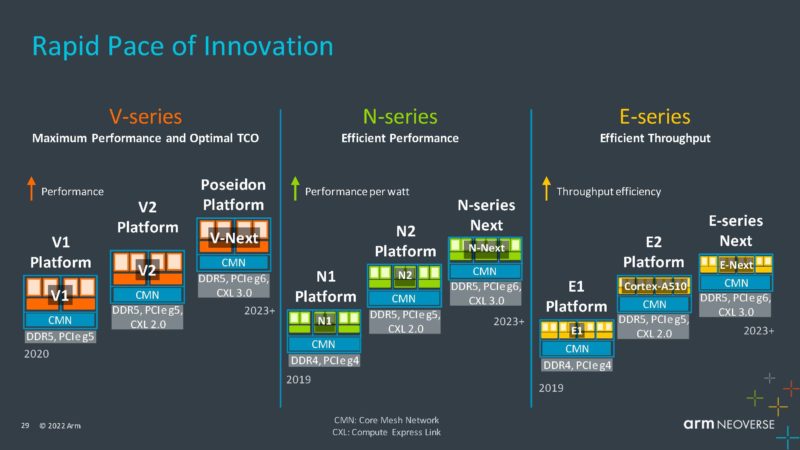

According to Arm’s diagram, AWS Graviton3 is a V1-series customer. The Altra / Altra Max use N1 cores. The Yitian 710 uses N2, but with higher core counts. Unfortunately, Arm does not have the Altra Max on this chart.

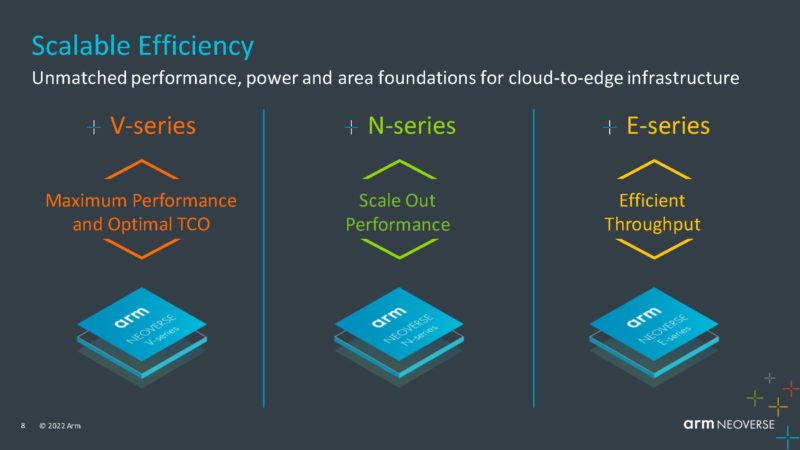

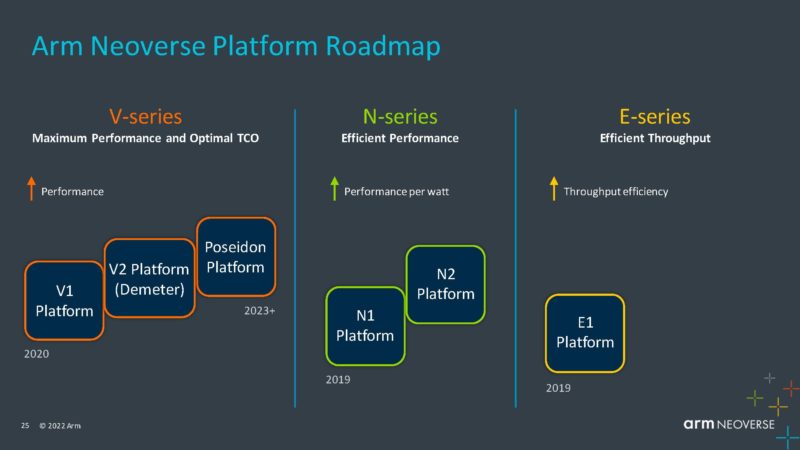

For a quick overview of the difference between the Neoverse V, N, and E-series cores: each targets a different performance point. V-cores deliver higher performance per core at higher power. E-cores are designed for efficiency, scaling at lower power. N-cores sit between the two and are designed for scale-out.

The Arm Neoverse roadmap now shows the Neoverse V2 “Demeter” platform, followed by the V3 “Poseidon” platform coming next year.

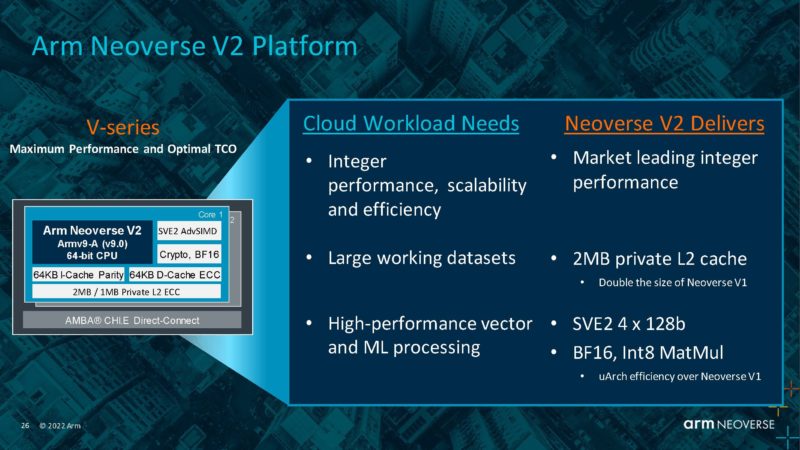

The Arm Neoverse V2 platform is a new Armv9 core designed for higher performance than the N2. Usually the N core launches first and the V core follows, so we have had N1, V1, N2, and now V2.

Another big change is the new L2 cache of up to 2MB. For cloud-focused chips, L2 size is important. AMD, by contrast, has focused on L3 cache, as we saw with Milan-X. Adding more L2 cache provides more performance, but it also increases the size of each core.

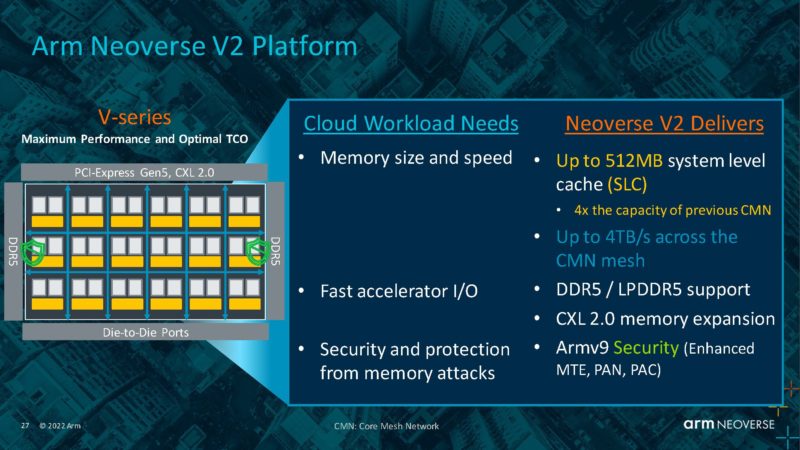

Part of the Neoverse V2 platform is the ability not just to add many cores, but also to build a larger system. That means adding features like CXL 2.0 support, security features, and large caches and memory with DDR5 and LPDDR5(X) support.

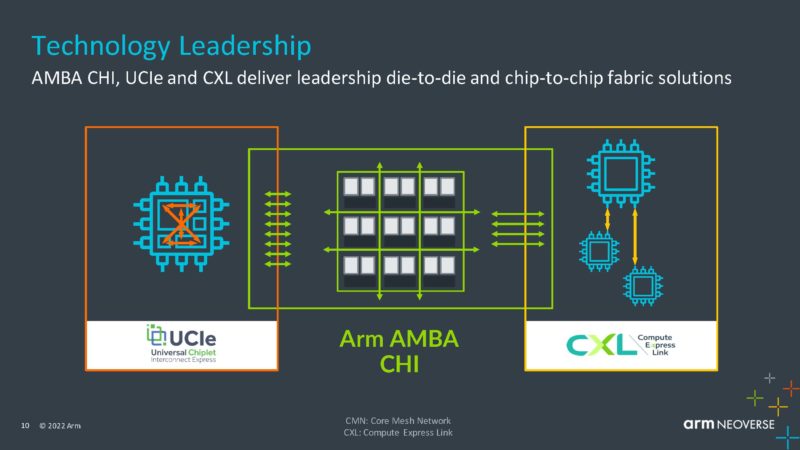

Arm is investing in AMBA CHI as its fabric, alongside UCIe and CXL: UCIe for chiplets, and CXL for expansion and new topologies.

Beyond the Neoverse V2 and Neoverse N2 for the PCIe Gen5 and CXL 2.0 generation come V-Next and N-Next (V3 and N3?). For lower-power markets, there is the new Neoverse E2, which uses the Cortex-A510.

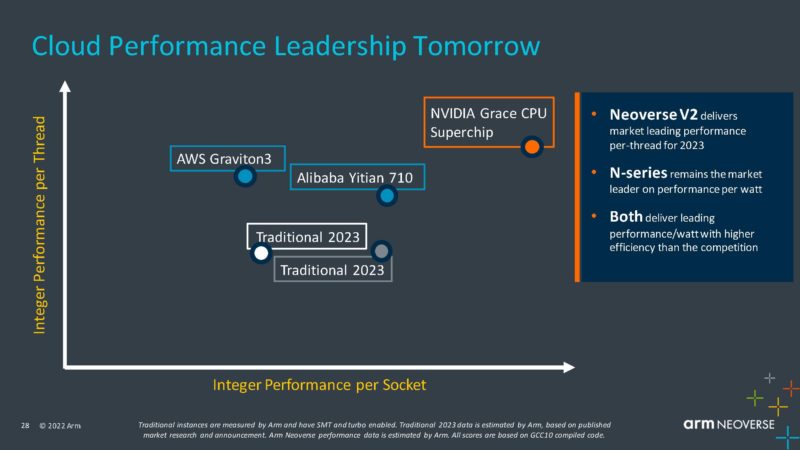

The big launch customer for the Neoverse V2 is NVIDIA Grace. The “Grace CPU Superchip” is a two-chip, single-package design with an interconnect linking its two 72-core Grace halves. Arm’s chart does not include a scale, but the x86 2023 projections seem a bit conservative based on what we have seen thus far. Arm declined to share detailed PPA (power, performance, area) benefits of V1 versus V2 at this launch.

One consistent theme is Arm’s focus on integer performance throughout this presentation. x86 CPUs have been designed to deliver both floating-point and integer performance for so long that integer performance is an area where Arm and its partners can better optimize their chips for cloud workloads.

Final Words

The big item we always mention with an Arm CPU core launch is that the cores themselves are not a product. This is akin to Intel announcing its Sapphire Rapids cores months before launch, or AMD announcing Zen 4 before launching Genoa. Still, we expect to see more V2-based product lines launch in 2023.

Developers interested in what it is like to work with Arm CPU and NVIDIA GPUs/DPUs? Join @ServeTheHome Patrick Kennedy as he shares what is possible. Video available 9/19.

Register GTC free: https://t.co/YURgdBkhng

Session link: https://t.co/nhqkc1STyg #GTC #NVIDIA #AI #Arm pic.twitter.com/ReCCpOWN9w

GIGABYTE Server (@GIGABYTEServer), September 14, 2022

In more immediate news, today it was announced that I will have a session next week at NVIDIA GTC showing off an Ampere Altra Max-powered Gigabyte server with NVIDIA A100 GPUs. It was quite easy to get running, a far cry, even with accelerators, from what we saw years ago with the Cavium ThunderX. Having experienced the pain of the 2016 generation, I found the relative ease of getting an Arm server up and running with NVIDIA GPUs eye-opening (the BlueField-2 DPU worked as well).

More coming with Arm on STH in the near future.