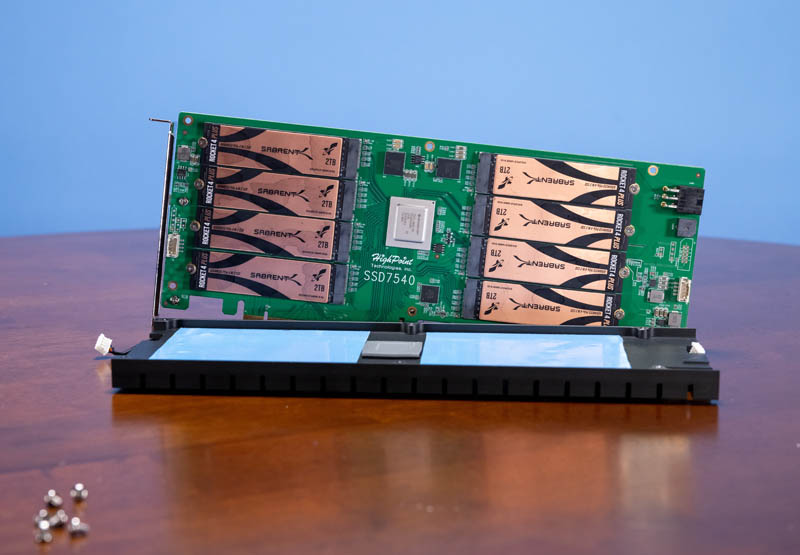

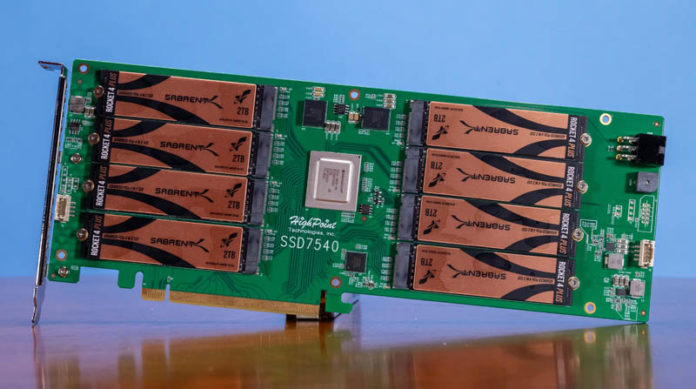

Today I am taking a look at the HighPoint SSD7540 PCIe Gen4 M.2 RAID card. This is a full-height PCIe 4.0 x16 card that is host to 8x PCIe 4.0 M.2-2280 slots, and allows RAID 0, 1, and 10 functionality. Sabrent participated in this review by graciously providing eight of their Rocket 4 Plus 2TB drives, and I am very grateful to them as it would be hard to review something like this without fully populating the card with identical drives. This review is the first in a series of PCIe M.2 RAID cards, so stay tuned for future reviews! For now, let us see how the SSD7540 fares.

HighPoint SSD7540

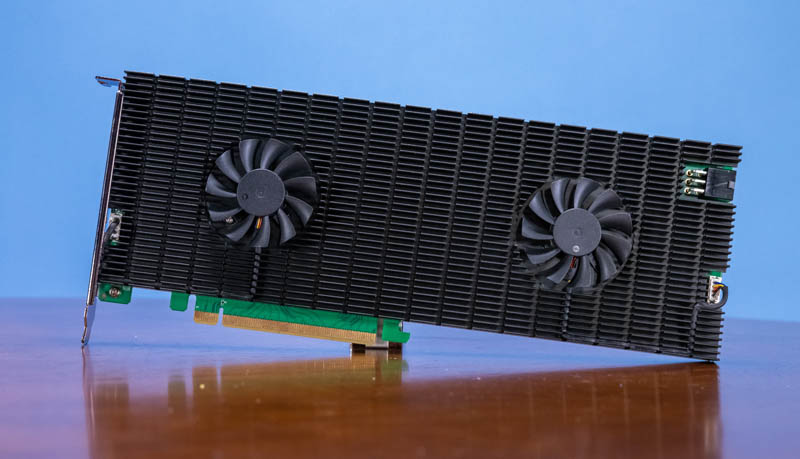

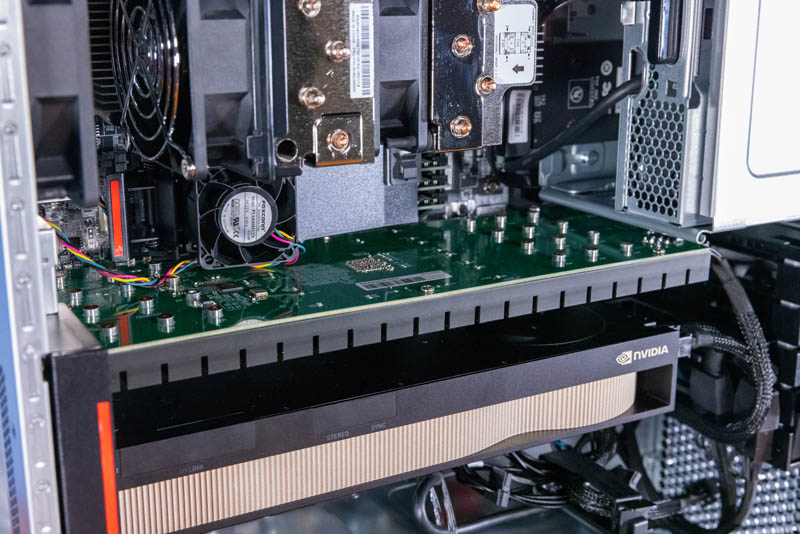

The first impression I had of the SSD7540 was simply its size; this card is very large.

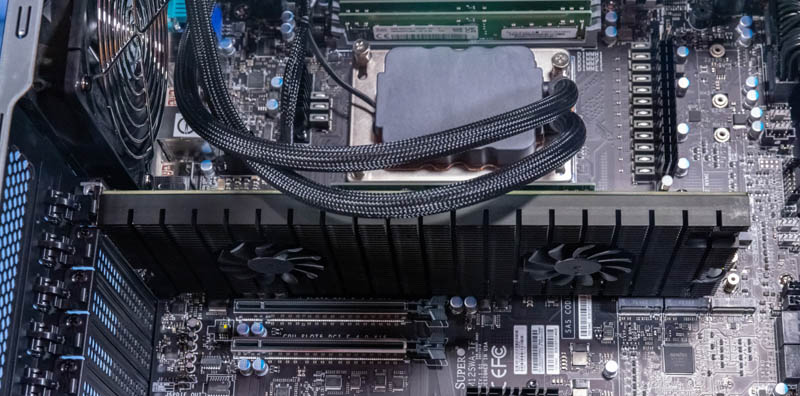

The front of the SSD7540 is dominated by its large heatsink equipped with twin fans. M.2 SSDs are known to generate quite a bit of heat, and 8x of them will definitely require active cooling.

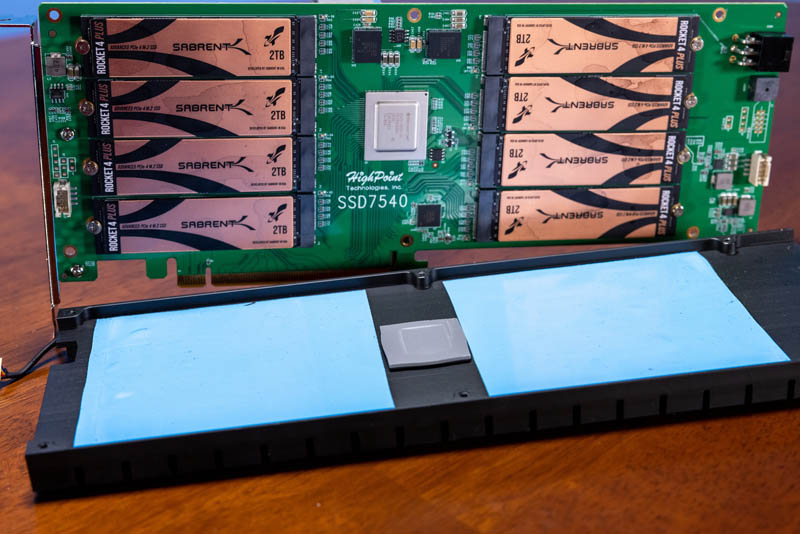

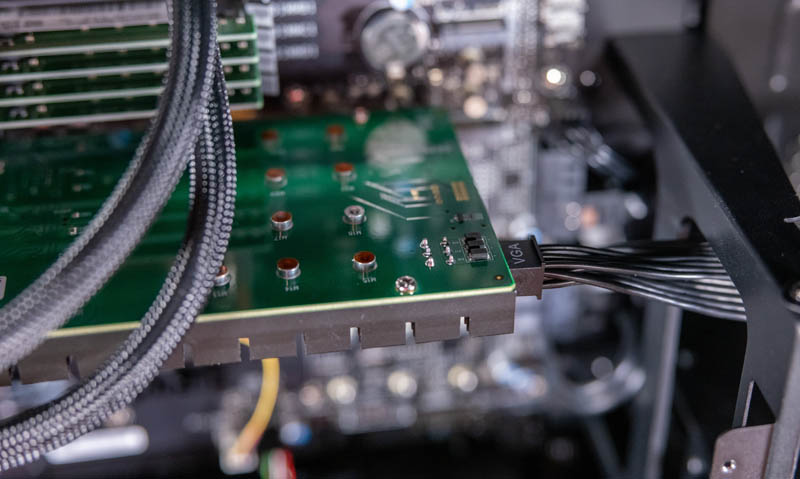

On the end of the card, we have one of the fan connections, as well as a 6-pin power connector. Removing the heatsink reveals the good stuff inside.

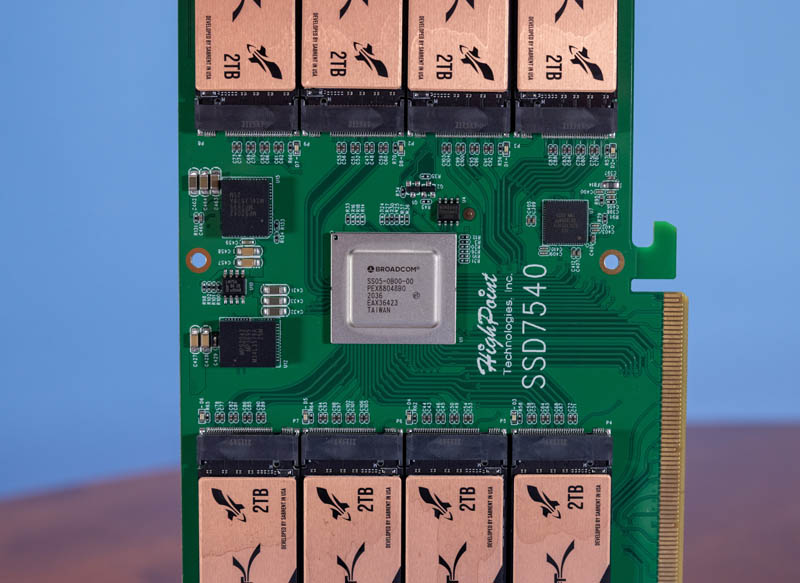

Eight PCIe 4.0 x4 M.2-2280 slots flank the Broadcom PEX88048B0 PCIe switch chip in the center. This chip hosts enough PCIe lanes to allow each individual M.2 SSD their own dedicated PCIe 4.0 x4 lanes. The main advantage of using a PCIe switch chip like this is that bifurcation is not required on the PCIe slot where this card is connected. Other than the large PCIe switch chip, there is no controller on this card. That is because fundamentally we are dealing with a software-based RAID solution.

The connection to the host interface is a PCIe 4.0 x16 slot, so there is a 2:1 lane oversubscription at play, but there is clearly a lot of bandwidth available. For users that demand even faster performance, HighPoint has a technology they call ‘Cross-Sync RAID’ that allows you to utilize a pair of SSD7540 cards in tandem for double the performance, though this is not something we tested.
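The 2:1 figure falls straight out of the lane counts. As a minimal sketch, using only the slot and lane numbers from this review (the function name `oversubscription` is purely illustrative):

```python
# Lane counts taken from the review: eight M.2 slots at x4 each, one x16 host link.
M2_SLOTS = 8
LANES_PER_SLOT = 4
HOST_LANES = 16


def oversubscription(slots: int, lanes_per_slot: int, host_lanes: int) -> float:
    """Ratio of downstream (drive-facing) lanes to upstream (host-facing) lanes."""
    return (slots * lanes_per_slot) / host_lanes


ratio = oversubscription(M2_SLOTS, LANES_PER_SLOT, HOST_LANES)
print(f"{M2_SLOTS * LANES_PER_SLOT} downstream lanes over {HOST_LANES} host lanes -> {ratio:.0f}:1")

# With only four slots populated, the same arithmetic gives 1:1 (no oversubscription).
print(f"four drives -> {oversubscription(4, LANES_PER_SLOT, HOST_LANES):.0f}:1")
```

In other words, the switch only becomes a shared bottleneck once more than four drives are busy at the same time.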

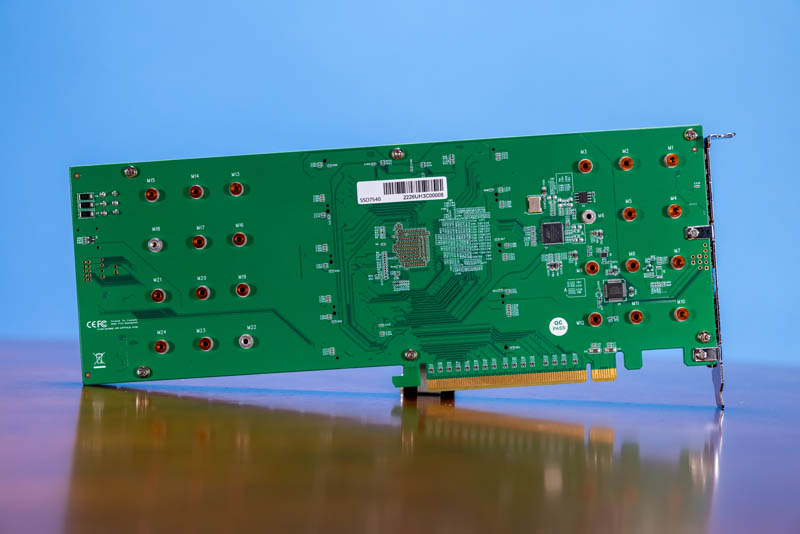

Compared to the front of the SSD7540, the rear has almost nothing going on. Most of what you see on the rear of the card is part of the M.2 mounting points.

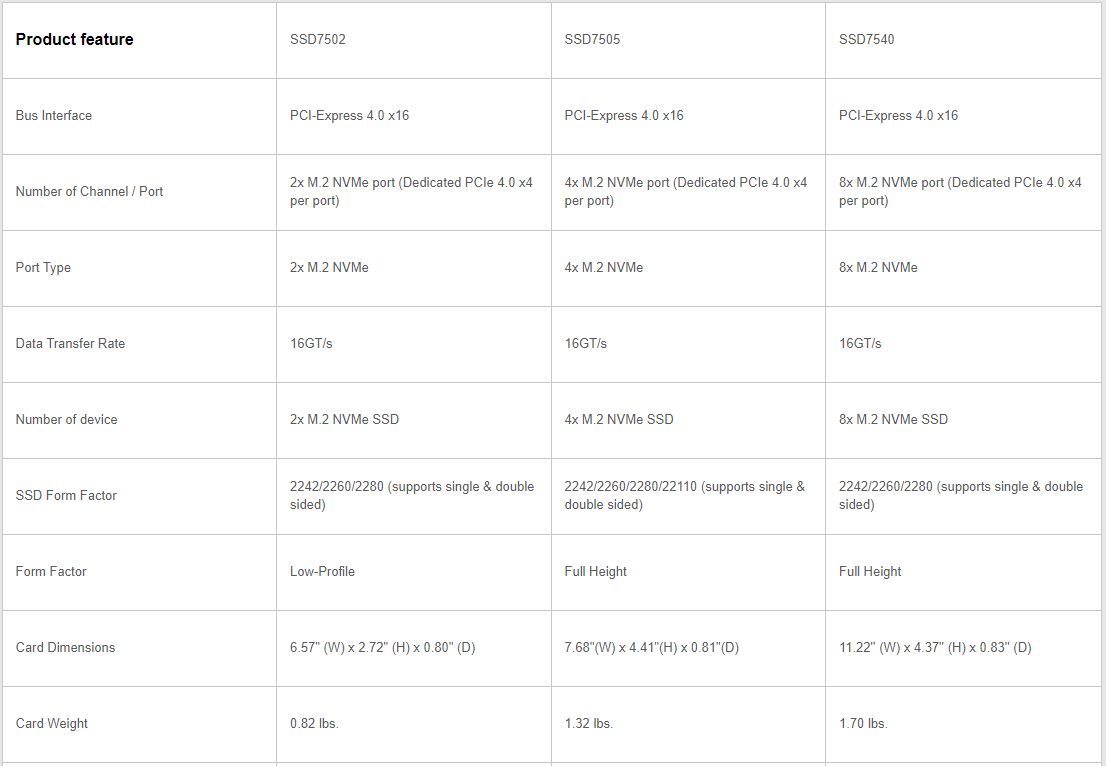

HighPoint SSD7500 Series specs

The SSD7540 I have today is part of the SSD7500 series cards, available in 2, 4, or 8 port capacities.

For the most part, other than the port count and physical size, these cards are very similar. The SSD7505 (4-port card) supports M.2-22110 size SSDs, while the other two top out at M.2-2280.

In addition, the SSD7540 we have today is the only dual-fan model, as well as the only model that can draw power from a 6-pin power header. That power header is optional depending on the SSDs you use; my 8x Rocket 4 Plus drives were happy to operate without the 6-pin power header connected, but it is possible that more power-hungry drives could necessitate the use of external power.

Driver support exists for Windows, Linux, and Mac, though the ability to boot to this card varies depending on OS. Notably absent from that list – at least to me – is VMware ESXi support. For that I will be reviewing the HighPoint SSD6204 in the future, along with some other non-HighPoint products.

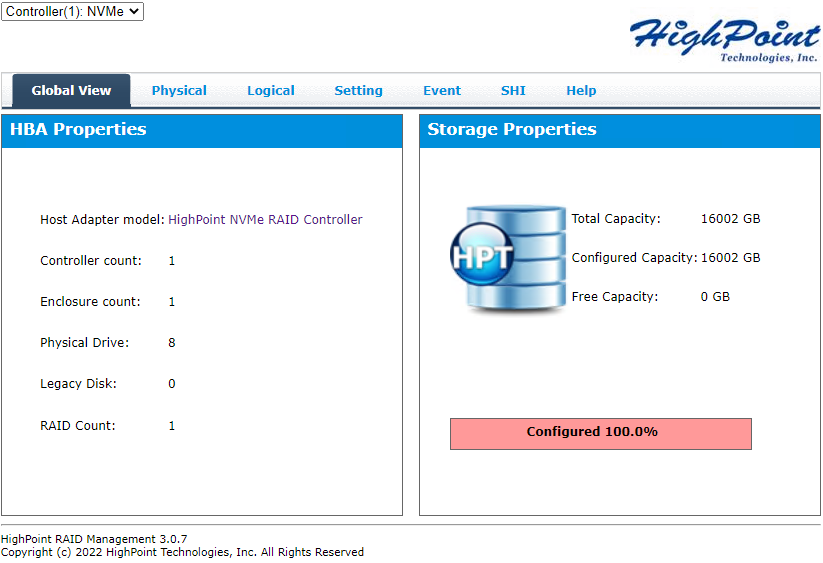

SSD7540 Management

On Windows, the management of the SSD7540 is handled through HighPoint’s RAID Management GUI.

From this GUI, RAID arrays can be created and monitored. This is an interface that I have seen many times over the years, and it seems almost unchanged from my time with a HighPoint RocketRAID 2720SGL that I owned many years ago.

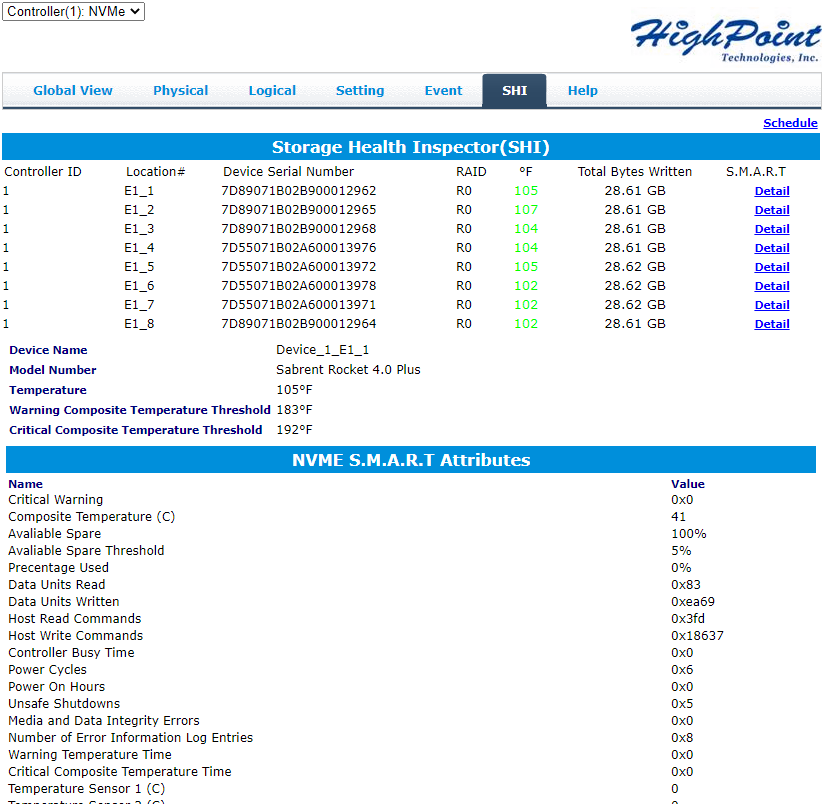

One important part of this interface is the Storage Health Inspector (SHI) tab. Individual drive SMART reporting is not passed through to the operating system, so the SHI tab on the RAID interface is where that data can be accessed.

The RAID management utility is also capable of sending alerts in the case of a failure or pending failure detected by SMART. There is also a physical beeper on this card, which I always appreciate.

Speaking of health, that big heatsink did a good job keeping my army of Rocket 4 Plus drives nice and cool during my testing; I never saw temperatures hit 60°C.

Test System Configuration

My basic benchmarks were run using my standard SSD test bench.

- Motherboard: ASUS PRIME X570-P

- CPU: AMD Ryzen 9 5900X (12C/24T)

- RAM: 2x 16GB DDR4-3200 UDIMMs

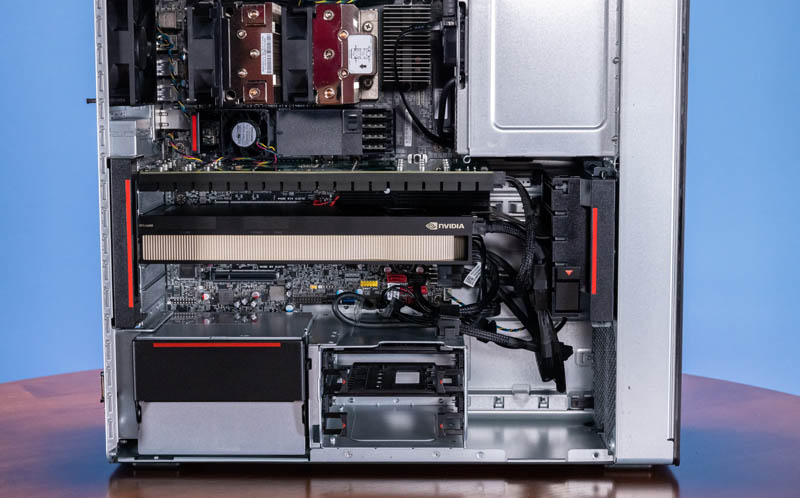

In addition to my test bench, Patrick tested the SSD7540 in a Supermicro AS-5014A-TT as well as a Lenovo ThinkStation P620 system, which are more the target market for an accelerator like this.

Both systems were also configured with professional NVIDIA GPUs like the NVIDIA RTX A4500 and RTX A6000.

SSD7540 Performance Testing

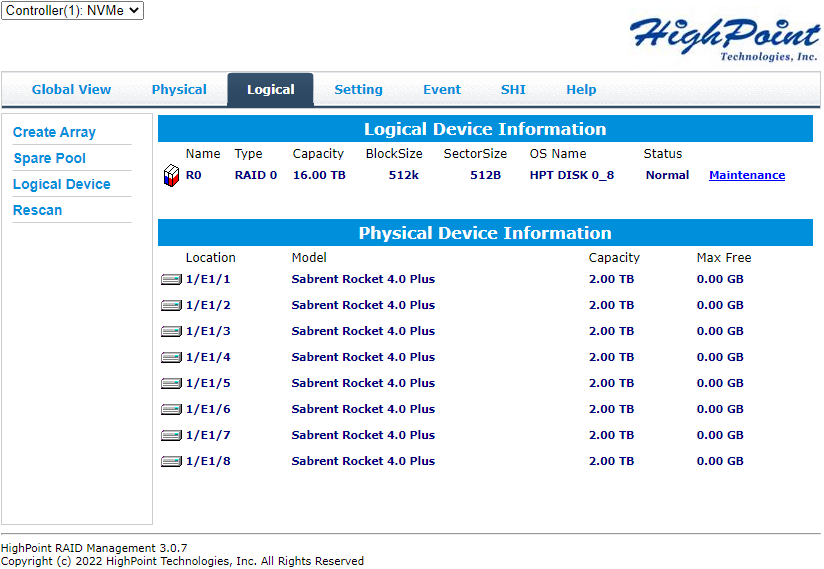

The HighPoint SSD7540 was equipped with 8x Sabrent Rocket 4 Plus 2TB SSDs and put through a small set of basic tests in both RAID 0 and RAID 10. The point was not to comprehensively catalog the performance of the SSD7540. As you will see shortly, it generates some big numbers that you can rely on. The performance you encounter will depend on which drives you choose to populate the card with, along with the RAID level you choose. I simply wanted to give some kind of a general idea as to the performance profile you might encounter.

RAID 0 Testing

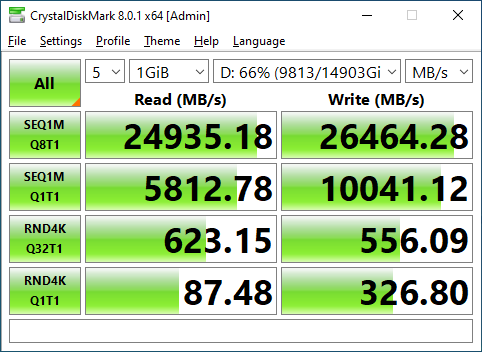

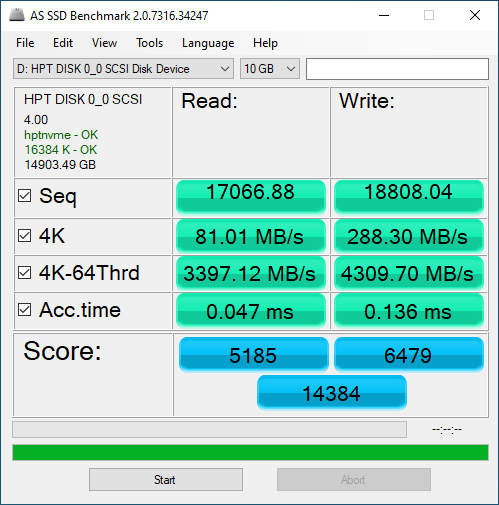

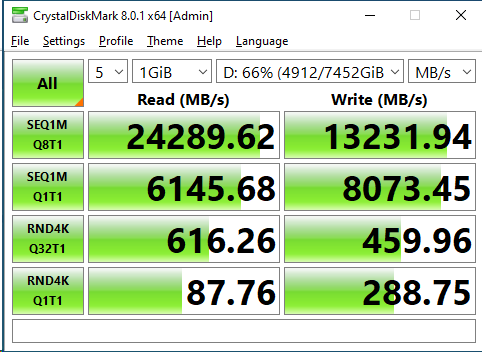

CrystalDiskMark is often used to highlight the sequential throughput potential of a drive or accelerator card, which is the purpose it is serving here.

Following the standard I set for my single-drive testing, I tested at both the 1GB and 8GB test sizes. If you came to this review just to look at big numbers, here you are. Nearly 25000 MB/s sequential read and north of 26000 MB/s sequential write is just absurd. Even more absurd is the fact we are limited by the PCIe 4.0 x16 slot interface; in theory, the combined performance of our 8x Rocket 4 Plus drives could be above 55000 MB/s.
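To put the slot limit in numbers, here is a rough sketch of the raw PCIe 4.0 x16 ceiling. It assumes the standard 16 GT/s per-lane signalling rate and 128b/130b line encoding, and it ignores packet header and flow-control overhead, so real-world throughput will land below this figure. The 55000 MB/s and 25000 MB/s values are the ones quoted in this review.

```python
GT_PER_S = 16.0        # PCIe 4.0 signalling rate per lane, in gigatransfers per second
ENCODING = 128 / 130   # 128b/130b line encoding (PCIe 3.0 and later)
HOST_LANES = 16


def link_ceiling_mb_s(gt_per_s: float, lanes: int, encoding: float = ENCODING) -> float:
    """Raw one-direction link bandwidth in MB/s, before protocol overhead."""
    bytes_per_s_per_lane = gt_per_s * 1e9 * encoding / 8  # one bit per transfer per lane
    return bytes_per_s_per_lane * lanes / 1e6


ceiling = link_ceiling_mb_s(GT_PER_S, HOST_LANES)
combined_drives = 55000  # MB/s, the review's lower bound for eight drives together
measured_read = 25000    # MB/s, approximate RAID 0 sequential read from this review

bottleneck = min(ceiling, combined_drives)
print(f"raw x16 ceiling : {ceiling:,.0f} MB/s")
print(f"bottleneck      : {bottleneck:,.0f} MB/s (the link, not the drives)")
print(f"measured / raw  : {measured_read / ceiling:.0%}")
```

The measured result sitting at roughly four fifths of the raw ceiling is consistent with the slot, rather than the drives, being the limit.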

One interesting bit to look at is the Q1T1 sequential performance, which barely improves over a single drive; one Rocket 4 Plus 2TB clocks in around 4200 MB/s read and 5900 MB/s write in Q1T1. You really need a highly threaded and highly parallel workload to justify something like the SSD7540 in the first place. 4K random read and write numbers in CrystalDiskMark are also not improved by a solution like this.

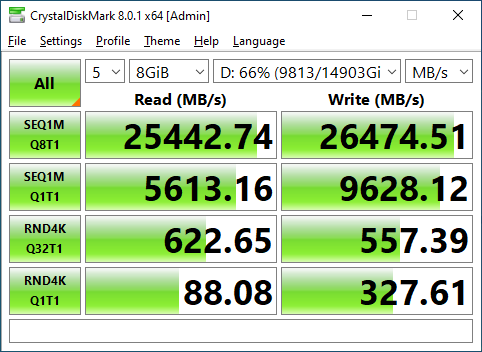

I did not expect this card to care about the larger test set, and as expected it performed very similarly at 8GB.

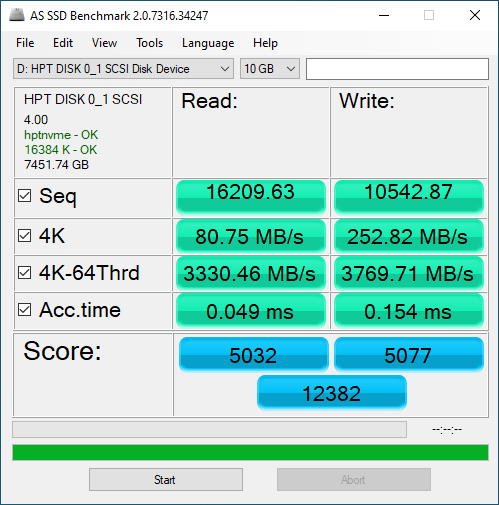

I only ran the one AS SSD test, the larger 10GB set. Once again, sequential performance is frankly amazing, which is what you would expect. 4K random performance is improved over a single Rocket 4 Plus, but nowhere near to the degree that sequential performance is.

RAID 10 Testing

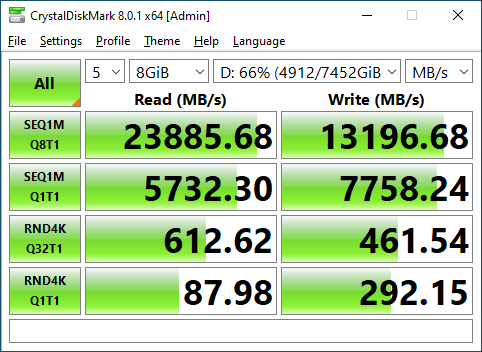

I repeated my test set, this time with the 8x Rocket 4 Plus drives in a RAID 10 array.

As expected, even in RAID 10 the sequential read performance is largely unchanged compared to RAID 0. I am unsure if this is because the RAID array is smart enough to read from both sides of the RAID 1 array, or simply because even if you cut the potential combined read performance of our eight drives in half, there is still enough performance on the table to max out a PCIe Gen4 x16 link.

Write performance, however, was a surprise to me. I conferred with HighPoint and my results are as they expect; write performance in RAID 10 will be half that of RAID 0. In my head, though, I make a distinction between “half the RAID 0 performance” and “half the combined performance of the 8 drives”, and those are different numbers. Half the RAID 0 performance is exactly what we have received; RAID 0 writes are around 26000 MB/s and RAID 10 writes are 13000 MB/s. However, the combined write performance of half of our drives should still be able to deliver 26000 MB/s. I suspect the limitation here is a side effect of the SSD7540 being a ‘software’ RAID adapter. The end result is that RAID 10 write performance with 8 drives installed is more like the combined performance of two drives than four.
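One possible way to model that gap, offered as a sketch rather than a confirmed explanation: if the mirroring is done by the host driver, every block has to cross the x16 link twice, once per mirror copy, which halves the unique data the link can carry. The function name and the `host_side_mirroring` flag are hypothetical; the 26000 MB/s figures come from this review, and the per-drive number is simply that figure divided by four drives.

```python
def raid10_write_mb_s(drives: int, per_drive_write: float,
                      link_limit: float, host_side_mirroring: bool) -> float:
    """Estimate unique-data write throughput of a RAID 10 array behind one host link."""
    mirrored_pairs = drives // 2
    # Each mirrored pair can absorb one drive's worth of unique data.
    drive_bound = mirrored_pairs * per_drive_write
    # With host-side (software) mirroring, each block is sent over the link twice.
    copies_over_link = 2 if host_side_mirroring else 1
    link_bound = link_limit / copies_over_link
    return min(drive_bound, link_bound)


LINK_LIMIT = 26000             # MB/s, measured RAID 0 write in this review
PER_DRIVE_WRITE = 26000 / 4    # MB/s, implied by "half of our drives" delivering 26000 MB/s

software = raid10_write_mb_s(8, PER_DRIVE_WRITE, LINK_LIMIT, host_side_mirroring=True)
offloaded = raid10_write_mb_s(8, PER_DRIVE_WRITE, LINK_LIMIT, host_side_mirroring=False)
print(f"mirroring on the host     : {software:,.0f} MB/s")
print(f"mirroring behind the link : {offloaded:,.0f} MB/s")
```

Under these assumptions the host-side case lands on the 13000 MB/s that was measured, while a design that duplicated writes on the card itself would be bounded by the drives instead.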

Just like with RAID 0, the larger CDM test performs just as well as the smaller one.

Lastly, I have AS SSD, which like CrystalDiskMark showed a drop in write performance in RAID 10 compared to RAID 0.

Final Words

The HighPoint SSD7540 is a very interesting card. Over the course of examining it, I came to consider it more in the context of an accelerator card and less in the context of a ‘normal’ mass storage device or SSD. I do not know if HighPoint would agree with that line of thought, but it is what I consistently thought of when considering this card.

At $1100, I see some plausible use cases for something like this. If you fully populate this card with moderate to large SSDs, the $1100 may very well get lost in the overall price of the device. As an example, the SSD7540 plus the cost of the Rocket 4 Plus drives would come to somewhere in the neighborhood of $3500 for 16TB of (RAID 0) storage. That price point is high, but very large single SSDs do exist, such as the 15.36TB Seagate Nytro 3031, and one of those is in the neighborhood of $4800. Comparing a single Nytro 3031 15.36TB drive with the SSD7540 monstrosity is an exercise in apples to oranges to say the least, but the SSD7540 is both less expensive and higher performance. This is one of the reasons that I think of the SSD7540 more like an accelerator; if I had some need for a large scratch volume, such as temporary storage for data warehouse processing, then the SSD7540 may very well scratch that itch.
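For a quick apples-to-oranges sanity check, the cost per terabyte can be worked out from the approximate prices above. This is only a sketch using the rounded figures quoted in this review; actual street prices will differ.

```python
def cost_per_tb(total_cost_usd: float, capacity_tb: float) -> float:
    """Cost in US dollars per terabyte of raw capacity."""
    return total_cost_usd / capacity_tb


# (approximate total cost in USD, capacity in TB), both as quoted in the review
options = {
    "SSD7540 + 8x Rocket 4 Plus 2TB (RAID 0)": (3500, 16.0),
    "Seagate Nytro 3031 15.36TB": (4800, 15.36),
}

for name, (cost, capacity) in options.items():
    print(f"{name}: ${cost_per_tb(cost, capacity):,.2f} per TB")
```

On those numbers the populated SSD7540 works out to roughly $219 per TB against roughly $313 per TB for the single large drive.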

Perhaps the biggest market for this card currently is video editors and other content creators. Many of those folks have largely sequential workloads, and the performance of PCIe Gen4 NVMe SSDs is fine. What they need is a lot of local storage, so being able to install two of these cards with 8x 8TB NVMe SSDs each, as an example, gives 128TB of storage in only two PCIe card slots.

I think the biggest strength of the SSD7540 is the “what if?” type possibilities that it enables users to consider. In addition to allowing some pretty crazy sequential performance numbers, the SSD7540 also allows you to build some very large storage devices using off-the-shelf hardware. Nobody serious about the long-term protection of their data should ever store it on an 8-drive RAID 0 array, but the read and write performance of the RAID 10 array was nothing to sniff at either. Certain usage scenarios, like scratch volumes, may not be bothered by the inherent data insecurity of so many drives in RAID 0. For professionals copying network storage media locally to work with at higher speeds, this solution is fine.

At the end of the day, the HighPoint SSD7540 is simply neat, and I enjoy the possibilities that it enables me to ponder. At STH, we are going to have a few other multi-drive solutions coming that we are excited about.

What does lspci show under Linux with this card?

VERY niche product. Good for datacenters and not much else.

I’ve checked the price – €1.200-ish for what is essentially a PCIe v4 switch chip on a board.

Mere mortals would be much better off with some cheap EPYC board and simple passive 4x NVMe card for €100-ish or less.

If it had some nice functions, like RAID5/6, it might have been a (somewhat) different story.

Interesting would be JBOD performance with ZFS.

Also IF you can mix & match NVMes e.g. 4×2 + 4×1 TB – for future upgrade/expansion.

Thank you, Will, well done testing!

“Individual drive SMART reporting is not passed through to the operating system, and so the SHI tab on the RAID interface is where that data can be accessed.”

Why is it not passed through? PCIe switches are normally largely transparent to the OS.

“Driver support exists for Windows, Linux, and Mac, though the ability to boot to this card varies depending on OS. Notably absent from that list – at least to me – is VMware ESXi support.”

Wait, what? So for an absurd €1200 you get something akin to pre 2000’s hardware raid solutions, where you need a deficient proprietary driver?! This is just a pcb with a $350 pcie-switch!!

Thanks but for that money i’d rather get an Epyc/Threadripper and 4 passive PCie x16 to 4xM2 Cards for $20 each and use bifurcation. Or if bifurcation is not an option, simply use two PLX8747 cards with 4 PCIe 3.0 SSDs each, should result in the same performance.

TL;DR use only if you need more than one, or have limited PCIe x16 slots…

don’t even get me started on pcie-switch pricing… with the Z690 intel essentially offers a 32-lane 16-port PCIe 4.0 (well half the lanes are pcie 3.0, but adds a ton of other high-speed I/O) switch for $50. with the H670 a 28-lane version for just $33.

jay,

You can have two separate 4 drive arrays no problem, or 4 separate 2 drive arrays. Each array can be comprised of different model disks. I did not try arrays with mixed model drives; I would imagine that they might work, but generally be a bad idea.

Gertrude & bernstein,

While I agree with you that you can use a cheap 4x M.2 card and bifurcation, not all boards out there support bifurcation. And many that do only support bifurcation on the primary PCIe slot, which might be occupied by a GPU. And this isn’t a 4-drive M.2 card, it’s an 8-drive M.2 card. Is it niche? Yes. Is it a useless product? Not at all.

We’ll be reviewing one (or more) of the bifurcation cards later on, so they will have their day. Also coming later is a true hardware M.2 card that does support RAID 5/6.

Does it support just functioning as a transparent PCIe switch and directly exposing the NVMe devices; or is it always running the abstraction layer; like some of the old SAS HBAs that couldn’t expose individual disks, only whatever RAID volumes were configured?

@Will Taillac wrote:

>While I agree with you that you can use a 4x M.2 card that is cheap and use bifurcation, but not all boards out there support bifurcation.

So what? This thing is literally a PCIe switch chip on a piece of PCB with 8 M.2 connectors, and it costs a friggin €1.200.

For that kind of money one can easily have a whole EPYC board AND a lower-end CPU.

Something like:

This Supermicro:

https://geizhals.de/supermicro-h12ssl-i-bulk-mbd-h12ssl-i-b-a2426251.html?hloc=at&hloc=de

this Epyc:

https://geizhals.de/amd-epyc-7252-100-000000080-a2114759.html?hloc=at&hloc=de

And there would still be left plenty of difference for some RAM AND 4x M.2 cards.

It seems Highpoint’s software quality is about on par with what it was back in the SATA/SAS days. I had a lot of problems with overall jankiness with their cards, including one of their newer PCIe NVME RAID cards.

How about some iops test results? This seems like a beast for caching larger spindle arrays or even as VDI storage where significant random IO occurs.

damn son

Question about this. The RAID config manager is a GUI, but they say the device allows for booting Windows. So you can configure the array(s) outside of Windows? I am guessing there is a BIOS setup option for the card?

any sort of raid is kind of bad other than real fake raid which is portable and always works – no hw drivers needed – you want your raid arrays when you are running 100g or 200g (bonded/bridged) – a simple 4 nvme card that can be bifurcated into 4×4 is more than enough to saturate 200g – use 2 of these cheap cards to run 8-16tb nvme and then have some spinning rust arrays – use nvme to cache the spinning arrays. the new platforms will support gpu/nvme and 100g – why not have a redundant netfs that is very quick – nvme scales well so smb value tier can scale out by adding a node – this is fast alone but with right caching tier and clones it can scale out well also #raid10 #mdadm #portability #gluster on zfs

Just as a fun one, we actually have been working on other multi-drive solutions. See https://twitter.com/Patrick1Kennedy/status/1572560635216695300

For some context on this one, William, who used to do SSD reviews at STH and who helped us with the Sabrent drives, actually told me that they have trouble keeping the 8TB drives in stock. We discussed doing 8TB drives on this because that is 8x 8TB per card, but it was a challenge just getting drives. Usually, even in TR Pro systems, there are only 1-2 PCIe slots available after other cards are installed. These HighPoint cards are being used because they offer 8x 8TB per card and 64TB of capacity per PCIe slot.

On the $1200/ card. I totally agree this is pricey. That is why we are going to have more options we show off soon. At the same time, by the time you have 8x 8TB in TR Pro systems, people will pay the $1200 to add 32TB per PCIe slot to a system they could not add otherwise.

That is just for some extra context on this.

Seth,

The RAID array can be configured from a UEFI Shell command line in a pre-boot state. HPT provides a utility and documentation on how to do this on their product page.

It is very interesting and I appreciate the review. I’m more interested in bifurcation and looking forward to more such reviews, and also their compatibility with ESXi versions 7 and above. As you guys are aware, VMware compatibility is very limited when it comes to M.2 PCIe SSDs. I think they only support a couple of enterprise models and none of the consumer models, because those SSD controllers’ firmware lacks a required feature for v7+. I think some consumer Sabrent M.2 PCIe SSDs actually have the required firmware features even if they are not on the VMware compatibility list.

@ Will Taillac

Thank you for the response

Much appreciated.

Please please please run some zfs tests instead of using the onboard raid.

Loving the content recently

Stuart,

This card isn’t a M.2 HBA; it does not expose the underlying M.2s directly, they are hidden behind the RAID abstraction. I would wager this is not a good card for use with ZFS. That is perhaps a better case for the bifurcation cards that we will take a look at here soon, so I will try to get some ZFS tests in there.

Thank you for doing this. Count me in the camp that is interested in a hardware NVMe RAID 5 solution for Windows. By most accounts Windows software RAID is still to be avoided, and Intel VROC is seemingly to be orphaned, leaving us stuck with the old LSI/Broadcom approaches or their modern equivalent for direct attached storage.

I have an older Highpoint 3.0×16 RAID card with 4x 2TB HP EX950s in it acting as the ingest tier on my home storage server and I’ve been pretty happy with it. BTW, I have a 10GBase-T home network with a 40Gbit connection between my server and the network switch, so the Raid card does get properly utilized.

When you’re surprised, that’s a good opportunity to investigate instead of suspecting and judging.

25GB/s is quite simply the typical net bandwidth from 16 lanes of PCIe 4.0: how could anything go higher than the bottleneck? And when every write has to go to two devices for mirror mode, half the write performance is exactly what you should expect.

There is zero acceleration in a PCIe switch, its major point is zero slowdown and there is rarely any acceleration in storage.

Some Control Data mainframes used to have hardware ISAM disk drives and these days WD is starting to include pattern/string searches into NVMe(CXL) devices: don’t know if that has left the labs yet.

If you consider a buffering RAID controller an accelerator, you’ll be disappointed by NVMe which is all about getting rid of the SCSI/SATA abstractions and letting the CPU get closer to the hardware. So software RAID is all you’ll ever get, even with a HBA, which will turn into a switch for NVMe and a SAS controller for SAS/SATA devices.

Building a “smart” NVMe RAID controller would be rather hard (and somewhat pointless), because designing for >100GB/s bandwidth is quite involved: you’d have to resort to GDDR memory or lots of DRAM channels, only to face PCIe bottlenecks afterwards.

If you’re concerned about CPU consumption, a lot of the checksum logic that used to be the RAID ASICs have become x86 ISA extensions and these days you get all those E-cores, which should be quite sufficient to act as what would be channel processors on a mainframe.

The real benefit that I see in using a switch based vs. a bifurcated M.2 board is that you can recycle older M.2 drives in newer hardware. If you happen to have 8 PCIe 3.0 M.2 drives left over, this board should allow you to get the full aggregate bandwidth and that’s actually a far more meaningful benchmark I’d like you to try than using PCIe 4.0 drives, where 2:1 oversubscription is the only advantage vs. bifurcation you can hope for.

However, a quad M.2 bifurcation board using PCIe 3.0 M.2 drives is very likely not going to deliver more than 13GB/s even on a PCIe 5.0 x16 slot, while a PCIe 5.0 switch based 8x M.2 board should deliver 25GB/s from 8 PCIe 3.0 NVMe drives on a PCIe x8 slot operated in bifurcated mode next to a GPU in a desktop mainboard.

In other words: a PCIe switch should be able to trade lane counts for PCIe versions and I’d love you to verify that using the Highpoint adapter with PCIe 3.0 M.2 drives like the Samsung 970 Evo Plus.

Quoting @Will: “This card isn’t a M.2 HBA; it does not expose the underlying M.2s directly”

Are you sure about that? How is that even possible considering it seems it’s just a PCIe switch? And why would HighPoint make an effort to decrease the value of their product so much?!

Have you tried it in Linux? What does lspci show?

In response to the various comments:

I’d be very surprised if it did anything but expose the M.2 drives underneath: that’s exactly what a PCIe switch chip will do. And there is no extra hardware on top to hide it.

Unfortunately that switch chip will need to be configured and if it’s on an add-on card, there is little chance a desktop BIOS will do that for you. I guess that’s where the UEFI driver comes in, which should configure the switch and provide boot support, but still can’t hide the PCIe bus underneath AFAIK. Have a go with HWinfo on Windows or investigate via lspci/hwinfo on Linux.

Bifurcation support should be in any hardware since Sandy Bridge or even longer. But again it needs to be configured so BIOS support is the main issue.

I’m always ready to assume the worst from Apple, but anything else x86 I tried with cheap €50 quad M.2 adapters worked just fine.

Just one more remark: Most cheap desktop mainboards won’t wire a full set of lanes on any slot that is not the “GPU” slot. So even if your 2nd and 3rd slots may appear to be x16, they may only have 8 or 4 lanes connected respectively. In other words, they are already bifurcated and placing cards there activates it, even without an explicit BIOS option.

Here the HighPoint cards can still make all M.2 drives accessible at the cost of bandwidth, but a bifurcation board will only let you see M.2 drives that have lanes wired to them.

Missed a trick by the card itself not supporting PCIe5.

Since this is a soft RAID, the driver no doubt relies on access to SSE and AVX to perform the calculations. What was the CPU impact when doing this type of work?

To find the upper limit on random requests, run more than one session at the same time. AS SSD and CrystalDiskMark probably can’t generate enough threads to exercise the PCIe interface.

As far as use in an enterprise setting, probably not. Dell used to OEM these soft RAID adapters to meet corporate buying checklists and they (the large corporates) found out right away that they do not scale, and throw I/O errors when the CPU is under a load due to a general lack of a cache and because that driver can’t get enough time for its calculations.

Sad QD1 performance – a single Intel U.2 P4510 performs better than that as far as I remember.

Hello, did you check what is the compatibility on boards that do not have 16x slot electrically? It would be very handy if the card worked in 8x and more important, in 4x slot, providing the option to install additional 8 drives in case performance is not your top priority.

What’s the power usage of this card, btw? I can’t find it anywhere, nor did anybody test this: at idle, under load, and relative to how many SSDs are connected, as well as fan speed and the fans’ power draw, etc.

A couple of notes:

My Threadripper Pro system can “only” accommodate 10 NVME disks using bifurcation and AICs, so the two 8-disk cards I bought are almost half useless. On the other hand, one could presumably fill all available x16 slots with cards like this, so it definitely isn’t useless (but rather niche.)

Second, a reviewer on Amazon stated the Raid 1+0 on this card is actually 0+1. I wonder if that is where the performance drop comes from?

Did anyone check whether VMware ESXi can see it as storage, not as a boot RAID?

Will, I am not an IT guy but quick question. We have a SQL server and need to run big data set queries. Would this make it a lot faster or no better than individual m.2 drives due to how SQL server works?