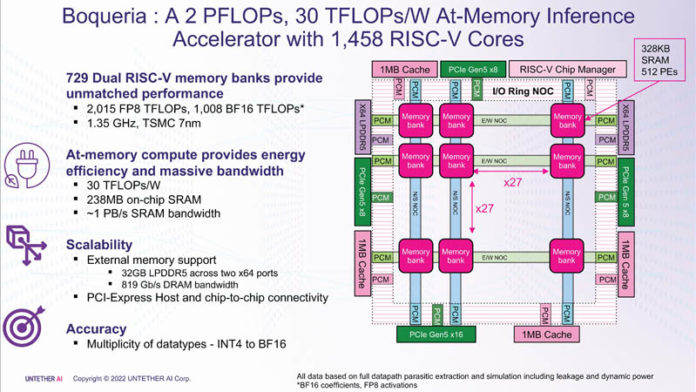

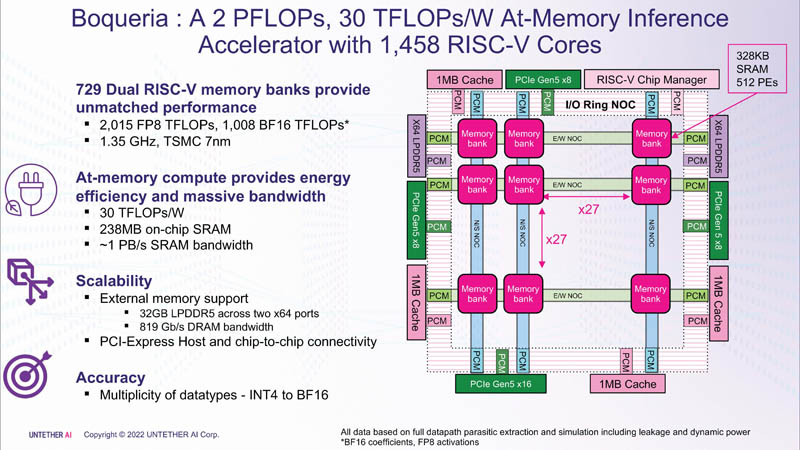

At Hot Chips 34, Untether.AI showed off its newest AI accelerator. The Untether.AI Boqueria is a 1458 RISC-V core AI accelerator that tries to match compute with memory.

Note: We are doing this piece live at HC34 during the presentation so please excuse typos.

Untether.AI Boqueria 1458 RISC-V Core AI Accelerator at Hot Chips 34

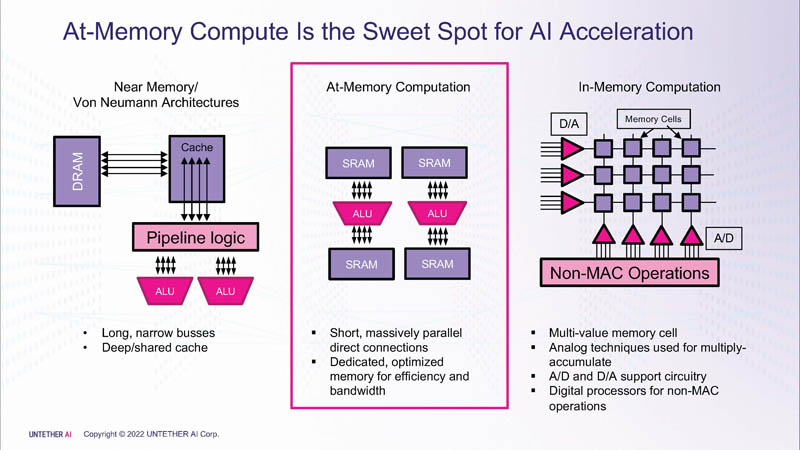

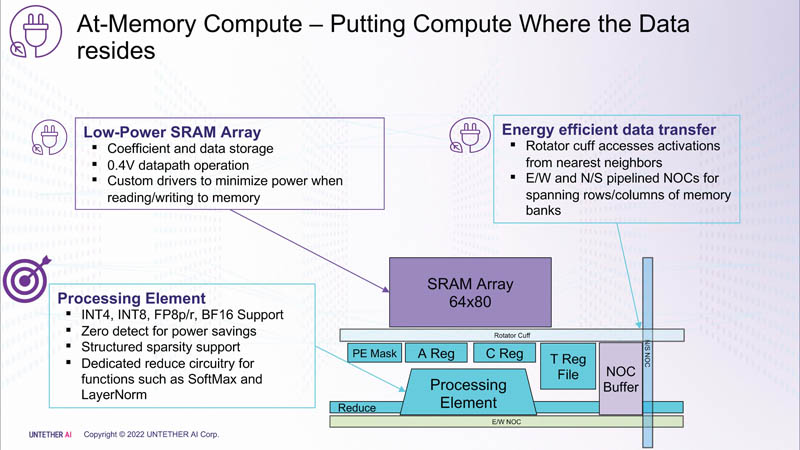

A recurring theme for Untether.AI is that data movement is expensive in terms of both performance and power consumption. Part of the goal is to bring compute closer to memory to minimize that movement.

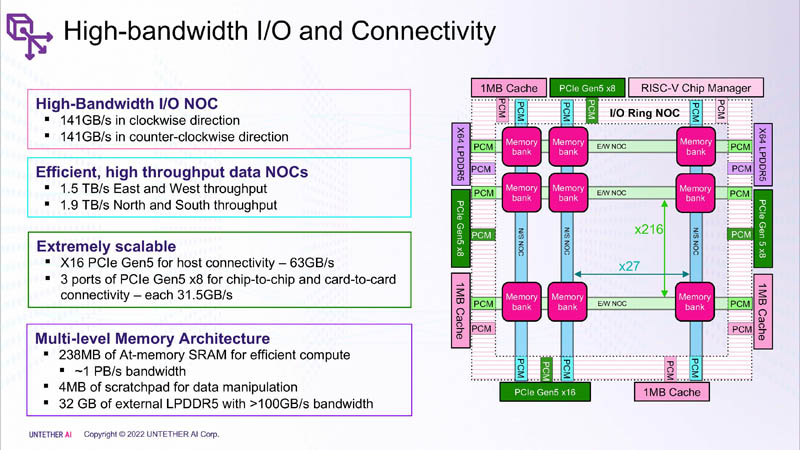

Boqueria is a 2PF FP8 processor built on TSMC 7nm. Its 238MB of on-chip SRAM gives the chip around 1PB/s of SRAM bandwidth, and it can also access external memory. FP8 matters here because it is a key part of Boqueria’s architecture.

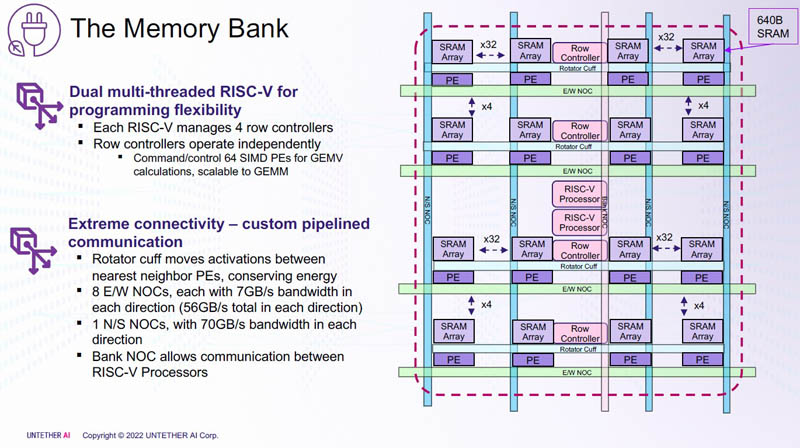

Each Memory Bank (the memory/compute clusters on the NOC) has two multi-threaded RISC-V cores. All of these Memory Banks are connected via the NOC.
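As a rough sanity check, the headline figures quoted so far can be combined with some simple arithmetic. This is a back-of-the-envelope sketch, not something shown in the presentation: the even split of SRAM across Memory Banks and the decimal reading of MB, PB, and PF are assumptions, and the function names are made up for illustration.

```python
# Figures quoted in the coverage above.
RISCV_CORES = 1458
CORES_PER_MEMORY_BANK = 2
SRAM_BYTES = 238e6        # 238MB, assuming decimal megabytes
SRAM_BANDWIDTH = 1e15     # ~1PB/s, assuming decimal petabytes
FP8_OPS_PER_SEC = 2e15    # 2PF of FP8


def memory_banks(cores=RISCV_CORES, per_bank=CORES_PER_MEMORY_BANK):
    """Number of Memory Banks implied by the core count."""
    banks, remainder = divmod(cores, per_bank)
    if remainder:
        raise ValueError("core count is not a multiple of cores per bank")
    return banks


def sram_per_bank_kb(total_bytes=SRAM_BYTES):
    """SRAM per Memory Bank in kB, ASSUMING an even split across banks."""
    return total_bytes / memory_banks() / 1e3


def ops_per_sram_byte(ops=FP8_OPS_PER_SEC, bandwidth=SRAM_BANDWIDTH):
    """Peak FP8 operations available per byte of SRAM bandwidth."""
    return ops / bandwidth


if __name__ == "__main__":
    print("Memory Banks:", memory_banks())
    print("SRAM per bank (kB, assumed even split):", round(sram_per_bank_kb(), 1))
    print("FP8 ops per SRAM byte:", ops_per_sram_byte())
```

With these inputs, 1458 cores at two per Memory Bank works out to 729 Memory Banks, and 2PF against roughly 1PB/s is about two FP8 operations per byte of SRAM bandwidth.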

Here is a diagram of how Boqueria puts SRAM and compute together.

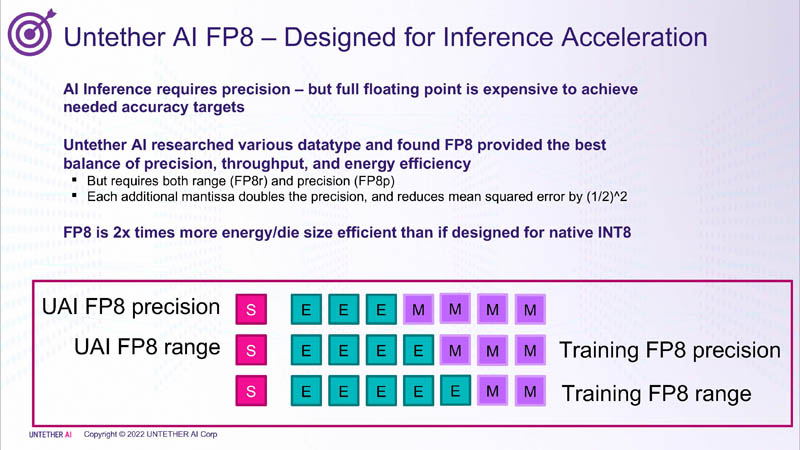

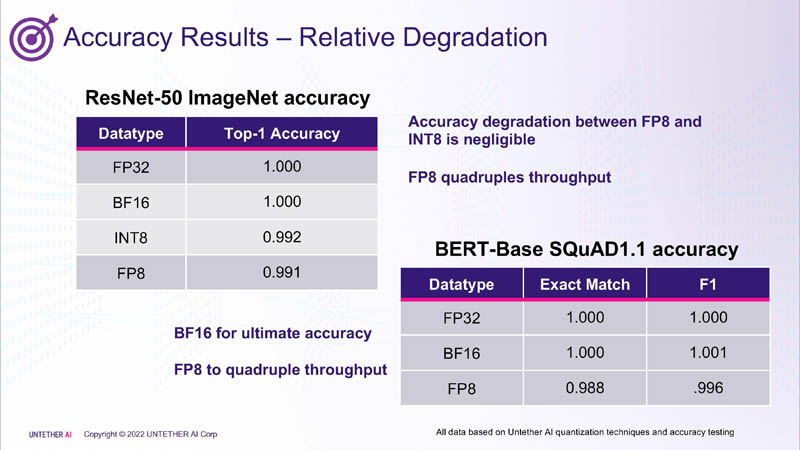

A big insight and design principle for Untether.AI is that FP8 is suitable for inference. The company says FP8 is more efficient to design for than INT8 and has only a small impact on inference accuracy, which is why it built Boqueria around FP8.
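To get a feel for why an 8-bit floating-point format can hold up on values with a wide dynamic range, here is a small illustrative sketch. It is not Untether.AI's FP8 format or its accuracy data: the 4-bit exponent and 3-bit mantissa split, the saturating behavior, the absence of reserved inf/NaN codes, and the function names are all assumptions chosen for the example.

```python
import math


def quantize_minifloat(x, exp_bits=4, man_bits=3):
    """Round x to the nearest value of a simple sign/exponent/mantissa format.

    Assumptions: IEEE-style bias and subnormals, no codes reserved for
    inf/NaN, and saturation at the largest finite magnitude.
    """
    if x == 0.0:
        return 0.0
    bias = 2 ** (exp_bits - 1) - 1
    min_exp = 1 - bias                       # exponent of the smallest normal
    max_exp = (2 ** exp_bits - 1) - bias     # largest exponent (assumed usable)
    sign = -1.0 if x < 0 else 1.0
    mag = abs(x)
    _, e = math.frexp(mag)                   # mag = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, min_exp)                # clamp into the subnormal range
    step = 2.0 ** (exp - man_bits)           # spacing between neighbors here
    rounded = round(mag / step) * step
    max_val = (2 - 2.0 ** -man_bits) * 2.0 ** max_exp
    return sign * min(rounded, max_val)


def quantize_int8(x, scale):
    """Symmetric INT8 quantization with a single scale, then dequantize."""
    q = max(-128, min(127, round(x / scale)))
    return q * scale


if __name__ == "__main__":
    values = [0.01, 0.3, 1.0, 10.0, 100.0]
    scale = max(values) / 127                # one INT8 scale for the whole set
    for v in values:
        print(v, quantize_minifloat(v), quantize_int8(v, scale))
```

In this toy setup, 0.3 rounds to 0.3125 in the 8-bit float, while the single-scale INT8 path rounds 0.01 all the way to zero because the scale is set by the largest value. Real deployments use per-channel scales and calibration, so this only shows the general shape of the trade-off.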

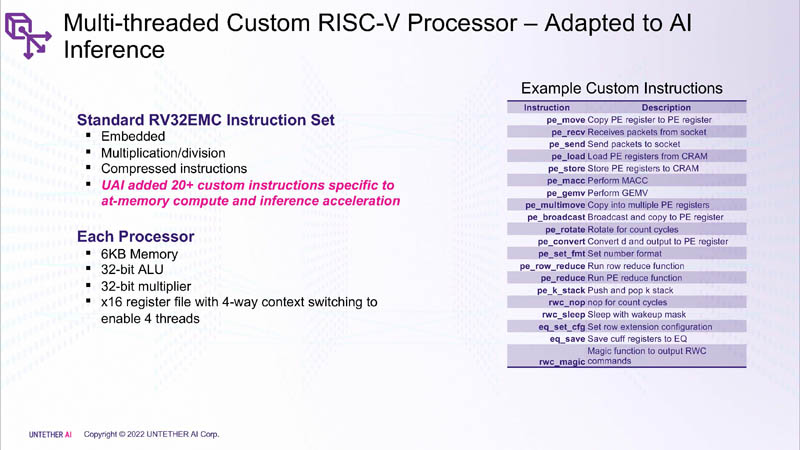

The RISC-V cores implement the RV32EMC instruction set and then add custom instructions on top. That extensibility is part of the power of RISC-V.
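For readers less familiar with RISC-V naming, the ISA string can be unpacked letter by letter. The sketch below is a hypothetical helper, not a tool from Untether.AI or the RISC-V toolchain. It only covers a handful of standard single-letter extensions and says nothing about the custom instructions, which are not encoded in the string.

```python
import re

# Short descriptions of a few standard single-letter RISC-V designators.
STANDARD_LETTERS = {
    "I": "base integer ISA with 32 general-purpose registers",
    "E": "reduced base integer ISA with 16 general-purpose registers",
    "M": "integer multiply and divide",
    "C": "compressed 16-bit instruction encodings",
}


def describe_isa(isa):
    """Split a simple ISA string such as 'RV32EMC' into width and letters."""
    match = re.fullmatch(r"RV(32|64)([A-Z]+)", isa.upper())
    if match is None:
        raise ValueError(f"unrecognized ISA string: {isa!r}")
    letters = list(match.group(2))
    unknown = [letter for letter in letters if letter not in STANDARD_LETTERS]
    if unknown:
        raise ValueError(f"letters not covered by this sketch: {unknown}")
    return {
        "xlen": int(match.group(1)),
        "extensions": [(letter, STANDARD_LETTERS[letter]) for letter in letters],
    }


if __name__ == "__main__":
    info = describe_isa("RV32EMC")
    print("Register width:", info["xlen"])
    for letter, meaning in info["extensions"]:
        print(f"  {letter}: {meaning}")
```

Read this way, RV32EMC is a 32-bit embedded base with 16 registers, plus integer multiply/divide and compressed instructions, which fits a design that packs many small cores next to SRAM.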

Here is more detail about the on-chip NOCs.

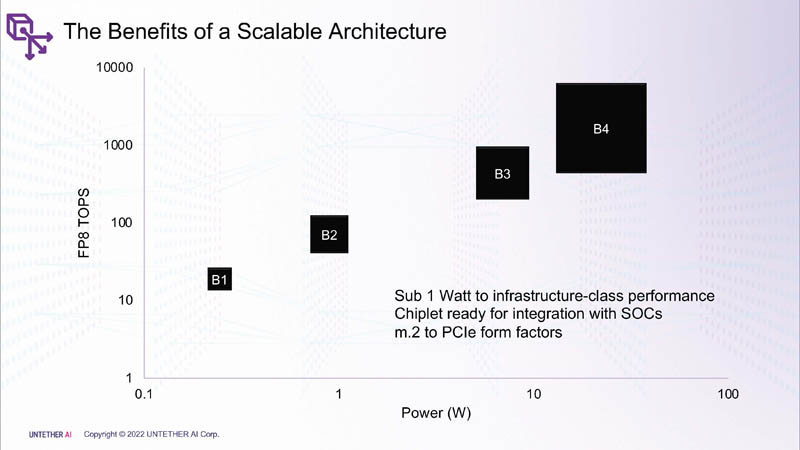

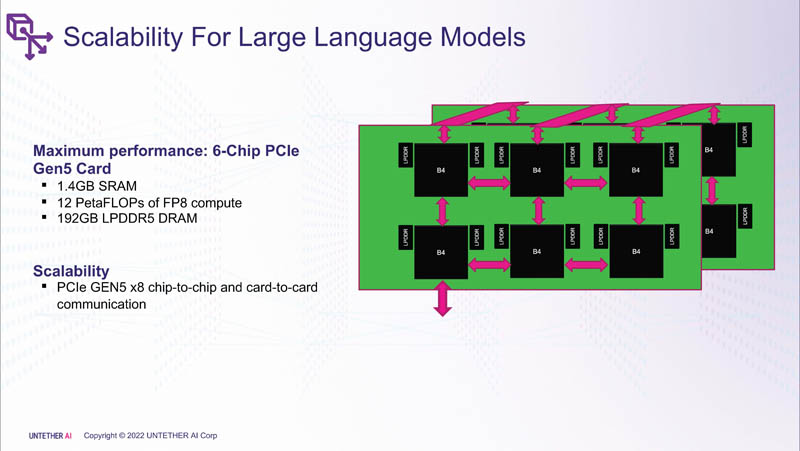

The company says its architecture scales from very low-power to higher-power devices. It is not discussing 500W+ chips; instead, it is targeting M.2-type power envelopes.

The idea is to then aggregate a number of these smaller chips to achieve more performance. Note that this will be a PCIe Gen5 device as well.

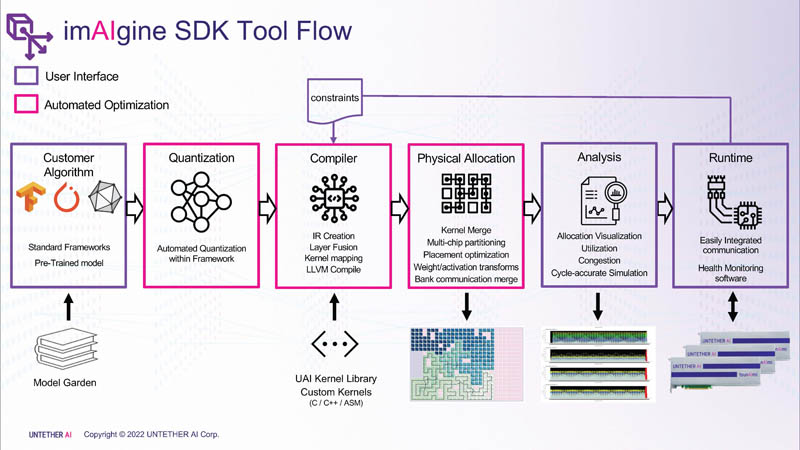

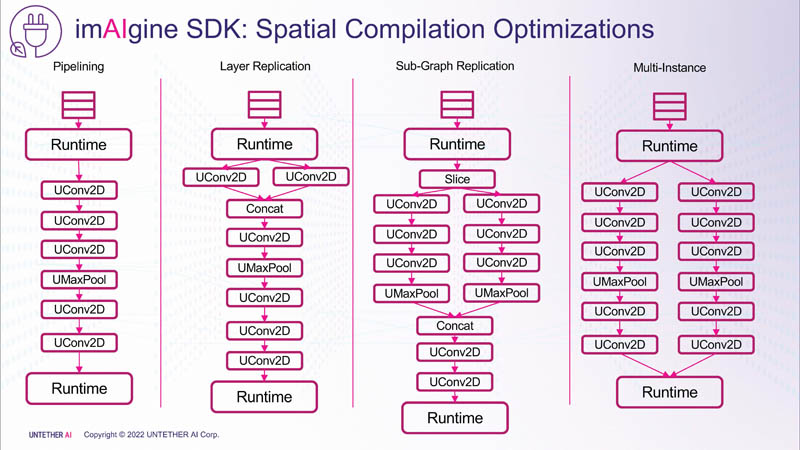

The company’s software is called the imAIgine SDK.

As with most AI accelerators, the compiler needs to be highly optimized for the hardware.

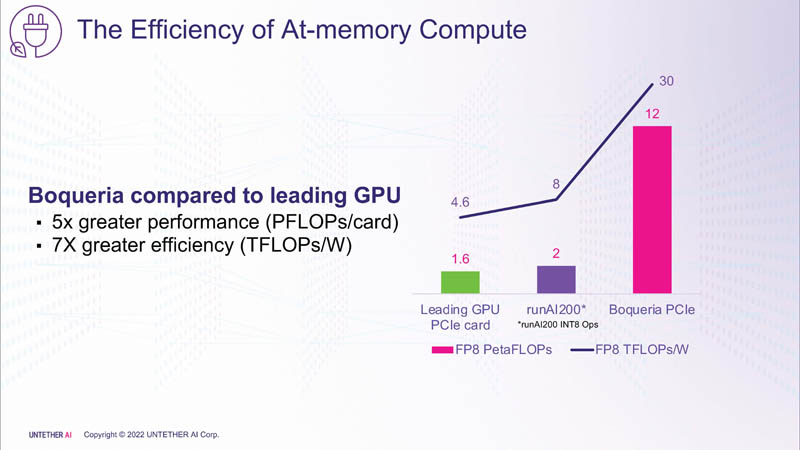

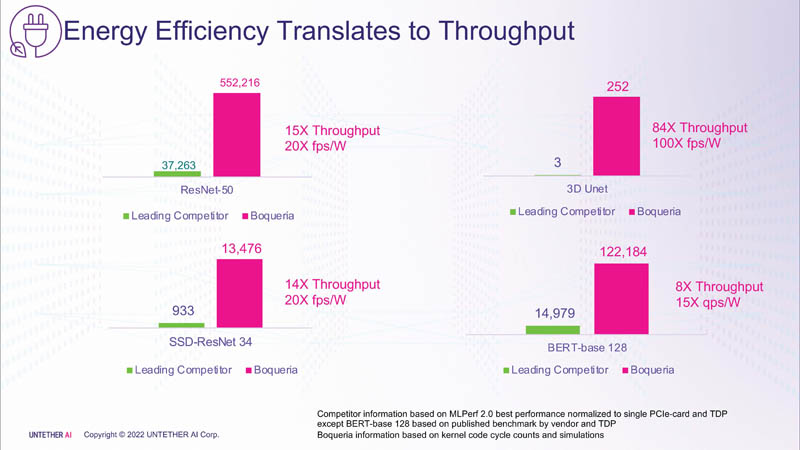

With that, the company says it can have higher performance than a GPU.

Here are the throughput and energy efficiency comparisons:

Of course, one has to remember that the GPU being compared is a more general-purpose accelerator device that is currently commercially available.

Final Words

Every year at Hot Chips we get a number of AI startups. Usually, we do not cover startups that simply try to match what NVIDIA is doing at a lower price. We thought this one was interesting not just because of the inference accelerator angle, but also because it is using RISC-V. These are the types of applications where RISC-V can make inroads on Arm’s market before trying to go into more mainstream markets.