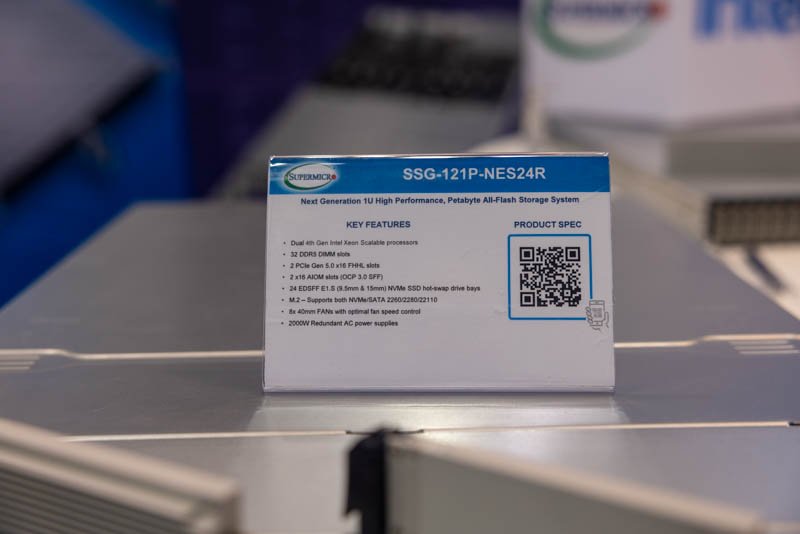

At FMS 2022, we saw the next-generation Supermicro SSG-121P-NES24R. This is a 1U system that takes up to 24x EDSFF NVMe SSDs, making it much denser than current-generation 2U 24x 2.5″ NVMe SSD solutions. Since it is based on the 4th Generation Intel Xeon Scalable (Sapphire Rapids) platform, we could not look inside, but we could see at least one new feature: MCIO.

Supermicro SSG-121P-NES24R

The front of the system should immediately be exciting to storage folks. The SSG-121P-NES24R has 24x EDSFF SSD bays. That means we get PCIe Gen5 storage and 9.5mm or 15mm EDSFF SSD support.

The more SSD-optimized EDSFF form factor fits 24 drives in 1U instead of 2U, which is twice the density in terms of the number of drives. Add the doubling of per-drive bandwidth from moving PCIe Gen4 to Gen5, and it is four times the density in terms of maximum throughput per U compared to the PCIe Gen4 2.5″ front-serviceable solutions. For those wondering, EDSFF was all over the FMS show floor for PCIe Gen5 and CXL devices. Here is our E1 and E3 EDSFF to Take Over from M.2 and 2.5″ in SSDs piece:
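To make the 2x and 4x figures concrete, here is a back-of-the-envelope sketch. It assumes every SSD gets a PCIe x4 link and counts raw link bandwidth after 128b/130b encoding, ignoring protocol overhead and what any given drive can actually sustain. The function names are ours, for illustration only.

```python
# Back-of-the-envelope throughput per rack unit. Assumptions (not from
# Supermicro): every SSD gets a PCIe x4 link, and we count raw link
# bandwidth after 128b/130b encoding, ignoring protocol overhead.

GT_PER_S = {"gen4": 16.0, "gen5": 32.0}  # per-lane transfer rate, GT/s


def lane_gb_per_s(gen: str) -> float:
    """Approximate one-direction bandwidth per lane in GB/s after 128b/130b encoding."""
    return GT_PER_S[gen] * (128 / 130) / 8


def throughput_per_u(drives: int, rack_units: int, gen: str, lanes_per_drive: int = 4) -> float:
    """Aggregate front-drive link bandwidth divided by chassis height."""
    return drives * lanes_per_drive * lane_gb_per_s(gen) / rack_units


gen4_2u = throughput_per_u(drives=24, rack_units=2, gen="gen4")  # 24x 2.5" in 2U
gen5_1u = throughput_per_u(drives=24, rack_units=1, gen="gen5")  # 24x EDSFF in 1U

drive_density_ratio = (24 / 1) / (24 / 2)  # drives per U: 24 vs. 12
throughput_ratio = gen5_1u / gen4_2u

print(f"Gen4 2U: {gen4_2u:.1f} GB/s per U")
print(f"Gen5 1U: {gen5_1u:.1f} GB/s per U")
print(f"Drive density: {drive_density_ratio:.0f}x, throughput density: {throughput_ratio:.0f}x")
```

The drive-count ratio comes purely from the chassis height; the second doubling comes purely from the PCIe generation, which is why the two multiply to 4x.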

In terms of specs, this should also be exciting. The dual-socket Sapphire Rapids server has 32x DDR5 DIMM slots. That corresponds to the 8-channel DDR5, 2 DIMMs per channel configuration we have seen in other Sapphire Rapids platforms. Also, the 24x PCIe Gen5 SSDs are connected without PCIe switches. There are also two AIOM slots (Supermicro’s OCP NIC 3.0 form factor) and two PCIe Gen5 x16 slots onboard for lots of networking potential. Connecting all of this without switches also means Intel is increasing PCIe lane counts while moving to PCIe Gen5/CXL 1.1.
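A quick tally shows where the 32 DIMM slots come from and roughly how many lanes the front bays and slots need if nothing sits behind a switch. The link widths here are our assumptions, not confirmed by Supermicro: x4 per EDSFF SSD, x16 per PCIe slot, and x16 per AIOM slot. The M.2 boot device is left out because we do not know where it attaches.

```python
# Tally of memory slots and PCIe lane demand for the configuration described
# above. Link widths are illustrative assumptions: x4 per EDSFF SSD and
# x16 per PCIe slot and per AIOM (OCP NIC 3.0) slot. The M.2 boot device is
# not counted because its attachment point is unknown.

SOCKETS = 2
DDR5_CHANNELS_PER_SOCKET = 8
DIMMS_PER_CHANNEL = 2

dimm_slots = SOCKETS * DDR5_CHANNELS_PER_SOCKET * DIMMS_PER_CHANNEL

# (device count, assumed lanes per device)
devices = {
    "EDSFF SSDs": (24, 4),
    "PCIe Gen5 x16 slots": (2, 16),
    "AIOM slots": (2, 16),
}
lane_demand = {name: count * width for name, (count, width) in devices.items()}
total_lanes = sum(lane_demand.values())

print(f"DIMM slots: {dimm_slots}")
for name, lanes in lane_demand.items():
    print(f"  {name}: {lanes} lanes")
print(f"Lanes needed without PCIe switches: {total_lanes}")
```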

There is also M.2 for boot storage. We could not look inside the chassis because Intel is not allowing that, even though we have now seen plenty of the Archer City/Eagle Stream Intel development platforms open on show floors since Intel showed Sapphire Rapids CXL with the Emmitsburg PCH at SC21 in November 2021.

What we could see was the new connector for the PCIe Gen5 cables. For the 24x EDSFF SSDs, there are 12x cabled connections between the EDSFF backplane and the motherboard.

These use the new MCIO PCIe Gen5 cable connector and look like PCIe Gen5 x8 connectors.
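If each MCIO connector does carry a Gen5 x8 link (an assumption based only on how the connectors look), the cable count lines up neatly with the drive count, as this short check sketches:

```python
# Check that 12 MCIO cables line up with 24 drives. Assumption: each
# MCIO connector carries a PCIe Gen5 x8 link, as the connectors appear to.

MCIO_CABLES = 12
LANES_PER_CABLE = 8
SSDS = 24

backplane_lanes = MCIO_CABLES * LANES_PER_CABLE
assert backplane_lanes % SSDS == 0, "lanes should divide evenly across drives"

lanes_per_ssd = backplane_lanes // SSDS
ssds_per_cable = SSDS // MCIO_CABLES

print(f"{backplane_lanes} lanes -> x{lanes_per_ssd} per SSD, {ssds_per_cable} SSDs per cable")
```

Under that assumption, each cable would feed two x4 EDSFF drives, for 96 backplane lanes in total.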

The MCIO cables and higher-quality motherboards needed to support DDR5 and PCIe Gen5/CXL are increasing system costs. Overall, while density increases by 2:1 to 4:1, server costs are going to rise a bit. That is not just on the Intel side; AMD and Arm platforms will see this as well. It is simply part of the new era of compute that follows the Xeon E5 through Cascade Lake PCIe Gen3 era. Part of these costs will be offset by not having to use PCIe switch chips as often, since CPUs are increasing PCIe lane counts. The Ice Lake version of this Supermicro system would require PCIe switches to get this much I/O.
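The switch point can be sketched as simple lane arithmetic. Per socket, Ice Lake Xeon Scalable exposes 64 PCIe Gen4 lanes and Sapphire Rapids exposes 80 PCIe Gen5 lanes. The demand figure assumes x4 per SSD and x16 for each of the two PCIe slots and two AIOM slots, and ignores the M.2 boot device; the helper name `needs_pcie_switch` is hypothetical.

```python
# Compare lane demand with the lanes a dual-socket server exposes.
# Demand assumes 24 SSDs at x4, two x16 PCIe slots, and two x16 AIOM slots,
# ignoring the M.2 boot device. Per-socket lane counts are 64 (Ice Lake)
# and 80 (Sapphire Rapids).

LANE_DEMAND = 24 * 4 + 2 * 16 + 2 * 16  # 160 lanes under these assumptions

LANES_PER_SOCKET = {"Ice Lake": 64, "Sapphire Rapids": 80}
SOCKETS = 2


def needs_pcie_switch(platform: str, demand: int = LANE_DEMAND, sockets: int = SOCKETS) -> bool:
    """True if the CPUs alone cannot supply every lane the devices want."""
    return demand > LANES_PER_SOCKET[platform] * sockets


for platform in LANES_PER_SOCKET:
    supply = LANES_PER_SOCKET[platform] * SOCKETS
    verdict = "needs PCIe switches" if needs_pcie_switch(platform) else "fits without switches"
    print(f"{platform}: {supply} lanes for {LANE_DEMAND} needed -> {verdict}")
```

Under these assumptions, the Sapphire Rapids fit is exact (160 of 160 lanes), so the real board may allocate lanes differently, for example with narrower AIOM links.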

Final Words

From a density perspective, moving to PCIe Gen5 and EDSFF, plus new generations of denser NAND, will allow this system to reach 1PB+ in 1U. At the same time, performance should be higher than the first generation of 1PB per U systems, like the one in our hands-on with the 1U Cascade Lake Supermicro EDSFF Server years ago. Here is the video for that one:
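For a sense of what "1PB+ in 1U" asks of each drive, here is the raw-capacity arithmetic in decimal units. The per-drive capacities are hypothetical sample points, not a claim about shipping EDSFF parts, and the figures are before any RAID or erasure-coding overhead.

```python
# How much capacity each of the 24 bays needs for 1PB in 1U. Decimal
# units (1PB = 1,000TB). The per-drive capacities below are hypothetical
# sample points, not a list of shipping EDSFF SSDs.

TARGET_TB = 1000
BAYS = 24

min_tb_per_drive = TARGET_TB / BAYS


def raw_capacity_tb(tb_per_drive: float, bays: int = BAYS) -> float:
    """Raw capacity of a fully populated chassis, before RAID or erasure coding."""
    return tb_per_drive * bays


print(f"Minimum per drive for 1PB: {min_tb_per_drive:.1f}TB")
for tb in (15.36, 30.72, 61.44):
    total = raw_capacity_tb(tb)
    status = "reaches" if total >= TARGET_TB else "falls short of"
    print(f"{tb:.2f}TB x {BAYS} = {total:.2f}TB, {status} 1PB")
```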

While Supermicro could not lift the lid at FMS 2022, this system was all over the show floor. Many OEMs had this in their booths. Next to the Intel Eagle Stream/ Archer City development platforms running all of the CXL demos, this was probably the second most spotted Sapphire Rapids platform at FMS 2022. It seems like this is a popular EDSFF PCIe Gen5 SSD platform among SSD vendors. It was also interesting to see the MCIO connector that we will see more of in the PCIe Gen5 era.

Now we just need Sapphire Rapids chips to be released so we can start using these platforms!

One of the most interesting new developments I’ve seen since I retired from being a “Xeon/EPYC farmer” last summer.

Alas, I will continue to make do with my 85-Watt, 6Gbps SATA-based, circa-2014 Xeon E5-2630 V3 quad-node server (2U / 4 node) data center castoffs in my $HOMElab.

…I do wonder at which point I will have to stop taking gear off the “2B-Recycled” pile from my previous employer…Because my modest home-rack power budget can no longer fire them up…Ah, no one will notice if I redeploy the circuit for the electric dryer!

When, or will, you cover the control plane side that will take advantage of these servers? Something has to be setting up the namespaces, erasure coding, and many other new features.

Awesome internal bandwidth between the EDSFF, PCIe5, and DDR5.

Watch this space, more coming in regards to the PCIe Gen5 capability that isn’t MCIO. You’ll be seeing it sooner than you think :-)