As one of my very first posts for STH, I submitted a build guide for a small business server with built-in disaster recovery capabilities. That system was DIY assembled, with a mix of consumer and enterprise-class products designed to fit a specific niche. Well, today I am back with another build; this time I am building a pair of servers, and they definitely contain some DIY flair. Also, please note this is not a sponsored post in any capacity, and in fact, none of these parts were even purchased by STH. This build was done outside of my capacity as an STH reviewer; I simply chose to document it in case any of the STH readership found it interesting or informative.

Build Design and Reasoning

This client is upgrading an older server setup with new hardware and software. The old servers are a pair of Dell PowerEdge T320 systems with Sandy Bridge generation Xeon CPUs, 16GB of RAM each, and mechanical storage.

Aside from their age, there actually is nothing wrong with the existing servers, but the client wants to migrate to the newest version of their line-of-business application which comes with new hardware and software requirements, thus necessitating the upgrade.

The new servers were originally set to be ordered from an official Supermicro systems integrator, and would probably have been based around an Intel Xeon E-2300 Series platform. This particular client has performance requirements in excess of what Xeon D or EPYC 3000 series can reasonably provide, but they do not have the budget, nor the need to make the jump to Xeon Scalable or EPYC 7000.

Unfortunately, lead times from my vendor were going to be 8+ weeks for system delivery, and even that was a “guesstimate” for an ETA. That amount of time is simply too long, so I was forced to explore other options. As I considered my options, the idea to do a DIY build started to seem appealing.

Bill of Materials

First up, here is the bill of materials used in this build. Please note, I purchased *two* servers, so everything here was purchased in double quantity. The combined hardware cost of both servers is a bit under $6000.

Chassis: Antec VSK 10 $200 (after PSU and fans)

The Antec VSK 10 was chosen for this server because it satisfies a small list of requirements. It had to be mATX, inexpensive, and fit the cooling setup. It also needed to not look like a “gamer” case; no tempered glass side panels please. My first choice chassis was the Fractal Design Core 1000, but that case did not end up being compatible with the chosen CPU cooler. The VSK 10 was purchased to replace the Core 1000 and is working well. Along with this case, an EVGA 550W 80+ Gold modular power supply was purchased, and I already had a few extra case fans on hand for better airflow. Nothing on this setup is hot-swap, but in the context of this particular client that was deemed acceptable.

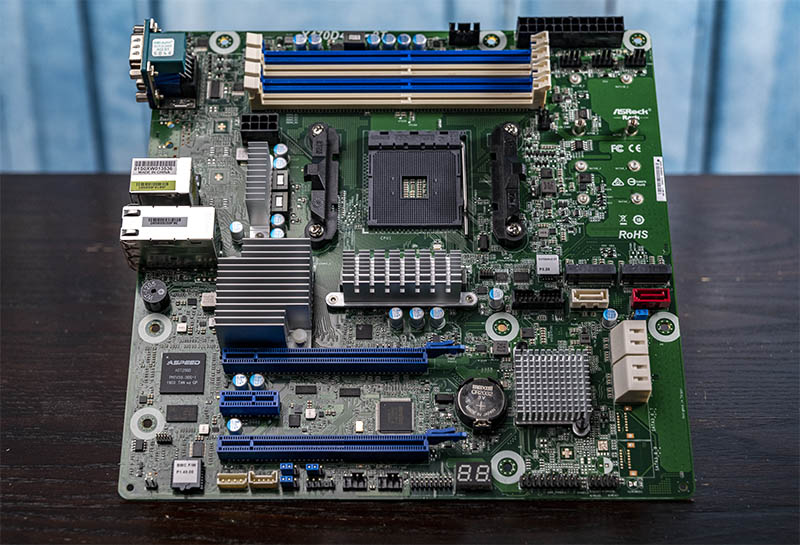

Motherboard: ASRock Rack X470D4U2-2T $430

I have reviewed this board before and I liked it. This was once again my second choice; originally I purchased some much newer ASRock Rack B550D4U motherboards, but I ran into some compatibility problems with my add-in 10 GbE NICs; more on that later.

After running into that stumbling block, I located the X470D4U2-2T boards on eBay and they worked perfectly. I was not planning on utilizing the PCIe Gen4 capabilities of the B550D4U anyway, so there was no great loss in swapping them out. Additionally, since this board includes onboard 10 GbE networking, I was also able to return the 10 GbE add-in cards I originally purchased.

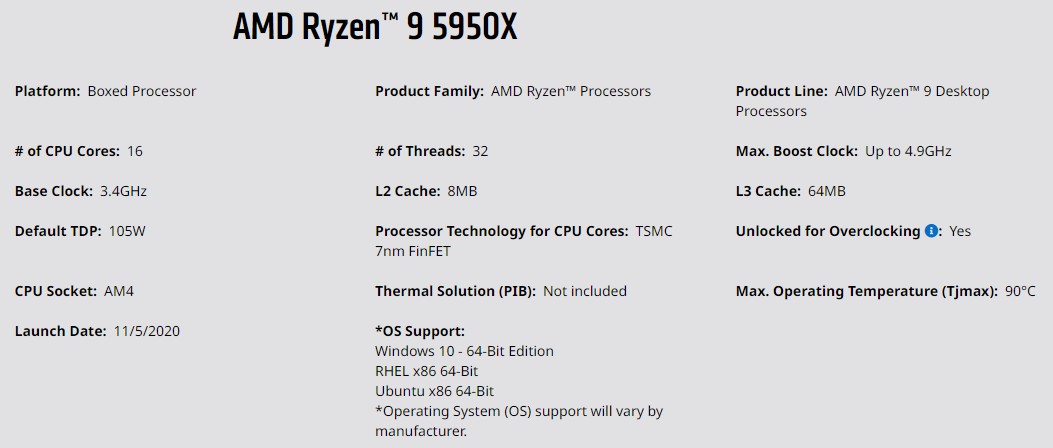

CPU: AMD Ryzen 9 5950X $585 (with HSF)

This CPU choice is simply the top-end SKU for the socket. For a modern server in 2022, having only 16 cores is not much, but this client is upgrading from two old Dell systems, one with a single Xeon E5-2407 installed and the other with a single Xeon E5-1410.

Compared to the old servers, this Ryzen 5950X will absolutely blow both of those systems out of the water without even breaking a sweat. A lower-performance SKU could probably have been used, something like the 5900X or 5800X, but the expense to upgrade to the 5950X was relatively minor in the context of the overall project cost and so the best CPU for the socket was selected. Since this CPU obviously needs a cooler, I picked up a Noctua NH-U12S Redux.

Memory: 4x Crucial 32GB DDR4 3200 (running at 2666) $480

This system was configured with 128GB of DDR4 memory running at 2666 MHz.

The chosen memory speed and capacity are dictated by the platform in this case. DDR4 3200 DIMMs were purchased because they were the least expensive option, but the motherboard is restricted to 2666 MHz for the operating speed. 128GB is the maximum capacity allowed on this platform as well. The two existing servers have a combined memory capacity of 32GB, so moving to 128GB is still a huge upgrade.

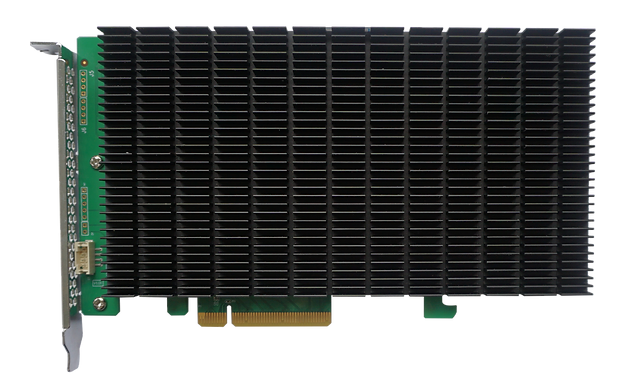

RAID Controller: Highpoint SSD6204 $336

I always prefer some kind of drive redundancy, and my favorite RAID cards are currently prohibitively expensive as well as out of stock. As a result, I went looking for alternatives and landed on the SSD6204.

This is a 4-port M.2 NVMe RAID card capable of RAID 1 and compatible with ESXi, which is all I was looking for. This card does not require bifurcation on the PCIe slot and the RAID functionality is handled in hardware. The 4-port SSD6204 was chosen over the 2-port SSD6202 to allow future storage expansion if necessary. I am not 100% happy with this purchase, but it was relatively inexpensive and combined with other forms of redundancy and backup should be more than sufficient for my needs. My biggest gripe with this card is that there is no audible beeper in the case of a drive failure, which leaves open the possibility that one of the drives could fail silently.

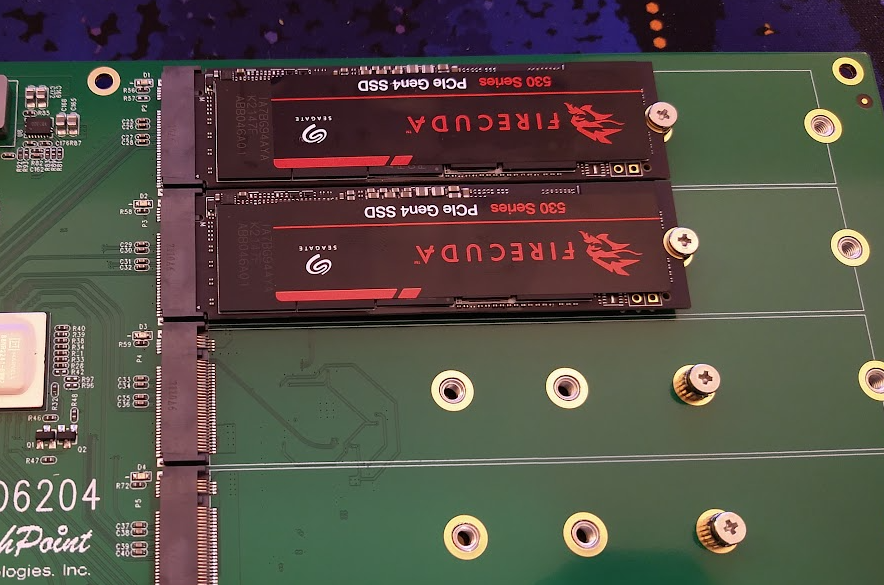

Data SSDs: 2x Seagate FireCuda 530 2TB $650

Anyone who read my review of the Seagate FireCuda 530 1TB knows that I came away very impressed. I chose a pair of the 2TB FireCuda 530 drives in a RAID 1 array for my primary data store. 2TB might not seem like much capacity, but right now the old servers have less than 500GB of data on them; 2TB should provide ample room to grow.

The SSD6204 is not a Gen 4 card, which means these drives will be running well below their maximum potential, but Gen 3 speeds comfortably meet the performance requirements for this server. The SSD6204 has a big heatsink which should help keep these drives cool in operation.

Boot SSD: Samsung PM981a 256GB $37

I simply need something to install ESXi on, and this is what I chose. Alternatives would have been to boot from USB, from any number of other M.2 SSD models, or even from a SATA DOM. The PM981a was chosen because it was inexpensive and had same-day delivery on Amazon. That last bit mattered because, honestly speaking here, I forgot to order the boot SSDs until I had the rest of the parts already in hand; oops!

Backup HDDs: Toshiba N300 6TB 7200RPM $280 (Backup only)

The second server is intended to function as the backup. That second system includes identical hardware to the primary server so that VMs can be replicated and boot up with essentially identical performance in the case of a failure.

For longer-term backups, a pair of 6TB mechanical hard drives have been added to the second server. The presence of these disks is the only differentiating factor between the two physical servers.
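As a quick sanity check on the "a bit under $6000" figure, the bill of materials can be tallied in a few lines. This is only a sketch under two assumptions that the article does not spell out: each listed price is the line total for one server (so $650 covers both data SSDs and $480 covers all four DIMMs), and the backup HDD line is purchased once, for the second server only. The dictionary keys are made-up labels for illustration.

```python
# Prices as listed in the bill of materials (USD).
# Assumption: each figure is the line total for ONE server.
PER_SERVER = {
    "chassis_psu_fans": 200,
    "motherboard": 430,
    "cpu_and_cooler": 585,
    "memory_4x32gb": 480,
    "raid_controller": 336,
    "data_ssds_2x2tb": 650,
    "boot_ssd": 37,
}

# Assumption: the backup HDD line is bought once, for the backup server only.
BACKUP_ONLY = {"backup_hdds": 280}


def project_total(per_server, backup_only, servers=2):
    """Total hardware cost for `servers` identical builds plus one-off parts."""
    return servers * sum(per_server.values()) + sum(backup_only.values())


if __name__ == "__main__":
    print(sum(PER_SERVER.values()))                 # per-server subtotal: 2718
    print(project_total(PER_SERVER, BACKUP_ONLY))   # both servers: 5716
```

Under those assumptions the total lands at $5716, which is consistent with the stated figure. If the SSD or HDD prices were per drive instead, the total would exceed $6000, so the line-total reading seems the more likely one.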

Software

I am not going to get into the software licensing costs as part of this article. With that said, a paid copy of VMware ESXi Essentials was purchased at around $580. This will be combined with Veeam to handle backups. The largest single cost in this project is actually software, specifically the Microsoft licensing. This infrastructure project was designed around a proprietary piece of software that requires three VMs, all running Windows: Domain Controller, Remote Desktop Services, and SQL services.

Adventures in DIY

As you might have gleaned from my bill of materials, not everything went smoothly in this build. Some of this is my fault and could have been caught with research ahead of time; other problems were entirely unforeseen and have me stumped even now.

The very first stumbling block I ran across was with my original chassis, the Fractal Design Core 1000.

This case was originally chosen exclusively for its exceptionally low cost. Once I had it in hand, however, I did not find myself particularly impressed and did not like the airflow setup. The mesh front gives the impression that it is well ventilated, but the top half of the mesh has solid sheet metal behind it preventing airflow. I soldiered onward, but then immediately discovered that the Noctua NH-U12S was too tall to fit into this case; the side panel would not go back on. In truth, I was somewhat relieved to have an excuse to swap this out. My second choice was the Antec VSK 10. This case had a moderately higher price tag, but everything fit inside the case much better and it did not suffer the constrained airflow problem of the Core 1000.

The next problem was the real head-scratcher of the build. As mentioned in the BOM, my original motherboard selection was the ASRock Rack B550D4U. This board was selected because it was available from my vendor brand new and it would be Ryzen 5000 ready out of the box. It was also mATX; this client does not have much physical space in their server room, so space is at a premium. 10 GbE networking was on the list of requirements, but the X570D4U-2L2T was both out of stock and cost more money than the B550D4U + a 2-port 10 GbE NIC, so I chose the B550D4U.

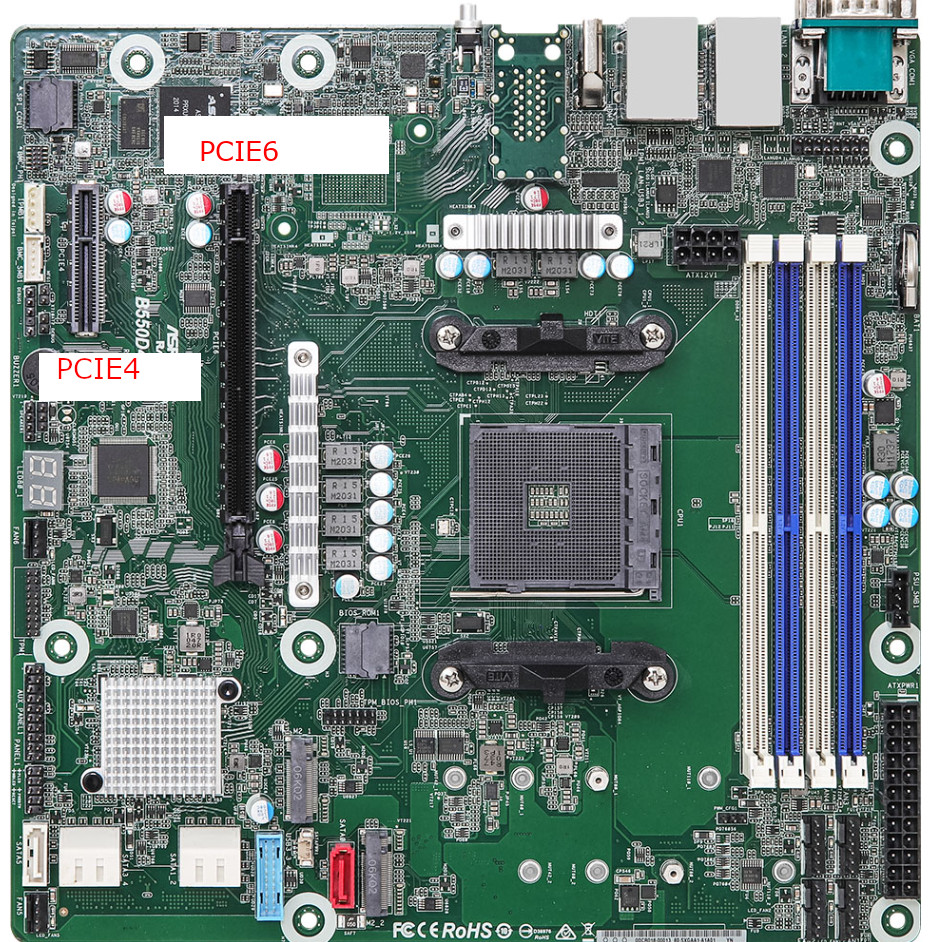

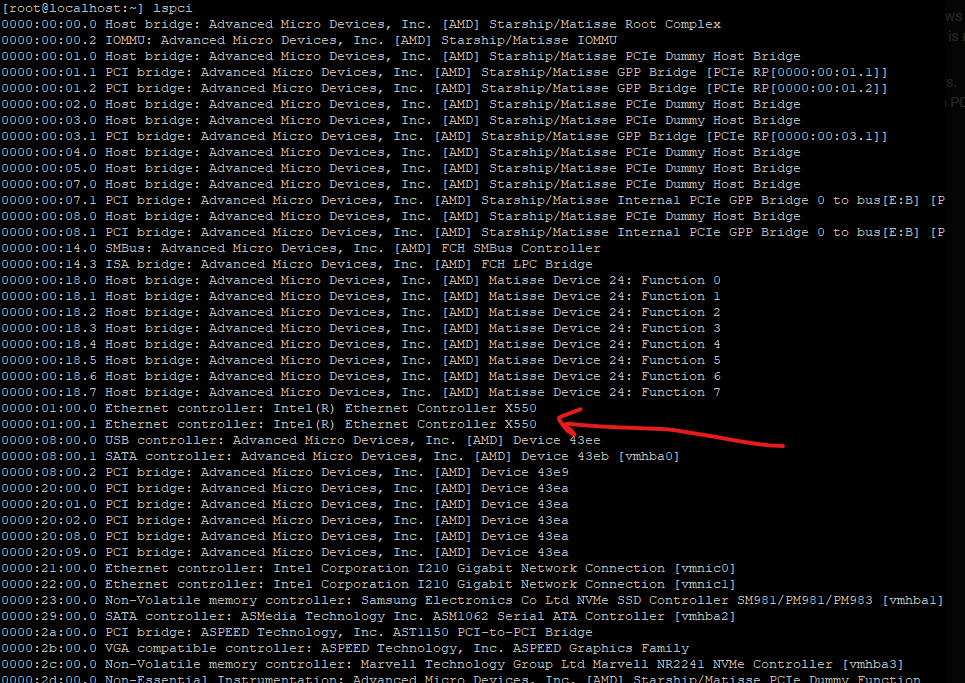

Unfortunately, I ran into problems almost immediately. For some reason, when both the main PCIe slot (PCIE6) and the secondary slot (PCIE4) were occupied, whatever was installed into PCIE4 would not actually work. The card in PCIE4 would show up in lspci in VMware, but drivers would not be loaded nor would the device be assigned an ID. If the SSD6204 RAID card was in PCIE6 and the X550-T2 NIC was in PCIE4 then the NIC would not work; if I swapped them then the RAID card would not work. I had two motherboards and four NICs (two different models) to test with so I was sure the problem was not isolated to a single piece of equipment.

After reaching out to ASRock Rack support for assistance but not solving the problem, I pulled the trigger on an eBay listing for some X470D4U2-2T boards that were brand new. These fixed the problem in two ways. First, since they come with onboard 10 GbE networking, I no longer need the add-in cards. Second, I did go ahead and test the 10 GbE cards temporarily and they do work on the X470D4U2-2T, in case I ever need that PCIe slot down the line. ASRock Rack has promised to keep me up to date if they can figure out why my 10 GbE cards were not working on the B550D4U, but for now I am happy with the X470 boards.

One additional note regarding the X470D4U2-2T is that my boards required a BIOS update to accept the Ryzen 9 5950X CPU. Thankfully I was able to perform this update via the BMC, which meant that I did not need to find a temporary Ryzen 2000/3000-class CPU to use for the update. I also took the opportunity to install the firmware update for the BMC itself, which enabled BMC fan control settings.

The Build

Once all the incompatibilities were resolved and parts selection was completed, the system looks fairly ordinary and mostly resembles a standard tower PC build.

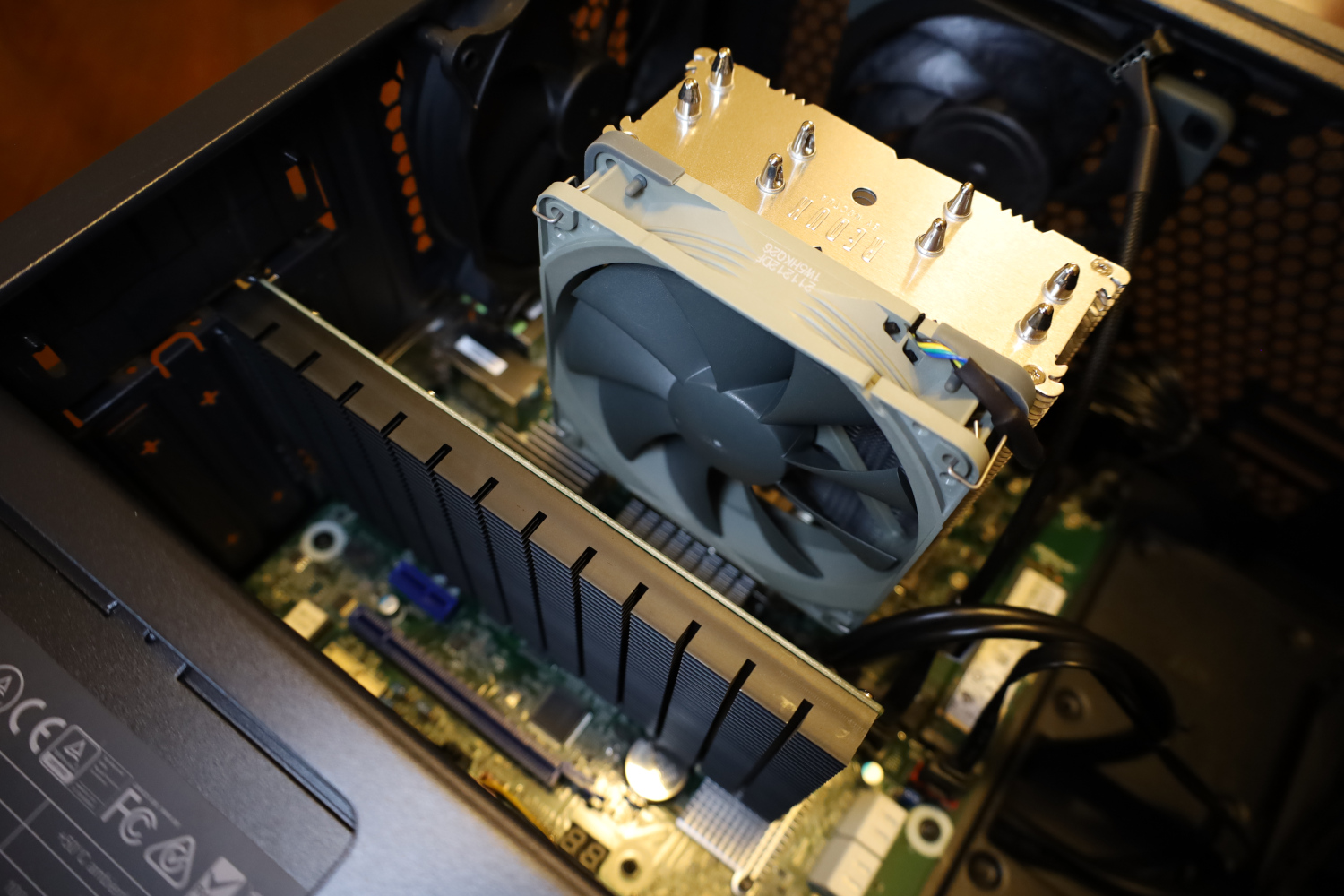

One peculiarity users will note is the orientation of the cooler. On the X470D4U2-2T the processor socket is rotated in comparison to the standard orientation on a consumer motherboard. As a result, the Noctua NH-U12S Redux cooler ends up in a bit of an odd orientation.

I elected to install the fan on the underside of the cooler, blowing upwards, with an additional 120mm fan mounted at the top of the case also blowing up as an exhaust.

The two fans at the front are configured as intakes to provide fresh air, and in my burn-in testing this configuration has proven stable with processor temperatures peaking at around 85C under full load. 85C is hot, but not unreasonable given we are talking a 5950X at 100% load under air cooling. Just in case, I enabled a 100% fan duty cycle in the BMC and under light to moderate load the CPU temperatures stay well below 50C.

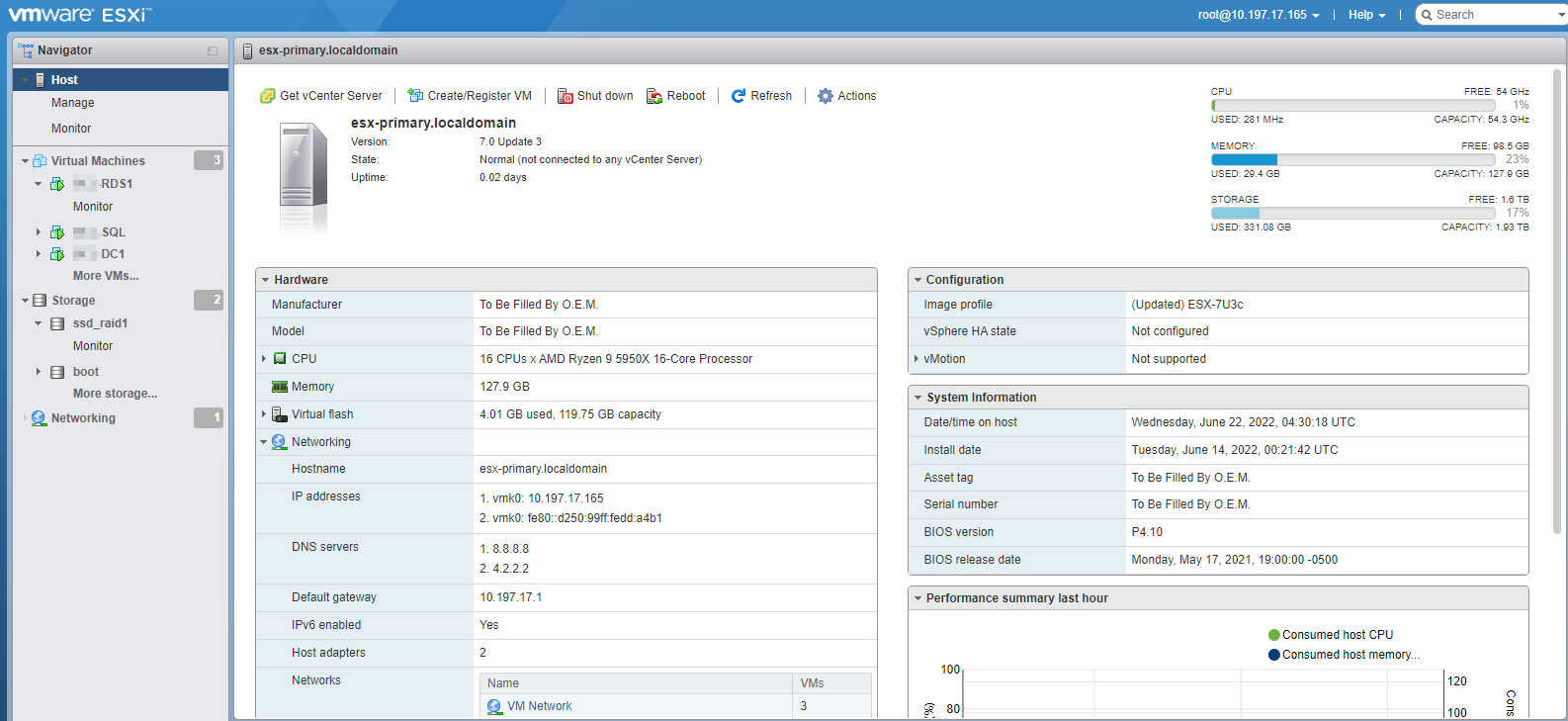

Once physical assembly was complete, things proceeded fairly normally for a server build. VMware ESXi was installed.

On the primary server, three VMs were created and the appropriate software was installed. These systems have not yet been delivered to the client so they are not fully configured on the software side of things, but they were built up enough to perform some basic burn-in testing.

Dual System Design

With two nearly-identical servers at my disposal, I have the opportunity to set up some fairly resilient failover scenarios. Each system has a 2TB SSD array, and on the primary server that is where the trio of VMs will live. Veeam will be used and configured to replicate those three VMs to the 2TB SSD array on the backup server. They will sit on that backup server powered off in case they are ever needed.

Additionally, a workstation VM will be installed on the backup server and allocated space from the 6TB mechanical disks. Veeam will use that disk space to store hourly backups going back at least a month.

The entire second server will exist on a separate network, separated by a VLAN and firewall, from the rest of the primary network. With that isolation in place, if a workstation or server becomes infected on the primary network it will have no path to the server where backups are stored. As a final layer of defense, the backups stored on this server will also be sent offsite.
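To get a feel for what "hourly backups going back at least a month" asks of the 6TB disks, here is a rough back-of-the-envelope sketch. Every input is an assumption for illustration only: a 30-day month, the pair of HDDs mirrored for 6TB usable, a single full backup of roughly 500GB (the article only says the old servers hold less than 500GB), and no compression or deduplication. It does not model how Veeam actually stores its backup chain.

```python
def restore_points(days, per_day=24):
    """Number of hourly restore points kept over `days` days."""
    return days * per_day


def avg_increment_budget_gb(usable_gb, full_backup_gb, points):
    """Average space available per restore point after one full backup."""
    return (usable_gb - full_backup_gb) / points


if __name__ == "__main__":
    points = restore_points(days=30)          # assumption: 30-day month
    budget = avg_increment_budget_gb(
        usable_gb=6000,                       # assumption: mirrored 6TB pair
        full_backup_gb=500,                   # assumption: one ~500GB full
        points=points,
    )
    print(points)             # 720
    print(round(budget, 1))   # 7.6
```

Under these assumptions there is room for an average of about 7.6GB of changed data per hourly restore point, which gives a rough yardstick to compare against the real hourly change rate once the servers are in use.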

Final Words

Aside from the use of some desktop-class parts, this is a remarkably traditional, or perhaps even old-fashioned, server design. There are no Docker containers, no hybrid cloud integration, almost nothing fancy at all. In this case a traditional environment is what satisfied the requirements for the chosen line-of-business software, and so that is exactly what was delivered. I hope you enjoyed coming along with me for this pseudo build log!

While having a BMC and 10 Gb Ethernet are important, I’m surprised no mention is made of using ECC RAM, which the Ryzen 5950X and the motherboard presumably support. From my point of view the one essential feature of a server is reliability. For this reason probably the most important part of a do-it-yourself small-business server build is reliability testing.

As an example, some time ago I built four dual-socket PIII servers, each with RAID controllers and multiple network interfaces. Random crashes were eventually traced to cases when the bandwidth on both network cards was simultaneously maxed out. Weirdly, the random crashes didn’t happen when only one card was busy.

Of course similar things can and do happen with branded servers; however, a follow-up article on how to stress test a DIY small business server and what to look out for would be great.

My personal view on the ‘reliability’ issue is perhaps slightly different. My burn-in testing was done, maxxing out the CPU and RAM for around 2 days. After that the project has a long ramp where the servers will be in use during the migration but not yet mission critical, which can function as an additional burn-in time.

Beyond initial stability testing, there are two ways in which I consider this system fairly reliable. First off, the idea that the primary server might fail is essentially baked in with a fully equipped secondary server ready to take over at a moment’s notice. Second, a large number of the parts in the servers are functionally available at Best Buy. If the power supply dies, I can just grab almost any ATX power supply down the road and swap it out. Same for the fans, SSDs, and even the motherboard if push comes to shove (though one at Best Buy won’t have the BMC).

Hi Will, thanks for the very informative article. It would seem that business continuity superseded raw performance and the latest and greatest hardware. I have a couple of questions from a complete novice perspective looking to set up a HomeLab. Any UPS on either server? Would a case with redundant power supplies have added greatly to the BOM?

This client already owns a very nice EATON UPS, so that was not a necessity in this case.

Moving to a redundant power supply would either drastically increase the cost of the power supply or the cost of the chassis. There is technically such a thing as redundant power supplies that fit into the ATX form factor, but they are uncommon and expensive ($450 and up as best I can tell). Alternatively this system could have been built inside a purpose-built server chassis from Supermicro or ASRR, but again at a significant cost increase for the chassis.

While it would be nice to have redundant power supplies, this client does not have redundant power *sources*, so the only thing a redundant PSU really protects against is a failed power supply. Since the power supply used is a standard ATX model that is readily available at multiple stores within a 15-minute drive of the client, it simply isn’t worth it.

I find it interesting that you didn’t install vCenter even though you have the option with Essentials. If the customer needs to do any maintenance it is MUCH easier with vCenter than having to deal with the ESXi Web GUI.

Will, so you save how much on non-ECC RAM instead of ECC? $20? Or was it an even lower number? Basically you built one very nice machine, but one which cannot be deployed as a server due to your RAM choice. Your choice of depending on a second slave server to take over as master is a nice one. The problem is that without ECC RAM your primary server may silently corrupt data for months before it crashes. And I mean silently; you will not know about it. With ECC, you would know since you will see that in logs.

Very poor RAM choice indeed.

Jeremy,

vCenter included with the ESXi Essentials bundle is pretty limited and does not support key tech like live vMotion and distributed vSwitch configurations. Plus, the Web GUI built into the modern ESXi is pretty good! I can always install vCenter later on if it is of some benefit, typically running on the backup host, but for now it has not been set up.

KarelG,

The choice to omit ECC memory was conscious. You might disagree, but there were reasons. One is financial; the cost differential was $55 per module, which is a 45% increase in RAM price.

Second, and most important, is that ECC memory support on Ryzen platforms is hobbled. While the ASRR board and the Ryzen CPU will *work* with ECC memory and it will indeed actually perform error correction, it does *not* support reporting that activity back to the system. In other words, you will not be able to “see that in logs” since ECC effectively operates in silence. I think this reporting is actually the most important part of ECC functionality, since it helps you diagnose and locate failing modules. My understanding is that this is a platform limitation of Ryzen.

The most likely result of a malfunctioning memory module is going to be a system crash and not silent data corruption. Those crashes might be harder to diagnose without the error reporting of ECC, but diagnosing them is not impossible.

You might have different opinions on the remaining worth of ECC on the Ryzen platform, or whether the lack of complete ECC support should invalidate the concept of using Ryzen in a server environment. In my specific case, the benefits (performance, cost, and availability) were deemed to outweigh the negatives.
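The cost argument above is easy to check against the bill of materials. A small sketch, assuming the $480 memory line is the price of all four non-ECC modules in one server and that the $55 premium applies equally to every module:

```python
MODULES_PER_SERVER = 4
NON_ECC_KIT_PRICE = 480       # from the BOM; assumed to cover all 4 modules
ECC_PREMIUM_PER_MODULE = 55   # cost differential quoted in the reply
SERVERS = 2


def premium_percent(kit_price, modules, premium_per_module):
    """ECC premium as a percentage of the non-ECC per-module price."""
    per_module = kit_price / modules
    return premium_per_module / per_module * 100


def extra_cost(modules, premium_per_module, servers=1):
    """Extra spend to move every module in `servers` machines to ECC."""
    return modules * premium_per_module * servers


if __name__ == "__main__":
    pct = premium_percent(NON_ECC_KIT_PRICE, MODULES_PER_SERVER,
                          ECC_PREMIUM_PER_MODULE)
    print(round(pct, 1))                                           # 45.8
    print(extra_cost(MODULES_PER_SERVER, ECC_PREMIUM_PER_MODULE))  # 220
    print(extra_cost(MODULES_PER_SERVER, ECC_PREMIUM_PER_MODULE,
                     SERVERS))                                     # 440
```

On those numbers the premium works out to roughly 46% per module, in line with the quoted 45%, or about $220 per server and $440 across the pair.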

Will,

I know what you get with the ESXi Essentials bundle as I am a VMware Admin. Live vMotion is 100% allowed in Essentials vCenter; however, you do not get DRS. Having to use standard switches instead of DVS does make a difference but standard switches are still easier to manage from the vCenter side. For the Web GUI perhaps things have been improved in ESXi 7.0, but in 6.7 it is still a pain I’ve found. Wouldn’t make much sense to only run it from the backup host. With Veeam you can make a replica for vCenter as well. That would make sure that in the event of a crash you will easily be able to spin up vCenter for simple management again.

thx for a nice article. Can you provide some info on power consumption?

Jeremy,

We’re definitely running 7, but I’ll contend that live vMotion is not allowed on the Essentials kit. Take a look here at the list of licensed features for Essentials – https://www.servethehome.com/wp-content/uploads/2022/06/VS-Essentials.png

This is in comparison with for example our VSPP license, which does include vMotion – https://www.servethehome.com/wp-content/uploads/2022/06/VSPP.png

In addition you can look at my picture of the ESXi 7 home page and see that vMotion is “Not supported” – https://www.servethehome.com/wp-content/uploads/2022/06/SMB-Dual-Server-Build-ESXi.png

If you know how to get live vMotion working with Essentials, please let me know as I would be happy to use it!

brian,

Sorry, never put it onto the kill-a-watt. With that said, an educated guess should put each system somewhere in the 200W-250W range under full load and 30W-50W idle. The 5950X can use 150W on its own under a non-overclocked full load, and the minimum idle I’ve seen on a less-fully-loaded X470D4U2-2T is 26W. Throw in some power consumption for the RAID, SSDs, and fans and load should be somewhere around 200W or perhaps 250W as a worst case. The 550W power supply was chosen to hopefully sit somewhere around the 50% usage efficiency sweet spot under maximum load.
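For reference, the load estimate above can be turned into a PSU utilisation figure. This is a sketch using only the guessed wattages from the reply, not measured values:

```python
PSU_RATED_W = 550   # EVGA 550W unit from the build


def load_fraction(load_w, psu_rated_w=PSU_RATED_W):
    """Fraction of the PSU's rated capacity drawn at a given load."""
    return load_w / psu_rated_w


if __name__ == "__main__":
    for label, watts in [("idle low", 30), ("idle high", 50),
                         ("load typical", 200), ("load worst", 250)]:
        print(f"{label}: {watts} W = {load_fraction(watts) * 100:.0f}% of rated")
    # idle low: 30 W = 5% of rated
    # idle high: 50 W = 9% of rated
    # load typical: 200 W = 36% of rated
    # load worst: 250 W = 45% of rated
```

So the estimated full-load draw sits at roughly 36% to 45% of the PSU rating, a little under the 50% mark mentioned, while idle is below 10%.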

Out of curiosity, how did you get this to work? The 5950X, B550 motherboard and RAID card? I was wondering because I have a 3600X and a B450 board and had a 4-port NIC I wanted to use, but was wondering how you got your build to boot without a graphics card. Or am I missing something with the PCIe slots? Does the on-board graphics “work”, just enough to read/boot from USB, install ESXi, etc? Or is it a non-issue?

Matt,

The ASRock Rack motherboards include an ASPEED BMC, or baseboard management controller. Integrated into that BMC is an incredibly basic video card, just enough to handle the BIOS and the installation of your OS of choice. This leaves the PCIe slots free for general use.

Nice build, with flaws indeed. I don’t want to be the SMB at the other end receiving these systems. Not critical system proof indeed. No ECC is a no go. And why spend money on VMware while Proxmox is rock solid and much cheaper?

Very nicely written article, thank you.

It is very refreshing to read an STH post without the overuse of “one” as in “one can see…”. It shows that it is not necessary in order to be professional and readable. Congratulations sir!

What a nice and smart build! I’m in a similar situation: replacing a not-so-old Dell T430 server to take advantage of the Ryzen 5900X’s single-thread performance over my current Xeon E5-2690 v4.

Due to height and depth limitations I’ve chosen a Thermaltake Core V21 case, which has enough clearance for the Noctua NH-U12S cooler and an FSP/Fortron Twins 2x500W redundant ATX PSU; the airflow should be quite nice with additional fans from Corsair.

The motherboard is the ASRR X570D4U (the X470D4U2-2T is impossible to find here in Italy).

Now I’m looking for the storage equipment and I think I’ll go with a pair of 1.92 TB Samsung PM9A3 U.2 drives in software RAID 1 through a Supermicro AOC-SLG4-4E4T retimer in bifurcation mode. Maybe I’ll buy two more enterprise-grade SATA SSDs dedicated to Proxmox.

Ah, for the RAM I’ve found a very interesting deal on Kingston ECC 2×32 GB for 150 €. Maybe I’ll buy another pack in the near future.

Peter,

VMware was chosen because it is where my expertise lies. I’ve got experience with hundreds of ESX servers, and experience with exactly two Proxmox servers. Plus, it works with our chosen backup software (Veeam) and Proxmox does not.

Tiredreader,

Thanks! I was a bit more personal/first person with this article since it was essentially just a journal entry dressed up as an article!

Davide,

Good luck with your build! Post about it in the forums when you are done!

Interesting build, not least because some of the components don’t seem to make much sense. Why not go with a case that has decent airflow, or even a simple DIY server case so you can rack-mount it? On the CPU cooler side you would have wanted to use a Dynatron cooler, as those have the correct orientation for this board, which is optimized for front to back airflow. I would be concerned for your VRM airflow if you don’t have some decent case fans providing static pressure in your case.

Then PCIe wise the issue with this board is that there are more lanes in use than the chipset + CPU offer. Having two NVMe drives installed will cause the issues you’re observing; what works better is to use an M.2 SATA boot drive instead.

For the PSU, the optimal efficiency of most modern PSUs is between 40 and 80% of rated capacity; however, larger capacity PSUs are less efficient at lower power consumption. So you probably would’ve been better off with a 300-400W PSU.

For backup storage I would probably have chosen a SATA SSD instead of HDD as that would likely improve performance in case of a restore and I would expect a better drive lifetime, no noise and a lower power consumption as well.

I’ve got some X470D4U based systems running myself, maybe I’ll get around to a writeup on the forums as well. They’re nifty little boards but not without quirks

Wow, that info about Ryzen not being able to report ECC events back to the OS is a useful tidbit to know. It feels like AMD needs a CPU that supports that and they may get some more of the SMB market. Dell T55 T355 T455 would be a great option to have; I know that Dell ships a lot of those tower servers as they are great quiet reliable workhorses and competition is always nice.

David,

Rackmount was not chosen because the client does not have, nor plan on purchasing, a rack. Airflow seems pretty decent in the VSK10 and everything seems nice and cool. Something like a Dynatron A19 would have been nice, but everywhere I could find it was “ships from China” with a 3 week lead time that I was not willing to wait on.

As for PCIE lanes, they were not oversubscribed. Per the ASRR website, PCIE6 is x16 from the CPU and PCIE4 is x4 from the CPU, with both M.2 drives being driven by the chipset. It is possible the website is wrong, but there is no manual included with the board nor available on the website that can be downloaded. With that said, initially I guessed similar to you and tried out both an M.2 SATA boot-drive as well as directly installing ESXi to a USB thumbdrive and omitting the M.2 drives entirely. The problem persisted, so I do not think it was lane oversubscription.

For backup storage, while I would have preferred to stay with SSDs there is a dramatic cost difference to pick up a 6+ TB SSD versus the 6TB HDDs equipped in the system. For longterm archival type backups the HDD solution will be just fine.
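The lane accounting in the reply above can be written out explicitly. This is only a sketch: the slot widths come from the ASRR website as quoted, while the budget of 20 usable CPU lanes is an assumption based on the usual AM4 desktop Ryzen layout (24 lanes total, with 4 reserved for the chipset link). The M.2 slots are left out because they hang off the chipset per the same source.

```python
# Assumption: AM4 desktop Ryzen exposes 24 PCIe lanes, 4 of which are
# reserved for the chipset link, leaving 20 for slots and devices.
USABLE_CPU_LANES = 20

# Slot widths on the B550D4U as described in the reply (CPU-attached only).
CPU_SLOTS = {"PCIE6": 16, "PCIE4": 4}


def lanes_used(slots):
    """Total CPU lanes consumed by the populated slots."""
    return sum(slots.values())


def oversubscribed(slots, budget=USABLE_CPU_LANES):
    """True if the slots need more CPU lanes than the budget provides."""
    return lanes_used(slots) > budget


if __name__ == "__main__":
    print(lanes_used(CPU_SLOTS))       # 20
    print(oversubscribed(CPU_SLOTS))   # False
```

With both slots populated the CPU lanes are fully used but not exceeded, which matches the conclusion that oversubscription is an unlikely cause.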

Will,

I am wrong on the Essentials side. I forgot that they have 2 levels, Essentials and Essentials Plus, and the Plus includes things like vMotion and HA. The customers I work with that have Essentials all have the Plus version and everything I do at my DC is on the Enterprise Plus. At that point the only reason to have vCenter installed with basically a single host is the easier management.

Will said, “My burn-in testing was done, maxxing out the CPU and RAM for around 2 days.”

Thanks for your reply about reliability testing. In my experience stressing IOPS on network and storage can also lead to crashes that are pretty important to resolve before going into production.

As seen by the number of comments to this blog, there is great interest in DIY use of consumer-level gear to create server-level infrastructure for business and of course the home lab. It’s great to hear a discussion of the design tradeoffs for a particular project. I particularly enjoyed that aspect of this article.

I would reiterate that an article on how to test a DIY server to ensure reliability (as well as those repurposed mini-micro nodes) would, considering the interest shown here, in my opinion be well received.

Again thanks for sharing the personal day-by-day details of building this server.

I have a server install using the Fractal Design case mentioned in this article. Here are a few things that I noticed:

(1) The 5.25 inch drive bays had steel knock-out plates as part of the steel forming process. I knocked out the 5.25 inch knock-out plates when I first installed the chassis and used Noctua fans all-around to keep the noise down. Fan noise is the only noise that would emanate from this chassis.

(2) Drive mounting is vertical while exposing the narrowest part of a HDD to airflow. I still find the drive mounting methodology used in this Fractal Design case to be a real hassle when I need to change drives; screws & little rubber bushings everywhere.

(3) Airflow was satisfactory in my case; mobo with Intel C2758 fanless processor. Airflow has never been an issue as the CPU runs consistently around 50 degrees Celsius, and maybe peaking to 55 degrees Celsius, regardless of system load. Memory temperatures, available in most ECC chips, were also steady. HDD temps were steady, never peaking over 40 degrees Celsius. SSD temps remain consistently under 35 degrees Celsius, and usually less than that.

(4) I liked that all the fans used in the case were 80 to 120mm in size; I forgot the exact sizes. Large fans can turn slower while still moving the same amount of air as a smaller, faster spinning fan. Additionally, the fan sizes used in this case are standard, commonly available sizes…and that meant I could find a fairly silent Noctua fan (a BIG PLUS).

(5) Front grille can be partially detached (cables prevent full detachment) from the steel case for cleaning of the front bottom case fan, grille, and metal case front. I have done this with the server in operation.

I got rid of the original 2 HDD (4 TB each in Raid-1) in my design that were used for storage, replacing them with a pair of SSD (2 TB each in Raid-1) after spending a year or more studying the storage needs of the application that I was using. Perhaps 4 TB SSD will be an economical purchase in the future relative to purchasing 2 TB SSD; 200 USD is my upper budget limit on “per SSD cost”.

The Fractal Design cases are well-built but do have some ‘quirks’ (my view, you might call them ‘features’) that might not be to everyone’s tastes. For my specific use it has proven to be an adequate solution.

Eric,

I agree, more than just CPU and RAM testing is key for ensuring longterm stability. In the case of this setup, the two servers will go into place for a relatively long ramp where the existing line-of-business software is migrated from the old hosts to the new. During that time, data will be replicated to the new hosts from both the application as well as the domain and file server functions. In particular, I have set up live synchronization of the client’s main file server with the replacement one, keeping a ~500GB volume of data in sync between the old environment and new. This time period will last up to a month and will serve as the final testing period to ensure stability of the new servers. None of that could happen at my house where I built these servers, though, since I don’t have the original servers with me! I’m glad you enjoyed the give-and-take aspect of deciding on the hardware; this is something I do fairly often and will keep it in mind for future articles.

Sleepy,

I love Fractal in general – my personal PC is built in a Define S – but the 1000 just did not work out in this case. Had I ended up with a shorter cooler I would have stuck with the 1000 case, but in the end I was glad to have a reason to swap to the VSK10. If nothing else the VSK10 provides the ‘behind-the-motherboard’ cable routing opportunity that the 1000 was missing as well, allowing for cleaner internal cable routing.

My alternate DIY server, with a SAS12 RAID10 (29TB usable) and ~30-45GbE IPoIB connectivity. Not for a workstation, but as a storage server that can also run other services. The mini-ITX mobo has only one PCIe Gen 3 x16 slot, but you can bifurcate it to x8 + x8. Unlike its predecessors, the 5000G series provides 16 lanes of PCIe Gen 3.

domih@r7-5700g:~$ lspci

…/…

01:00.0 RAID bus controller: Adaptec Series 8 12G SAS/PCIe 3 (rev 01)

…/…

07:00.0 Network controller: Mellanox Technologies MT27520 Family [ConnectX-3 Pro]

…/…

domih@r7-5700g:~$ sudo lspci -vv -s 01:00.0 | grep Width

LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM not supported

LnkSta: Speed 8GT/s (ok), Width x8 (ok)

domih@r7-5700g:~$ sudo lspci -vv -s 07:00.0 | grep Width

LnkCap: Port #8, Speed 8GT/s, Width x8, ASPM L0s, Exit Latency L0s unlimited

LnkSta: Speed 8GT/s (ok), Width x8 (ok)

domih@r7-5700g:~$ df -h -t ext4

Filesystem Size Used Avail Use% Mounted on

/dev/nvme0n1p5 484G 94G 366G 21% /

/dev/sda1 29T 6.3T 21T 24% /mnt/storage

domih@r7-4750g:~$ iperf3 -c r7-5700g.ib

Connecting to host r7-5700g.ib, port 5201

[ 5] local 10.10.56.38 port 60950 connected to 10.10.56.39 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 4.27 GBytes 36.7 Gbits/sec 0 5.56 MBytes

[ 5] 1.00-2.00 sec 5.48 GBytes 47.1 Gbits/sec 0 5.56 MBytes

…/…

[ 5] 8.00-9.00 sec 5.49 GBytes 47.1 Gbits/sec 0 5.56 MBytes

[ 5] 9.00-10.00 sec 4.32 GBytes 37.1 Gbits/sec 0 5.56 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 52.4 GBytes 45.0 Gbits/sec 0 sender

[ 5] 0.00-10.00 sec 52.4 GBytes 45.0 Gbits/sec receiver
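As a rough sanity check on the numbers above, the x8 Gen 3 link reported by lspci can be compared against the iperf3 average. This is only a back-of-the-envelope sketch: it assumes the standard 8 GT/s per lane and 128b/130b encoding of PCIe Gen 3 and ignores packet (TLP) overhead, so the real ceiling is somewhat lower. The function name is made up for illustration.

```python
# Rough PCIe link budget for an x8 Gen 3 slot (assumption: protocol
# overhead beyond 128b/130b line encoding is ignored).
GT_PER_LANE = 8.0        # PCIe Gen 3 signalling rate per lane, in GT/s
LANES = 8                # link width reported by lspci (Width x8)
ENCODING = 128 / 130     # 128b/130b line encoding used by Gen 3


def pcie_gbits(gt_per_lane, lanes, encoding=ENCODING):
    """Return the approximate link ceiling in Gbit/s."""
    return gt_per_lane * lanes * encoding


link = pcie_gbits(GT_PER_LANE, LANES)
measured = 45.0          # Gbit/s, iperf3 sender average from the run above

print(f"x8 Gen 3 ceiling: {link:.1f} Gbit/s")
print(f"iperf3 average uses {measured / link:.0%} of it")
```

Under those assumptions the slot tops out at roughly 63 Gbit/s, so the 45 Gbit/s measured over IPoIB fits within an x8 link after bifurcation.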

BOM with a mix of new and used (eBay) hardware: $2,753.33

ASRock B550M-ITX/AC AM4 AMD B550

AMD Ryzen 7 5700G Desktop Processor 8C/16T OEM

Noctua NH-L9x65 SE-AM4

Western Digital WD BLACK SN750 NVMe M.2

Team T-FORCE VULCAN Z 32GB (2 x 16GB) 288-Pin DDR4 SDRAM DDR4 3600

CORSAIR SF Series SF600 600W 80 PLUS

PCIe Bifurcation Card – x8x8 – 2W × 1 (c-payne.com)

ASR-8805 ADAPTEC 12GBPS SAS/SATA/SSD RAID CONTROLLER CARD (used eBay)

Adaptec Flash Module AFM-700 + AFM-700CC Battery (used eBay)

2 x 4 Bay 3.5 inch and 2.5 Inch Hard Drive HDD Rack Cage 4×2.5,3.5

8 x HGST Ultrastar He8 HUH728080AL5200 8TB 7200rpm SAS III 12Gb/s 3.5″ Hard Drive (used eBay)

Mellanox ConnectX-3 Pro VPI (used eBay)

2 x 1.6 ft Mini SAS SFF-8643 to 4x SFF-8482

PC Case Cooling Fan Hub

65cm PC Case Motherboard 2x ATX Power Switch Reset Button

45pcs 6mmx7mm Black Plastic Button Caps for 8.5mmx8.5mm Tact Switch

40PCS PC HDD Hard Disk Drive Shock Proof Anti Vibration Screws+Damping Ring

Computer PC Dustproof Cooler Fan Case Cover Dust Filter Mesh Roll 1m Length 1 x

USB2.0/USB3.0 Hub Splitter USB3.0 Front Panel 3.5mm

ADT-Link PCIe 3.0 x16 Pci-e 16x Graphics Card Extension Cable with SATA Cable

Multiple extension cables(*) + 1 Fan

Miscellaneous for building custom case with white wood

HTH
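For readers wondering how eight 8TB drives end up as the 29T shown by df, here is a small sketch of the arithmetic. It assumes all eight drives from the BOM sit in a single RAID10 array and that "8TB" means 8 × 10^12 bytes (the usual vendor convention), while df -h reports binary units; neither detail is stated in the comment, and the function name is made up for illustration.

```python
def raid10_usable_bytes(drive_count, drive_bytes):
    """Usable capacity of a RAID10 array: half the drives hold mirror copies."""
    if drive_count < 4 or drive_count % 2:
        raise ValueError("RAID10 needs an even number of drives, at least 4")
    return (drive_count // 2) * drive_bytes


# Assumption: 8 drives of 8 TB (decimal terabytes) in one RAID10 array.
usable = raid10_usable_bytes(8, 8 * 10**12)
tib = usable / 2**40     # convert to binary units (TiB), as df -h reports

print(f"{usable / 10**12:.0f} TB usable = {tib:.1f} TiB")
```

That works out to 32 TB, or about 29.1 TiB before filesystem overhead, which lines up with the 29T reported for /mnt/storage.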

The ECC reporting issue is fixed by using a Ryzen 9 PRO-series SKU. Obviously you can’t buy them at retail, but the PRO version would have resolved the ECC skepticism.

I built a very similar system last year and have been very happy with how it performs.

I use the ASRock Rack X570D4U-2L2T, which has built-in 10GbE and supports PCIe Gen 4. I used ECC RAM as it did not carry much of a premium.

The main difference is that I hate RAID controllers, so instead I installed an $80 ASUS Hyper M.2 x16 Gen 4 card (NVMe, x16 to quad x4), since this board supports bifurcation natively.

I used two of the slots to run 2x Addlink S90 2TB Gen 4 (3600TBW endurance) NVMe drives mirrored through ZFS. It has been very fast and reliable.

I also made a backup server from a Lenovo desktop small tower. It runs the excellent Proxmox Backup Server as well as being a Proxmox VM host.

I use Proxmox (ZFS based) replication to sync my important VMs to the backup every 15 minutes. This allows a very quick migration if I want to work on the main server.

I would like to set up a server at home to learn Windows Server 2019/2022, Hyper-V, domain controllers, etc. I am looking for entry-level hardware to learn the Microsoft way; I am just not sure device drivers would work. I hope one day ServeTheHome does a review of hardware that works with Windows Server 2019 and/or 2022. Would the machine in this review be a good one to use for Windows Server?

Hey, Will. Thanks for this post, I quite enjoyed reading your write-up.

I wasn’t aware of the ECC reporting issues, so I found that especially interesting.

Perhaps it would be wise to mention that briefly in the article itself, both to head off additional comments on the same point and to inform readers like myself (who may not go through the comments).

I spent countless hours reading about RAM to help decide what to get for my personal desktop a year ago, and nothing came up regarding the lack of reporting functionality, so I value that little piece of new knowledge.

bob,

Windows Server 2019 and 2022 will, for the most part, install anywhere you can install Windows 10, and can share drivers with that OS. The exception is that some Intel workstation-class network card drivers don’t like to install on servers, though there are workarounds for that if push comes to shove. It won’t be a *supported* installation, but for home lab learning you were never going to call in for support anyway, so it is plenty to learn on.

Joel,

Glad you enjoyed it! I didn’t go into much detail on the RAM, and in truth the presence or lack of ECC is unimportant to me in the context of these servers, so it just did not occur to me. I suppose I invited the commentary by including a picture of the RAM, but that wasn’t really why I put the pic in there as you might imagine!

Main build:

X470D4U

AMD 5700G

P1000+T600

4x32GB Fury

Silverstone sst-cs330b

4xSSD, 2xM.2. Works like a charm. After unlocking the Nvidia drivers, the P1000 can handle 6 streams of 4K->FHD.

Has anyone tried this or a similar setup on ESXi 8?