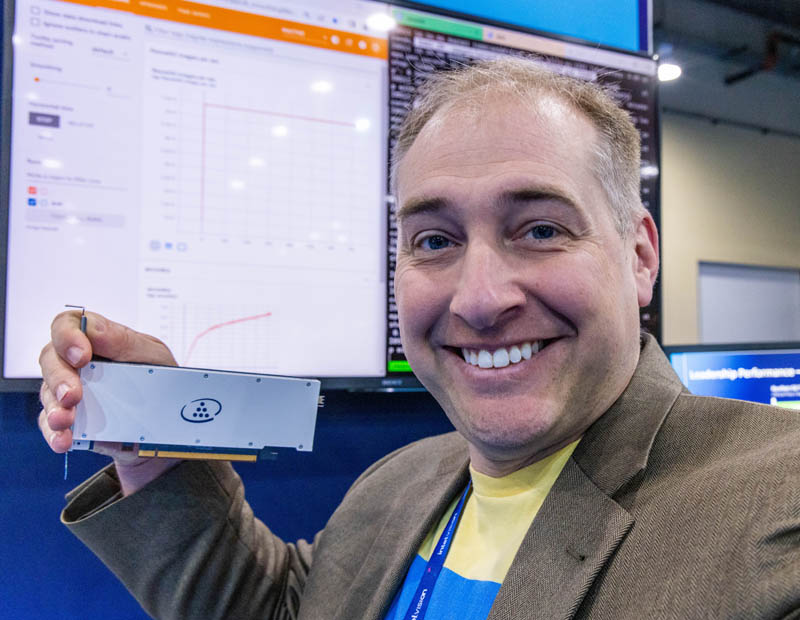

We recently got to see the new Intel AI inferencing accelerator PCIe card. This new Intel Habana Greco card is absolutely a step in the right direction as it re-defines the offering both in terms of performance and form factor. At Intel Vision 2022, we were able to see the card in-person.

Intel Habana Greco AI Inference PCIe Card at Vision 2022

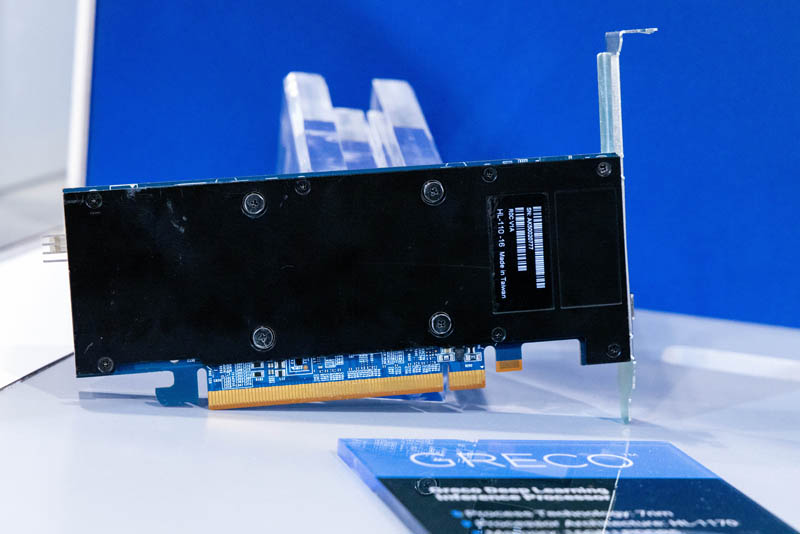

The new Intel Habana Greco AI inference card is a low profile PCIe card.

Do not let the form factor fool you. The new card is a huge upgrade over the previous generation. Along with moving from 16nm to 7nm, memory bandwidth goes from 40GB/s to 204GB/s although still 16GB in capacity. It also goes from 50MB to 128MB of SRAM.

Here is the I/O faceplate. One of the fun parts is that there is actually a USB Type-C service port here.

The rear of the card has a giant back plate.

The low profile card is a big change. The previous generation Goya was a dual slot full-height card that used 200W. This is a huge change since it means that the new Greco can go into many more servers than the Goya was able to go into, while at the same time providing more resources for inference.

Here is an old picture from Hot Chips of the Goya.

Overall, this is a big change for the inference products.

Final Words

Realistically, the low profile 75W form factor is extremely popular for AI inference since it fits in not just traditional 1U/ 2U servers, but also the edge appliances that do inference. The new generation Intel Greco also has media decoding capabilities because video analytics is such a big workload.

The other interesting aspect of this is that Intel now has both the Greco dedicated AI inference accelerator, but the company is also positioning the Intel Arctic Sound-M as an AI inference GPU. It will be interesting to see how these product lines evolve.

Looking sharp! You look even sharper as the card you’re holding :)

Was there any mention of oneDNN support for Greco?

@JayN:

Habana products don’t implement any part of oneAPI at all.

It’s a separate division entirely with a closed-source user-space stack (SynapseAI Core is unusable in production). There’s no overlap in provided APIs at the driver level.