Today we are taking a look at the Dell EMC PowerEdge R750xa. This is an accelerated 2U system that Dell hopes will slot between the PowerEdge R750 below and the Dell EMC PowerEdge XE8545 above. Because it is a 3rd generation Intel Xeon Scalable (codenamed Ice Lake) system, the platform brings new features for accelerated computing. In our review, we are going to take a look at those new features and some of the unique design angles that Dell EMC took.

This is a tough system to review. Most STH readers will know I really like the PowerEdge designs. The PowerEdge R750xa would be an absolutely great system if we did not review so many GPU servers at STH. Since we review so many GPU servers from Dell and other companies, it is much harder to fall in love with this system. In this review, we are going to show why.

Dell EMC PowerEdge R750xa Hardware Overview

We are going to split this review up into external and internal hardware overviews as we have been doing with many of our recent reviews.

Dell EMC PowerEdge R750xa External Hardware Overview

The PowerEdge R750xa is effectively replacing the PowerEdge C4140, and there is an immediately obvious change: the replacement is 2U instead of 1U. That extra height allows for more components and more cooling. It also allows for a shorter chassis, with a depth of roughly 837.2-872.8mm, or about 33″ to 34.4″. While it is still a fairly deep server, it is much easier to fit in racks than its predecessor. Dell also told us that, for most of its customers, rack density is less of a concern because these accelerated systems use so much power.

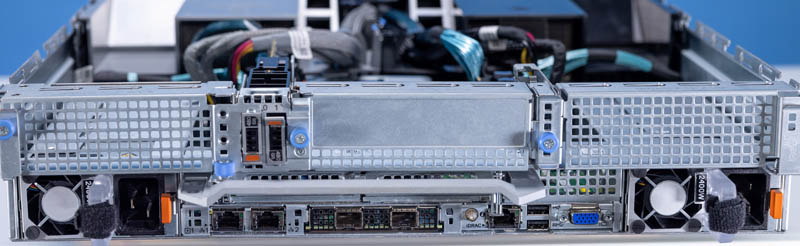

One quick note: the USB, VGA, and service ports sit on the rack ears, leaving the rest of the front of the system dedicated to GPU airflow and disks.

On the front of the system, we have SATA, SAS, and NVMe 2.5″ bays. One can see the four SAS drives, as well as the four NVMe drives here.

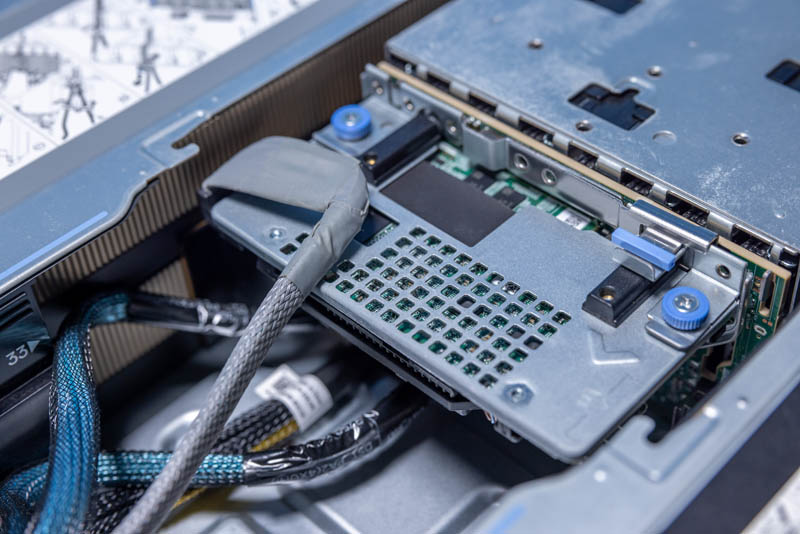

Something that is a bit interesting is that the PERC card attaches to the rear of the drive bays rather than being located at the rear of the chassis. Our general guidance these days is to skip SAS controllers and just use NVMe for SSDs.

These massive vents are there to provide cool airflow to the hot accelerators behind them.

The rear of the system is perhaps even more interesting. Since this is an accelerated system, it uses two 2.4kW power supplies. Dell places the PSUs on opposite sides of the chassis, which shortens cable runs in racks that have A and B PDUs on either side.

With this generation, Dell has moved to a more modular rear I/O configuration, so the lower-speed I/O (the iDRAC NIC, USB ports, and VGA) gets its own PCB.

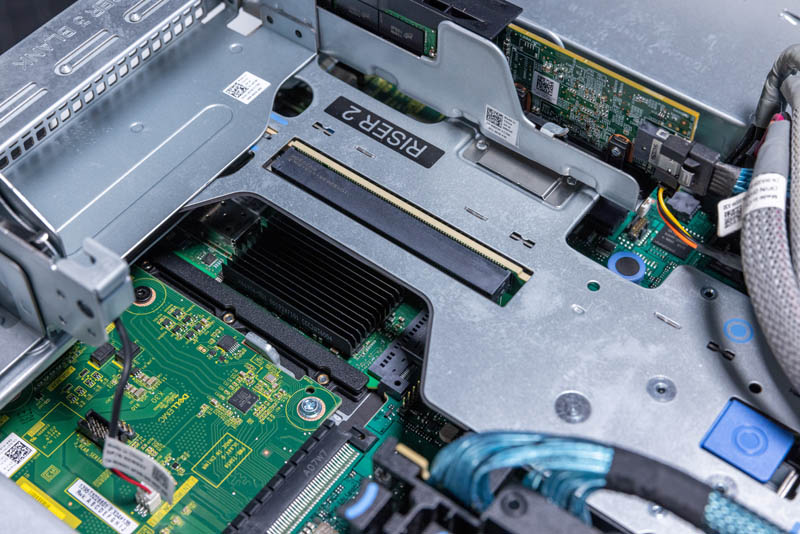

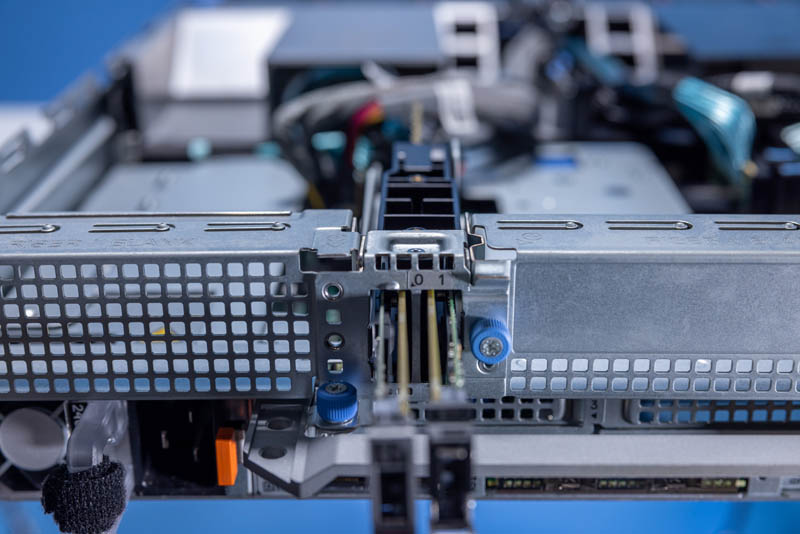

Networking is handled via an OCP NIC 3.0 slot. Because this is an Intel system rather than an AMD system, it has fewer PCIe lanes available: 128 in a dual-socket server rather than 160. As a result, with front SAS/NVMe and four PCIe Gen4 x16 GPUs, we are left with only the OCP NIC 3.0 slot and a low-profile x16 riser on each side. As you can see from this shot, Dell uses a tool-less design to get to the riser, but accessing it took us longer than on the average 2U GPU server because of how many modules need to be removed in the process.
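To make the lane math concrete, here is a rough Python sketch of the budget. Only the 128 vs. 160 lane totals and the four x16 GPUs come from the text above; the x4 allocation per front NVMe bay is an assumption for illustration, and `remaining_lanes` is a hypothetical helper, not a Dell tool or a published Dell lane map.

```python
# Rough PCIe lane-budget sketch for the configuration discussed above.
# From the review: 128 PCIe Gen4 lanes in a dual-socket Ice Lake server,
# versus 160 on the AMD system it is compared against, plus four x16 GPUs.
# ASSUMPTION: four front NVMe bays at x4 each (illustrative, not Dell's lane map).

TOTAL_LANES_INTEL = 128
TOTAL_LANES_AMD = 160


def remaining_lanes(total: int, devices: dict[str, int]) -> int:
    """Return the lanes left after giving each device its link width."""
    used = sum(devices.values())
    if used > total:
        raise ValueError(f"over budget: {used} lanes needed, {total} available")
    return total - used


devices = {
    "gpu0": 16,
    "gpu1": 16,
    "gpu2": 16,
    "gpu3": 16,
    "front_nvme": 4 * 4,  # hypothetical: four NVMe drives at x4 each
}

print("Intel lanes left:", remaining_lanes(TOTAL_LANES_INTEL, devices))  # 48
print("AMD lanes left:", remaining_lanes(TOTAL_LANES_AMD, devices))  # 80
```

Under these assumptions, whatever remains still has to cover the OCP NIC 3.0 slot, the low-profile risers, and any other CPU-attached devices, which illustrates why the Intel platform ends up with fewer expansion options than a 160-lane design.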

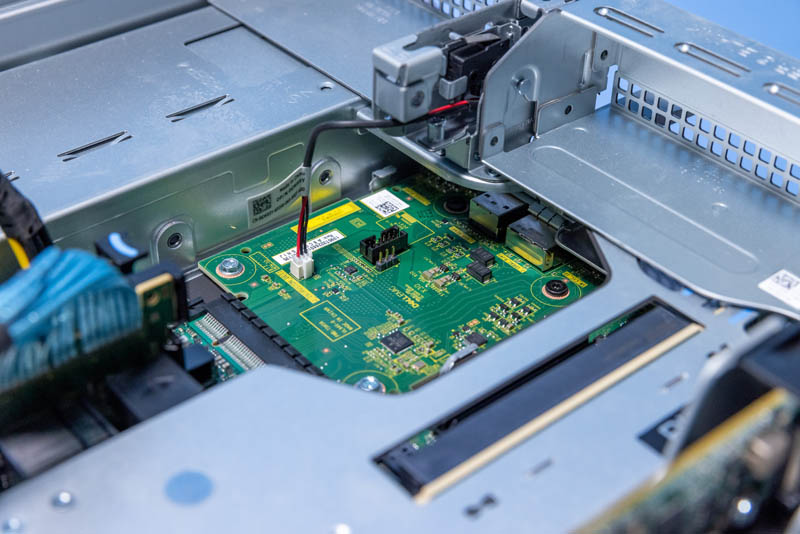

Perhaps the coolest feature is the Dell BOSS option. It places two M.2 SSDs on adapter boards at the rear of the chassis. In the future, we expect these to become E1.S SSDs, since this is a specific application that the EDSFF form factor is already addressing at hyperscalers, and we expect Dell to bring it to its customers over the next few years.


One really nice touch in this system, compared with some that we review, is Dell's excellent labeling throughout the chassis.

Next, let us get to our internal hardware overview.
