A quick one today is a super-simple tutorial for passing NICs through to virtual machines on Proxmox VE. Passing NICs through avoids the hypervisor overhead and can also help with compatibility issues between virtual NICs and some firewall appliances like pfSense and OPNsense. The downside is that unless the NICs support SR-IOV, they most likely cannot be shared between VMs in this configuration.

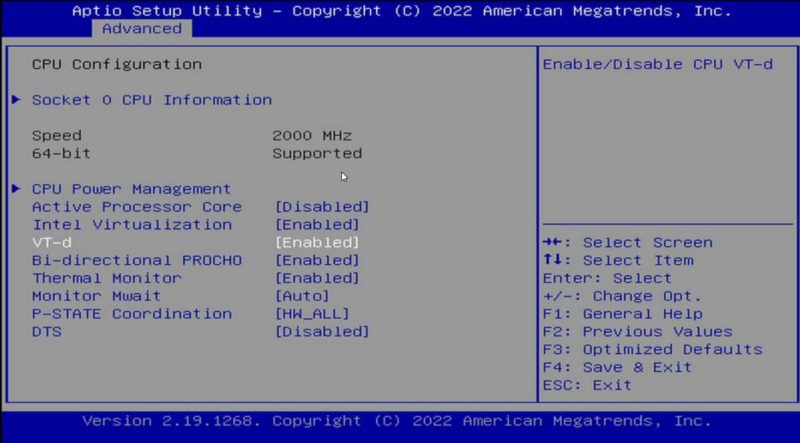

Step 1: BIOS Setup

The first thing to do is to turn on the IOMMU feature on your system. For this, the CPU and the platform need to support the feature. These days, most platforms will support IOMMU, but some older platforms do not. On Intel platforms, this is called “VT-d”, which stands for Intel Virtualization Technology for Directed I/O.

On AMD platforms you will likely see AMD-Vi as the option. Sometimes in different system firmware, you will see IOMMU. These are the options you want to enable.

Of course, since this is Proxmox VE, you will want to ensure basic virtualization is turned on as well while you are in the BIOS. Also, since it is likely to be a main focus for people using this guide, if you are making a firewall/router on the machine, we usually suggest setting the On AC Power setting to “Always on” or “Last state” so that after a power failure, your network comes back up as soon as power returns.

Next, we need to determine if we are using GRUB or systemd as the bootloader.

Step 2: Determine if you are Using GRUB or systemd

This is a newer step, but if you install a recent version of Proxmox VE and are using ZFS as the root (this may expand in the future), you are likely using systemd-boot (called simply “systemd” in this guide), not GRUB. After installation, use this command to determine which you are using:

efibootmgr -v

If you see something like “File(\EFI\SYSTEMD\SYSTEMD-BOOTX64.EFI)” then you are using systemd, not GRUB.

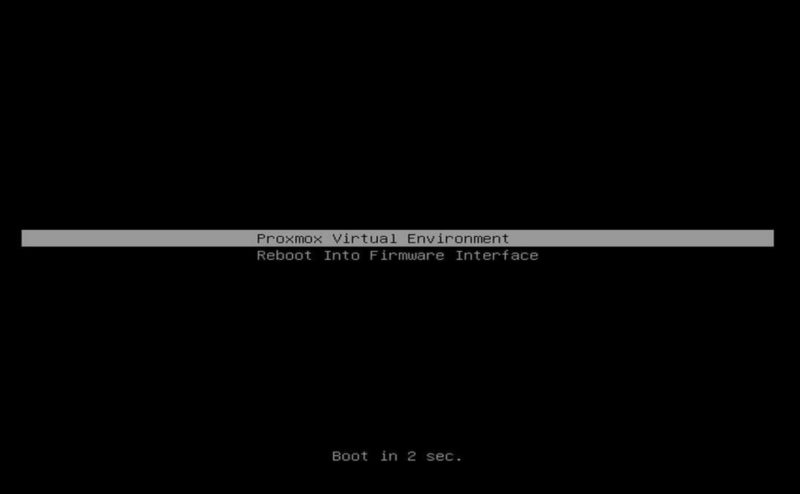

Another giveaway is when you boot, if you see a blue screen with GRUB and a number of options just before going into the OS, then you are using GRUB. If you see something like this, you are using systemd:

This is important because many older guides assume GRUB. If you are using systemd and follow the GRUB instructions, you will not enable the IOMMU support needed for NIC pass-through.
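For those who would rather script the check, here is a minimal sketch that reads the efibootmgr -v output and reports which bootloader the current boot entry points to. The function name classify_bootloader is our own, and the match is only a heuristic on the file name in the entry, so treat an “unknown” result as a prompt to read the output yourself.

```shell
# Classify the active UEFI boot entry from `efibootmgr -v` output on stdin.
# Prints systemd-boot, grub, or unknown. Heuristic only.
# Usage on the Proxmox VE host: efibootmgr -v | classify_bootloader
classify_bootloader() {
    awk '
        # BootCurrent holds the number of the entry the firmware booted from.
        /^BootCurrent:/ { current = $2 }
        # Remember every BootXXXX entry, upper-cased for case-insensitive matching.
        /^Boot[0-9A-Fa-f][0-9A-Fa-f][0-9A-Fa-f][0-9A-Fa-f]/ {
            entries[substr($1, 5, 4)] = toupper($0)
        }
        END {
            line = entries[current]
            if (line ~ /SYSTEMD-BOOT/)  print "systemd-boot"
            else if (line ~ /GRUB/)     print "grub"
            else                        print "unknown"
        }
    '
}
```

The function looks at BootCurrent first because efibootmgr lists every entry stored in firmware, including ones left over from earlier installs.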

Step 3a: Enable IOMMU using GRUB

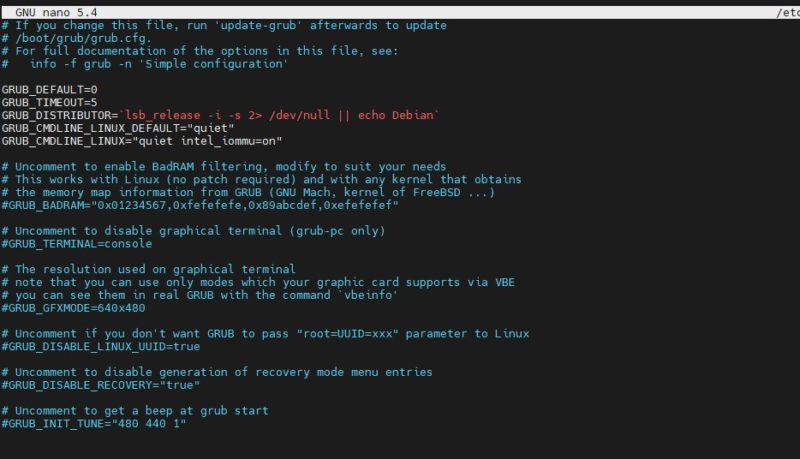

If you have GRUB, and most installations today will, then you will need to edit your configuration file:

nano /etc/default/grub

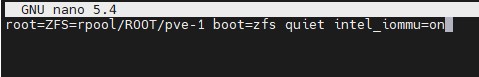

For Intel CPUs, add intel_iommu=on after quiet:

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on"

For AMD CPUs, add amd_iommu=on after quiet:

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on"

Here is a screenshot with the intel line to show you where to put it:

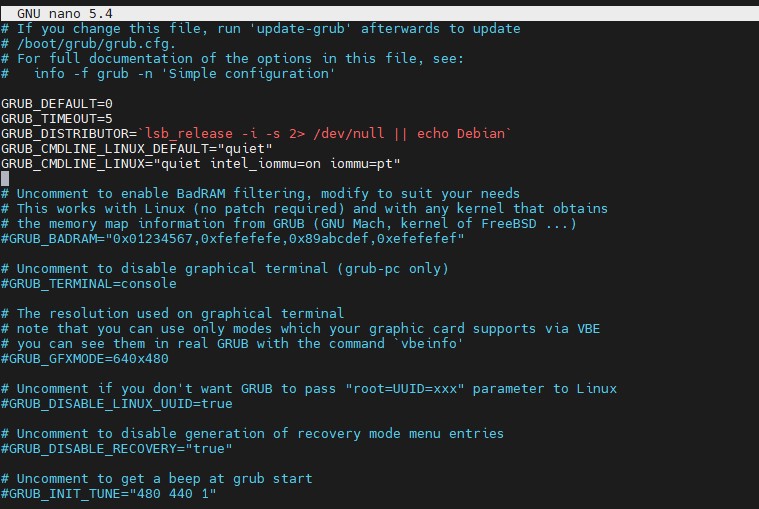

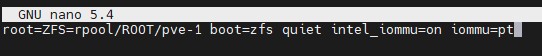

Optionally, one can also add IOMMU PT mode. PT mode improves the performance of other PCIe devices in the system when passthrough is being used. This works on Intel and AMD CPUs, and the parameter is iommu=pt. Here is the AMD version of what would be added, and we will have an Intel screenshot following:

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on iommu=pt"

Here is the screenshot of where this goes:

Remember to save and exit.

Now we need to update GRUB:

update-grub
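If you prefer not to edit the file by hand, the change can be sketched as a small helper. This is only an illustration: add_grub_param is a name we made up, it assumes the GRUB_CMDLINE_LINUX_DEFAULT line is a single double-quoted line as in a stock install, and it leaves a .bak copy next to the file.

```shell
# add_grub_param FILE PARAM
# Appends PARAM inside the quotes of GRUB_CMDLINE_LINUX_DEFAULT,
# unless PARAM is already present as a whole word on that line.
# Example on the host: add_grub_param /etc/default/grub intel_iommu=on
add_grub_param() {
    file=$1
    param=$2
    # Already there (at the start, middle, or end of the value)? Then do nothing.
    if grep -Eq "^GRUB_CMDLINE_LINUX_DEFAULT=\"([^\"]* )?${param}( [^\"]*)?\"" "$file"; then
        return 0
    fi
    # Re-emit the line with PARAM appended; the original is kept as FILE.bak.
    sed -i.bak "s|^GRUB_CMDLINE_LINUX_DEFAULT=\"\(.*\)\"|GRUB_CMDLINE_LINUX_DEFAULT=\"\1 ${param}\"|" "$file"
}
```

Running it twice with the same parameter leaves the file unchanged, which is handy if you are re-running a setup script. You still need update-grub afterwards.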

Now go to Step 4.

Step 3b: Enable IOMMU using systemd

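If in Step 2 you found you were using systemd, then adding bits to GRUB will not work. Instead, edit the kernel command line file. Keep whatever is already in it (such as the root= entry) and keep everything on a single line: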

nano /etc/kernel/cmdline

For Intel CPUs add:

quiet intel_iommu=on

For AMD CPUs add:

quiet amd_iommu=on

Here is a screenshot of where to add this using the Intel version:

Optionally, one can also add IOMMU PT mode. This works on Intel and AMD CPUs, and the parameter is iommu=pt. Here is the AMD version of what would be added, and we will have an Intel screenshot following:

quiet amd_iommu=on iommu=pt

Here is the Intel screenshot:

Now we need to refresh our boot tool.

proxmox-boot-tool refresh
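Since /etc/kernel/cmdline is a single line that already carries the root filesystem options, a scripted edit has to append to that line rather than replace it. Here is a hedged sketch of that idea. The helper name add_cmdline_param is ours, and the sample contents in the comment are what we would expect on a ZFS-root install, not something to copy blindly.

```shell
# add_cmdline_param FILE PARAM
# Appends PARAM to the single-line kernel command line in FILE,
# unless it is already present as a whole word.
# Example on the host: add_cmdline_param /etc/kernel/cmdline intel_iommu=on
# Assumed starting contents on ZFS root: root=ZFS=rpool/ROOT/pve-1 boot=zfs
add_cmdline_param() {
    file=$1
    param=$2
    current=
    # Read only the first line; tolerate a missing trailing newline.
    IFS= read -r current < "$file" || true
    case " $current " in
        *" $param "*) return 0 ;;   # already present, nothing to do
    esac
    # Write the line back with PARAM appended (no leading space if it was empty).
    printf '%s\n' "${current:+$current }$param" > "$file"
}
```

As with the manual edit, proxmox-boot-tool refresh still has to run afterwards for the change to reach the boot entries.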

Now go to Step 4.

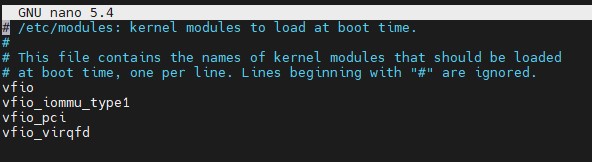

Step 4: Add Modules

Many will immediately reboot after the above is done, and that is probably good practice. Usually, I like to add the modules first just to save time. If you are more conservative, reboot, then do this step. Next, you will want to add modules by editing:

nano /etc/modules

In that file you will want to add the following, one module per line:

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

Here is what it should look like:

Next, you can reboot.
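The /etc/modules edit from this step can also be scripted before that reboot. The sketch below appends each module name on its own line only if it is not already listed. The helper name ensure_modules is ours, and it assumes the file ends with a newline, as a stock file does.

```shell
# ensure_modules FILE MODULE...
# Makes sure every MODULE appears on its own line in FILE.
# Example on the host:
#   ensure_modules /etc/modules vfio vfio_iommu_type1 vfio_pci vfio_virqfd
ensure_modules() {
    file=$1
    shift
    touch "$file"   # create the file if it does not exist yet
    for mod in "$@"; do
        # -x matches the whole line, -F treats the module name literally.
        grep -qxF "$mod" "$file" || printf '%s\n' "$mod" >> "$file"
    done
}
```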

Step 5: Reboot

This is a big enough change that you will want to reboot next. With PVE, a tip we have is to reboot often when setting up the base system. You do not want to spend hours building a configuration then find out it does not boot and you are unsure of why.

We will quickly note that we condensed the above a bit for more modern systems. If something fails in the verify step below, you may want to reboot before adding modules instead, and also not turn on PT mode before rebooting.

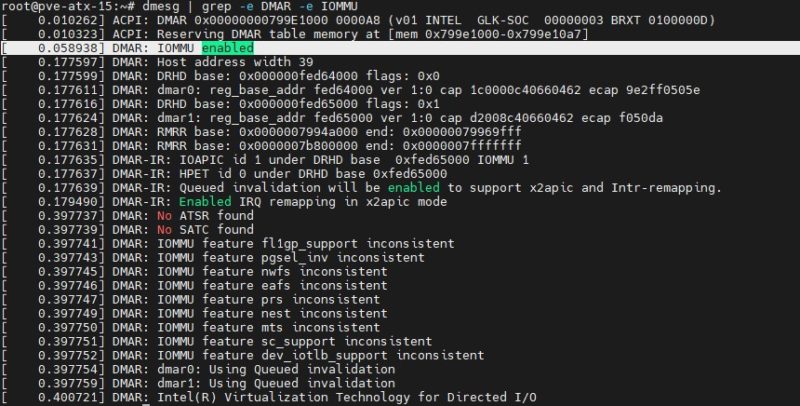

Step 6: Verify Everything is Working

This is the command you will want to use:

dmesg | grep -e DMAR -e IOMMU

Depending on the system, which options you have, and so forth, a lot of the output is going to change here. On Intel systems, what you are looking for is the line highlighted in the screenshot, DMAR: IOMMU enabled. AMD systems log AMD-Vi lines rather than DMAR ones.

If you have that, you are likely in good shape.
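To make the check less of an eyeball exercise, here is a rough sketch that reads kernel log text and reports what it finds. The function name iommu_status and the three labels it prints are our own. The AMD branch only confirms that AMD-Vi lines are present, which is an assumption about what a working AMD system logs, not a guarantee that pass-through will work.

```shell
# Reads kernel log text on stdin and prints one of:
#   intel-enabled   the "DMAR: IOMMU enabled" line is present
#   amd-vi-present  AMD-Vi lines are present (typical on AMD hosts)
#   not-confirmed   neither marker was found
# Usage on the host: dmesg | iommu_status
iommu_status() {
    log=$(cat)
    case $log in
        *'DMAR: IOMMU enabled'*) echo "intel-enabled" ;;
        *'AMD-Vi:'*)             echo "amd-vi-present" ;;
        *)                       echo "not-confirmed" ;;
    esac
}
```

An Intel log that shows only DMAR table and DMAR-IR lines, without the DMAR: IOMMU enabled line, lands in not-confirmed. That usually points back to the kernel parameter from Step 3 not being picked up.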

Step 7: Configure Proxmox VE VMs to Use NICs

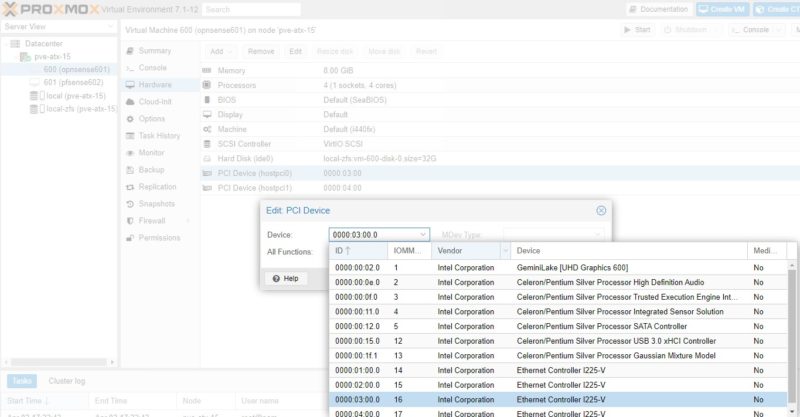

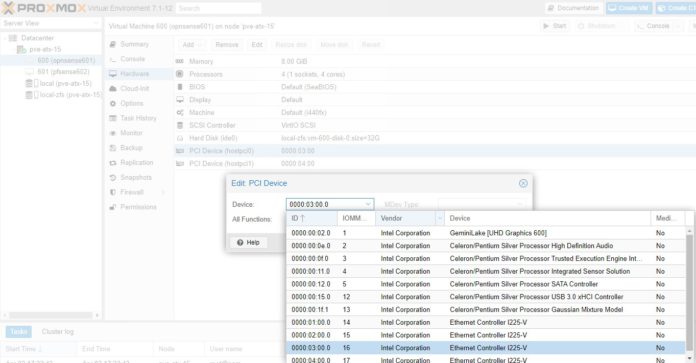

For this, we are using a little box very similar to the Inexpensive 4x 2.5GbE Fanless Router Firewall Box Review. It is essentially the same, just a different version of that box. One of the nice features is that each NIC is its own i225-V and we can pass through each individual NIC to a VM. Here is a screenshot from an upcoming video we have:

In the old days, adding a pass-through NIC to a VM was done via CLI editing. Now, Proxmox pulls the PCIe device ID along with the device vendor and name. This makes it very easy to pick NICs in a system. One nice point about many onboard NICs is that the physical order in which they are labeled on the system usually matches sequential MAC addresses and PCIe IDs. In the above, 0000:01:00.0 is the first NIC (ETH0). The device 0000:02:00.0 is the second, and so forth.

At this point, you are already done. You can see we have this working on both OPNsense and pfSense and the process is very similar. The nice thing is that by doing this, pfSense/ OPNsense have direct access to the NICs instead of using a virtualized NIC device.
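For those who still prefer the CLI, the GUI action should correspond to a hostpci entry on the VM, which qm set can add. The sketch below only prints the command after a basic sanity check on the PCIe address, so nothing changes until you run the printed line yourself. The helper name hostpci_command is ours, and VM 600 and 0000:01:00.0 are simply the values used in this article.

```shell
# hostpci_command VMID SLOT ADDRESS
# Prints the qm command that would attach PCIe device ADDRESS
# (domain:bus:device.function, lowercase hex) to VMID as hostpciSLOT.
# This is a dry run: it only prints, it does not call qm.
hostpci_command() {
    vmid=$1
    slot=$2
    addr=$3
    case $addr in
        [0-9a-f][0-9a-f][0-9a-f][0-9a-f]:[0-9a-f][0-9a-f]:[0-9a-f][0-9a-f].[0-7]) ;;
        *) echo "unexpected PCI address: $addr" >&2; return 1 ;;
    esac
    printf 'qm set %s -hostpci%s %s\n' "$vmid" "$slot" "$addr"
}

# First NIC (ETH0) to the pfSense VM from this article:
hostpci_command 600 0 0000:01:00.0
```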

A Few Notes on IOMMU with pfSense and OPNsense

After these NICs are assigned there are a few key considerations that are important to keep in mind:

- Using a pass-through NIC will make it so the VM will not live migrate. If a VM expects a physical NIC at a PCIe location, and it does not get it, that will be an issue.

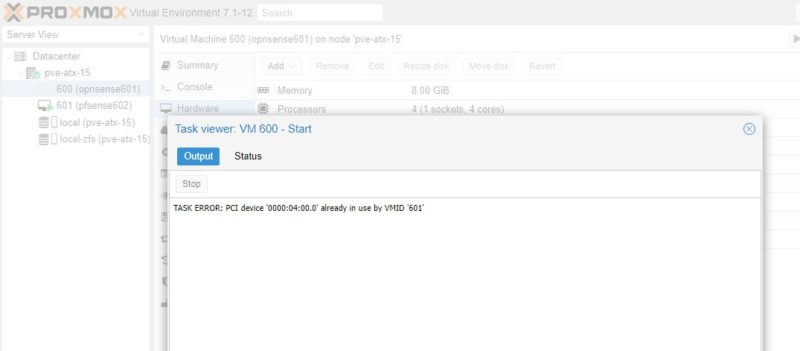

- Conceptually, there is a more advanced feature called SR-IOV that allows you to pass through a NIC to multiple VMs. For the lower-end i210 and i225-V NICs that we commonly see in pfSense and OPNsense appliances, you will be dedicating the NIC to the VM. That means another VM cannot use the NIC. Here is an example where we have the pfSense VM (600) using a NIC that is also assigned to the OPNsense VM. We get an error trying to start OPNsense. The Proxmox VE GUI will allow you to configure pass-through on both VMs if they are off, but only one can be on and active with the dedicated NIC at a time.

- Older hardware may not have IOMMU capabilities. Newer hardware has both IOMMU and ACS, so most newer platforms make it easy to separate PCIe devices and dedicate them to VMs. On older hardware, sometimes how PCIe devices are grouped causes issues if you want to, as in this example, pass-through NICs separately to different VMs.

- You can utilize both virtual NICs on bridges along with dedicated pass-through NICs in the same VM.

- At 1GbE speeds, pass-through is not as big of a difference compared to using virtualized NICs. At 25GbE/ 100GbE speeds, it becomes a very large difference.

- When we discuss DPUs, one of the key differences is that the DPU can handle features like bridging virtual network ports to physical high-speed ports and that happens all on the DPU rather than the host CPU.

- This is an area where it takes longer to set up than a bare-metal installation, and it adds complexity to a pfSense or OPNsense installation. The benefit one gets is that doing things like reboots is usually much faster in the virtual machine. One can also snapshot the pfSense or OPNsense image in the event one makes a breaking change.

- We suggest having at least one more NIC in the system for Proxmox VE management and other VM features. If one uses pass-through for all NICs to firewall VMs, then there will not be a system NIC.

This is probably not exhaustive, but hopefully, this helps.
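On the grouping point in the notes above, the kernel exposes IOMMU groups under /sys/kernel/iommu_groups, and a short loop is enough to see which devices share a group. This is a minimal sketch with a function name of our own choosing. The optional argument exists only so the function can be pointed at a different directory for testing.

```shell
# list_iommu_groups [ROOT]
# Prints one "group N: ADDRESS" line per PCIe device.
# ROOT defaults to the kernel's sysfs location.
list_iommu_groups() {
    root=${1:-/sys/kernel/iommu_groups}
    for dev in "$root"/*/devices/*; do
        [ -e "$dev" ] || continue   # nothing matched: no groups exist
        group=${dev#"$root"/}       # strip the leading path
        group=${group%%/*}          # keep only the group number
        printf 'group %s: %s\n' "$group" "${dev##*/}"
    done
}
```

If two NICs you want to hand to different VMs show up in the same group, that is the older-hardware grouping issue described above. Empty output suggests IOMMU is not active at all.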

Final Words

This is a quick guide to setting up a PCIe pass-through NIC on Proxmox VE for when you are virtualizing pfSense, OPNsense, or another solution. It is geared more towards newer hardware, made since roughly 2017 to 2020, so if you have an older system, there may be more tweaking required. VT-d used to be a feature that companies like Intel used to segment their chips heavily across markets, but most chips support it these days.

If there are any other tricks you feel should be added, feel free to use the comments section or the STH forums.

TLDR: unless you require direct access to the hardware e.g. if you are intending to use DPDK inside your VM, doing this is probably no longer necessary.

So it is worth mentioning here that tying a particular VM to a particular piece of hardware breaks the notion of seamlessly migrating VMs throughout the PVE cluster.

I have benchmarked 10 Gigabits per second throughput to my OPNsense VMs using the paravirtualised virtio network drivers and the modern UEFI BIOS, NOT the old Intel 440 type of VM emulation.

Don’t mention it.

;-)

I was a little surprised to read this article, as I’m running Proxmox 7.1 across a mix of 1st gen Threadripper and Epyc Milan servers, hadn’t done this setup, but had been able to assign PCIE devices without issue to my VMs.

(To clarify, I had completed the bios setup step one!)

Since I hadn’t brought one of my nodes into production yet, I thought I’d be on the safe side and followed the instructions above, ran the final “dmesg | grep -e DMAR -e IOMMU” command, compared them to the output on one of the identical servers I hadn’t configured, and the output is completely the same.

In short, for reasonably current AMD kit on a UEFI systemd boot, these steps might now be redundant.

Command output is as follows:

# dmesg | grep -e DMAR -e IOMMU

[ 0.293797] pci 0000:00:00.2: AMD-Vi: IOMMU performance counters supported

[ 0.293822] pci 0000:40:00.2: AMD-Vi: IOMMU performance counters supported

[ 0.295291] pci 0000:00:00.2: AMD-Vi: Found IOMMU cap 0x40

[ 0.295297] pci 0000:40:00.2: AMD-Vi: Found IOMMU cap 0x40

[ 0.296166] perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).

[ 0.296218] perf/amd_iommu: Detected AMD IOMMU #1 (2 banks, 4 counters/bank).

Note for GRUB: additional options should go in GRUB_CMDLINE_LINUX_DEFAULT, as they are effective for a normal boot (i.e. not recovery mode), whereas GRUB_CMDLINE_LINUX is for options that are always active (normal and recovery).

Make sure your motherboard BIOS supports “ACS”. You will want to turn this on before you start using PCI passthrough. This will resolve a lot of headaches for you.

Can you provide a tutorial on how to do the PVE MGT, PVE LAN (Pass thru means?), and FW LAN and FW WAN setup? I am new to virtualization, and this subject is perplexing to me. I am still learning Proxmox. Can you point me to a good resource if not?

“tutorial how to do the PVE MGT, PVE LAN (Pass thru means?), and FW LAN and FW WAN ”

Would love this as well

Hi Correy,

I agree. Patrick left out the details, and didn’t specify the PVE network, and the whole scheme of the pfsense system. I posted the details in the forum. Hoping Patrick and STH team can clarify the setting.

https://forums.servethehome.com/index.php?threads/how-to-pass-through-pcie-nics-with-proxmox-ve-on-intel-and-amd.36087/

Is it possible to add a network card to this device?

I have a J4125 unit running latest Proxmox and OPNSense as a VM – seems to work well but I have issues trying to reach the Proxmox IP from within the private network – I explained this in the forums but got no real help: https://forums.servethehome.com/index.php?threads/how-to-pass-through-pcie-nics-with-proxmox-ve-on-intel-and-amd.36087/post-339203

The Proxmox is on the vmbr0 bridged interface which is also configured as a vswitch in OPNsense . . .

Sometimes I can reach the Ip, sometimes not – not sure if it’s a routing issue or what?!?

I have a new AM5 system and enabled every possible virtualization setting in the BIOS. It also seems like the OS has changed. I just installed Proxmox 7.3 and do not have a modules file, so I created one, but it seems like it should exist. Also, there is no update-grub option. When the system starts, it is using GRUB. Also, the dmesg command returns zero DMAR entries at all.

One thing I haven’t seen mentioned is the Proxmox machine_type setting. I tried these instructions to setup a Topton box (i226 NICs) with an OpenBSD VM, and it didn’t work until I switched the “Machine” hardware setting from the default “i440fx” to “q35”

Without that, on the OpenBSD side I’d get messages saying “not enough msi-x vectors” and “unable to map msi-x vector 0”

“The screenshot of where this goes” does not match your description in the text.

Your text says that I should modify GRUB_CMDLINE_LINUX_DEFAULT, whereas the screenshot has this info just in GRUB_CMDLINE_LINUX…

So what is correct? Please provide a consistent description.

Thanks,

Volker

Hi,

I have followed instructions here (and via the proxmox website) but still cannot seem to get IOMMU enabled. Please can you help!?

I have IOMMU enabled on my motherboard, in addition to VT-x and VT-d being enabled.

But still when trying to add PCI devices to a VM it says no IOMMU is detected.

Running dmesg to check also shows no line saying it’s enabled (see below).

What am I doing wrong or missing??

root@pve:~# dmesg | grep -e DMAR -e IOMMU

[ 0.011902] ACPI: DMAR 0x00000000798E6000 000070 (v01 INTEL EDK2 00000002 01000013)

[ 0.011921] ACPI: Reserving DMAR table memory at [mem 0x798e6000-0x798e606f]

[ 0.113151] DMAR: Host address width 39

[ 0.113152] DMAR: DRHD base: 0x000000fed91000 flags: 0x1

[ 0.113156] DMAR: dmar0: reg_base_addr fed91000 ver 1:0 cap d2008c40660462 ecap f050da

[ 0.113158] DMAR: RMRR base: 0x0000007a292000 end: 0x0000007a4dbfff

[ 0.113160] DMAR-IR: IOAPIC id 2 under DRHD base 0xfed91000 IOMMU 0

[ 0.113161] DMAR-IR: HPET id 0 under DRHD base 0xfed91000

[ 0.113162] DMAR-IR: Queued invalidation will be enabled to support x2apic and Intr-remapping.

[ 0.114639] DMAR-IR: Enabled IRQ remapping in x2apic mode

Great tutorial, worked perfectly.

Thanks for taking the time.

the easiest command to determine which bootloader you are using is:

ps --no-headers -o comm 1

this returns systemd or grub

Hi,

thanks, but in steps 3, the lines in the screenshots differ from what you are writing in the text.

Then, step 6 is missing, but step 7 is there twice :)

Best regards

Michael

This may not be current for latest Proxmox VE 8. My AMD setup already had IOMMU setup with none of these extra configs existing and the check for whether it is enabled does not work for AMD. Other docs say IOMMU is enabled by default for AMD and to check using ‘journalctl -b 0 | grep -i iommu’