Today we have some fairly interesting news. NVIDIA released a new benchmark with its BlueField-2 DPUs. The results may be shocking to some folks, and we will try to get into why. Note: We held this story pending comment from NVIDIA and will update when we hear back.

New NVIDIA BlueField-2 DPU NVMe-oF Performance Faster than Optane SSDs

We are going to use the official release here as a reference. In that release, NVIDIA sad that it had achieved:

The 41.5 million IOPS reached by BlueField is more than 4x the previous world record of 10 million IOPS, set using proprietary storage offerings. (Source: NVIDIA)

Likely this was referencing Fungible’s 10M IOPS claim although NVIDIA did not call this out specifically. If you want to learn more about the Fungible DPU solution Fungible DPU-based Storage Cluster Hands-on Look, and you can see it there hitting 10M IOPS even in our cover image:

Getting back to the NVIDIA configuration:

This performance was achieved by connecting two fast Hewlett Packard Enterprise Proliant DL380 Gen 10 Plus servers, one as the application server (storage initiator) and one as the storage system (storage target).

Each server had two Intel “Ice Lake” Xeon Platinum 8380 CPUs clocked at 2.3GHz, giving 160 hyperthreaded cores per server, along with 512GB of DRAM, 120MB of L3 cache (60MB per socket) and a PCIe Gen4 bus.

To accelerate networking and NVMe-oF, each server was configured with two NVIDIA BlueField-2 P-series DPU cards, each with two 100Gb Ethernet network ports, resulting in four network ports and 400Gb/s wire bandwidth between initiator and target, connected back-to-back using NVIDIA LinkX 100GbE Direct-Attach Copper (DAC) passive cables. Both servers had Red Hat Enterprise Linux (RHEL) version 8.3. (Source: NVIDIA)

A few quick notes here:

- The P-series DPU is the company’s performance DPU. The DPUs you have seen on STH are slower-clocked but lower-power E-series DPUs that do not require external power connectors.

- NVIDIA did not call out the storage it was using so we do not know what the storage configuration was. More on that in a bit.

- The HPE ProLiant DL380 Gen10 Plus servers have 24-bay SFF front panels for storage. This is the 3rd Generation Intel Xeon Scalable Ice Lake update to the DL380 that we looked at the Made in the US version of in our HPE ProLiant DL380T Gen10 Trusted Supply Chain Server Teardown.

HPE is currently selling a mix of Ice Lake and Cascade Lake platforms. The higher-end platforms have the Ice Lake refresh while the lower-end platforms do not. We asked HPE about this a few months ago and we got a response along the lines of the market feels the 2nd Gen Xeon Scalable parts are OK for the lower cost segments.

With that, let us get to what NVIDIA did:

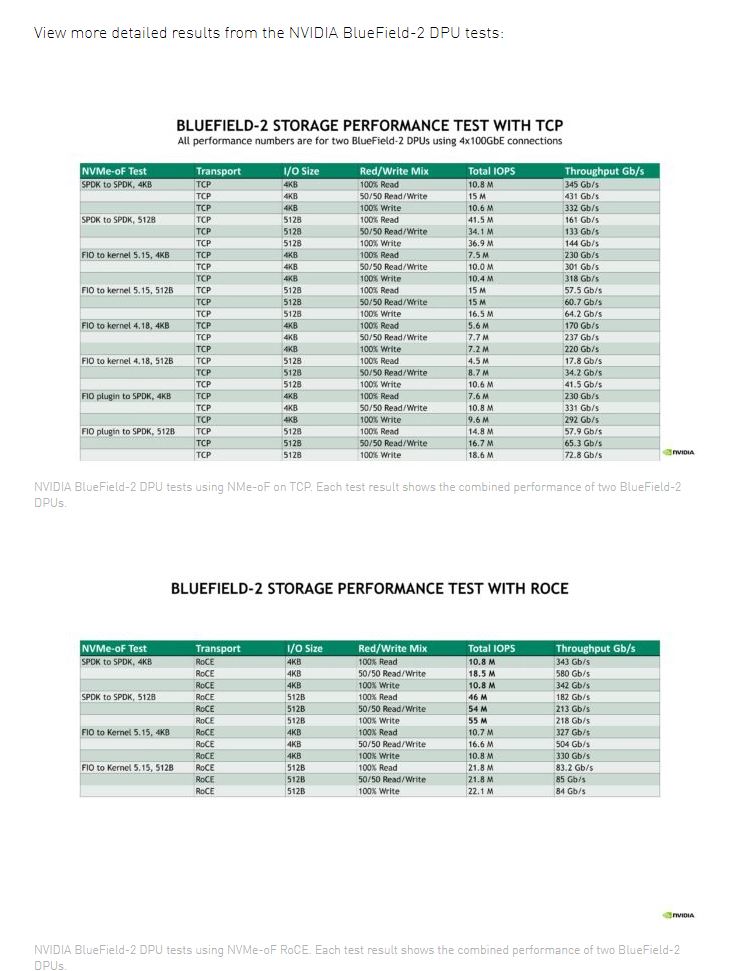

The NVMe-oF storage protocol was tested with both TCP and RoCE at the network transport layer. Each configuration was tested with 100 percent read, 100 percent write and 50/50 read/write workloads with full bidirectional network utilization.

Our testing also revealed the following performance characteristics of the BlueField DPU:

- Testing with smaller 512B I/O sizes resulted in higher IOPS but lower-than-line-rate throughput, while 4KB I/O sizes resulted in higher throughput but lower IOPS numbers.

- 100 percent read and 100 percent write workloads provided similar IOPS and throughput, while 50/50 mixed read/write workloads produced higher performance by using both directions of the network connection simultaneously.

- Using SPDK resulted in higher performance than kernel-space software, but at the cost of higher server CPU utilization, which is expected behavior, since SPDK runs in user space with constant polling.

- The newer Linux 5.15 kernel performed better than the 4.18 kernel due to storage improvements added regularly by the Linux community. (Source: NVIDIA)

Many drives are optimized for 4K reads/ writes these days. There are drives that also have good sub-4K performance like 512B performance. A good example of this is the Intel Optane P5800X that is basically the highest performing drive one can get today. Intel quotes ~1.5M 4K random read IOPS and in our testing, they are usually slightly conservative. Intel also suggests it can hit 2M IOPS in a 70/30 mixed workload scenario. The P5800X also has the ability to do 512B at up to 5M IOPS. (Source: Intel updated from the original 4.6M 512B RR IOPS in its original release below.)

We will note that NVIDIA did not specify in its release the storage medium nor the block size at 41.5M IOPS. Given the language below, it seems like NVIDIA must be using 512B and mixed workloads.

While the 41.5M IOPS may seem like a good number, but it lacks context as to how staggering that figure is. Let us put it into the context of the HPE DL380 Gen10+. Assuming 24 NVMe SSDs:

- NVIDIA is using 128 PCIe Gen4 lanes in the system (24*4 for the SSDs, 16×2 for the DPUs)

- Each SSD would need to put out around 1.73M 4K IOPS (41.5/24) to get to 41.5M, but it seems that NVIDIA is likely using 512B and mixed workloads instead.

- That 1.73M 4K IOPS is above the 1.5M rated read or write 4K IOPS for a P5800X 1.6TB, the fastest P5800X. There are some drives that claim slightly higher, but this is a PCIe Gen4 x4 limitation issue and that is why the P5800X is such a high-end drive. To get around the PCIe limitation, the P5800X can hit 2M IOPS in a mixed 4K use case or 5M IOPS in a mixed 512B use case.

- In the HPE server, the NVIDIA BlueField-2 DPUs are limited to PCIe Gen4 x16 connections each, and 2x100GbE is technically slower than the maximum PCIe bandwidth. We would expect the performance to be, therefore, lower than what eight PCIe Gen4 x4 NVMe SSDs can put out.

- NVIDIA, however, is achieving 41.5M IOPS, over this 100GbE fabric, which is faster than the rated P5800X speed at 5M 512B mixed IOPS times eight drives (8x PCIe Gen4 drives =32 PCIe lanes.)

NVIDIA’s announcement is very exciting. The team there seems to be delivering more performance per 32 lanes of PCIe Gen4, going through 100GbE interfaces than Intel is able to achieve with 8x P5800X NVMe SSDs.

In other words, NVIDIA’s solution is hitting NVMe-oF speeds with BlueField-2 faster than 3D XPoint on NVMe natively in a local machine using the same number of PCIe Gen4 lanes.

Final Words

Of course, we do not know the storage configuration that NVIDIA was using. It could have been using Optane DIMMs as we covered in Glorious Complexity of Intel Optane DIMMs.

NVIDIA can also be using a RAM disk or something like that (either on the host and/or on the DPUs themselves.) What is absolutely awesome though is that the NVIDIA BlueField-2 is able to push over 32x PCIe Gen4 lanes and then four (slower) 100GbE paths more data than Intel is able to push natively from eight Optane P5800X SSDs on 32x PCIe lanes, excluding the network links.

This is absolutely shocking performance from the NVIDIA BlueField-2 DPU team.

Update 2021-12-21 #1: NVIDIA told us they were not actually storing data, as Fungible showed in our 2020 demo. This was simply using null devices. We are seeking clarification if it is all going back to the host systems or stopping partially/ fully on the DPU itself. If not all of the I/O is going back to the host system, then the PCIe lanes are irrelevant here.

Update 2021-12-21 #2: NVIDIA confirmed via a phone call with Patrick that this is not going to storage, but is going to the host Xeons.

Update 2021-12-21 #3: Based on our feedback, NVIDIA added these tables for performance to the blog post. They confirmed that they were using /dev/null, not actual storage. Also, when asked about how much of the BlueField-2 was being used versus using the ConnectX IP NVIDIA said they would get back to us on that. The BlueField-2 DPUs can act either with the Arm core complex as a bump in the wire or the host system can run through the ConnectX IP without going through the Arm complex. If the latter is the case, then the DPU is functioning basically as a ConnectX part for this test. NVIDIA is confirming what they did here.

2021-12-21 Update #4: NVIDIA confirmed the Arm core complex on the DPUs was not used so this just followed the host networking path.

Uh, STH it doesn’t work like that.

You don’t get more performance by running NVMe over TCP or NVMe-oF than local.

You get lower performance going PCIe G4 x16 to 2x 200G

They’ve got to be using RAM disks and almost certainly on not just the HPE servers but on the DPUs too to get around PCIe limits. Even then they’re still stuck with the 100G links

I’m just saying, its BS and you need to call NVIDIA out on it. Even if that’s what they’re doing to compare it to 10M IOPS of a storage solution that has non-volatile storage it is using is not OK

Every other site just posted the BS number. STH put thought into it. That’s why STH is so great.

I read a story on another site earlier about this. I may as well just wait for STH. There’s only one enterprise storage and networking site that isn’t just PR reposting garbage now. Thanks Cliff for not just doing a wholesale repost.

NVIDIA you need to up your game or STH’ll find ya.

You don’t have 160 hyperthreaded cores on a dual Xeon 8380, you have 80 hyperthreaded cores or 160 hyperthreads.

Statements that aren’t shocking

Grass is green

Nvidia’s logo is green

Nvidia cheated on a competitive benchmark

Same ol’ same ol’

Leave it to the green team to pretend like they’re breaking storage records doing a networking test.

/dev/null for storage. So it is actually a networking speed test.

I gotta ask also, what is the prive tag for all that DPU gubbins? I have been faithfully following along as STH’s people explain this idea, but it still looks like a solution looking for a problem

And, Admin in NYC is right also. NVMe over Fabrics using RDMA built in to, say, Infiniband native wire protocol kicks the crap out of NVMe over Fabrics over TCP. I’ve tested this on my modest QDR Infiniband network, but honestly it should be obvious: there is a cost to de/encapsulating into TCP, and then traversing the TCPIP stack.

—

Unless this is what DPUs are for? Are they used to accelerate the crap out a rather dumb NVMe to TCP encapsulation? Is that what the DPU makers are actually selling?

This is complete BS. It is basically a ConnectX networking test. DPU arm-cores can’t be used as it is not acting as a root complex so all PCIe access to NVME would need to be via target’s host OS/processor. Either way it doesn’t matter for null devices as STH figured out.

So an accelerated networked null device? Color me impressed…

.

I think the title needs adjusting based on the clarified information. Also, now I’m left simply curious what its performance would *actually* be reading and writing to storage. Maybe sth can test it with the same hardware setup and give the world the real numbers