An often-asked question is whether one should use an Amazon EC2 t1.micro instance or a VMware ESXi 5.0 server at home. For this test I decided to use the Linux 64-bit version of Geekbench by Primate Labs, a fairly popular benchmark that does a decent job of quickly profiling the performance of environments. I had planned to do this piece using an older version of Geekbench a few months ago, but I saw some anomalies, with performance well below what I expected. I took another look four months later and I think the results are more in line with what I was expecting.

Here is the big question for many users: should I build my own server using ESXi 5.0 or use the Amazon cloud for development and testing work? I think that there are a few really good reasons to go with the Amazon EC2 cloud over a build-your-own approach: moving things into production is much easier, and Amazon is building a fairly robust infrastructure behind its cloud offerings that does allow massive scale-out. A lot of people think that anything in the cloud must be faster, even if they are paying $0.02/hour or $0.48/day. For one or two development instances, Amazon’s EC2 offering is very compelling. For those wondering, this site has been running in the Amazon EC2 cloud for about a year now and I do maintain one development instance for testing.

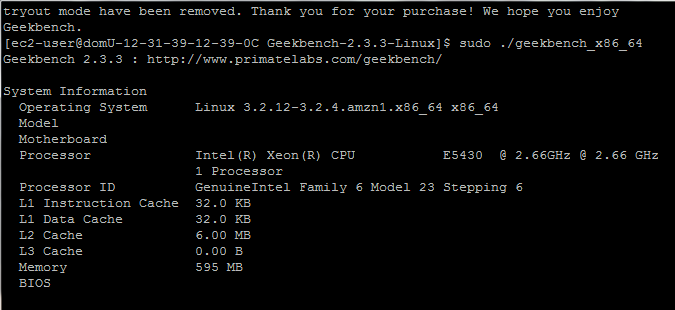

I started out this test by looking at what kind of hardware my EC2 t1.micro instance in the US EAST region was running on, and saw an Intel Xeon E5430, a 2.66GHz LGA 771 CPU that first appeared in late 2007.

To double-check Geekbench’s output, I ran cat /proc/cpuinfo to see what was under the hood of the t1.micro instance:

[ec2-user@domU Geekbench-2.3.3-Linux]$ sudo cat /proc/cpuinfo

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 23

model name : Intel(R) Xeon(R) CPU E5430 @ 2.66GHz

stepping : 6

microcode : 0x60c

cpu MHz : 2659.998

cache size : 6144 KB

fpu : yes

fpu_exception : yes

cpuid level : 10

wp : yes

flags : fpu tsc msr pae cx8 cmov pat pse36 clflush dts mmx fxsr sse sse2 ss ht pbe syscall nx lm constant_tsc up arch_perfmon pebs bts rep_good nopl aperfmperf pni dtes64 monitor ds_cpl vmx est tm2 ssse3 cx16 xtpr pdcm dca sse4_1 lahf_lm dts tpr_shadow vnmi flexpriority

bogomips : 5319.99

clflush size : 64

cache_alignment : 64

address sizes : 38 bits physical, 48 bits virtual
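For anyone who wants to pull the same fields out programmatically, here is a minimal Python sketch. It assumes the usual `key : value` layout of /proc/cpuinfo, with a blank line between processor entries. The `SAMPLE` text is a few lines copied from the output above, and the function names `parse_cpuinfo` and `summarize` are my own illustrative choices, not part of any tool used in this test.

```python
# A few lines copied from the t1.micro output above, used as stand-in input.
SAMPLE = """\
processor : 0
vendor_id : GenuineIntel
model name : Intel(R) Xeon(R) CPU E5430 @ 2.66GHz
cpu MHz : 2659.998
cache size : 6144 KB
"""


def parse_cpuinfo(text):
    """Parse /proc/cpuinfo-style text into a list of dicts, one per processor."""
    cpus, current = [], {}
    for line in text.splitlines():
        if not line.strip():
            # A blank line closes the current processor entry.
            if current:
                cpus.append(current)
                current = {}
            continue
        # Split on the first colon only; values may contain further colons.
        key, sep, value = line.partition(":")
        if sep:
            current[key.strip()] = value.strip()
    if current:
        cpus.append(current)
    return cpus


def summarize(cpus):
    """Return one short description line per processor entry."""
    return [
        f"cpu {c.get('processor', '?')}: "
        f"{c.get('model name', 'unknown')} ({c.get('cpu MHz', '?')} MHz)"
        for c in cpus
    ]


for line in summarize(parse_cpuinfo(SAMPLE)):
    print(line)
```

On a Linux host, the contents of /proc/cpuinfo can be read from the file and passed to `parse_cpuinfo` in place of `SAMPLE`.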

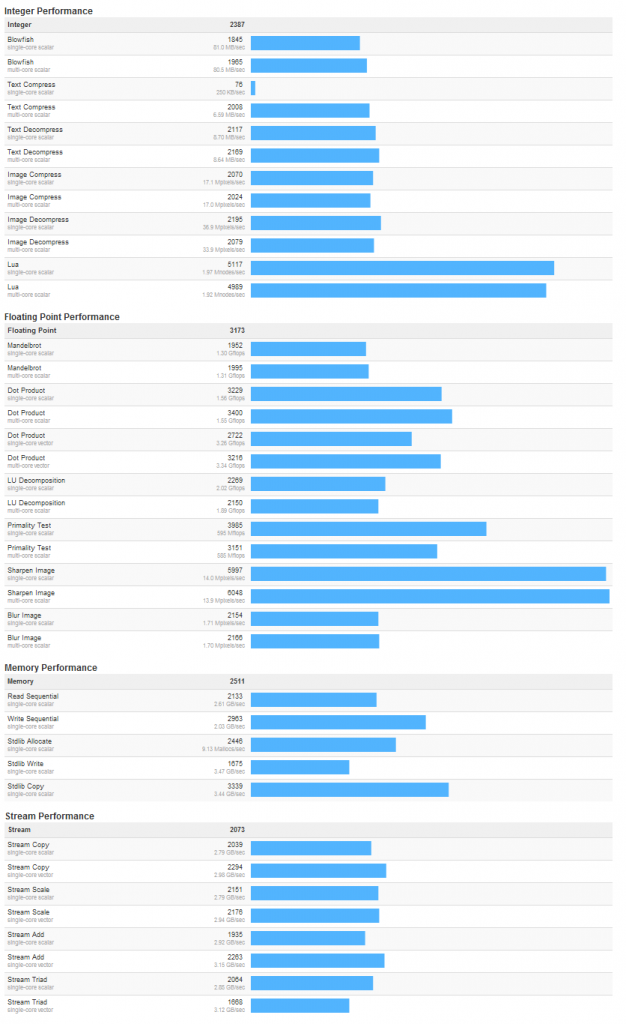

As for performance of the Amazon EC2 t1.micro instance, the benchmarks are much improved over the first time I ran the tests in March:

For the results in an easier-to-read online viewer, one can see my latest Amazon EC2 t1.micro instance results here. Of course, the question is: how does this compare to something you could have at home?

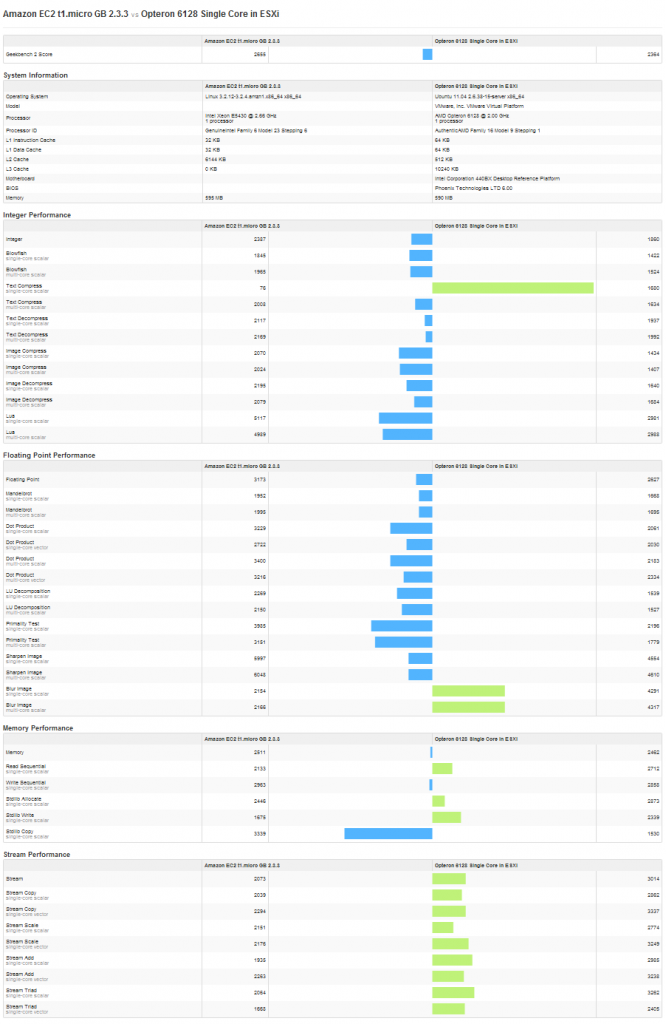

Since this is a budget instance, I decided to pit it against the $1,000 ESXi 5.0 dual Opteron 6128 machine. Now this was clearly not going to be a fair fight: an instance running 24×7 would cost about $175 per year, not including storage, against a $1,200 server. Plus, the AMD Opteron 6128 is just over half the age of the Intel Xeon E5430. Still, we do know that Amazon does not give its AWS customers a full Intel Xeon E5430 core for $0.02/hour. And even with power and a few other goodies thrown in (such as massive storage for snapshots), the cost of this server is much lower than eight Amazon EC2 t1.micro instances. Here are the quick specs:

- CPUs: 2x AMD Opteron 6128

- Motherboard: Supermicro H8DG6-F

- Memory: 8x 4GB Kingston unbuffered ECC 1333MHz DIMMs

- SSD: OCZ Vertex 3 120GB

- Power Supply: Corsair AX650 650W 80 Plus Gold

- Chassis: Norco RPC-450B – $80

- Cooling: 2x Dynatron A1 G34 Coolers

A bit of shopping for used components is required to keep the cost below $1,200, but as can be seen in the dual Opteron 6128 link above, my costs were under $1,200. Now, how does the performance of a single Opteron 6128 core compare with the Amazon EC2 t1.micro instance?

For a comparison on the Geekbench site, see here, but we are going to look at the relative performance differences. The EC2 t1.micro instance is faster in the majority of CPU benchmarks. In terms of memory performance, the single AMD Opteron Magny-Cours 2.0GHz core is quite a bit faster than the Amazon EC2 t1.micro instance’s Intel Xeon E5430 platform. That makes sense, because the Intel Xeon platform is an older generation with dual-channel memory versus the AMD Opteron’s quad-channel memory controller.

I will be looking at quite a few more instances in the near future, but judging by the bottom-tier Amazon EC2 t1.micro instance’s benchmark results, performance is actually fairly close between the t1.micro instance and a single AMD Opteron 6128 core. If we were to call these about even, then the break-even point of running multiple t1.micro instances versus a low-cost VMware ESXi development box is somewhere in the range of eight EC2 t1.micro instances always on for a year.
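The break-even arithmetic can be sketched in a few lines of Python. The $0.02/hour rate and the $1,200 server cost come from the figures above. The $200/year power figure is purely a hypothetical placeholder, since I did not measure the server's draw for this piece. The function names are illustrative only.

```python
import math

HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def ec2_annual_cost(hourly_rate, instances=1):
    """Yearly cost of always-on instances at a flat hourly rate (no storage)."""
    return hourly_rate * HOURS_PER_YEAR * instances


def break_even_instances(server_cost, hourly_rate, annual_power_cost=0.0, years=1):
    """Smallest number of always-on instances whose EC2 bill over `years`
    meets or exceeds the home server's purchase price plus power."""
    home_total = server_cost + annual_power_cost * years
    per_instance = ec2_annual_cost(hourly_rate) * years
    return math.ceil(home_total / per_instance)


# Figures from the article: $0.02/hour t1.micro, $1,200 server.
print(f"One t1.micro for a year: ${ec2_annual_cost(0.02):.2f}")
print("Break-even, hardware only:", break_even_instances(1200, 0.02))

# ASSUMPTION: $200/year for power is a made-up placeholder, not a measurement.
print("Break-even with assumed power:",
      break_even_instances(1200, 0.02, annual_power_cost=200))
```

Under these assumptions the hardware alone is matched by seven always-on instances in the first year, and adding the hypothetical power bill pushes that to eight. Storage, bandwidth and one's own time are left out on both sides.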

Amazon EC2 benchmarks now?!? Freaking great work bro. You guys rock.

Nice results. Are you going to benchmark the EC2 small instance?

Excellent article Patrick. There are too few good EC2 benchmarks on the web so your analysis is much appreciated.

Your research was mostly focused on CPU and memory benchmarks of course, but you did make a claim at the end about “breakeven”, which I interpreted as meaning total cost of ownership (TCO). You stated that EC2 micro instances were cheaper to run than at-home hardware until you were up to around eight VMs. I’ve run the numbers for my own purposes and came to somewhat different conclusions: By my math, EC2 is usually not the cheapest way to buy 24×7 server time.

Imagine you had some light workload that could be run at home or on EC2 – say a web server. You might be tempted to use a small workstation or rack mount server drawing 100 watts, but that would probably be a mistake from a TCO point of view. True, a continuous 100 watts of San Francisco Bay electricity would run $192 per year, more expensive than an EC2 micro instance even without amortizing the hardware. But then again you wouldn’t do that if you were trying to minimize cost. Spend $400 on an ultra-low-power computer drawing 10 watts (as I did) and your electricity costs drop to $19/year. Add in four-year amortization of the hardware and you are still only spending $125/year – far less than EC2.

If you need two small 24×7 servers then buy two of these new low-power devices, etc. At some number of VMs it may become economical to switch to a big VM machine like your Opteron for even more savings. The key point is that EC2 won’t ever be cheaper for this type of workload.

So does EC2 ever make sense? Absolutely. If instead of 24×7 you need a server ten hours per day then EC2 becomes cheaper than any low power server. If you need your CPU horsepower for a few hours every few days then EC2 is the only choice worth considering.
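The arithmetic in this comment can be checked with a short Python sketch. The electricity rate is not stated directly, so it is back-derived here from the "$192 per year for 100 watts" figure, on the assumption of a flat rate. The $0.02/hour EC2 price is the t1.micro rate from the article, and the function names are illustrative only.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours

# ASSUMPTION: a flat electricity rate implied by "$192/year for 100 watts".
RATE_PER_KWH = 192 / (0.1 * HOURS_PER_YEAR)


def annual_power_cost(watts, dollars_per_kwh):
    """Yearly electricity cost of a device drawing `watts` continuously."""
    return watts / 1000 * HOURS_PER_YEAR * dollars_per_kwh


def home_annual_cost(hardware_cost, amortization_years, watts, dollars_per_kwh):
    """Straight-line hardware amortization plus continuous power."""
    return hardware_cost / amortization_years + annual_power_cost(watts, dollars_per_kwh)


def ec2_annual_cost(hourly_rate, hours_per_day=24):
    """Yearly EC2 cost for an instance running `hours_per_day` every day."""
    return hourly_rate * hours_per_day * 365


low_power = home_annual_cost(400, 4, 10, RATE_PER_KWH)
print(f"Implied rate: ${RATE_PER_KWH:.3f}/kWh")
print(f"10 W box, 4-year amortization: ${low_power:.2f}/year")
print(f"t1.micro, 24 hours/day: ${ec2_annual_cost(0.02):.2f}/year")
print(f"t1.micro, 10 hours/day: ${ec2_annual_cost(0.02, 10):.2f}/year")
```

With these inputs the low-power box comes out at roughly $119 per year, in the same range as the $125 quoted above. That is below an always-on t1.micro but above one that runs only ten hours per day, which matches the comment's conclusion.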

In the spirit of benchmarks, I ran this on my Linode 512 ($20/month) instance. CPU was slower, but memory/scalar/vector performance was double.

http://browser.primatelabs.com/geekbench2/view/885974

Great article guys, thanks. However, can I suggest a better comparison? In our dev environment we use between 13 and 20 VMs to work, and run our network infrastructure sites on another 2 (each VM is limited to between 1 and 2GB of RAM, and runs VMware Tools). Those all talk to a large database server, which has to run in excess of 16 instances of our 4GB MySQL database (we’ve yet to implement the 16 instances of our sister PostgreSQL database).

We’re currently evaluating whether to move those VMs into the cloud or buy new hardware, and while ongoing performance is an issue, as long as things aren’t too slow, it’s bearable. The real issue is the ongoing power and hardware costs.

What would be useful would be to benchmark 8-16 VMs running on your ESX machine vs the same on EC2. Hence you’d probably need 8-12GB of RAM rather than 4.

Also, dba is right: our electricity costs are quite high. I’d be interested to know what the power consumption of the machine is, as clearly that’s less of a factor in the cloud.

I forgot to say, our current setup is an old desktop machine running an Intel i7 quad-core CPU @3.073GHz, with 24GB of RAM. It’s happily supporting our 20 VMs (normally 10 running at any one time).

Thanks for this! Also note that Amazon EC2 Micro suffers badly from CPU steal under certain circumstances. I wrote a blog post about when not to use EC2s. But also consider similar specs offered for cheaper by DigitalOcean and Linode which can be more bang for the buck.