Sometimes there are servers that we open at STH and they make us do a double-take. This is one of those. ASRock Rack and their partner Core Micro Systems did something completely innovative with the 1U4G-ROME server. Sometimes when server companies do something innovative, performance can suffer a bit. In this case, we were pleased to see that the ASRock Rack 1U4G-ROME performed well. Let us get into this surprisingly expansive 1U server review.

ASRock Rack 1U4G-ROME Hardware Overview

As we have been doing recently, we are going to split our hardware overview into external and internal sections. To keep the two sections balanced in length, we are going to bring a bit of the internal overview into the external one. We also have a video that you can see here:

As always, we suggest opening the video in a new tab, window, or app for the best viewing experience.

Also, as a quick note, this is the first server that was fully photographed and filmed for b-roll in the new studio after the STH Blue Door Studio was decommissioned. This is by no means the final look, but it is the start of a new era for STH with a much larger studio space. We also have a few very fun projects happening over the next few weeks. This server was a bit harder to photograph in the studio and is the longest chassis we have done here thus far.

ASRock Rack 1U4G-ROME External Overview

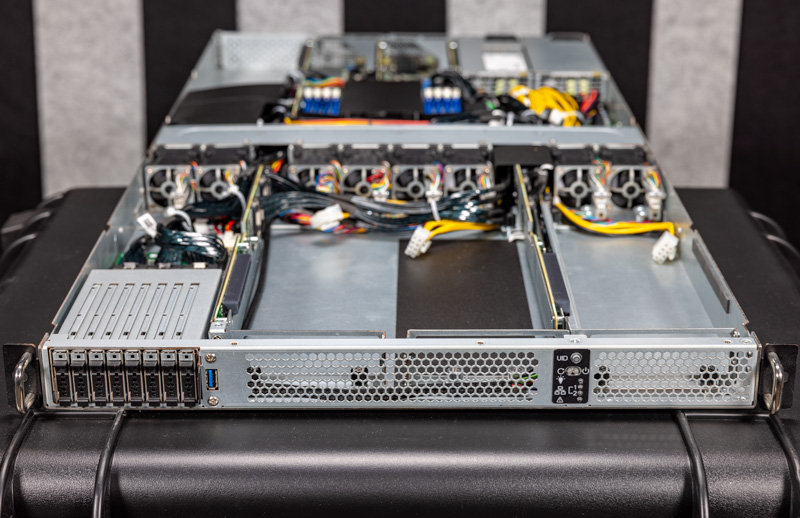

First off, one of the most defining features of the server is that it is a 1U design. Many of the GPU servers we test are 2U or 4U servers because GPUs are relatively large PCIe cards that generate a lot of heat. What a 1U GPU server offers in exchange is density. The other side of the equation is that to fit so much in a 1U chassis, server designers have to engage in a delicate balancing act, and that is on display with the 1U4G-ROME.

One of the first balancing points is depth. This is an 880mm or ~34.6″ server without the rack ears. That is deeper than many of the single-socket servers that we review. At the same time, it is not as deep as the 926mm / 36.5″ Dell EMC PowerEdge C4140. ASRock Rack has a more modern design that allows the server to be almost two inches shorter than one of its competitors while offering a key capability difference.
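For readers who want to check the depth comparison, here is a minimal sketch of the arithmetic using only the two depth figures quoted above. The function and variable names are ours, chosen for illustration.

```python
MM_PER_INCH = 25.4  # exact by definition of the international inch


def mm_to_inches(mm: float) -> float:
    """Convert a length in millimeters to inches."""
    return mm / MM_PER_INCH


# Depth figures quoted in the review (without rack ears for the ASRock Rack unit).
asrock_depth_mm = 880  # ASRock Rack 1U4G-ROME
dell_depth_mm = 926    # Dell EMC PowerEdge C4140

delta_mm = dell_depth_mm - asrock_depth_mm

print(f"1U4G-ROME: {mm_to_inches(asrock_depth_mm):.1f} in")
print(f"C4140:     {mm_to_inches(dell_depth_mm):.1f} in")
print(f"Delta:     {delta_mm} mm = {mm_to_inches(delta_mm):.2f} in")
```

The 46mm gap works out to roughly 1.8 inches, which is where "almost two inches" comes from.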

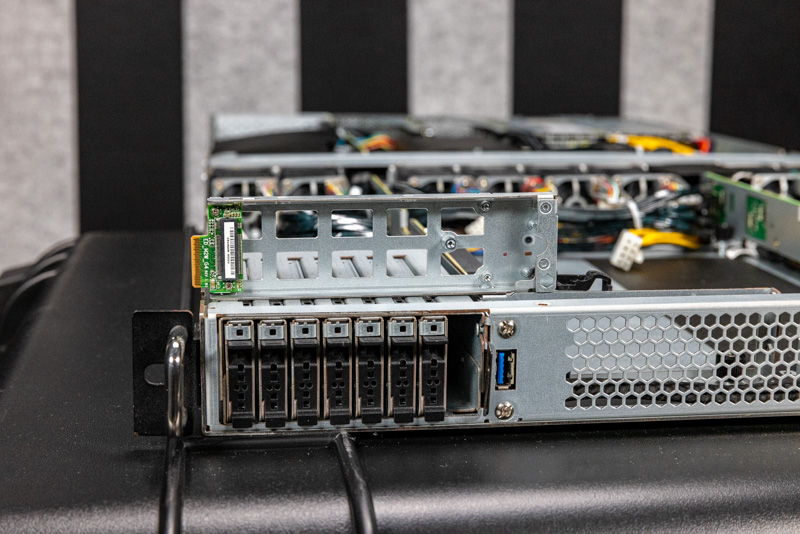

That difference is storage. ASRock Rack has eight EDSFF E1.S drive bays on the front of the system. This is a huge deal. Previous generation servers had 0-2 front hot-swap bays due to the amount of space in a chassis that 2.5″ drive bays occupy. Having eight in a system is a true standout.

As you can see above, ASRock Rack is using the E1.S 8.01mm form factor. This is not going to be as popular as the E1.S 9.5mm form factor that we will see in many servers soon, but ASRock Rack has an option for that, which is especially useful since there are limited E1.S drives on the market at this point. The company has an ED_M2W_G4 adapter card (shown above) that converts an M.2 2280 or M.2 22110 NVMe SSD into an E1.S form factor. So one can fill this server with eight 4TB M.2 SSDs and get a good amount of local cache storage.
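To put a number on "a good amount," here is a quick sketch of the raw capacity for the eight-bay, 4TB-per-drive example above. It assumes decimal terabytes as drive vendors market them and ignores any RAID, filesystem, or over-provisioning overhead. The helper names are ours.

```python
BAYS = 8      # front E1.S bays, per the review
DRIVE_TB = 4  # capacity of each M.2 SSD in the review's example (decimal TB)


def raw_capacity_tb(bays: int, tb_per_drive: float) -> float:
    """Raw capacity in decimal terabytes, before any redundancy or formatting."""
    return bays * tb_per_drive


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to binary tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


total_tb = raw_capacity_tb(BAYS, DRIVE_TB)
print(f"Raw capacity: {total_tb} TB (about {tb_to_tib(total_tb):.1f} TiB)")
```

That is 32TB raw, or a bit over 29TiB as an operating system would typically report it.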

If you want to learn more about EDSFF, see the article and video we did on the subject.

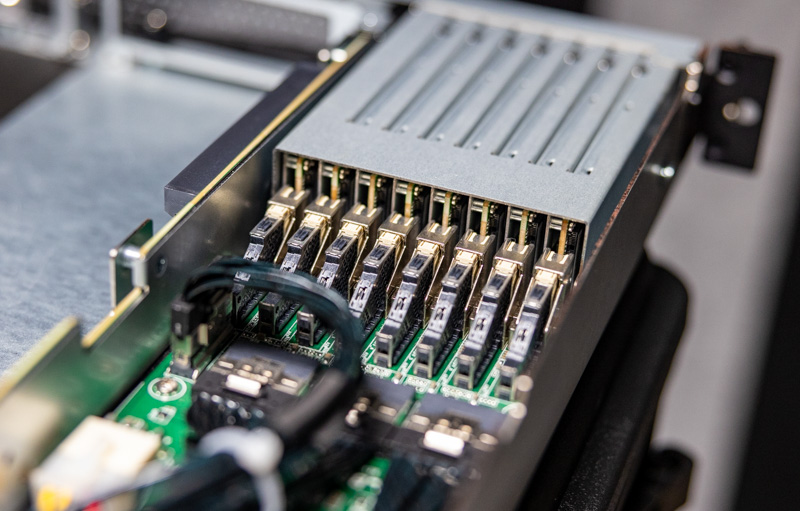

The rear of the cage has the E1.S connectors as well as the cabled PCIe Gen4 connectors. Again, on the balance side, one of these connectors needs to be vertical instead of horizontal simply so that it fits within the PCB width.

This shot shows one of the key features for EDSFF. The connector is relatively low profile allowing for a larger airflow area above and around the drives.

Since many folks have not seen these connectors in a denser configuration like this, here is another shot of the backplane.

Moving to the rear of the system, we have a few key features. Specifically, we have the power supplies, followed by the rear I/O and the riser slots.

In terms of rear I/O, we have USB 3.0 ports and a VGA port for local management. We then have two 1GbE NIC ports provided by an Intel i350-AM2. This is a higher-end NIC solution, but it is still 1GbE. The goal is really to use the two PCIe Gen4 x16 risers to add low-profile, high-speed networking. ASRock Rack is really maximizing PCIe lanes with this server.
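To give a rough sense of why lanes are tight, here is a back-of-the-envelope tally. Only the two x16 risers and the eight E1.S bays come from this page. The four GPU slots are implied by the product name, and the x16-per-GPU and x4-per-E1.S link widths are our assumptions, not a confirmed lane map for this board. The 128-lane figure is the PCIe Gen4 lane count of a single-socket AMD EPYC 7002 "Rome" CPU.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LaneConsumer:
    """A group of identical devices or slots that consume PCIe lanes."""
    name: str
    count: int
    lanes_each: int

    @property
    def lanes(self) -> int:
        return self.count * self.lanes_each


CPU_LANES = 128  # single-socket AMD EPYC 7002 "Rome" PCIe Gen4 lanes

consumers = [
    LaneConsumer("GPU slots (count from product name, x16 ASSUMED)", 4, 16),
    LaneConsumer("Rear risers (x16, from the review)", 2, 16),
    LaneConsumer("E1.S bays (count from the review, x4 ASSUMED)", 8, 4),
]

used = sum(c.lanes for c in consumers)

for c in consumers:
    print(f"{c.name}: {c.lanes} lanes")
print(f"Total: {used} of {CPU_LANES} lanes")
```

Under these assumptions the three groups alone account for every CPU lane, before the onboard NIC is counted, so the actual board may well allocate lanes differently for at least one of them. Treat this as an illustration of the budget pressure rather than the real topology.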

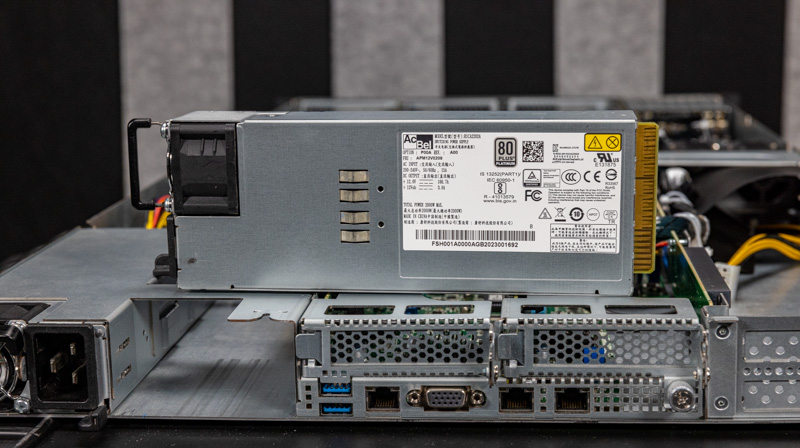

The power supplies are 2kW 80 PLUS Platinum rated units.

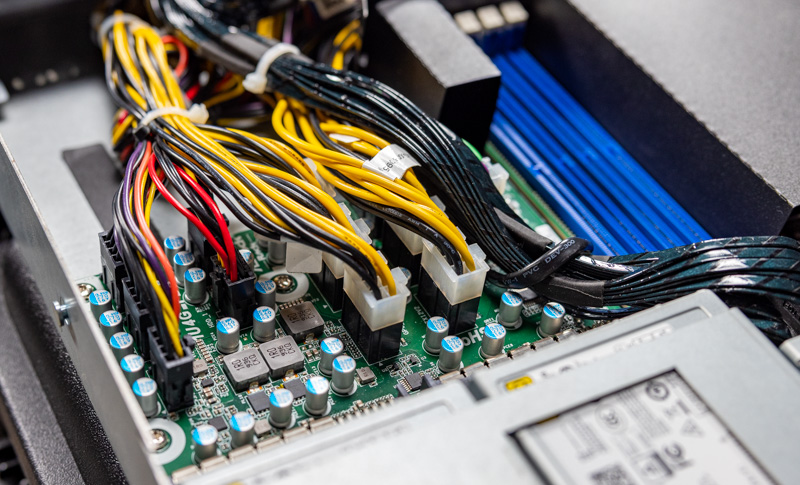

These power supplies do not plug directly into the motherboard. Instead, they plug into a power distribution board that has wired connections to the motherboard, GPUs, and other devices.

Overall, there is some interesting innovation going on around the server, but there is more inside.

Next, let us move to our internal hardware overview.

The EDSFF storage and connectors are a thing of real beauty. It would be wonderful if this tech also supplanted M.2 in the desktop world.

@Patrick, can you tell us the part number and manufacturer of the fans used to force air through this system?