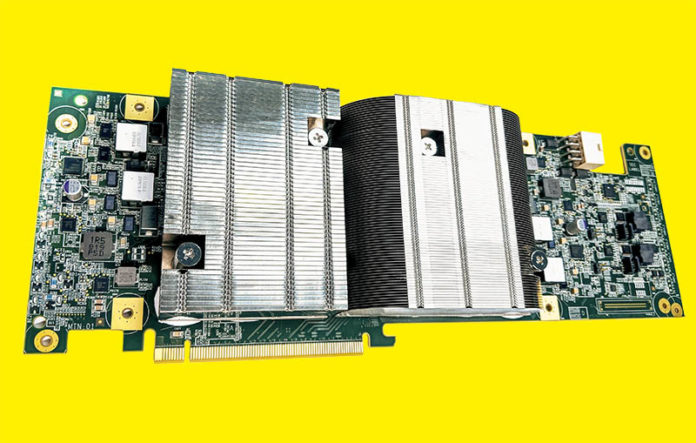

We previously covered the Google VCU or Video Coding Unit in Google YouTube VCU for Warehouse-scale Video Acceleration. At Hot Chips 33, the company gave more insight into the solution than it did in the original paper. We are doing this one live, so please excuse typos.

Google VCU Video Coding Unit at Hot Chips 33

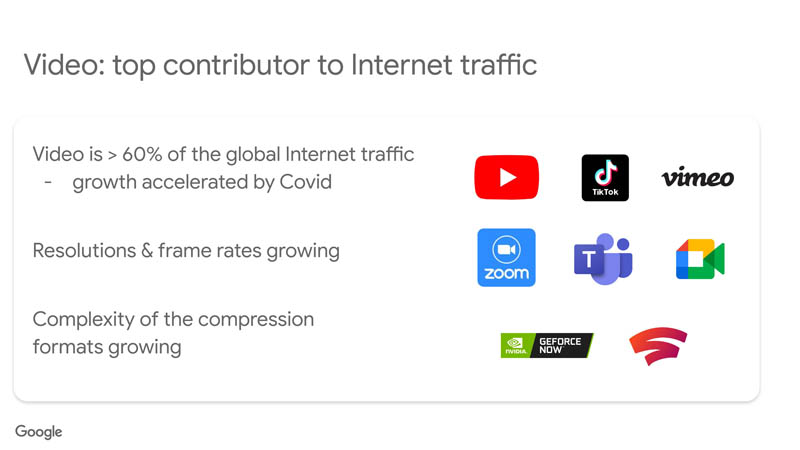

Google faces some particular challenges. Specifically, it is one of the firms most directly impacted by the types and mix of Internet traffic. It now says that video is more than 60% of overall Internet traffic, and as video moves to higher resolutions and frame rates, bandwidth needs increase.

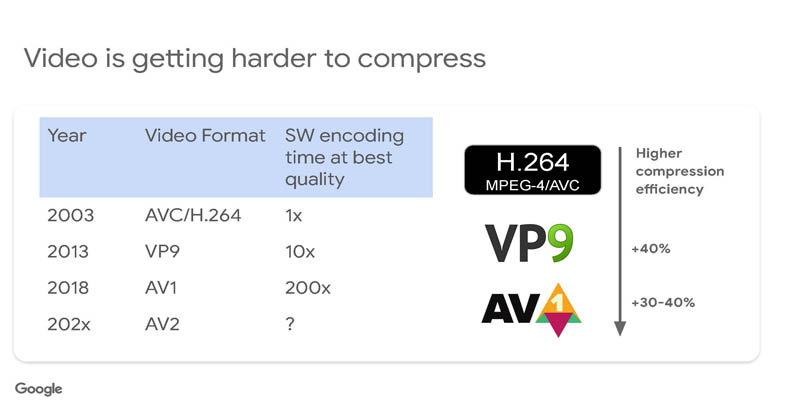

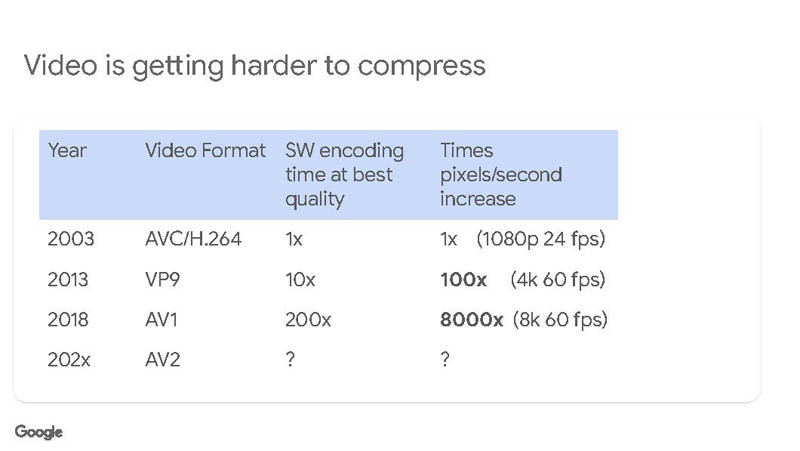

There are a number of different video compression codecs. Each new codec compresses video more efficiently, leading to smaller file sizes and smaller streams.

However, the challenge is not encoding the same content more efficiently. Instead, it is handling content that keeps moving to higher resolutions and higher frame rates. Higher levels of compression also generally require more compute. Saving 30-40% on bandwidth is a good goal, but making that a reality takes a lot of compute applied to a growing problem.
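The scaling problem is easy to see with a little arithmetic. The sketch below compares raw pixel throughput for 1080p at 30 fps versus 4K at 60 fps, and applies a 35% bitrate saving (the midpoint of the 30-40% range above) to a hypothetical 10 Mbps stream. The function names and the 10 Mbps figure are illustrative assumptions, not numbers from Google's talk.

```python
def pixel_rate(width: int, height: int, fps: int) -> int:
    """Pixels per second an encoder has to process for one stream."""
    return width * height * fps

def relative_load(base: tuple, target: tuple) -> float:
    """How many times more pixels per second `target` needs than `base`."""
    return pixel_rate(*target) / pixel_rate(*base)

def bitrate_after_savings(bitrate_mbps: float, savings: float) -> float:
    """Bitrate left after a codec saves a fraction `savings` at equal quality."""
    return bitrate_mbps * (1.0 - savings)

FHD_30 = (1920, 1080, 30)  # 1080p at 30 fps
UHD_60 = (3840, 2160, 60)  # 4K at 60 fps

print(relative_load(FHD_30, UHD_60))  # 8.0
# Hypothetical 10 Mbps stream with a 35% codec saving.
print(bitrate_after_savings(10.0, 0.35))
```

Moving from 1080p30 to 4K60 means eight times the pixels per second, while a newer codec that trims bitrate by roughly a third also tends to cost more compute per pixel. Both effects push encoding compute up at the same time.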

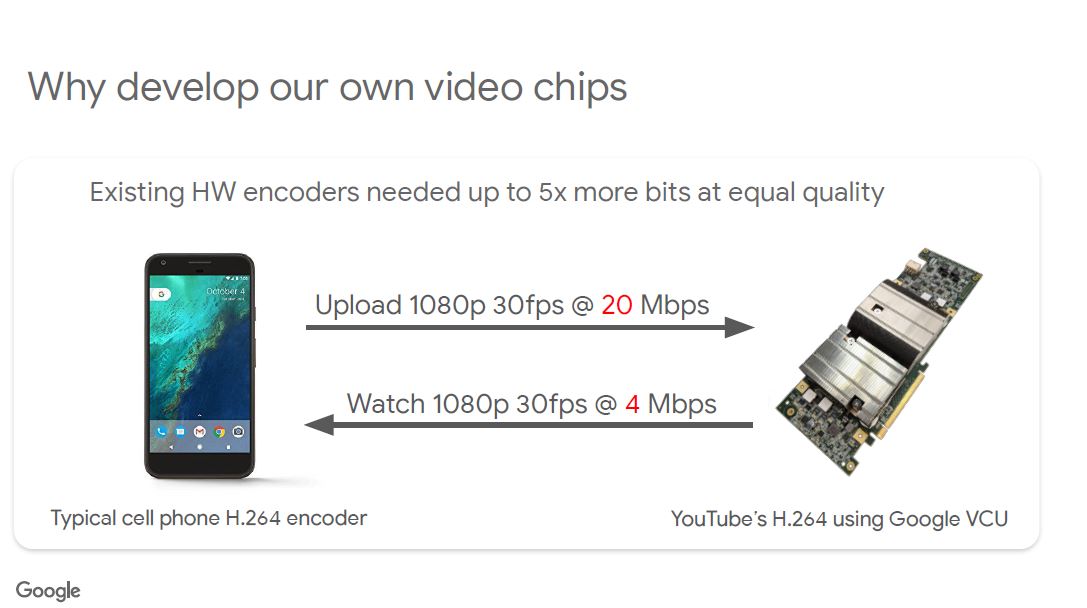

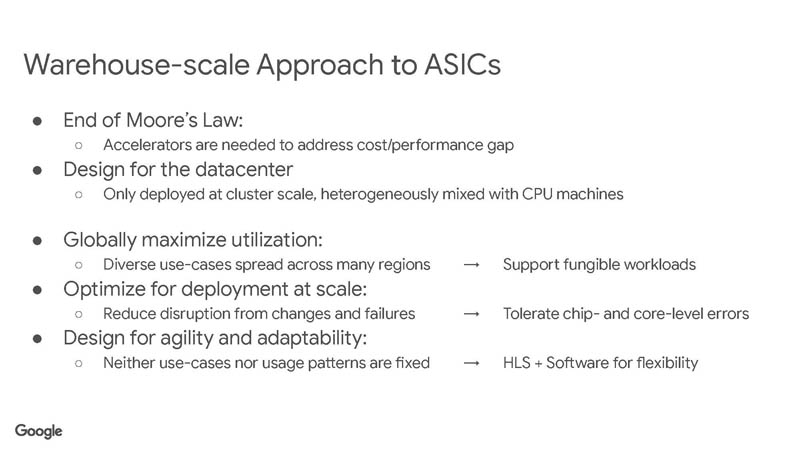

Google realized it needed to create its own chips to handle higher bitrate source video.

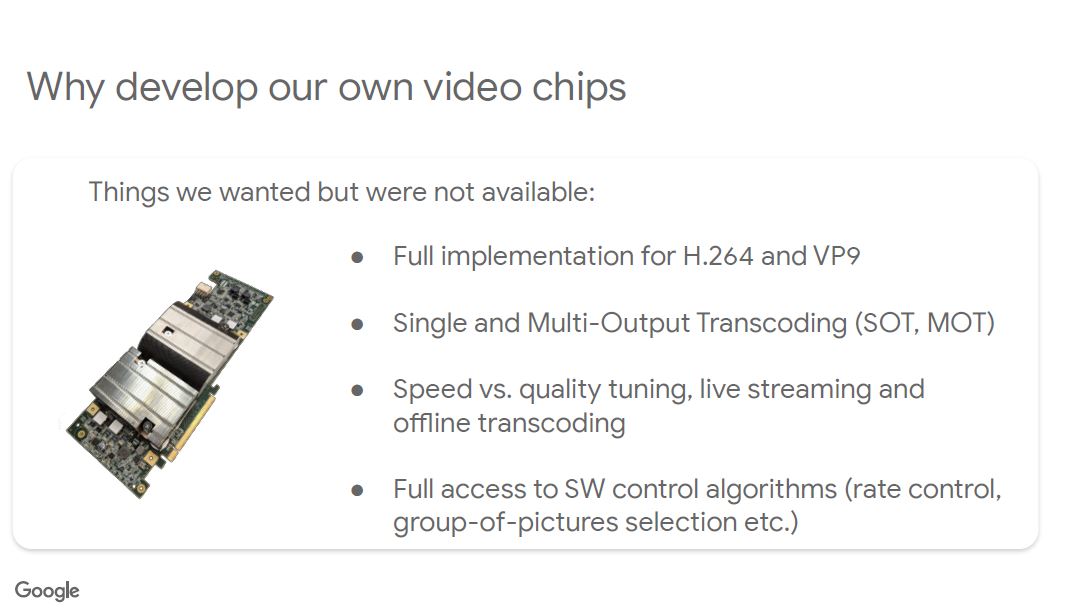

As a result, Google wanted a number of capabilities not available from commercial products.

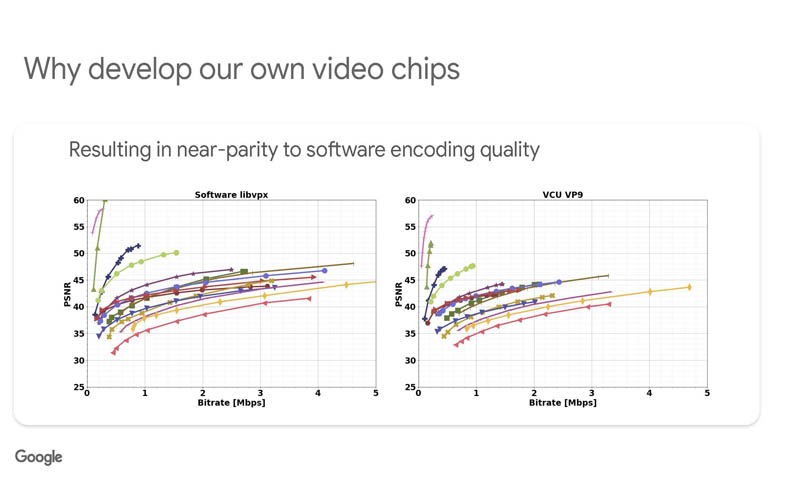

It also wanted to get close to software encoding quality, but with a lower-power and faster ASIC.

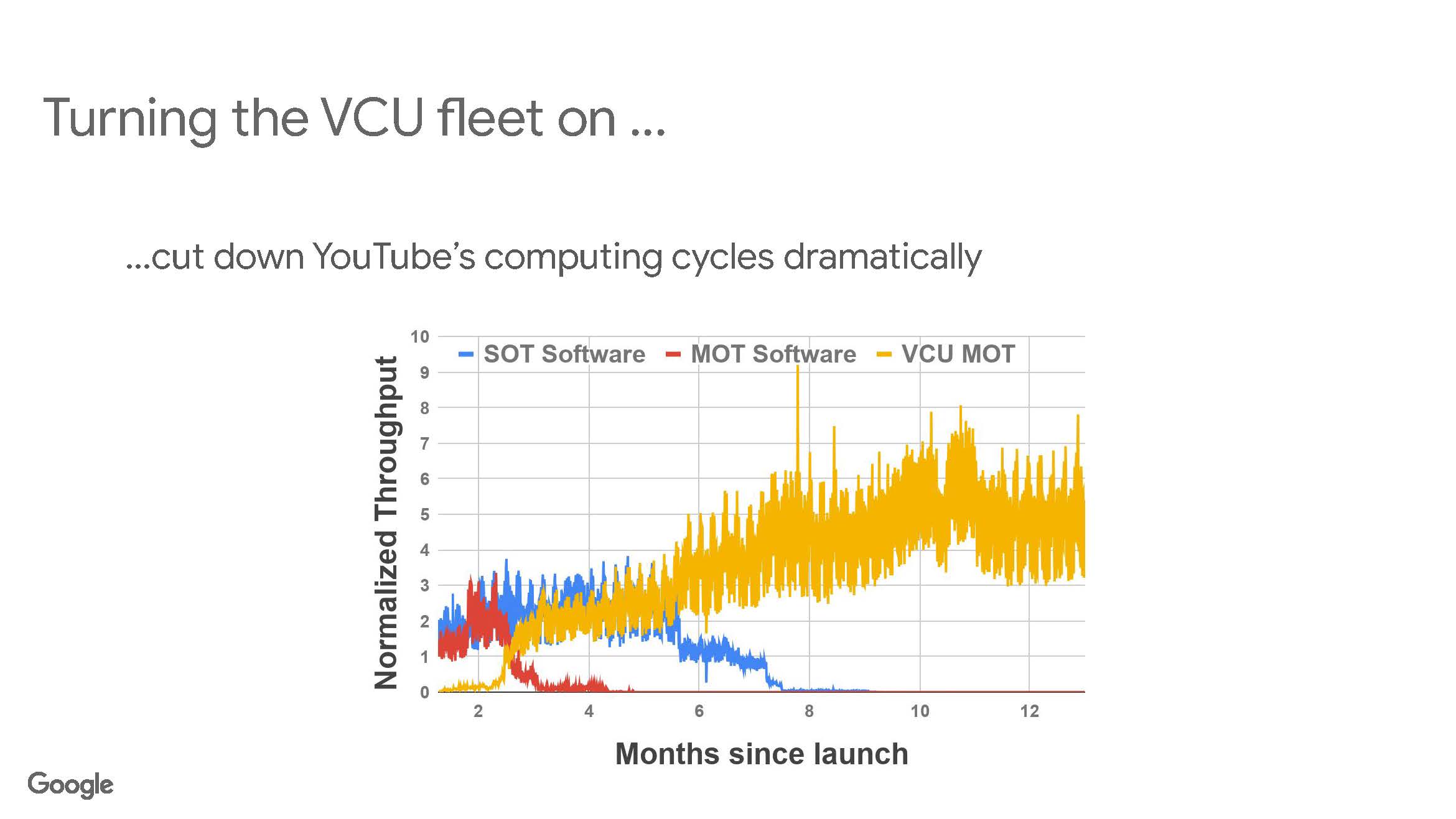

The impact of deploying the VCU ASICs was a massive decrease in CPU use.

Since we are doing these live, we are just going to show the slides for the video encoder cores.

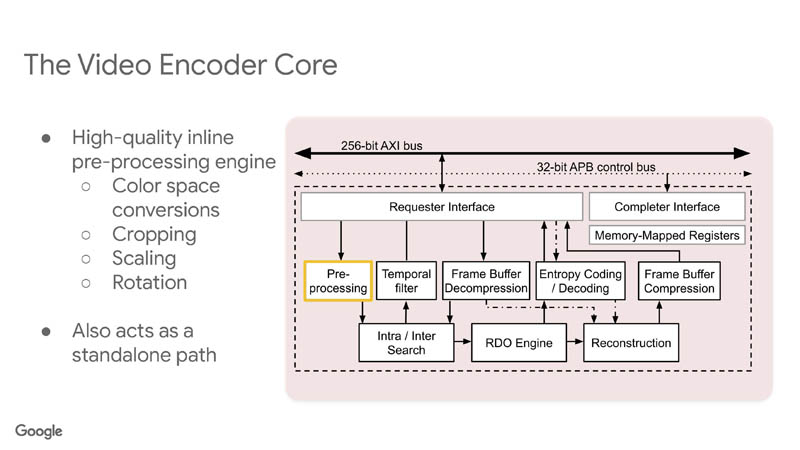

Here is the pre-processing:

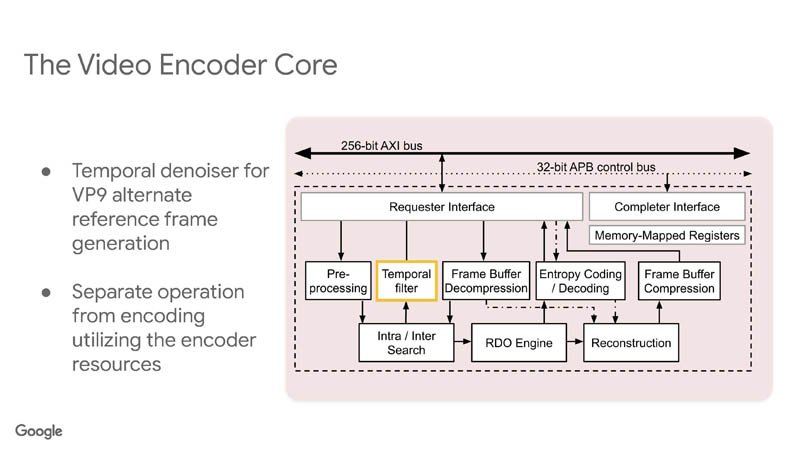

Here is the temporal denoiser/filter:

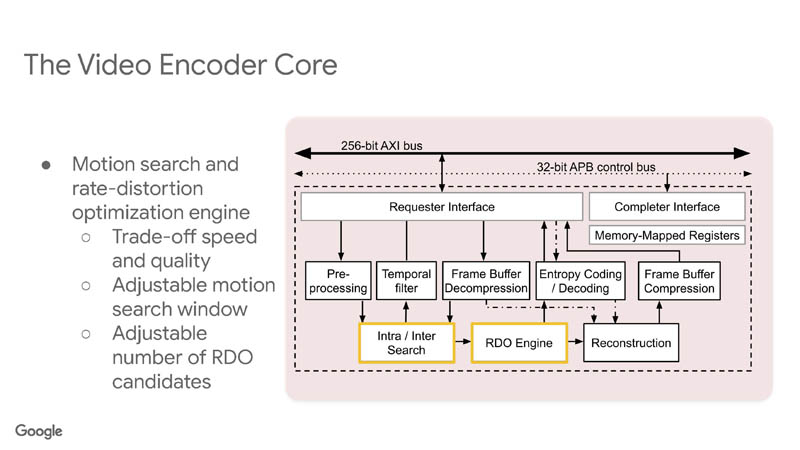
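As a generic illustration only, a simple temporal denoiser can average each pixel with the co-located pixels in neighboring frames, skipping samples that differ too much, since a large difference more likely indicates motion than noise. The function name and the `radius` and `threshold` parameters are hypothetical, and a production filter such as the one in the VCU would likely use motion compensation rather than co-located pixels.

```python
def temporal_filter(frames, center, radius=1, threshold=10):
    """Toy motion-adaptive temporal denoiser for grayscale frames.

    `frames` is a list of frames, each a list of rows of integer pixel values.
    Returns a filtered copy of frames[center].
    """
    ref = frames[center]
    height, width = len(ref), len(ref[0])
    first = max(0, center - radius)
    last = min(len(frames) - 1, center + radius)
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            p = ref[y][x]
            total, count = 0, 0
            for t in range(first, last + 1):
                q = frames[t][y][x]
                # Only blend similar co-located pixels; a big jump is treated as motion.
                if abs(q - p) <= threshold:
                    total += q
                    count += 1
            # count >= 1 because the center frame always matches itself.
            out[y][x] = round(total / count)
    return out

frames = [[[100, 50]], [[104, 50]], [[102, 200]]]
print(temporal_filter(frames, center=1))  # [[102, 50]]
```

The first pixel is smoothed across all three frames, while the second ignores the 200 value in the last frame because it exceeds the threshold.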

Here is the motion search and rate-distortion optimization engine:

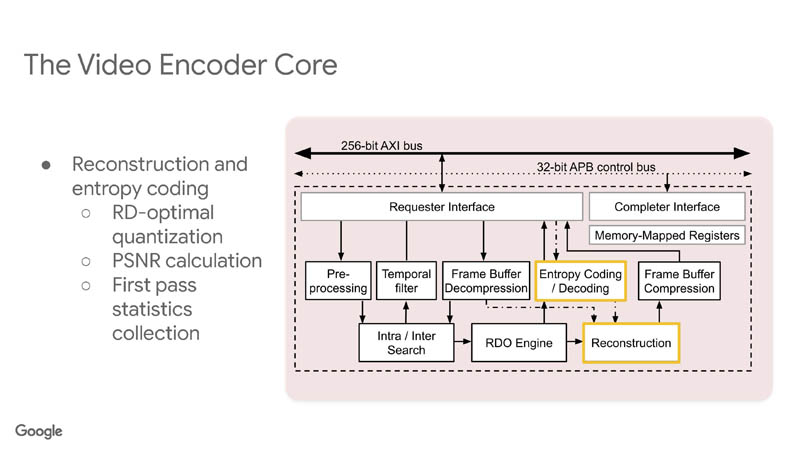
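Motion search and rate-distortion optimization (RDO) work together: the encoder looks for the reference block that best predicts the current block, but weighs prediction error against the bits needed to signal that choice. Below is a minimal sketch of rate-constrained block matching, assuming a cost of J = SAD + λ·R with a toy rate proxy of |dx| + |dy| for the motion vector. All names are hypothetical, and real encoders, presumably including the VCU, use far more elaborate search patterns and rate models.

```python
def sad(cur, ref, bx, by, dx, dy, n):
    """Sum of absolute differences between an n x n block and its shifted reference."""
    return sum(abs(cur[by + j][bx + i] - ref[by + dy + j][bx + dx + i])
               for j in range(n) for i in range(n))

def motion_search(cur, ref, bx, by, n=2, search=1, lam=4):
    """Full search over a +/-search window; returns (motion_vector, cost)."""
    height, width = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Skip candidates whose block would fall outside the reference frame.
            if not (0 <= by + dy and by + dy + n <= height
                    and 0 <= bx + dx and bx + dx + n <= width):
                continue
            distortion = sad(cur, ref, bx, by, dx, dy, n)
            rate = abs(dx) + abs(dy)  # toy stand-in for motion-vector bits
            cost = distortion + lam * rate
            if best is None or cost < best[1]:
                best = ((dx, dy), cost)
    return best

ref = [[0, 0, 0, 0],
       [0, 9, 9, 0],
       [0, 9, 9, 0],
       [0, 0, 0, 0]]
cur = [[9, 9, 0, 0],
       [9, 9, 0, 0],
       [0, 0, 0, 0],
       [0, 0, 0, 0]]

print(motion_search(cur, ref, 0, 0))          # ((1, 1), 8)
print(motion_search(cur, ref, 0, 0, lam=20))  # ((0, 0), 27)
```

With a small λ the search finds the exact match at (1, 1). With a large λ the motion-vector cost dominates and the zero vector wins despite worse prediction, which is exactly the trade-off an RDO engine has to make millions of times per frame.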

Here is the reconstruction and entropy coding:

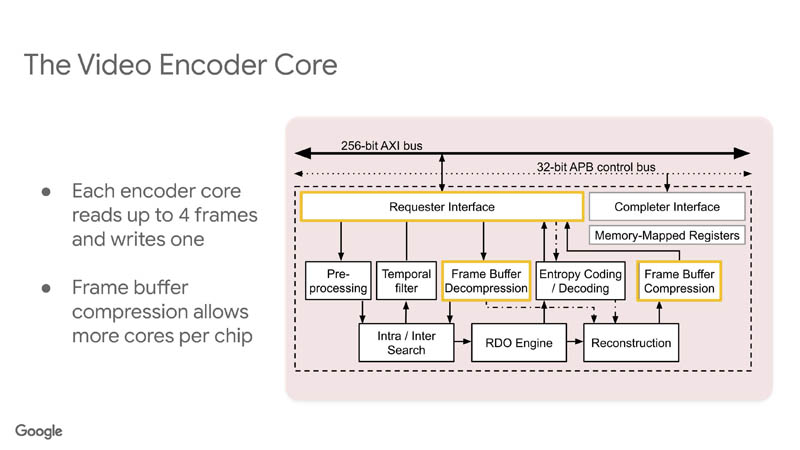
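Entropy coding turns the encoder's decisions into a compact bitstream by giving common values short codes. Exp-Golomb codes, which H.264 uses for many syntax elements, are one of the simplest examples of this idea. VP9 uses a boolean arithmetic coder instead, and the slides do not say how the VCU implements either, so the sketch below only illustrates variable-length coding in general.

```python
def ue(v: int) -> str:
    """Unsigned Exp-Golomb code word for v >= 0, returned as a bit string."""
    if v < 0:
        raise ValueError("ue(v) requires v >= 0")
    bits = bin(v + 1)[2:]                # binary of v + 1, without the '0b' prefix
    return "0" * (len(bits) - 1) + bits  # zero prefix, then v + 1

def se(v: int) -> str:
    """Signed Exp-Golomb: maps 0, 1, -1, 2, -2, ... to 0, 1, 2, 3, 4, ... then ue()."""
    code_num = 2 * v - 1 if v > 0 else -2 * v
    return ue(code_num)

print([ue(v) for v in range(4)])        # ['1', '010', '011', '00100']
print([se(v) for v in (0, 1, -1, 2)])   # ['1', '010', '011', '00100']
```

Small magnitudes, such as motion-vector differences near zero, cost only one to three bits, which is why predicting values well before entropy coding pays off.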

Here are the interfaces to read frames and to compress/decompress the frame buffer:

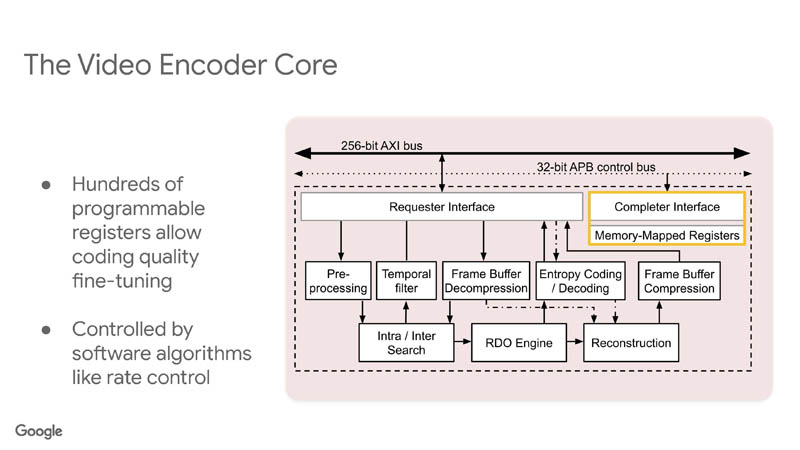

Here are the software-programmable registers:

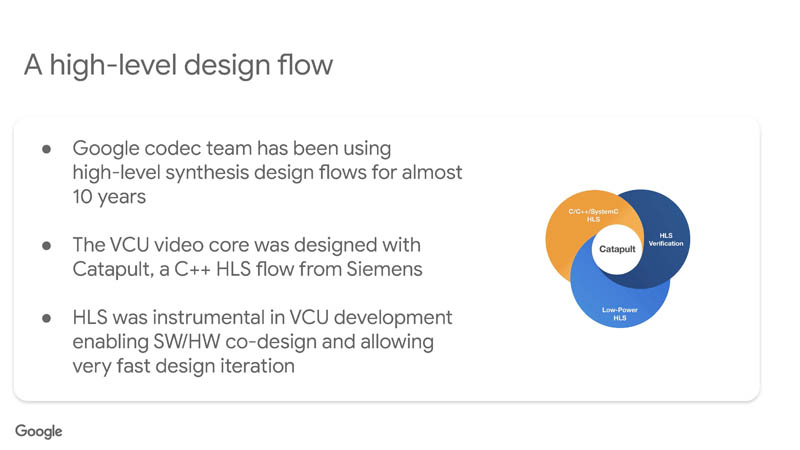

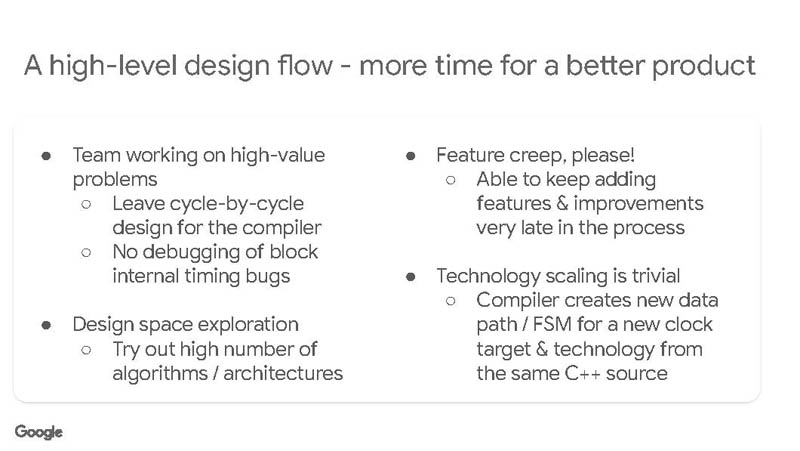

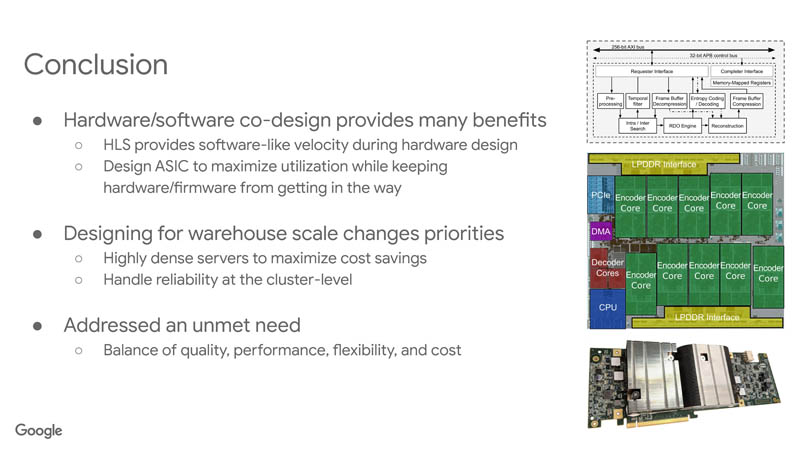

Google has teams that design hardware in addition to software. It used a high-level synthesis design flow.

This meant that Google could design the VCU using a higher-level language (C++), making development much faster.

It also let the small team focus on high-value features and problems.

Overall, Google seems to be very focused on using VCU ASICs in the future. Google has many applications for video such as YouTube, Google Drive, and more.

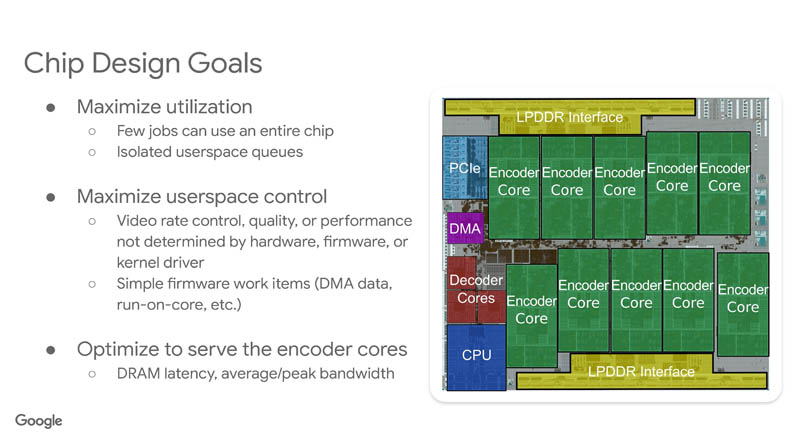

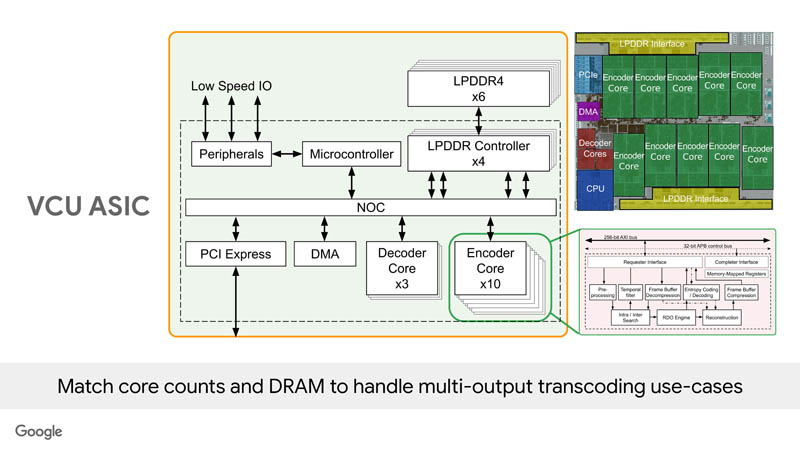

The VCU design goals included maximizing utilization. There are ten encoder cores and three decoder cores along with LPDDR interfaces.

Here is the drill-down into the ASIC:

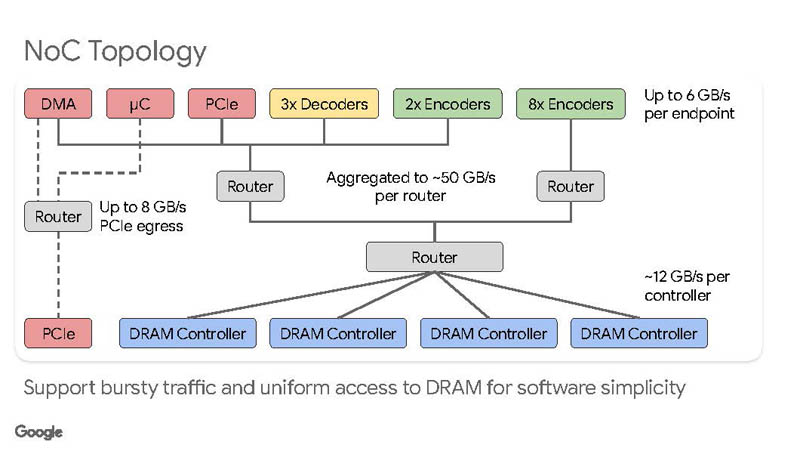

Here is the VCU network-on-chip topology:

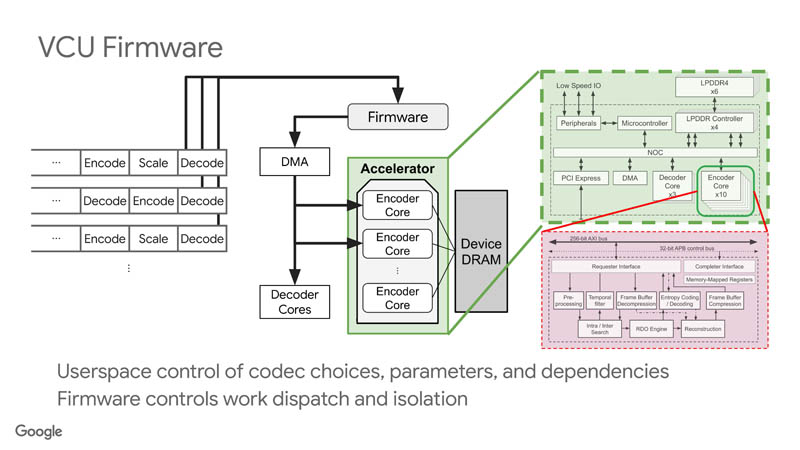

The VCU has its own firmware that runs the ASIC, while userspace makes choices such as which codec to use.

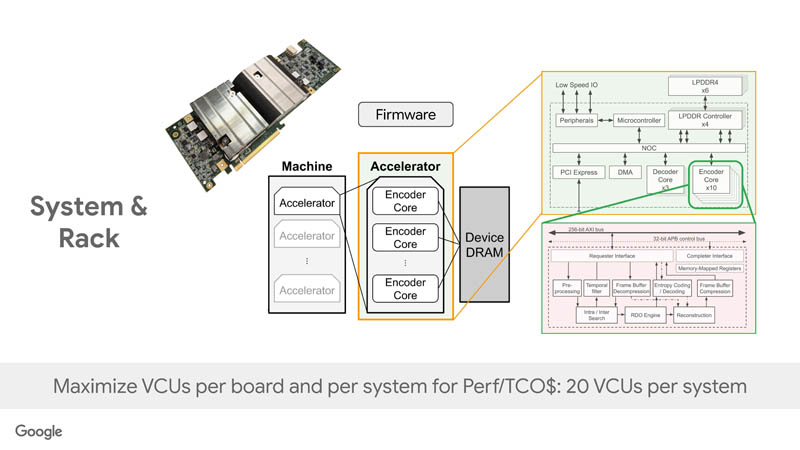

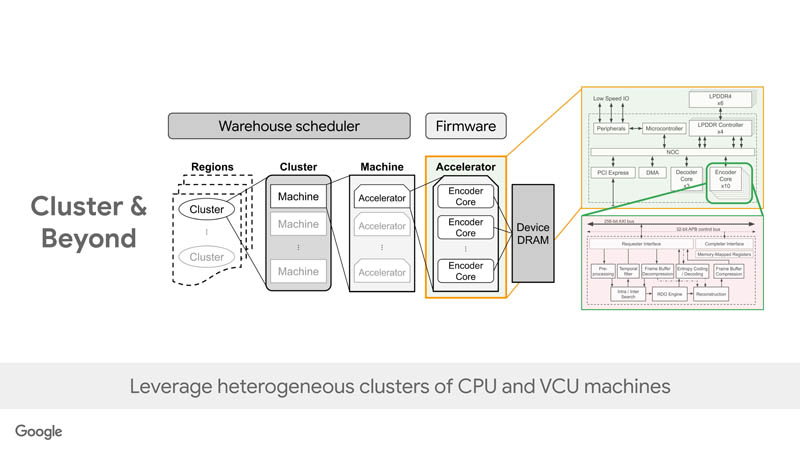

At the system level, these are deployed with 20 VCUs per system.
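Combining the figures from the talk, ten encoder cores per VCU and 20 VCUs per system works out to 200 encoder cores (and 60 decoder cores) per system. Keeping that many cores busy is a scheduling problem. The sketch below shows one generic approach, greedy least-loaded assignment with Python's `heapq`; the function and its job-cost model are hypothetical and are not Google's scheduler, and decoder cores are not modeled.

```python
import heapq

VCUS_PER_SYSTEM = 20        # from the Hot Chips talk
ENCODER_CORES_PER_VCU = 10  # from the Hot Chips talk
TOTAL_ENCODER_CORES = VCUS_PER_SYSTEM * ENCODER_CORES_PER_VCU  # 200

def assign_jobs(job_costs, vcus=VCUS_PER_SYSTEM, cores=ENCODER_CORES_PER_VCU):
    """Greedily place each encode job on the currently least-loaded encoder core.

    Returns the (vcu, core) placement for each job and the maximum core load.
    """
    # Heap entries are (current_load, vcu_id, core_id); ties break by id.
    heap = [(0, vcu, core) for vcu in range(vcus) for core in range(cores)]
    heapq.heapify(heap)
    placement = []
    for cost in job_costs:
        load, vcu, core = heapq.heappop(heap)
        placement.append((vcu, core))
        heapq.heappush(heap, (load + cost, vcu, core))
    return placement, max(load for load, _, _ in heap)

placement, makespan = assign_jobs([1] * TOTAL_ENCODER_CORES)
print(len(set(placement)), makespan)  # 200 1
```

With 200 equal jobs, every core gets exactly one. Real transcoding jobs vary widely in cost by resolution and codec, which is where a load-aware policy matters more than simple round-robin.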

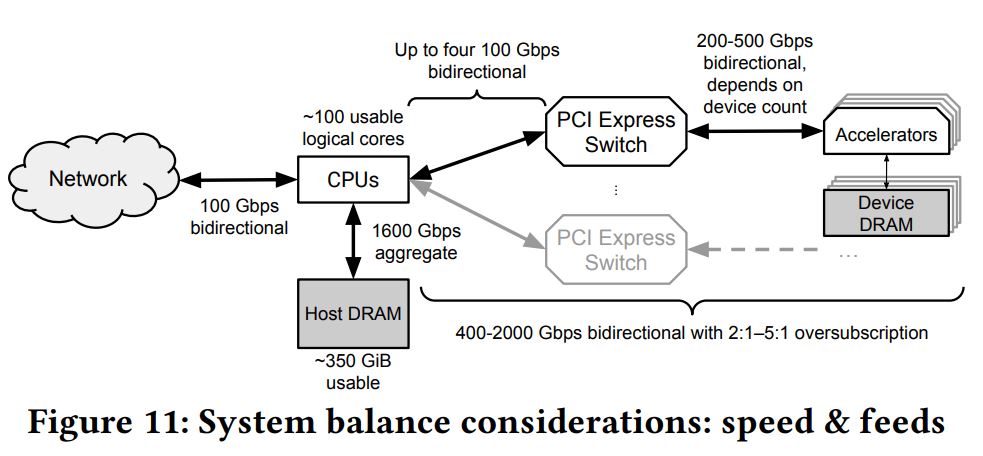

We covered this in the previous article on the VCU, but here is the architecture from Google’s whitepaper.

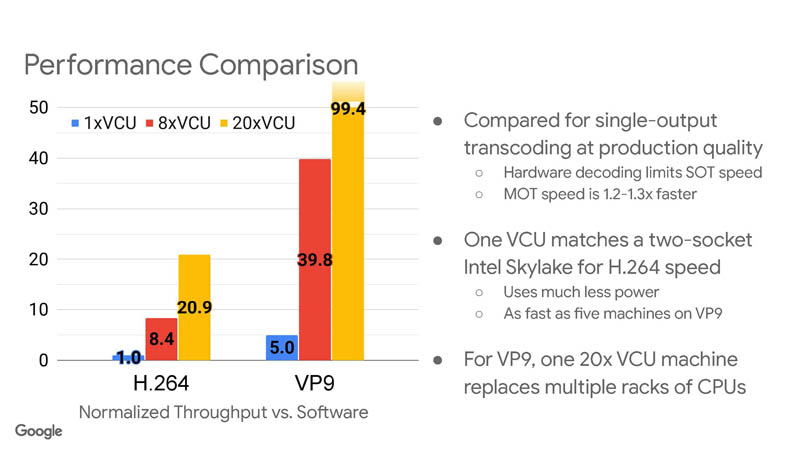

The net impact is that the VCU is more efficient than a dual-socket 2017-era Intel Xeon system for H.264, and more efficient than five such servers for VP9.

Google also focuses on building clusters of machines, and it is fairly clear that Google can put a large number of VCUs to work.

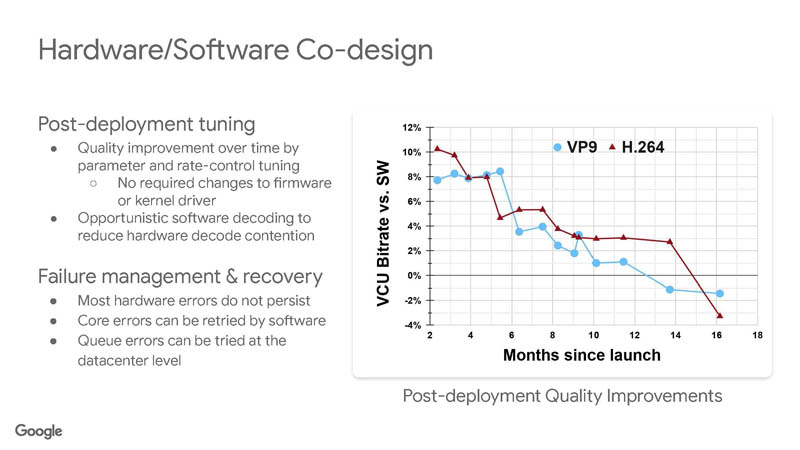

Google also found that, over time, it was able to get its hardware encoders to beat software encoding. The “opportunistic software decoding” item refers to some of the work running on the host CPUs when they have capacity available. Google also needs to be able to monitor and determine whether a VCU, or an individual core, is failing in the data center.
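The talk does not detail how Google detects failing VCUs or cores. As a hypothetical sketch, a fleet could keep per-core counters of completed and failed jobs and flag a core once its failure rate crosses a threshold after a minimum number of jobs. The class, thresholds, and `(vcu_id, core_id)` keys below are all assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class CoreHealth:
    """Hypothetical per-core counters for completed and failed jobs."""
    jobs: int = 0
    failures: int = 0

    def record(self, ok: bool) -> None:
        self.jobs += 1
        if not ok:
            self.failures += 1

    def is_suspect(self, max_failure_rate: float = 0.05, min_jobs: int = 20) -> bool:
        # Wait for a minimum sample so a single early failure does not flag a core.
        if self.jobs < min_jobs:
            return False
        return self.failures / self.jobs > max_failure_rate

def suspect_cores(fleet: dict) -> list:
    """Return sorted (vcu_id, core_id) keys whose cores look unhealthy."""
    return sorted(key for key, health in fleet.items() if health.is_suspect())

# Example: core (3, 7) fails 2 of 21 jobs, core (3, 8) fails none.
fleet = {(3, 7): CoreHealth(), (3, 8): CoreHealth()}
for i in range(21):
    fleet[(3, 7)].record(ok=i not in (5, 9))
    fleet[(3, 8)].record(ok=True)
print(suspect_cores(fleet))  # [(3, 7)]
```

Tracking health per core rather than per VCU means a single bad core can be drained while the other cores on that chip keep working.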

It seems like Google is reaping a lot of benefit from the VCU.

If Google is showing us its VCU today, there is a non-trivial chance it is either working on a newer version or already has one.

Final Words

Overall, it is great to see Google showing off more about its VCU. In our previous piece, we offered to take a better photo, but were told that some of the not-shown and blurry parts of the VCU image were specifically there to protect IP.

Now if only we could get Google to talk about more of its hardware beyond the TPU and VCU lines.