NVIDIA announced a new Ampere-based GPU today alongside SIGGRAPH 2021. The new NVIDIA RTX A2000 GPU aims to bring a lower power and cost point to the Ampere generation. For those confused by the naming, the RTX A2000 would have previously been called a “Quadro”; however, the Quadro brand for workstation GPUs and the Tesla brand for data center GPUs were retired. We covered this in Confirmed NVIDIA Quadro Branding Phased Out for New Products if you want to learn more.

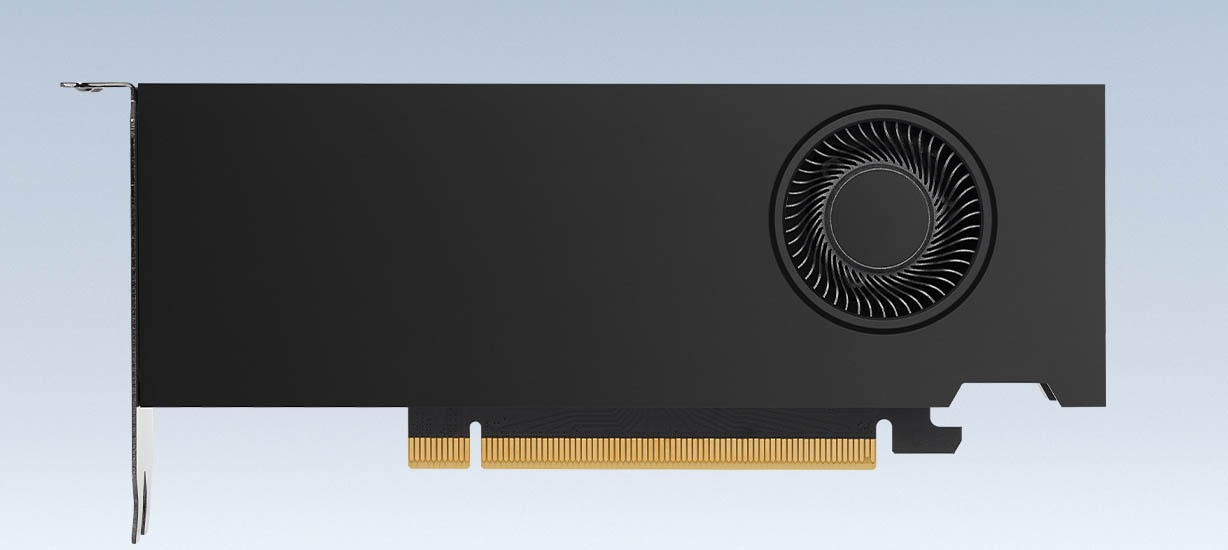

NVIDIA RTX A2000 GPU

The NVIDIA RTX A2000 is an 8nm Samsung Ampere GPU, but it is designed for a lower power footprint than many of the other options. First off, it is a low-profile PCIe Gen4 x16 card, which means it can fit in many compact workstations.

In terms of memory, we get 6GB of GDDR6 with ECC and a 192-bit memory interface good for up to 288GB/s. The 276mm² die has 13.25 billion transistors, 3328 CUDA cores, 104 Tensor cores, and 26 RT cores. This gives the solution the ability to do 8 TFLOPS of single-precision, 69.9 TFLOPS on the Tensor cores, and 15.6 TFLOPS on the RT cores. We did not get double-precision figures in the pre-brief for the card. There are also NVENC and NVDEC engines onboard, with AV1 decode.
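As a rough sanity check on those figures, the short sketch below backs out the clock speed and per-pin memory data rate that the quoted numbers imply. It assumes the usual convention of two FLOPs (one fused multiply-add) per CUDA core per cycle, which is not stated in the briefing, and the function names are purely illustrative.

```python
# Figures quoted in the article for the RTX A2000.
CUDA_CORES = 3328
FP32_TFLOPS = 8.0        # quoted single-precision throughput
BUS_WIDTH_BITS = 192     # quoted memory interface width
BANDWIDTH_GB_S = 288.0   # quoted peak memory bandwidth


def implied_clock_ghz(cores: int, tflops: float) -> float:
    """Clock implied by peak FP32 throughput.

    Assumption (not from the article): each CUDA core retires one FMA,
    counted as 2 FLOPs, per cycle.
    """
    return tflops * 1e12 / (2 * cores) / 1e9


def implied_pin_rate_gbps(bus_bits: int, bandwidth_gb_s: float) -> float:
    """Per-pin data rate implied by bus width and peak bandwidth."""
    return bandwidth_gb_s * 8 / bus_bits


clock = implied_clock_ghz(CUDA_CORES, FP32_TFLOPS)
pin_rate = implied_pin_rate_gbps(BUS_WIDTH_BITS, BANDWIDTH_GB_S)

print(f"Implied clock: {clock:.2f} GHz")
print(f"Implied GDDR6 data rate: {pin_rate:.1f} Gbps per pin")
```

Under that assumption, the quoted 8 TFLOPS works out to a clock of roughly 1.2GHz, and 288GB/s over a 192-bit interface works out to 12Gbps per pin. These are derived values, not figures from the briefing.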

The card uses a dual-slot active cooler and is a 70W card, meaning it does not need external power connectivity and instead draws power from the PCIe Gen4 x16 slot. For graphics, there are four miniDP 1.4 ports.

Final Words

While big GPUs get a lot of attention, and for good reason, the trend towards AI inference and GPU acceleration being used by more applications is clear. NVIDIA along with AMD and Intel are racing to add GPU acceleration and AI inference to more edge systems. Intel has been adding DL Boost AI inference instructions as well as pushing its Xe graphics for this and NVIDIA cannot ignore this market. The RTX A2000 is a major step in the right direction here. While it may not be the fastest card on the market, getting Ampere-based compute to lower-end price points and smaller form factors is important for OEM adoption and inclusion in configurations.

Yuck, the professional line used to have 2x the VRAM of its GeForce counterpart; now the 192-bit “Quadro” GPU has 6GB of VRAM, yet the 3060 has 12GB. What a regression, and a mistake that NVIDIA needs to fix immediately. 12GB VRAM on the professional A2100 please, and preferably single slot with all power supplied by an 8-pin power connector. (The A4000 is single slot; I don’t see why a lower 192-bit part couldn’t be.)

Given the power budget of only 70W and the small form factor, this A2000 is nowhere near the RTX 3060. It is much closer to the old 50 series SKUs, which don’t have an equivalent in the current lineup yet.

@AS

A2000’s 192-bit bus means it is a lower-clocked 3060 GA106 chip. 70W is impressive, but 6GB is deprecated. And loading all 70W from the PCIe bus means you can’t use multiple A2000s (unless the board specifically has a 6-pin 75W connector to power each PCIe x16 slot, which is rare outside of specific GPU servers).

That’s also the same issue with the A4000, because it only has a 6-pin, which means the PCIe bus is loaded too heavily for multi-GPU use on ASRock EPYC boards (think 4-GPU or 7-GPU machines). An 8-pin single-slot A4000 would have been perfect.

While we are at it, the beautiful A10 (proving that even 384-bit is doable in single slot with an 8-pin and 150W TDP) is missing a blower for workstation use. Make a new one and call it the A10C, like the K40C?

So the entire 192-bit, 256-bit, and 384-bit lineup has some small but perhaps intentional market-segmentation design flaws by NVIDIA to prevent multi-GPU computational use. (I mean, if they let you insert 7x A4000 or A10C on an ASRock EPYC motherboard, you’d think they would sell more professional GPUs to actual AI researchers instead of stupid miners.)

Can’t wait to get one of these and put it in an OEM prebuilt. An RTX card at 70W alone excites me so much, and its low-profile size just makes it better.

You’ve made a very large assumption that is fundamentally incorrect @TS.

PCIe isn’t a bus as you believe, but is instead a point-to-point protocol.

The Wikipedia page shows the following https://en.wikipedia.org/wiki/PCI_Express#Power

“All PCI express cards may consume up to 3 A at +3.3 V (9.9 W). The amount of +12 V and total power they may consume depends on the type of card:

×1 cards are limited to 0.5 A at +12 V (6 W) and 10 W combined.

×4 and wider cards are limited to 2.1 A at +12 V (25 W) and 25 W combined.

A full-sized ×1 card may draw up to the 25 W limits after initialization and software configuration as a “high power device”.

A full-sized ×16 graphics card may draw up to 5.5 A at +12 V (66 W) and 75 W combined after initialization and software configuration as a “high power device”.”

Note that as a x16 part it can draw up to 75W. That’s for each SLOT, not for the entire motherboard. If it were for the whole board, it wouldn’t comply with the specification.
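To make the per-slot arithmetic concrete, here is a minimal sketch that uses only the limits from the quoted text and the A2000’s 70W rating from the article. The helper names are made up for illustration, and it does not model whether a particular motherboard can actually deliver full power to every slot at once, which is a board design question.

```python
# Per-slot limits for a full-sized x16 graphics card, from the quoted text.
RAIL_12V_AMPS = 5.5
RAIL_3V3_AMPS = 3.0
SLOT_COMBINED_LIMIT_W = 75.0

# Board power of the RTX A2000, from the article.
A2000_BOARD_POWER_W = 70.0

rail_12v_watts = RAIL_12V_AMPS * 12.0   # power available on the +12 V rail
rail_3v3_watts = RAIL_3V3_AMPS * 3.3    # power available on the +3.3 V rail


def fits_in_slot(board_power_w: float,
                 slot_limit_w: float = SLOT_COMBINED_LIMIT_W) -> bool:
    """True if a card's rated power is within the per-slot combined limit."""
    return board_power_w <= slot_limit_w


def slot_headroom_w(board_power_w: float,
                    slot_limit_w: float = SLOT_COMBINED_LIMIT_W) -> float:
    """Watts left under the per-slot combined limit."""
    return slot_limit_w - board_power_w


print(f"+12 V rail: {rail_12v_watts:.1f} W, +3.3 V rail: {rail_3v3_watts:.1f} W")
print(f"A2000 fits in one slot: {fits_in_slot(A2000_BOARD_POWER_W)}")
print(f"Headroom per slot: {slot_headroom_w(A2000_BOARD_POWER_W):.1f} W")
```

The point is that the 75W figure is evaluated per slot, so each 70W card is checked against its own slot’s limit rather than against a shared budget.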

I expected it to have the same CUDA core count and VRAM as the GeForce RTX 3060 Ti…

Why dual slot??

6GB VRAM on a professional GPU in 2021?

I can’t run any decent stress simulation in SolidWorks with 6GB.

The low-power Quadro cards never had 2x the memory of the low-power GeForce cards. You must be thinking of the top-of-the-line Quadros. Going back through Fermi, Maxwell, Kepler, and Pascal, the Quadro cards at 80 watts or less had similar memory capacities to the 80 watt or less GeForce offerings. There were also low-power Quadros that had half the memory capacity of some low-power GeForce offerings. And if you go ahead and compare ~75 watt Quadros to higher-power GeForce cards (as you are doing here with the A2000 and the 3060) then you also find instances of the Quadros having far less memory.

The only professional low-power cards that NVIDIA seems to have made with high memory capacity were the P4 and the T4, which weren’t Quadros at all, but Teslas.

NVIDIA hasn’t yet come out with a GA107 or GA108 to make this class of card on yet, so it’s built on a downclocked larger die, something unusual for Quadros. And the GeForce RTX 3060 is an odd goat in the realm of GeForce 30 series cards, as well, having more VRAM than the 3060 Ti, 3070, 3070 Ti, and 3080. NVIDIA likely did that for marketing reasons, because consumers might worry about future games requiring more than 6 GB, not because 6 GB actually wouldn’t have been enough for the card.

Would it be nice for some people to have 12 GB of memory on the A2000 instead of 6? Sure. But the main thing different from previous history here is the strange large capacity of the 3060 (which is a GPU with over 2.4 times the TDP of the A2000, anyway).

> “We did not get double precision figures on the pre-brief for the card.”

NVIDIA has said of the RTX Ax000 series: “The FP64 TFLOP rate is 1/64th the TFLOP rate of FP32 operations.” This is a “graphics” card, as opposed to an “HPC” card.
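If that 1/64 ratio holds for the A2000 (an assumption, since the quote covers the RTX Ax000 series in general and NVIDIA gave no double-precision figure for this card), a back-of-the-envelope estimate from the article’s 8 TFLOPS single-precision number looks like this:

```python
# Quoted in the article: peak single-precision throughput of the RTX A2000.
FP32_TFLOPS = 8.0

# Quoted in the comment above for the RTX Ax000 series; applying it to the
# A2000 specifically is an assumption.
FP64_TO_FP32_RATIO = 1 / 64

fp64_tflops = FP32_TFLOPS * FP64_TO_FP32_RATIO
fp64_gflops = fp64_tflops * 1000

print(f"Estimated FP64: {fp64_tflops:.3f} TFLOPS ({fp64_gflops:.0f} GFLOPS)")
```

That comes to about 0.125 TFLOPS, or 125 GFLOPS, of double precision. It is an estimate, not a published specification.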