The Supermicro SYS-120U-TNR is a dual-socket Intel Xeon “Ice Lake” generation server. In this generation, Intel adds more cores, new instructions, and more platform capabilities. In dual-socket servers, this translates to a more capable server overall. Supermicro’s “Ultra” line, which includes the SYS-120U-TNR, takes advantage of these new platform capabilities for a massive generational upgrade.

Supermicro SYS-120U-TNR Overview

As we have been doing recently, we are going to split our review into the external overview followed by the internal overview section to aid in navigation around the platform.

Supermicro SYS-120U-TNR External Overview

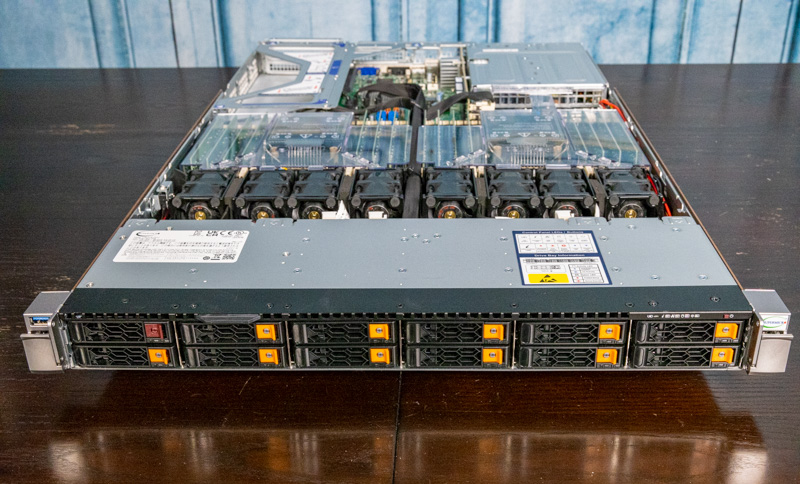

The system itself is a 1U server that is 29.1″ or 739mm deep. This is slightly deeper than the Supermicro SYS-510P-WTR 1U 1P server we reviewed, but one gains additional features.

Starting with the front of the system, we get twelve 2.5″ hot-swap bays. The system can support NVMe, SATA, or SAS drives, but one will need additional parts, such as a SAS HBA/RAID controller, to use SAS drives. These 2.5″ drive trays are not Supermicro’s legacy trays; they are thinner so that a full 12 drives fit in the 1U chassis. Even though the trays are different, they retain the previous generation’s tab color scheme, which is what we are showing above.

The rear of the system has a number of features. In the middle is the standard Supermicro “Ultra” I/O block with USB 3.0, serial, VGA, and an IPMI port. As on other Ultra servers and many competitive systems, the onboard networking is provided via a LOM, in this case on a riser, which also makes it customizable.

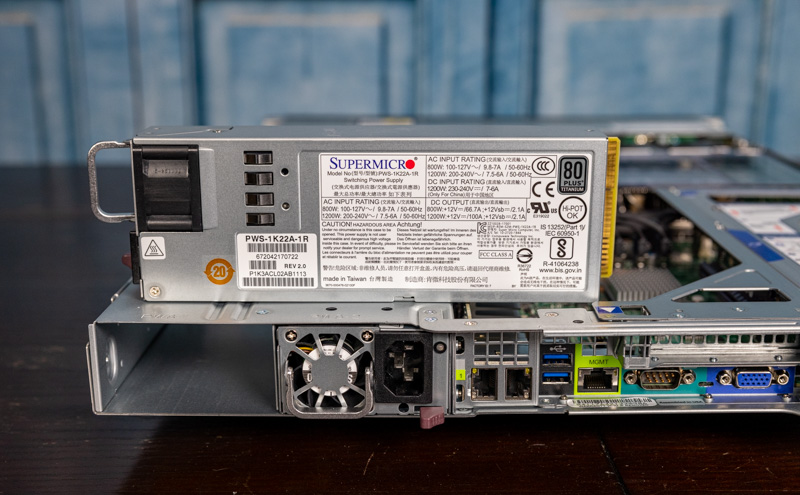

The power supplies are 1.2kW 80Plus Titanium units. Supermicro was an early adopter of 80Plus Titanium power supplies, and the Ultra line tends to use these high-efficiency units.

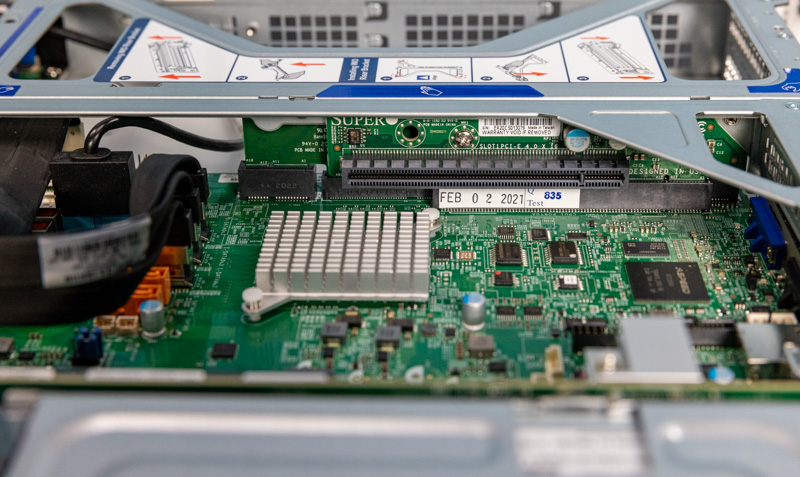

The far-right I/O expansion slots (looking from the rear) are two PCIe Gen4 x16 full-height slots. Here is the riser that powers them.

The middle slot is actually a change in this generation. While it is still a low-profile slot, it is now a PCIe Gen4 x16 slot.

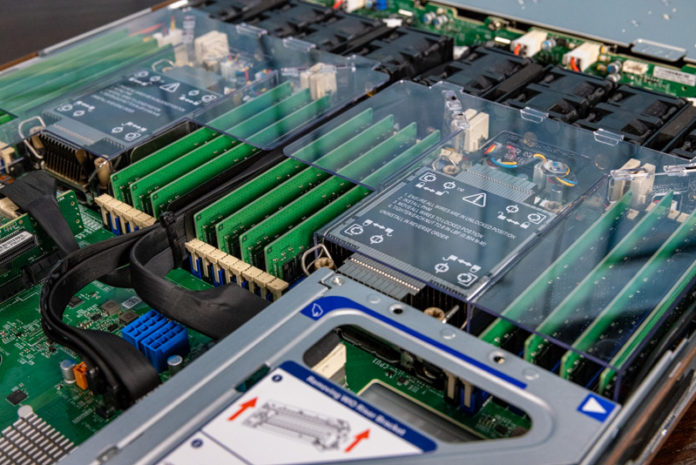

With 64 PCIe Gen4 lanes per CPU, Supermicro has enough PCIe lanes to handle the shift to twelve PCIe Gen4 x4 links for the front drive panel (48 lanes total) and three x16 links for these expansion slots (48 lanes total), while still leaving 32 of the system’s 128 lanes for other uses.
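The lane arithmetic above can be checked with a small budget sketch. It assumes the nominal 64 PCIe Gen4 lanes per Ice Lake Xeon and does not model lanes consumed by onboard devices such as the networking riser; the `lane_budget` function and the device list are illustrative, not anything from Supermicro.

```python
# Minimal PCIe lane budget sketch for a dual-socket Ice Lake system.
# Assumption: 64 PCIe Gen4 lanes per CPU; lanes used by onboard devices
# (e.g. the networking riser) are not modeled here.

LANES_PER_CPU = 64
SOCKETS = 2

# (description, device count, lanes per device) -- illustrative list
DEVICES = [
    ("front 2.5in NVMe bays", 12, 4),
    ("PCIe Gen4 x16 expansion slots", 3, 16),
]


def lane_budget(lanes_per_cpu, sockets, devices):
    """Return (total lanes, lanes used, lanes remaining)."""
    total = lanes_per_cpu * sockets
    used = sum(count * width for _, count, width in devices)
    return total, used, total - used


if __name__ == "__main__":
    total, used, remaining = lane_budget(LANES_PER_CPU, SOCKETS, DEVICES)
    for name, count, width in DEVICES:
        print(f"{name}: {count} x{width} = {count * width} lanes")
    print(f"total {total}, used {used}, remaining {remaining}")
```

With the defaults this reports 128 total lanes, 96 used, and 32 remaining. Calling `lane_budget(160, 1, DEVICES)` treats the 160-lane dual-socket AMD EPYC figure as a single pool and leaves 64 spare lanes with the same bays and slots, which illustrates that the front panel, not the lane count, is the practical limit in 1U.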

While many may focus on how AMD EPYC can expose 160 PCIe Gen4 lanes in a dual-socket system, with current-generation 2.5″ drives it is challenging to use that many lanes in a 1U system. We recently published our E1 and E3 EDSFF to Take Over from M.2 and 2.5 in SSDs piece, where we showed how the next-generation EDSFF form factor will allow for more PCIe devices in a system in the coming quarters. For now, 128 PCIe lanes in a sub-800mm-deep chassis are plentiful.

Next, we are going to get to the internal overview where we will see what is inside and even how Supermicro is able to fit all of those expansion slots.

Hi Patrick, according to Supermicro’s documentation, the two PCIe expansion slots should be FH, 10.5″L – which makes this one of the very few 1U servers that can be equipped with two NVIDIA A10 GPUs.

Can you confirm that this is the case?

The only other 1U servers compatible with one or two A10 GPUs that I know of are the ASUS RS700-E10 that you recently reviewed, and the ASUS RS700A-E11, which is similar but uses AMD Milan CPUs.

For example, all 1U offers from Dell EMC only have FH 9.5″ slots.

This one supports 4 double width GPUs: https://www.supermicro.com/en/products/system/GPU/1U/SYS-120GQ-TNRT

Is Supermicro viable from a support perspective for those in the 25-server range?

As with any other product, the first thing you notice is the build quality. This is at the complete opposite end of the spectrum from Intel and Lenovo servers. Everything is fragile, complicated, tight, and bendable. The fans are laughable.

IPMI usually works, but sometimes when you reboot the server, IPMI seems to reboot as well. It takes a minute or two and then it is back.

Hardware changes show up in IPMI only when it is ready; it can take a couple of reboots for them to appear.

We tried a lot of different “fun” stuff because our vendor provided us with incompatible hardware, such as:

Upgrading from one to two CPUs with a Broadcom NIC in one of the PCIe slots. This does not work with Windows (for us).

Upgrading from 6 to 12 NVMe drives, which requires drivers, which in turn require .NET 4.8.

Installing risers and recabling storage. How complicated does one have to make things?

Hopefully we will soon start using the system. The road so far has been painful, though not necessarily through Supermicro’s fault.

It’s awesome that one can put six NVMe drives on the lanes of the first CPU, making this a good, cost-effective option for single-CPU setups with regard to licensing.