Supermicro has been deploying liquid-cooled servers for years, but it is now taking the next step. With the launch of its liquid cooling portfolio, the company is signaling that liquid cooling will become a bigger part of its business because of customer demand.

Supermicro Liquid Cooling Portfolio Launched

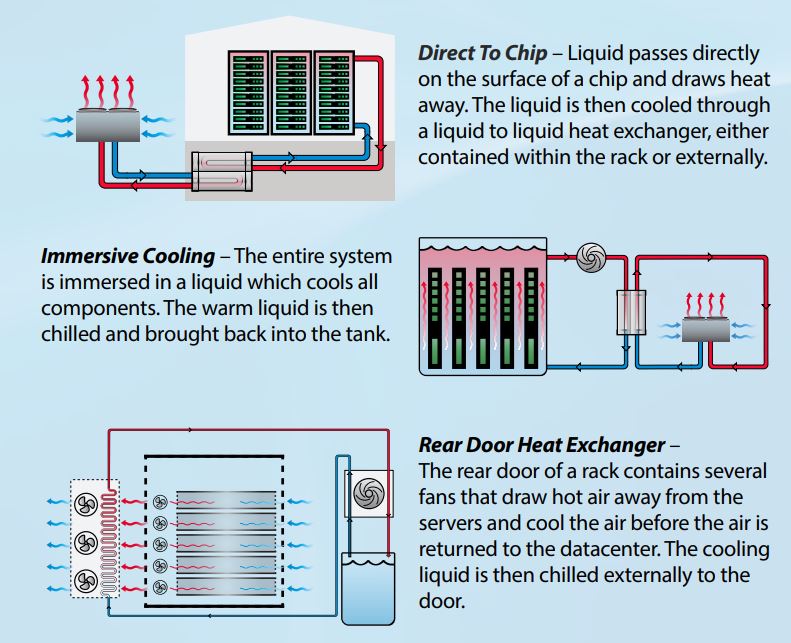

Supermicro is offering three primary types of liquid cooling. Direct to Chip is most similar to what is used in workstations. The major difference is that instead of the heat exchange and cooling of the liquid happening in the server itself, the hot liquid is pumped out of the chassis so that this process happens at a more aggregated level, whether that is in a rack or in a data center.

We have covered immersion cooling a number of times (see Submer at SC19 as an example). Here, servers sit in tanks filled with liquid, usually horizontal, instead of in vertical racks. There are a number of challenges in converting existing facilities to immersion cooling since it is more than just the racks that are changing.

Rear door heat exchangers often add cooling capacity with less disruption to the servers themselves, but sometimes at the expense of changing how servers are serviced.

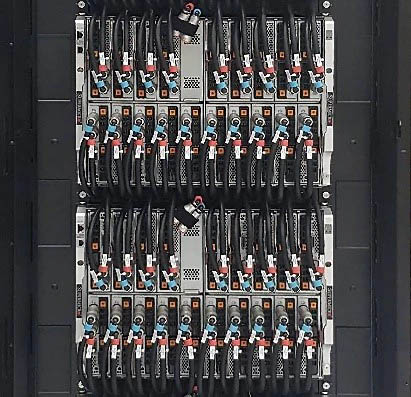

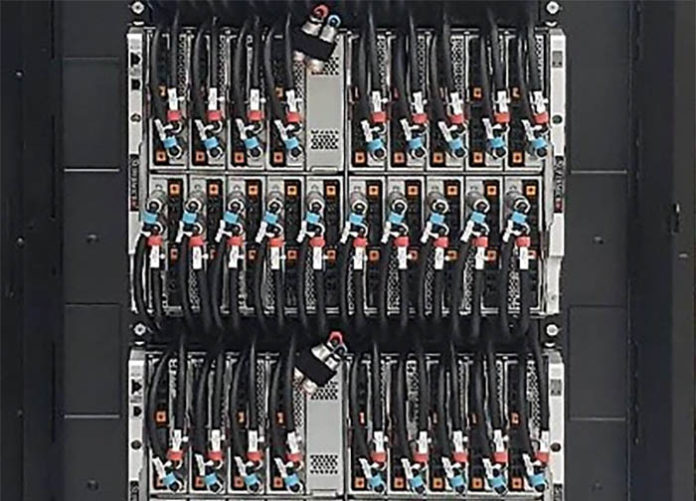

Along with the portfolio, Supermicro is launching its liquid-cooled offerings. We are starting to see a lot of interest in this generation, both for high core count, high-power CPUs in dense nodes and for GPU nodes. An NVIDIA A100 80GB SXM4 module can run at 500W, and power is going up from there. As a result, packing these components into a system makes cooling (and power) a big challenge.
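To put the 500W figure in perspective, here is a rough back-of-the-envelope sketch of the heat a GPU node produces and the water flow needed to carry it away, using the standard relation Q = ṁ · c_p · ΔT. The 8-GPU node and the 10 K coolant temperature rise are our own illustrative assumptions, not Supermicro specifications, and real loops often use water-glycol mixes with somewhat different properties.

```python
# Rough estimate of GPU heat load and the coolant flow needed to remove it.
# Only the 500 W per-GPU figure comes from the article; the rest is assumed.

WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # textbook value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximation near room temperature


def coolant_flow_l_per_min(heat_load_w: float, delta_t_k: float) -> float:
    """Water flow (L/min) needed to absorb heat_load_w with a delta_t_k rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    # Q = m_dot * c_p * delta_T  ->  m_dot = Q / (c_p * delta_T)
    mass_flow_kg_per_s = heat_load_w / (WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


GPU_POWER_W = 500.0   # A100 80GB SXM4 figure quoted in the article
GPUS_PER_NODE = 8     # assumption for illustration
DELTA_T_K = 10.0      # assumed inlet-to-outlet coolant temperature rise

gpu_heat_w = GPU_POWER_W * GPUS_PER_NODE
flow = coolant_flow_l_per_min(gpu_heat_w, DELTA_T_K)

print(f"GPU heat load per node: {gpu_heat_w / 1000:.1f} kW")
print(f"Water flow needed at {DELTA_T_K:.0f} K rise: {flow:.2f} L/min")
```

That is 4kW from the GPUs alone under these assumptions, before counting CPUs, memory, NICs, and power supply losses, which is why dense GPU nodes are where interest in liquid cooling tends to show up first.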

Liquid cooling is also different because there are several methods and each needs to be integrated with the facility. Unlike air-cooled servers, liquid cooling may require facility integration ranging from facility water loops to floor loading and overhead access (especially for immersion cooling). As such, part of Supermicro’s offering is a more solutions-based model that includes consultation.

With air-cooled servers, one basically just needs to know the power capacity and depth of a rack since the industry is so standardized. Since liquid cooling is new, it requires more integration work.

Final Words

Looking beyond this current generation, liquid cooling is going to become more prevalent. More organizations will need to turn to liquid as chip power increases, both because of larger chips and because newer, higher-speed and higher-power SerDes for interconnects are coming online. 2021 is a minor architecture bump; 2022 is where the changes start to accelerate for the industry. This Supermicro liquid cooling solution announcement is somewhat designed for today’s servers, but the undertone of the message is that Supermicro is gearing up to help its customers with next-generation infrastructure.

We need standards for direct to chip cooling. This way servers from multiple vendors can just be plugged in, like power or networking.

A design that can only leak water out the back of servers would also help adoption.

Just so I understand size, these are 8U chassis that require an extra 1U to 2U for piping… Correct?

I wonder if racks will push past 45U to deal with some of the vertical space loss. I could also see the pipes being routed to the rails rather than over/under to possibly avoid the loss of rack units to just routing the lines.

@Hans there are quick disconnect connections in the custom liquid cooling world. Mainstream server markets are far behind in liquid cooling, but there have been industrial manufacturers for them for many years.

Not sure there is much purpose though unless extremely high density GPU farms are needed. The only other purpose is noise reduction, as cooling systems can be relocated to secondary rooms.

But… Imagine a leak occurring in the top system on a full rack. The risk isn't worth it.