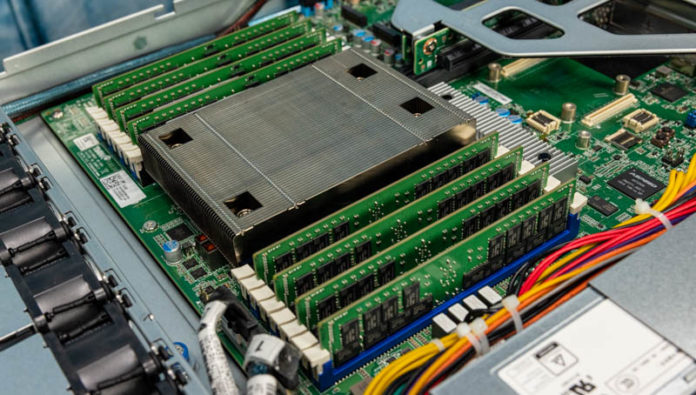

In our Tyan Transport CX GC68B8036-LE review, we are going to see how this system, also referred to as the B8036G68V4E4HR-LE, is designed to be a lower-cost AMD EPYC 7003 series platform, but with a twist. Low-end systems have typically been Xeon E5 and Xeon Silver machines with two sockets populated by low-end processors. Tyan and AMD are instead offering a different value proposition: a full system powered by a single socket. We call this "voracious" low-end consolidation simply because the consolidation ratio is much larger than normal. We found that this single-socket system can replace four of our lab's dual Intel Xeon E5-2630 V4 nodes, an 8:1 socket consolidation.

Those same Xeon E5-2630 V4 nodes were current generation four years before this article was published, which puts them in the middle of the typical 3-5 year server replacement window. Instead of consolidating perhaps 1.5:1 or 2:1, we can now consolidate 8:1, which is a huge deal. Later in this article, we are going to look at how.
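The 8:1 figure is socket arithmetic: four dual-socket nodes hold eight sockets, and all of that work moves onto one socket. A minimal sketch of the calculation, using only the node and socket counts from this review (the function name is hypothetical and just for illustration):

```python
from fractions import Fraction


def socket_consolidation(old_nodes: int, sockets_per_old_node: int, new_sockets: int) -> Fraction:
    """Return the ratio of old sockets to new sockets as an exact fraction."""
    return Fraction(old_nodes * sockets_per_old_node, new_sockets)


# Counts from this review: four dual-socket Xeon E5-2630 V4 nodes
# replaced by one single-socket AMD EPYC 7003 system.
ratio = socket_consolidation(old_nodes=4, sockets_per_old_node=2, new_sockets=1)
print(f"{ratio}:1 socket consolidation")  # prints "8:1 socket consolidation"
```

Counting by node instead, the same replacement is 4:1; the socket ratio is higher because each legacy node carried two CPUs.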

Tyan Transport CX GC68B8036-LE Hardware Overview

As has become our custom recently, we are splitting our hardware overview into two sections. First, we are going to look at the external hardware. Then we are going to look inside the system. For those who want to listen along, we also have a video version of this review:

We suggest opening this on YouTube in a new tab for a better viewing experience. Also, by the nature of the medium, the video shows the hardware from a few more angles if you want to see that.

Tyan Transport CX GC68B8036-LE External Hardware Overview

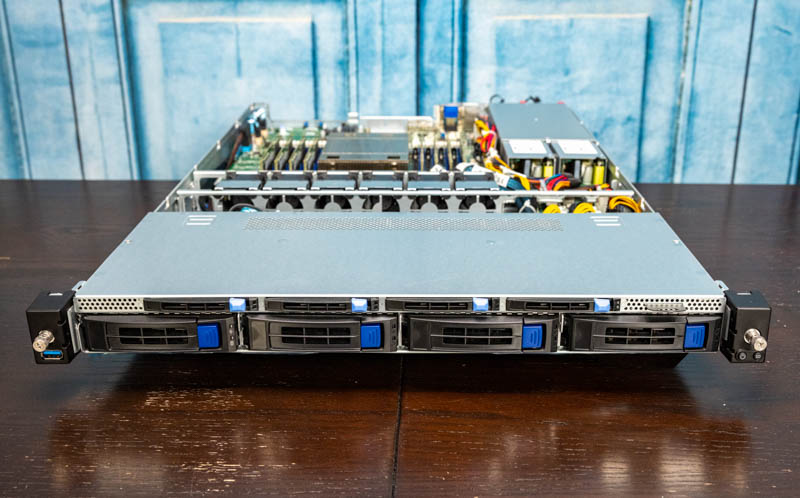

First, we should mention that the 1U Transport CX is a relatively short-depth server. Many of the servers we review are 30″ or deeper; this one is only 26.77″ or 680mm. Many of the lower-end dual Xeon nodes it targets sit in racks with limited power density, and those are often shorter-depth racks. Shorter racks with less power mean that the same data center floor space can fit more racks of servers. As a result, this depth may seem like a small feature, but it is actually useful in one of the target environments.

Looking at the front of the chassis, one can see a big feature: four 3.5″ bays along with four 2.5″ bays. The four 3.5″ bays are designed for hard drives. The 2.5″ bays only accept 7mm drives but are designed for NVMe storage. That means one can have both a 4-drive NVMe array and a 4-drive HDD array in this 1U chassis. Most legacy servers with 4x 3.5″ bays do not have any additional storage options, so this is a great feature.

What is more, Tyan is using tool-less drive trays for both the 3.5″ and 2.5″ drive bays. They are designed slightly differently, but both allow quick drive swaps without a screwdriver.

The rear of the system has a fairly typical set of features, but we wanted to highlight a few.

First, we are going to discuss the main rear I/O block. Here we get two USB 3.0 ports (plus one in the front), a VGA port, and a serial port. There is an out-of-band management port in the rear as well. The onboard NICs are Broadcom BCM5720 1GbE NICs. Many of the servers we see use Intel NICs here and some use Broadcom; this is a Broadcom solution.

Normally, we would cover this in the internal overview, but since we needed to balance the sections, we are going to discuss the rest of the rear I/O capabilities in the external overview. This is a break from tradition, but we needed to do so in this instance.

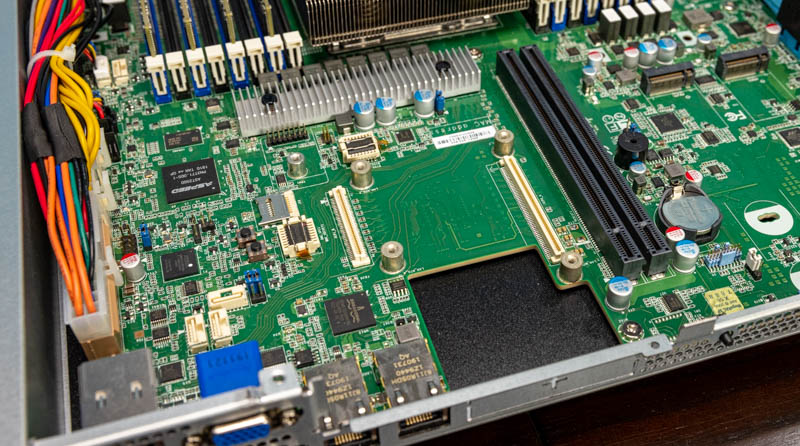

First, we are looking at the NIC slot next to the dual 1GbE ports. Here we can see an OCP NIC 2.0 slot. The OCP NIC 3.0 form factor is better and is going to be more prevalent going forward, but the OCP NIC 2.0 form factor is widely deployed for 10GbE/25GbE/40GbE/50GbE. For basic high-speed networking in this segment, this is an inexpensive choice.

The two expansion slots are half-length slots on a riser that has a PCIe Gen4 x16 slot on either side. We would have liked to have seen this be a tool-less assembly, but again, this is a cost-optimized platform, so we get screws. These slots are not going to fit high-power GPUs or accelerators, but they will fit lower-power inferencing accelerators and NICs. For example, we put 2x 200GbE Mellanox ConnectX-6 Dx NICs in here just to see what having 400GbE (2x 200GbE) in a system like this looks like. Ultimately, that is probably a poor match for this class of system, but designs like that are possible if the OCP NIC 2.0 slot is too limiting.

The power supplies are redundant 850W 80Plus Platinum units from Delta. They are hot-swappable and have standard features such as status LEDs.

Next, we are going to take a look inside the system to see what is powering the solution.
