Today we are finally able to share details around the AMD EPYC 7003 series, codenamed “Milan.” This is AMD’s newest offering that will carry the EPYC line through 2021 and into a new realm with Genoa next year. This launch review happens at a somewhat strange time in the industry. Milan has been shipping since Q3 2020 and Intel Ice Lake Xeons are already shipping, but our comparison point is Intel Xeon chips that launched in 2017 with Skylake and have largely been tuned since. So the challenge is that our Milan comparisons are going to be to Cascade Lake Refresh/ Cooper Lake, not to Ice Lake, which will be the real Milan competition.

The Plan and Background

In this article, we are going to follow a basic structure, covering:

- What AMD is launching with Milan and the SKU stack.

- How the new EPYC 7003 series is better than the EPYC 7002 series.

- What the performance and power consumption are, including gen-gen comparisons.

- Some of the cool platforms for Milan showing how the market is positioning the chips.

- A high-level context to think about the EPYC 7003 versus 3rd Gen Intel Xeon Scalable (again, we cannot go into details, so this is from public information).

- A rough guide in terms of where we expect to be by the end of Q2 2021 for server market positioning.

- Our final words.

If this seems like a lot, it is. It has taken the STH team weeks to get to this point, and we will have more on the new chips over the coming weeks as well. Still, we like these launch pieces to set the stage for the industry since we know that they are read by a great many folks in the community. We also have an accompanying video to this piece which you can find here:

As always, we suggest opening the video in a separate browser/ tab for the best viewing experience.

Now that we have the plan laid out, it is time to get onto the piece.

AMD EPYC 7003 and the SKU Stack

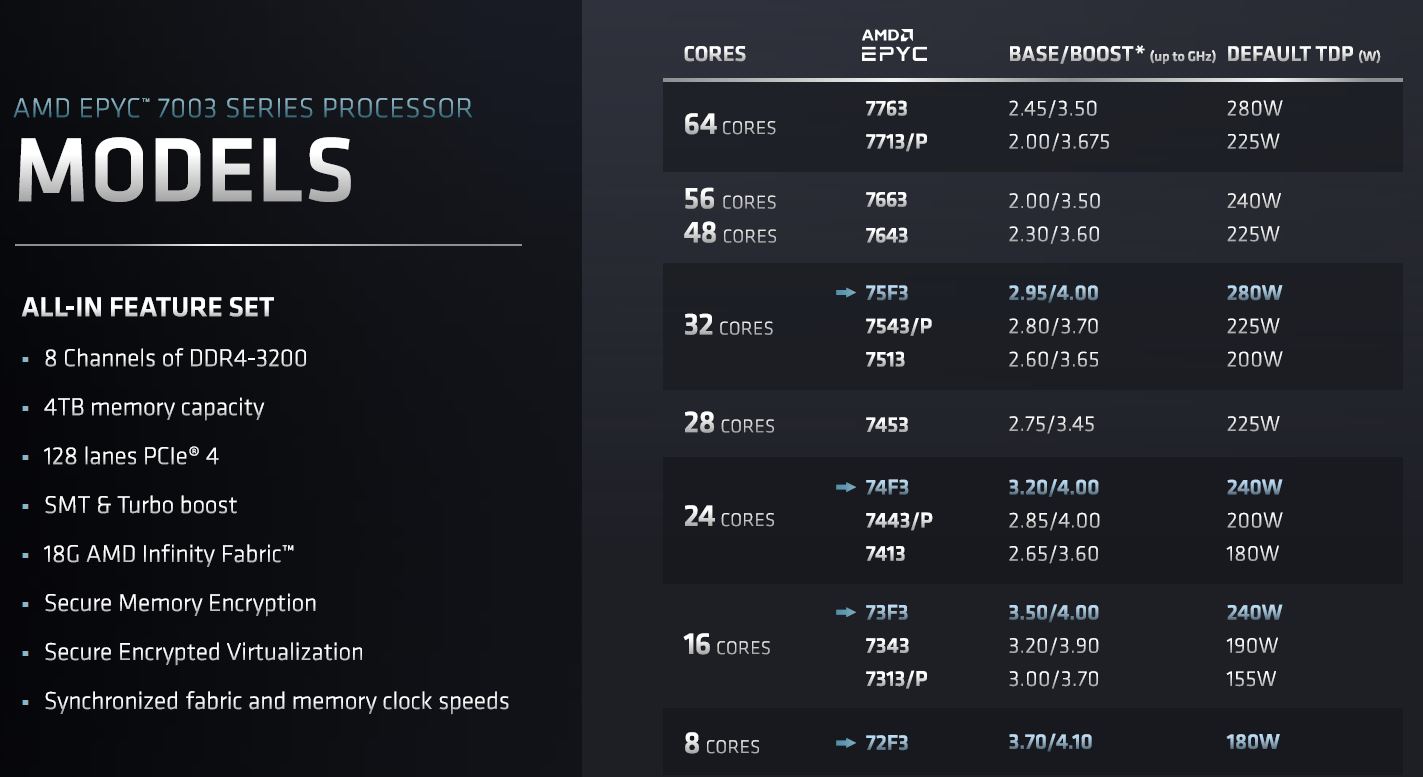

We are going to have Eric do one of our normal SKU list and value analysis pieces. However, we also need to cover what is being launched. AMD has 19 public SKUs launching as part of the EPYC 7003 series, although a partner discussed another off-roadmap SKU that we will cover in the partner section of this review.

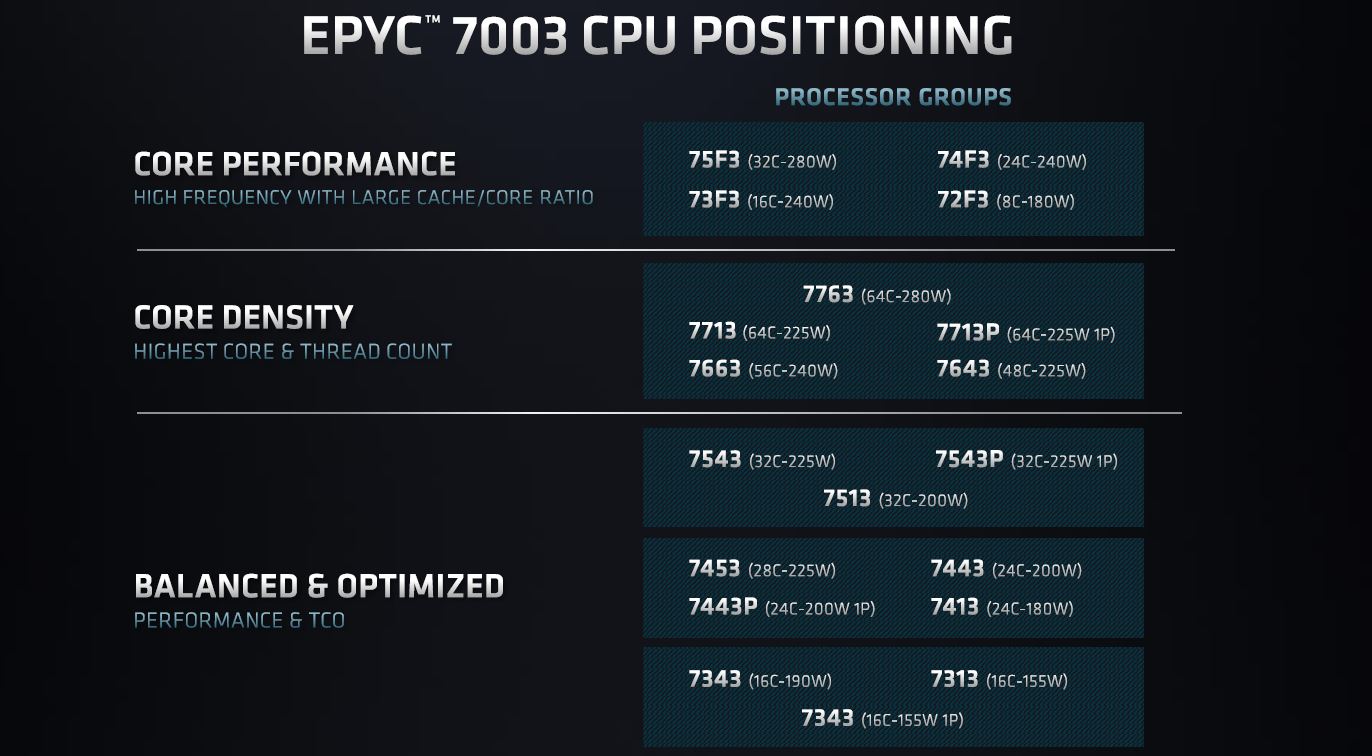

There are three main families. There are “core performance” SKUs, which should be labeled “per core performance” SKUs. These are higher-TDP chips that may offer fewer cores but have higher frequencies and more cache per core. There are “core density” SKUs, which are effectively the higher core count SKUs. Finally, AMD has its “balanced & optimized” segment, which is a clever way of saying “the mainstream parts we expect intense competition from Intel Ice Lake Xeons around.” Admittedly, AMD has better marketing headline titles.

There are a few major points here. There are 28 core and 56 core CPUs in this generation. We can pretend that it is innovative and that AMD just decided to benevolently create 28 core parts to bridge the gap between 24 and 32 cores. Or we can take a more practical approach and see that this is directly targeted at 28 core Intel Xeon customers, since 28 cores has been the maximum Xeon core count from Q3 2017 through Q1 2021. One could argue that Cascade Lake-AP/ Xeon Platinum 9200 went higher, but for that, and for a 2:1 consolidation ratio, AMD has the 56-core parts.

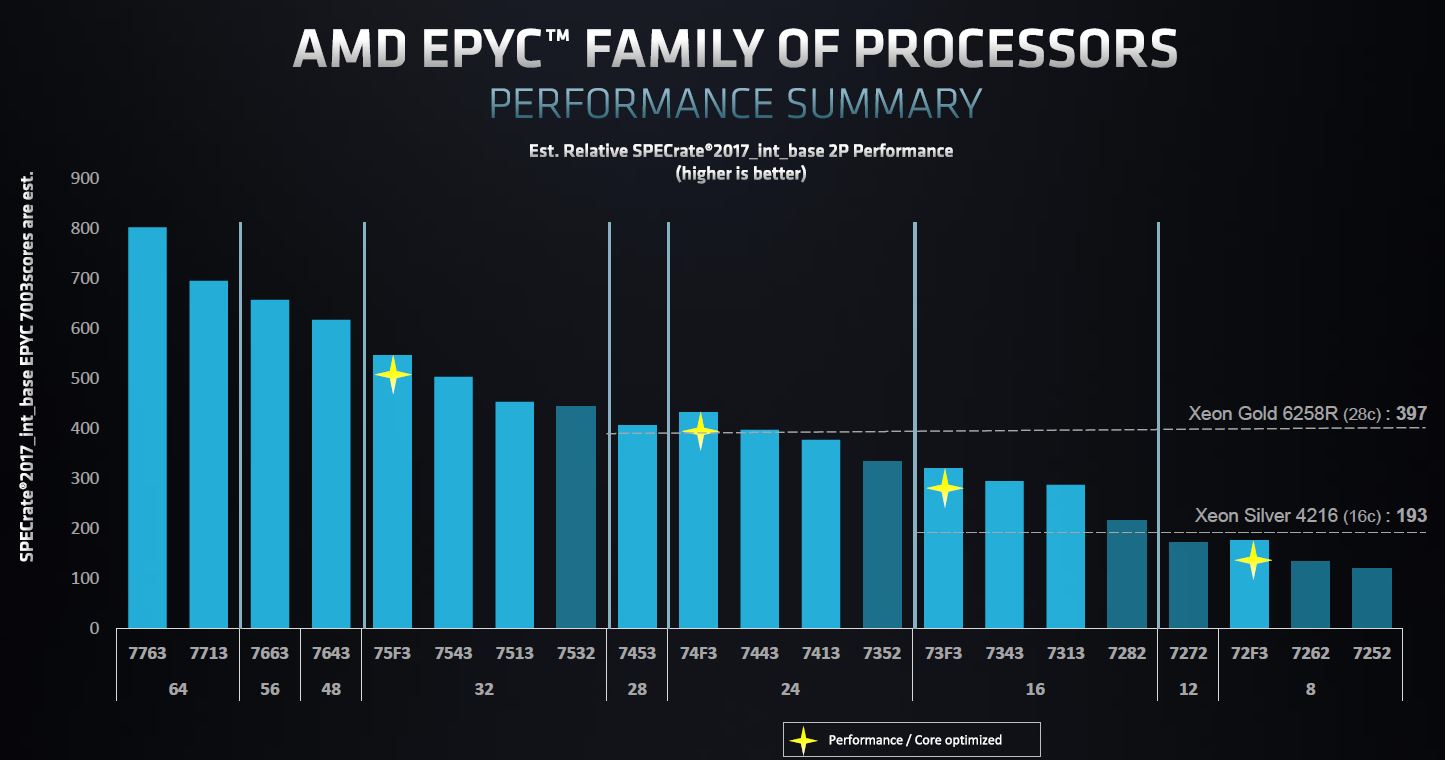

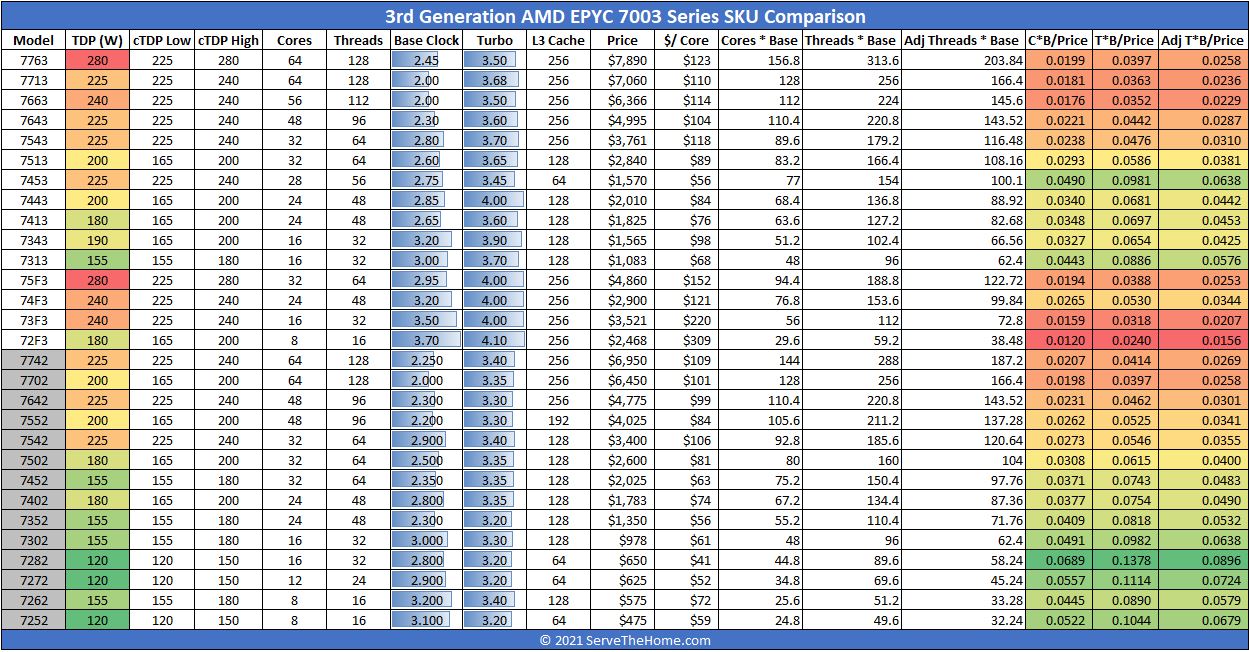

One will notice on the below chart that there are six EPYC 7002 SKUs (the EPYC 7532, EPYC 7352, EPYC 7282, EPYC 7272, EPYC 7262, and EPYC 7252) that will continue for another generation. Most of these are lower power 120-155W TDP SKUs. Many are the 4-channel optimized SKUs. AMD greatly increased the I/O die power consumption in this generation, which seems to mean that it needs the previous-gen “Rome” parts to fill the 120-155W TDP space.

Here are the dual-socket capable SKUs. One can see that we are getting higher TDPs and higher clock speeds than with the previous generation.

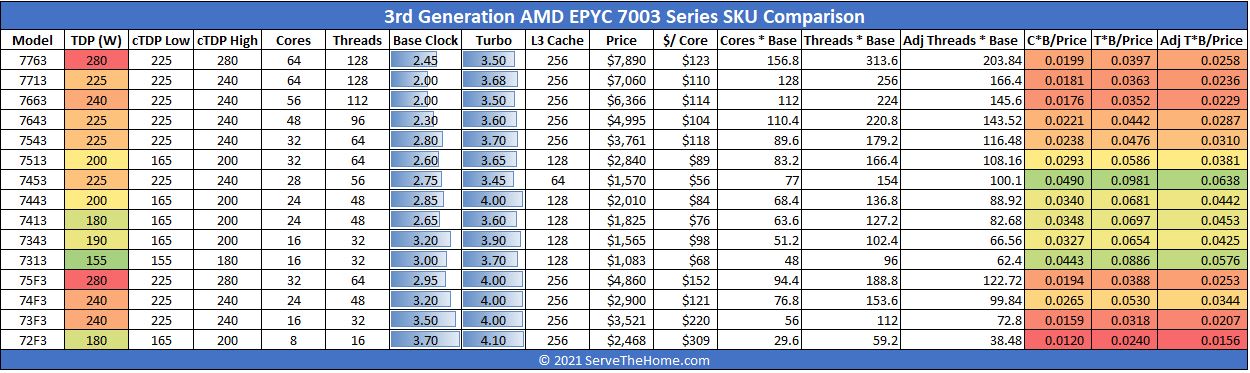

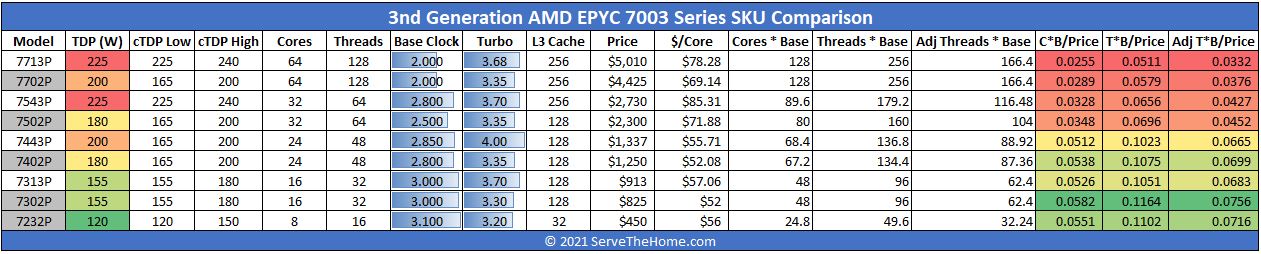

We put these SKUs in a chart with the EPYC 7003 series (minus the F SKUs) and one can immediately see the TDP delta. The other major delta is on pricing. Prices are up significantly across the line.

It seems as though AMD is now at a point where it is transitioning away from “get a foothold” pricing into pricing that more closely reflects that its chips are in high demand. As someone who used to do pricing consulting, this looks like AMD is capturing that value, increasing prices simply for inflation, and/ or increasing list price so it has more room to discount. That last point is especially important in segments where Intel will have directly competitive Xeon parts.

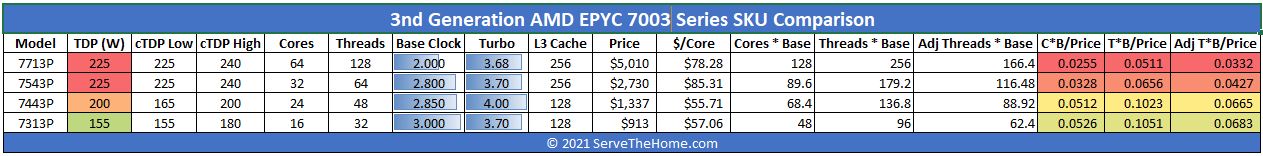

Again, AMD has its P-series single-socket only SKUs. These are variants of a subset of the above processors.

Pricing is up in this segment as well, but so is performance.

Given the lack of low-core count and low-TDP SKUs compared to typical Xeon lines, AMD is effectively pushing the market for low-end dual-socket CPUs to move to single-socket P-series CPUs. Our initial thought is that this may be a technology limitation, since AMD is aware of the Xeon Bronze and Xeon Silver market where Intel sells many Xeons, yet AMD is moving further away from addressing it.

Now that we have discussed the SKU stack, it is time to get into the details.

Any reason why the 72F3 has such conservative clock speeds?

The 7443P looks as if it will become an STH subscriber favorite. Testing those is hopefully a prime objective :)? Speed, per core pricing, core count: this is where this CPU shines.

What are you trying to say with: “This is still a niche segment as consolidating 2x 2P to 1x 2P is often less attractive than 2x 2P to 1x 2P” ?

Have you heard whether companies are actually thinking of EPYC for virtualization, not just HPC? I’m seeing these more as HPC chips.

I’d like to send appreciation for your lack of brevity. This is a great mix of architecture, performance but also market impact.

The 28 and 56 core CPUs are because of the 8-core CCXs allowing better harvesting of dies with only one dead core. Previously, you couldn’t have a 7-core die because then you would have an asymmetric CPU with one 4-core CCX and one 3-core CCX. You would have to disable 2 cores to keep the CCXs symmetric. Now with the 8-core CCXs you can disable one core per die and use the 7-core dies to make 28-core and 56-core CPUs.

CCD count per CPU may be: 2/4/8

Core count per CCD may be: 1/2/3/4/5/6/7/8

So in theory these core counts per CPU are possible: 2/4/6/8/10/12/14/16/20/24/28/32/40/48/56/64
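The enumeration above can be checked with a short sketch. It assumes, as the comment states, that a CPU has 2, 4, or 8 CCDs and that every CCD has the same number of enabled cores (1 through 8). The function names are hypothetical and exist only for illustration; this is not an AMD tool or an official list of valid configurations.

```python
# Assumptions taken from the comment above, not from AMD documentation:
# a CPU has 2, 4, or 8 CCDs, and every CCD enables the same number of cores.
CCD_COUNTS = (2, 4, 8)
CORES_PER_CCD = range(1, 9)  # 1 through 8 enabled cores per CCD


def possible_core_counts(ccd_counts=CCD_COUNTS, cores_per_ccd=CORES_PER_CCD):
    """Return the sorted, de-duplicated total core counts per CPU."""
    return sorted({ccds * cores for ccds in ccd_counts for cores in cores_per_ccd})


def configs_for(total, ccd_counts=CCD_COUNTS, cores_per_ccd=CORES_PER_CCD):
    """Return every (ccd_count, cores_per_ccd) pair that yields `total` cores."""
    return [
        (ccds, cores)
        for ccds in ccd_counts
        for cores in cores_per_ccd
        if ccds * cores == total
    ]


if __name__ == "__main__":
    print("/".join(str(n) for n in possible_core_counts()))
    print("28 cores:", configs_for(28))
    print("56 cores:", configs_for(56))
```

Under these assumptions, 28 and 56 cores are only reachable with 7 enabled cores per CCD (4 x 7 and 8 x 7), which lines up with the die-harvesting explanation given above.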

I’m wondering if we’re going to see new Epyc Embedded solutions as well, these seem to be getting long in the tooth much like the Xeon-D.

Is there any difference between 7V13 and 7763?