In our Kioxia CD6-L review, we are going to take a look at an SSD platform with a purpose. These PCIe Gen4 NVMe SSDs were designed with the key objective of taking share from SATA SSDs. In this review, we are going to see how they fare. At the end, we also have a bit of a surprise that Kioxia does not know is coming.

When we have discussed data center NVMe SSDs on STH, we have typically meant PCIe Gen3 x4 devices. With the release of Sandy Bridge generation Intel Xeon E5 (V1) CPUs, we finally had a platform that supported NVMe SSDs. That was 2012. As we prepare for the 8-year anniversary of the start of a shift to NVMe in servers, we also must prepare for the inevitable decline of SATA SSDs. This is also the first review where we are testing the next-gen Arm server CPUs, specifically the Ampere Altra Q80 parts with PCIe Gen4 NVMe storage. While one may see the CD6-L as a close relative to the CD6 (which it is), we are adding a few new bits into this CD6-L review.

Companion Video

We have a companion video to go along with this piece that focuses a bit more on the NVMe replacing SATA comparison:

That is really more of a distinct piece, so it is best to open it in another YouTube tab/app and watch it there, perhaps while reading the rest of this review.

Kioxia CD6-L Overview

The Kioxia CD6-L is the company’s newest data center-focused NVMe SSD. As such, it offers PCIe Gen4 performance and is designed for the new U.3 form factor. Effectively, U.3 makes it easier for legacy vendors to design server backplanes that can support either NVMe SSDs or SAS/SATA. Eventually, we will see next-gen form factors take over, but until then, 2.5″ is an important industry form factor for SSDs.
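To put the interface gap in perspective, here is a rough sketch of the theoretical link bandwidth for SATA III versus PCIe Gen3 and Gen4. It assumes a x4 link, as is typical for 2.5″ NVMe drives, and it only accounts for line-encoding overhead, not protocol overhead. These are link ceilings, not the drive's rated or measured throughput, and the function names are ours for illustration.

```python
# Theoretical link bandwidth after line-encoding overhead only.
# Protocol overhead is ignored, so real drives land below these ceilings.

def pcie_gbytes_per_s(gt_per_s: float, lanes: int) -> float:
    """PCIe Gen3 and later use 128b/130b encoding."""
    return gt_per_s * lanes * (128 / 130) / 8


def sata3_gbytes_per_s() -> float:
    """SATA III signals at 6 Gb/s with 8b/10b encoding."""
    return 6.0 * (8 / 10) / 8


gen3_x4 = pcie_gbytes_per_s(8.0, 4)    # PCIe Gen3 runs at 8 GT/s per lane
gen4_x4 = pcie_gbytes_per_s(16.0, 4)   # PCIe Gen4 runs at 16 GT/s per lane
sata3 = sata3_gbytes_per_s()

print(f"SATA III:     {sata3:.2f} GB/s")
print(f"PCIe Gen3 x4: {gen3_x4:.2f} GB/s")
print(f"PCIe Gen4 x4: {gen4_x4:.2f} GB/s")
print(f"Gen4 x4 vs SATA III: {gen4_x4 / sata3:.1f}x")
```

Even before looking at a specific drive, the link itself gives a Gen4 x4 device roughly an order of magnitude more headroom than a SATA III port.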

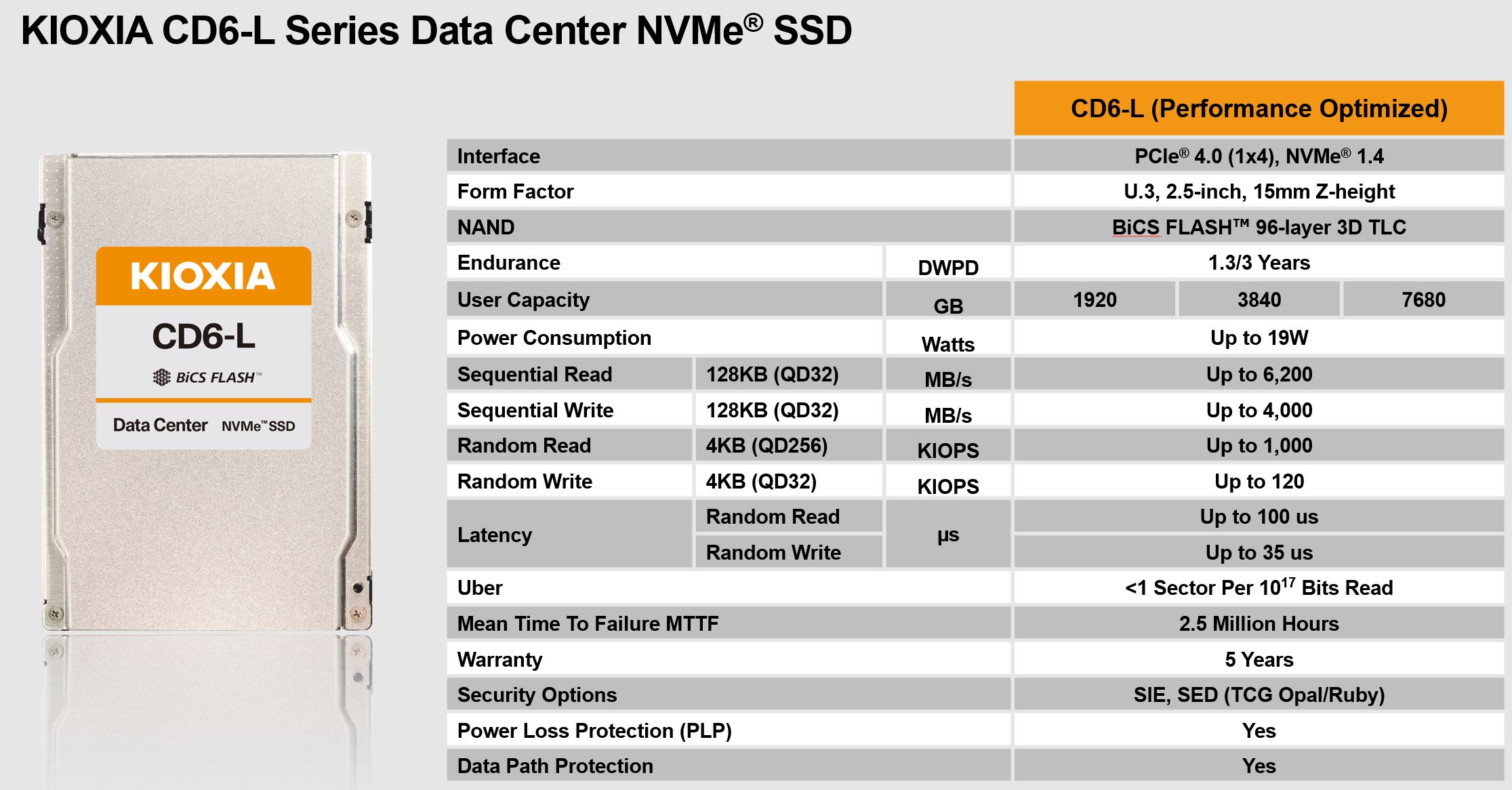

Here are the key specs for the CD6-L:

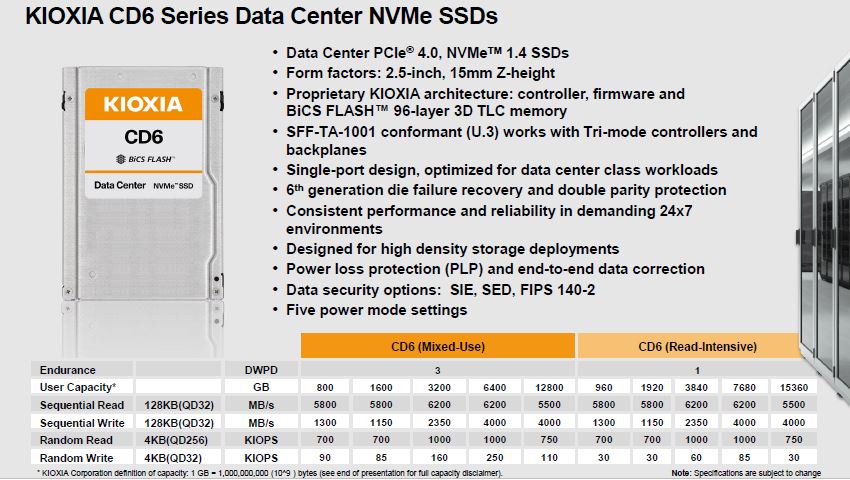

We generally like when companies break out metrics by model. If you read my previous reviews, you will notice that in the Kioxia CD6 PCIe Gen4 Data Center SSD Review the company did just this.

That brings up the question of what exactly is different between the drives we tested previously (CD6) and the drives we are testing today (CD6-L). If you look at the 7.68TB line of the CD6 chart and compare it to the CD6-L numbers, you will notice one of the biggest differences is in the random write performance. Kioxia was able to get a bit more performance there. Other readers will note we now have a 1 DWPD rating for the read-intensive line and there are also fewer capacities. We do not have the 960GB drive nor the 15.36TB drive with the CD6-L.

As we mentioned in our CD6 review, read-intensive means that this drive is designed for the many workloads that write some data, then frequently access the data that is stored. There are write-intensive drives out there that are focused on serving as log devices, where the model is to write once/read once, or in most cases never read the written data at all. For the majority of workloads, read-intensive drives are going to work fine. At STH, we purchased many drives second hand in the SATA SSD era and used SMART data to discern how much data is actually being written to drives in the real world. We covered that in Used enterprise SSDs: Dissecting our production SSD population. As an excerpt from that piece:

This is only over a subset of the SSDs we purchased for that article, so this only includes 234 of the SSDs in that piece. These were older drives, so they were much smaller than the 7.68TB SSD we have here. At the time, “read-intensive” was generally closer to 0.3 DWPD and so we found that 80-90% of the SSDs that we purchased were fine at that rate. With 1 DWPD and larger drives, there is a good chance the majority of workloads will work well on a modern large 1 DWPD drive. Many data center customers will purchase higher-end drives because they simply do not want to think about the repercussions of having to manage workloads to specific drives in specific servers. These days, there are fairly well-defined segments for general purpose SSDs, log devices, and boot devices so we see this as less of an issue than when we did that study.
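To make the DWPD discussion concrete, here is a minimal sketch of the arithmetic. The 5-year period and the 0.4TB older drive are assumptions for illustration only, not figures from Kioxia or from our study. The `observed_dwpd` helper uses the NVMe convention that SMART "Data Units Written" counts units of 1000 × 512 bytes. SATA drives report writes through vendor-specific attributes, so that part would need adapting for them.

```python
DAYS_PER_YEAR = 365


def rated_writes_tb(capacity_tb: float, dwpd: float, years: float) -> float:
    """Total writes (TB) implied by a DWPD rating over a given period."""
    return capacity_tb * dwpd * DAYS_PER_YEAR * years


def observed_dwpd(data_units_written: int, power_on_hours: float,
                  capacity_tb: float) -> float:
    """Average drive writes per day from NVMe SMART counters.

    NVMe reports Data Units Written in units of 1000 * 512 bytes.
    """
    bytes_written = data_units_written * 512_000
    days = power_on_hours / 24
    return bytes_written / (capacity_tb * 1e12) / days


# 7.68TB at 1 DWPD, over an ASSUMED 5-year period
modern_total_tb = rated_writes_tb(7.68, 1.0, 5)

# HYPOTHETICAL older 0.4TB read-intensive drive at 0.3 DWPD
old_daily_tb = 0.4 * 0.3
modern_daily_tb = 7.68 * 1.0

print(f"7.68TB @ 1 DWPD for 5 years: {modern_total_tb:,.0f} TB written")
print(f"Daily write budget: {old_daily_tb:.2f} TB vs {modern_daily_tb:.2f} TB")
print(f"Ratio: {modern_daily_tb / old_daily_tb:.0f}x")

# Hypothetical SMART reading: 15,000,000 data units over 24 power-on hours
print(f"Observed DWPD: {observed_dwpd(15_000_000, 24, 7.68):.2f}")
```

The point of the comparison is that DWPD scales with capacity, so a workload that fit within 0.3 DWPD on a small drive uses only a small fraction of the daily write budget on a large 1 DWPD drive.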

Let us move on to performance testing, and what we had to do to test the drives. We found far more there than we expected, so first we are going to discuss what we found when starting Gen4 drive reviews, then we will get into more of the performance aspects.

I’m ashamed but we’re still using SATA in our Dells. We’ll be looking at gen4 in our next refresh for sure.

If people missed it… watch the video. I’m sending it to a colleague here since it explains the why of arch. It’s different than the article but related.

Good job on the ARM ampere tests too. we prob won’t buy this cycle, but having this info will help for 2022 plans

We won, John, we won. The SATAs can never again destroy our bandwidth. But the price, John, the terrible terrible price.

Plan to buy a Dell 7525 for media storage, but not sure whether it can support NVMe RAID on PCIe 4.0?

If we use RAID for read-only performance, do we multiply by the number of disks?

didn’t see anyone test on this :)

Can I use these drives in a normal AMD PCIe Gen4 system, using an M.2 to U.2 cable? Or is there an M.2 to U.3 cable?

As far as I know, those Kioxia pro drives don’t have any end-user support in terms of software tools or firmware. They won’t even disclose any endurance numbers. In my opinion, they are only an option if you buy perhaps > 1000 disks to get the right support.