As part of a duo of SmartNIC announcements today, we have the Silicom FPGA SmartNIC N5010, which is perhaps the higher-end of the two solutions. Silicom has a history of building reference platforms for Intel and other networking companies, and it builds cards for various large OEMs. The FPGA SmartNIC N5010 is certainly near the top of the company’s range.

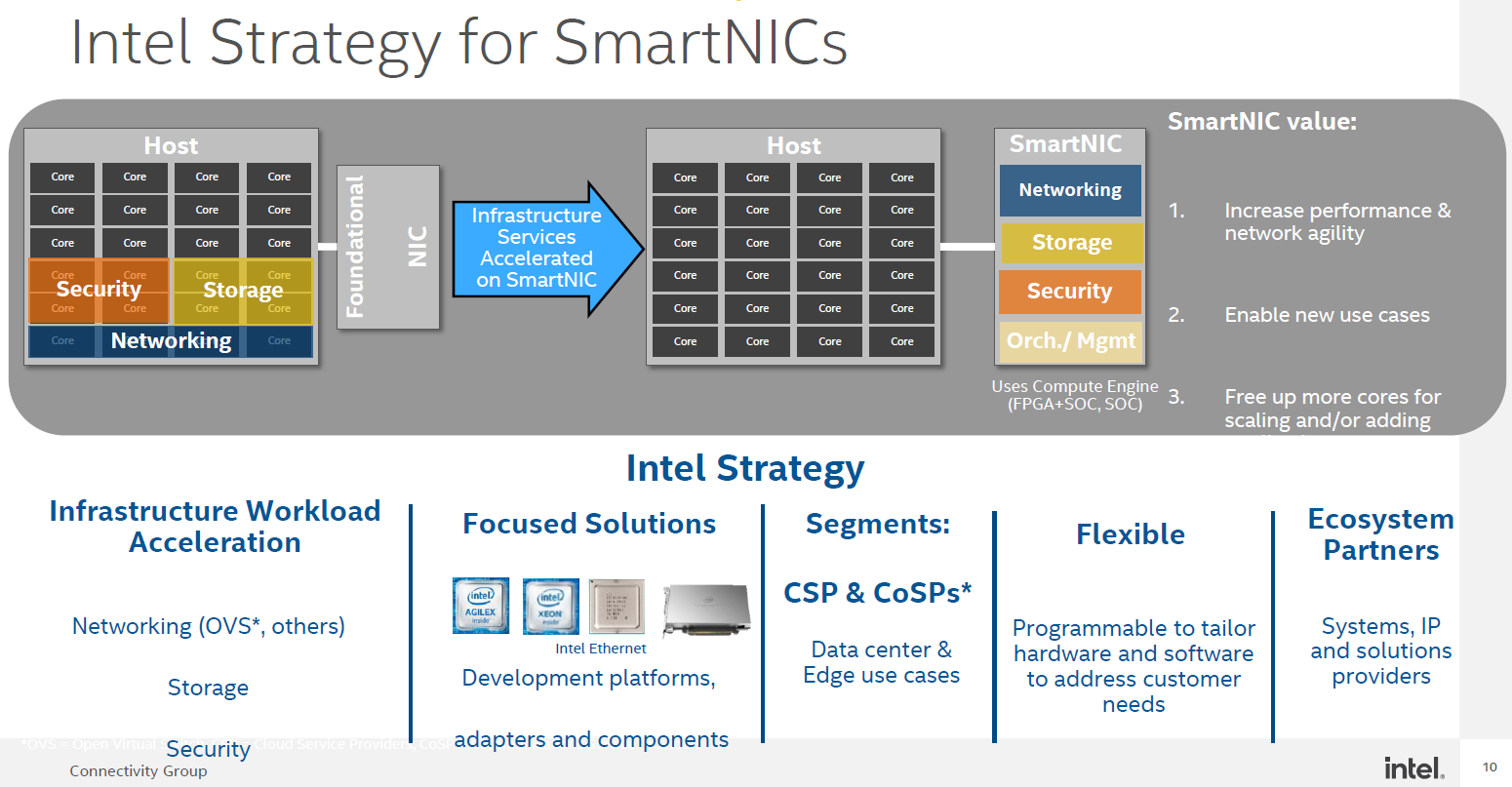

The Intel FPGA SmartNIC Strategy Context

The world of network interface cards is getting more intelligent. This is the next step in data center disaggregation. Having more capabilities at the NIC-level allows for more efficient utilization of x86 cores while delivering higher-end features and better security.

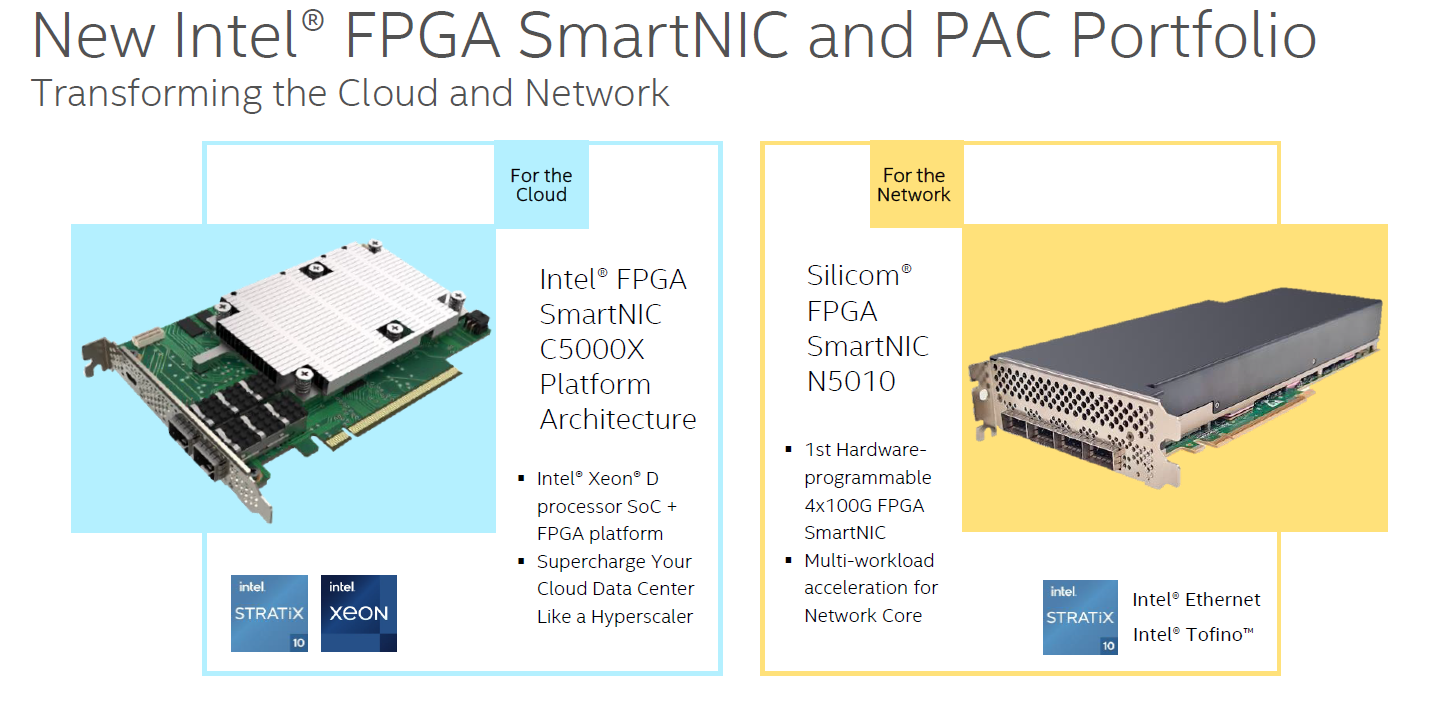

The Silicom FPGA SmartNIC N5010 is part of today’s duo of card announcements from Intel and is designed more for heavy network workloads. The other announcement is a NIC designed for the cloud server. You can read about the other Intel FPGA SmartNIC announced here.

This solution is the higher-bandwidth option of the two. While the Inventec unit is designed to be used in a standard cloud server, the Silicom unit is designed to be used in a system providing core network functionality. Operators are increasingly looking to replace proprietary form factors from legacy telecom equipment providers with more standard servers and form factors. As part of this, we have seen FPGA vendors look to gain early entry into the space. An example of a Xilinx-based solution might be something like a Xilinx T1, although there Xilinx is focused on a solution with pre-packaged IP providers.

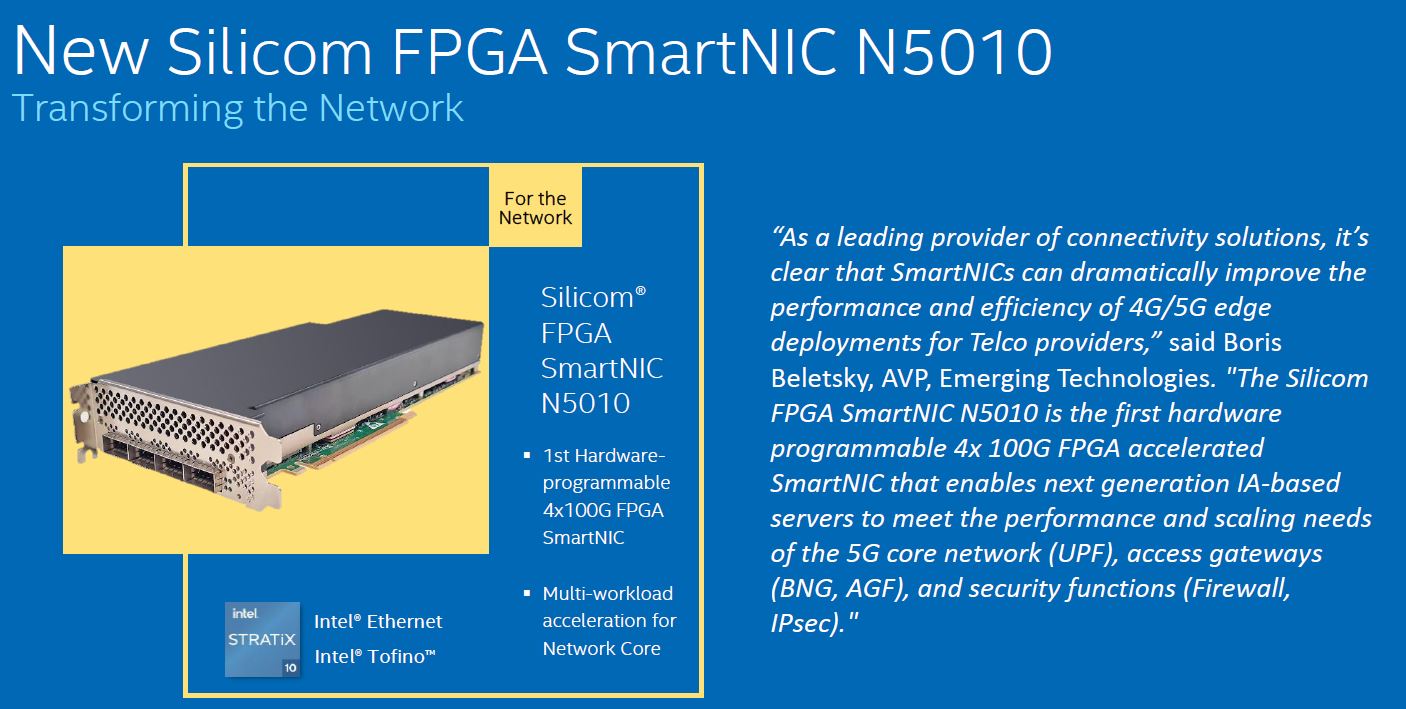

Silicom FPGA SmartNIC N5010

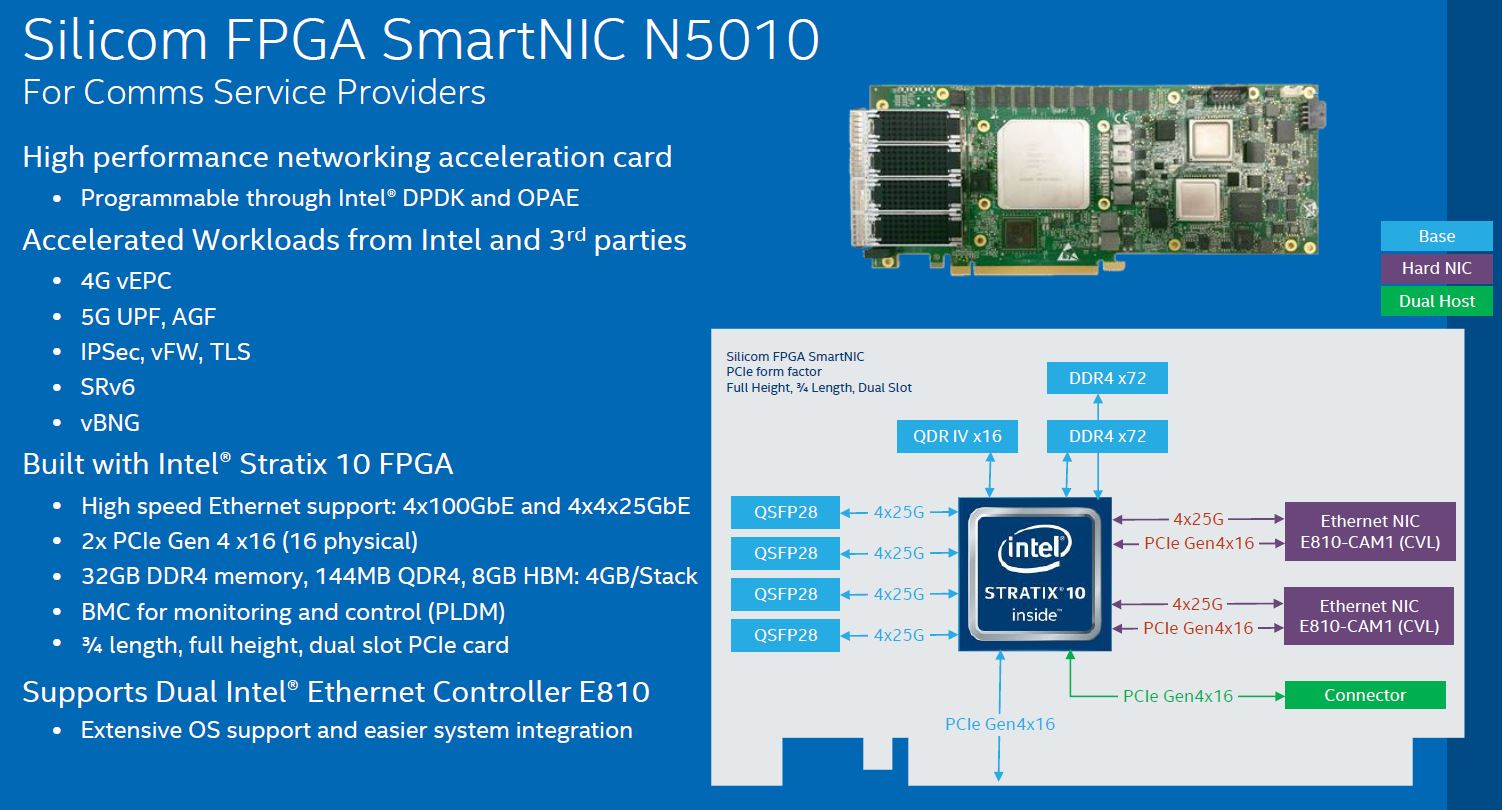

Here is the overview slide that Intel presented on the new FPGA SmartNIC N5010.

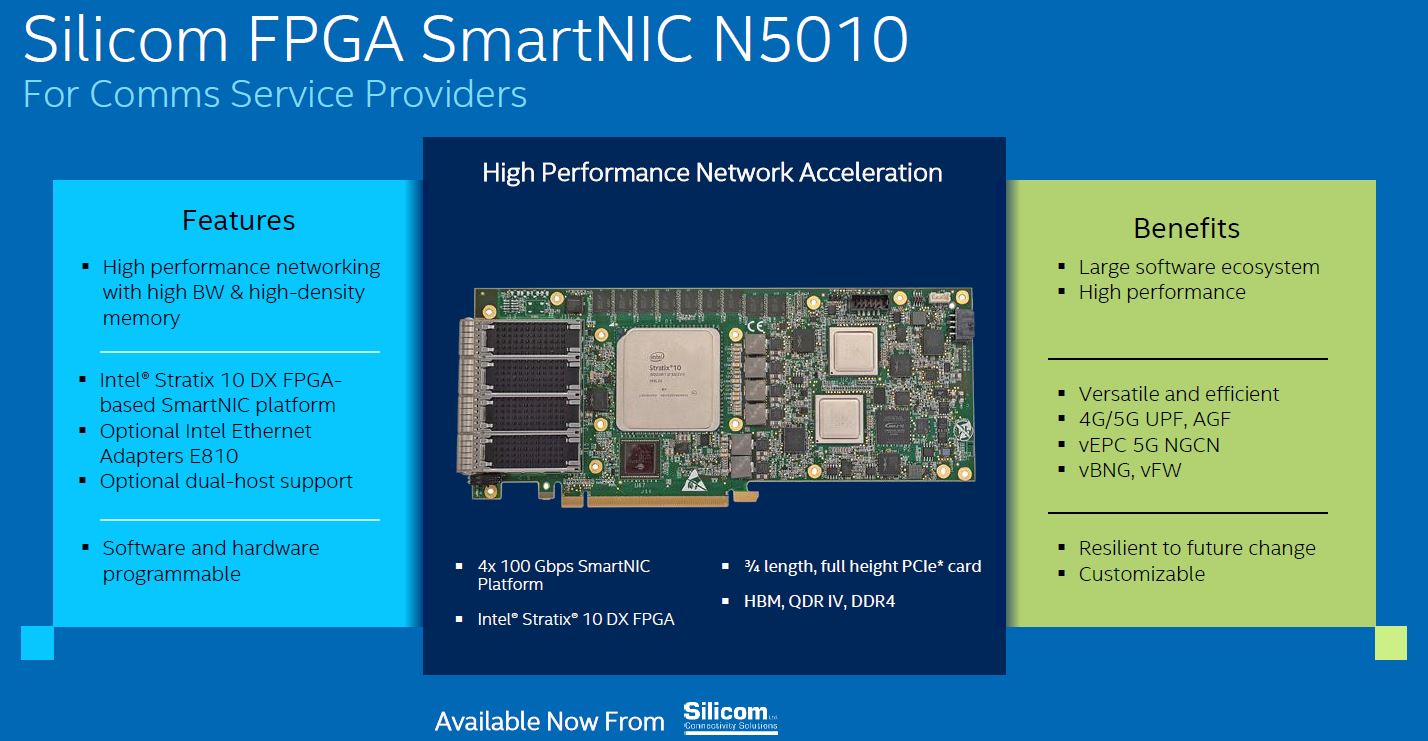

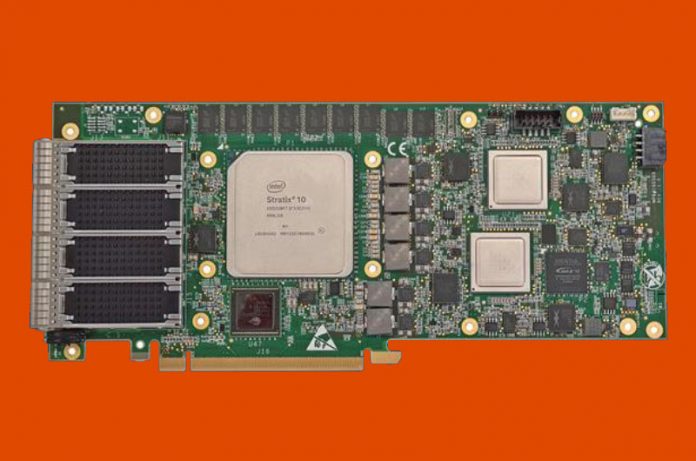

The Silicom FPGA SmartNIC N5010 uses an Intel Stratix 10 DX FPGA with 8GB HBM onboard. STH readers who are not familiar with FPGAs may still know HBM (high-bandwidth memory) from high-end GPUs such as the NVIDIA A100. As memory bandwidth becomes an increasingly important aspect of system performance, HBM will continue to proliferate beyond GPUs and FPGAs. In SmartNICs and DPUs, HBM can be used for some lookup tables and other features that are important in certain networking workloads. Along with the HBM, there is another 32GB of ECC DDR4 memory onboard.

What makes this solution impressive is that it handles 4x 100Gb connections. There are dual Intel E810 (Columbiaville) foundational NICs onboard. If those sound familiar, they are the same chips that one may find on a 100GbE NIC. We covered the Intel Ethernet 800 Series 100GbE NIC Launch a few years ago, but the cards with the chips only started to see more volume production in the last quarter or two.

From the block diagram, one may see that there is a PCIe Gen4 x16 link to the host, but also a second PCIe Gen4 x16 connector noted. Silicom has done a lot of work with multi-host adapters and we actually have some Red Rock Canyon / Fulcrum-based adapters in the lab. The 4x 100Gbps ports add up to 400Gbps, which takes roughly a PCIe Gen5 x16 slot to carry, so a single PCIe Gen4 x16 slot (about 252Gbps per direction) is not enough to deliver the bandwidth this card offers to the host. In some applications, the FPGA is designed to do most of the work, minimizing host transfers. In other applications, the second PCIe Gen4 x16 link can be connected via a cabled connector and is often attached to a second host CPU to avoid the CPU interconnect. Taking a step back, if you saw our Dell and AMD Showcase Future of Servers 160 PCIe Lane Design piece, it is easy to see how this may evolve in the future.
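To make the slot math above concrete, here is a rough back-of-the-envelope sketch. It uses the published per-lane signalling rates for PCIe Gen4 and Gen5 (16 and 32 GT/s) and the 128b/130b line encoding, and it ignores packet and protocol overhead, so real usable throughput will be somewhat lower. The function and variable names are our own for illustration and do not come from Intel or Silicom.

```python
# Rough PCIe vs. network bandwidth comparison.
# Assumption: only 128b/130b line encoding is accounted for; TLP/DLLP
# protocol overhead is ignored, so real-world numbers are lower.

GT_PER_LANE = {4: 16.0, 5: 32.0}   # raw signalling rate per lane, in GT/s
ENCODING_EFFICIENCY = 128 / 130    # 128b/130b encoding used by Gen3 and later


def pcie_gbps(gen: int, lanes: int = 16) -> float:
    """Approximate one-direction link bandwidth in Gbps."""
    return GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY


NETWORK_GBPS = 4 * 100  # four 100Gbps ports on the card

options = [
    ("1x PCIe Gen4 x16", pcie_gbps(4)),
    ("2x PCIe Gen4 x16", 2 * pcie_gbps(4)),
    ("1x PCIe Gen5 x16", pcie_gbps(5)),
]

for label, gbps in options:
    verdict = "covers" if gbps >= NETWORK_GBPS else "falls short of"
    print(f"{label}: ~{gbps:.0f} Gbps, {verdict} {NETWORK_GBPS} Gbps of ports")
```

By this estimate a single Gen4 x16 link lands around 252Gbps, while either two Gen4 x16 links or one Gen5 x16 link land around 504Gbps before protocol overhead, which is why the second cabled connector matters when the host needs to see most of the traffic.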

Final Words

Since this is more of an FPGA-based solution, it falls into the category of a SmartNIC versus a DPU. For the C5020X, also announced today, we went into a full “is it a DPU?” analysis. Here, this is a more traditional SmartNIC design, with a twist. This solution offers an enormous amount of power and actually consumes up to 225W. Silicom has a few different options available, including single-slot and actively cooled versions.

This is an extraordinarily exciting time in networking. Solutions such as the FPGA SmartNIC N5010 are enormous steps forward from the Intel FPGA Programmable Acceleration Card N3000 for Networking we saw last year. Not only are we seeing an explosion of new network card options for 5G operators and networks, but we are also seeing a simultaneous interest in increasing NIC prominence within the data center to extract control planes and provide more offload capabilities. The N5010 is a great example of some of the cool technology coming in this area.

Patrick,

Does the N5010 support Host RDMA offload as well?

I don’t see any mention in the article nor on the Silicom web site.

The 810s do support both iWARP and RoCEv2, however HBM/DDR memory is not needed for that. Burst absorption and serious lookup tables (L2/IPv4/IPv6) would make a killer vBNG though.

Thanks. As you said, an extraordinarily exciting time in networking.