For years, the typical server has been defined by the dual-socket market. At the same time, a number of organizations have noticed that crossing the QPI/UPI bus between sockets is less than ideal. Processors have crept up not just in capacity, but also in price and power consumption, which is leading many to take a look at single-socket solutions again. AMD EPYC has been pushing this space, so inevitably, we are going to see an Intel Xeon response. Enter the Supermicro SYS-1019P-WTR, a single-socket server with a full front panel loadout and expansion slots, all in a shorter-depth package. In our review, we are going to take a look at this solution.

Supermicro SYS-1019P-WTR Hardware Overview

Lately, we have been splitting our hardware overview section into two parts. First, we are going to discuss the external features of the system. We are then going to discuss the internal components that make the server work.

Supermicro SYS-1019P-WTR External Overview

The front of the SYS-1019P-WTR shows off the server’s slim 1U chassis. Adorning the front are ten 2.5″ drive bays. These are SATA/SAS bays, but two of them can be wired as NVMe SSD bays so long as a riser card is used. There is also room for the usual power buttons and LED status lights along with two USB 3.0 ports.

On the rear of the system, we have two 500W 80Plus Platinum power supplies on one side and I/O expansion slots on the other (two full-height, one half-height). We will show the riser configuration in detail in the internal overview section. In the middle of the chassis, we have the primary I/O block. There are two USB 2.0 and two USB 3.0 ports along with legacy serial and VGA ports. Atop the USB 2.0 ports is an out-of-band management port. Something that is a bit different is that this system uses the Intel C622 chipset to provide dual 10GBASE-T networking via the PCH.

One item that may be especially important to our readers is the depth of the system. This server is only 23.5″ (597mm) deep. One aspect of this single-socket design that will appeal to many buyers is that the server is indeed shorter than the average dual-socket server.

Supermicro SYS-1019P-WTR Internal Overview

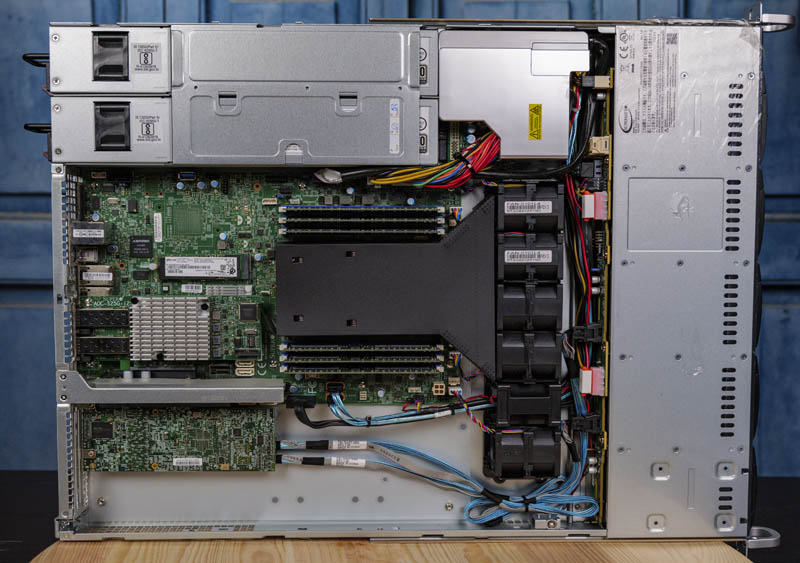

Inside the system, we see a standard server layout. From front to rear, we have the drive bays, then the backplane, fans, CPU, and finally the expansion slots and PSUs.

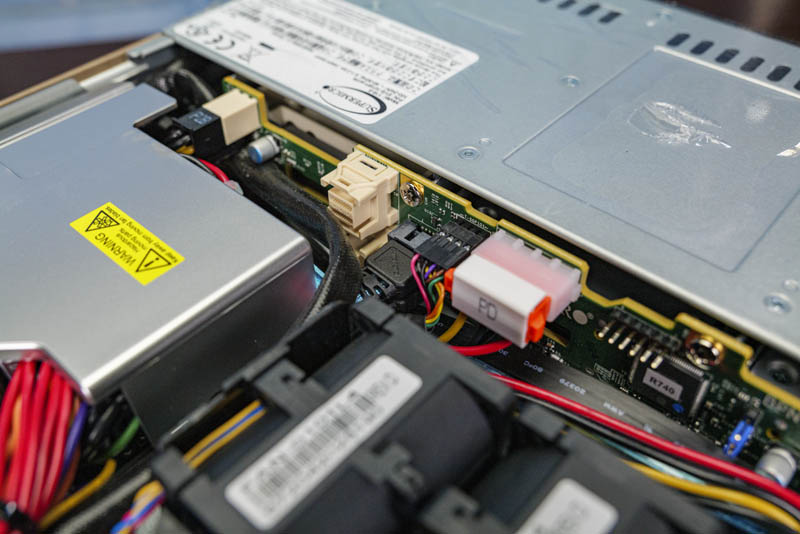

Since we covered the ten 2.5″ bays, we wanted to show the two white connectors on the storage backplane. One can add NVMe capability to this server by adding cables and a PCIe riser that connects to these two ports.

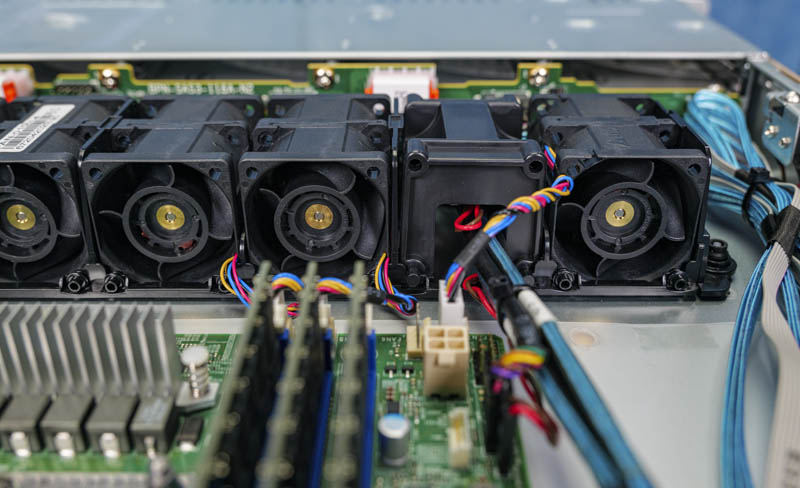

Behind the storage backplane are five counter-rotating fan modules. Perhaps one of the more interesting features is a blank built in a fan form factor that provides a cabling conduit through the fan area.

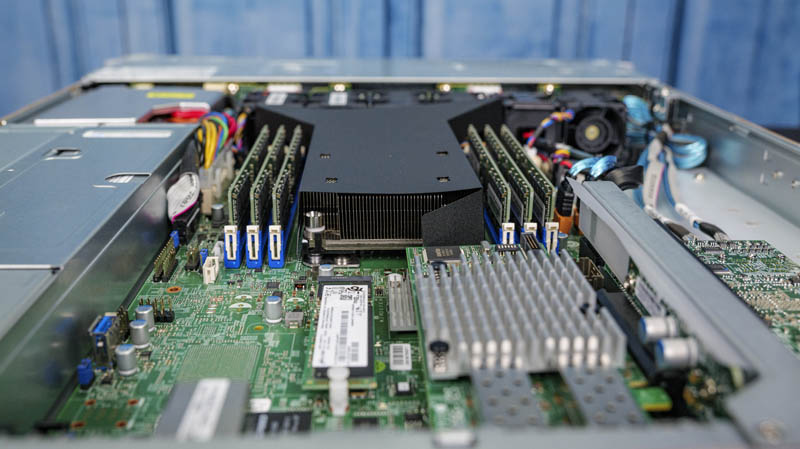

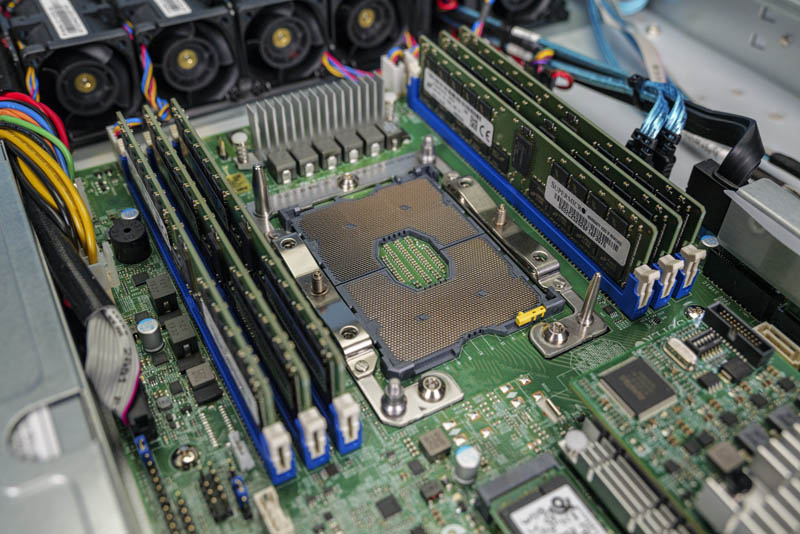

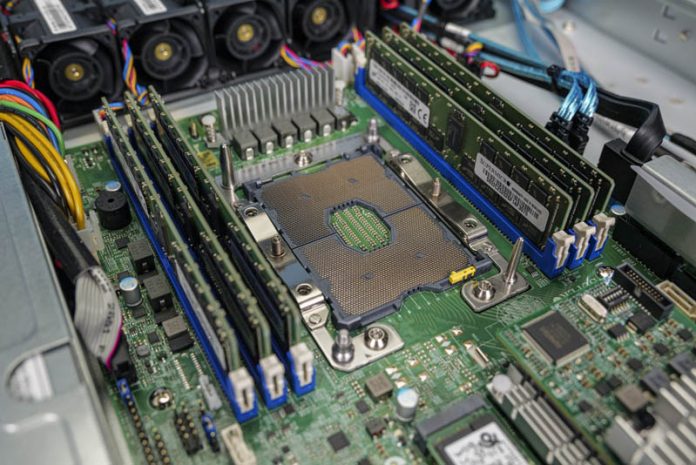

Most of these fans have the primary duty of keeping the Intel Xeon Scalable processor cool. The SYS-1019P-WTR accepts first- or second-generation Intel Xeon Scalable processors, including 2nd Gen Intel Xeon Scalable Refresh SKUs. Although the system supports CPUs up to 205W, some SKUs are excluded, such as the Intel Xeon Gold 6250.

The CPU socket is flanked by six DIMM slots. Using 256GB DIMMs, one can theoretically get up to 1.5TB of memory per socket. Given DIMM pricing, we see 96GB to 384GB configurations as the most common. Technically, the Xeon Scalable platforms can support two DIMMs per channel (2DPC) instead of the single DIMM per channel (1DPC) mode used here. If you want higher memory capacity, it may be worth looking at a platform with twelve DIMMs per CPU in a 2DPC configuration.
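The capacity figures above follow from simple multiplication. The Python sketch below reproduces them; the `capacity_gb` helper is hypothetical, and the mapping of the 96GB to 384GB range onto six 16GB, 32GB, or 64GB DIMMs is our assumption. It also ignores any per-SKU memory limits the CPU itself may impose.

```python
# Per-socket memory capacity arithmetic for the DIMM options discussed above.
# capacity_gb is a hypothetical helper, not part of any Supermicro tool.
# This ignores any per-SKU memory limits the CPU itself may impose.

GB_PER_TB = 1024  # DIMM capacities use binary-style units, so 1536GB is 1.5TB


def capacity_gb(channels: int, dimms_per_channel: int, dimm_gb: int) -> int:
    """Return total memory (GB) for one socket with every slot populated."""
    return channels * dimms_per_channel * dimm_gb


# SYS-1019P-WTR: six memory channels at one DIMM per channel (1DPC)
this_server = capacity_gb(channels=6, dimms_per_channel=1, dimm_gb=256)

# A hypothetical twelve-slot platform running two DIMMs per channel (2DPC)
twelve_slot = capacity_gb(channels=6, dimms_per_channel=2, dimm_gb=256)

print(f"6 x 256GB (1DPC): {this_server}GB = {this_server / GB_PER_TB}TB")
print(f"12 x 256GB (2DPC): {twelve_slot}GB = {twelve_slot / GB_PER_TB}TB")

# Assumed common configurations: six 16GB, 32GB, or 64GB DIMMs
for dimm_gb in (16, 32, 64):
    print(f"6 x {dimm_gb}GB = {capacity_gb(6, 1, dimm_gb)}GB")
```

Running it prints 1536GB (1.5TB) for this server and 3072GB (3.0TB) for a twelve-slot 2DPC platform, which is why the 2DPC route is the one to consider when capacity matters more than the compact chassis.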

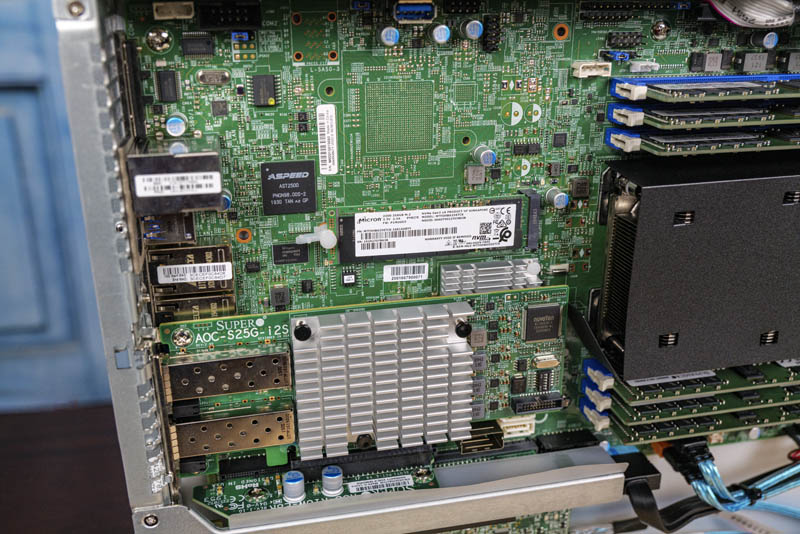

Directly behind the CPU, we have an I/O expansion area. One can see the ASPEED AST2500 BMC and, underneath that, an M.2 slot. This M.2 slot accepts either NVMe (PCIe Gen3 x4) or SATA SSDs in M.2 2280 (80mm) or 22110 (110mm) sizes. One can also see the internal USB Type-A header here, just below the power supplies.

The first riser we are looking at is the low-profile PCIe Gen3 x8 slot, which sits above some of the motherboard’s I/O blocks. In our test system, this slot holds the Supermicro AOC-S25G-i2S, a dual 25GbE NIC we have previously reviewed.

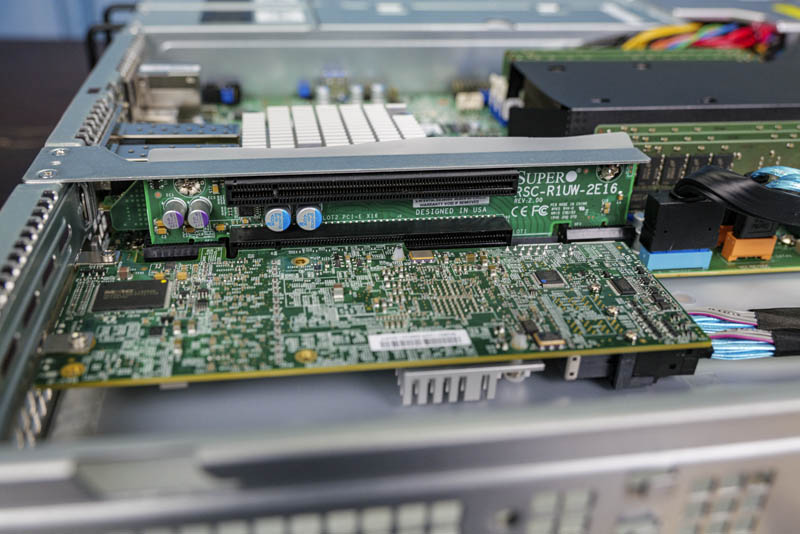

The “W” in SYS-1019P-WTR denotes WIO in Supermicro naming conventions. WIO means that the motherboard does not extend across the entire 1U chassis. As a result, the PCIe risers can handle two add-in cards instead of one. Here we get two PCIe Gen3 x16 slots. One of these slots has our Supermicro-Broadcom SAS 3108 RAID controller.

Although our system is wired to use the Broadcom controller’s SAS ports plus two SATA ports for the front bays, the motherboard also offers two gold SATA ports for SATADOMs along with ten onboard SATA ports. To lower server costs, one can drive all ten front panel bays as SATA using only the motherboard ports. Alternatively, one could use two SATADOMs with the SAS controller and get ten front panel 2.5″ bays, two SATADOM drives, and an M.2 drive, for a total of thirteen drives in that configuration.

This is actually quite a bit of expansion in a 23.5″ chassis. Larger dual-socket platforms offer more, but thirteen SSDs, a 28-core 205W TDP CPU, six DIMMs, and three PCIe expansion slots (40 lanes’ worth) is a lot for a chassis this compact.
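The drive and lane totals above can be checked with a short Python tally. This is only a sketch: the slot and drive labels are our own, and it assumes the configuration with the SAS controller driving the front bays plus two SATADOMs and an M.2 drive.

```python
# Tally of the expansion described above. Slot widths and drive counts come from
# the review text; the dictionary labels are illustrative only.

pcie_slots = {
    "low-profile riser slot": 8,  # PCIe Gen3 x8 (dual 25GbE NIC in our test system)
    "WIO riser slot A": 16,       # PCIe Gen3 x16 (SAS 3108 RAID controller)
    "WIO riser slot B": 16,       # PCIe Gen3 x16
}

drives = {
    "front 2.5in bays": 10,  # driven by the SAS controller in this configuration
    "SATADOMs": 2,           # via the two gold SATA ports
    "M.2 SSD": 1,            # NVMe or SATA, 2280 or 22110
}

# The M.2 slot's own PCIe x4 link is not counted among the three expansion slots.
total_lanes = sum(pcie_slots.values())
total_drives = sum(drives.values())

print(f"PCIe lanes across the three expansion slots: {total_lanes}")
print(f"Total drives: {total_drives}")
```

The x8 plus two x16 slots account for the 40 lanes, and 10 + 2 + 1 gives the thirteen drives.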

Next, we are going to take a look at our test configuration, topology, and management before getting to our performance, power consumption, and final words.

One thing I’d like you to focus on more is field serviceability. Since you mention the continued lack of a service guide plus the small-print SN labels, I do not understand the 9.1 design grade; why a server gets an ‘aesthetics’ grade is another point of contention.

Regarding field serviceability and what I would file under design (in general, not necessarily specific to this review):

– Are the PCIe slots tool-less?

– Are the fan connectors on the motherboard and easily reachable with the fan cables or do the fans slot into connectors directly?

– Are cable guides available at all?

– Are cable arms available, and if so, do they stay in place without drooping too much, and how easy are they to use? Do they require assembly, perhaps even with tools?

– How easily are the rails installed (both on the server and in the rack)?

– Are the hard drive caddies tool-less and are they easily slotted in?

– Even seemingly mundane things like PSU and HDD numbering or the lack thereof

All of these cost time and money before and during deployment, and – in my experience – this is an area where Supermicro sorely needs improvement. This is not to say everything is rosy with their direct or indirect competitors; I’ve seen both good and questionable design decisions from HPE, Dell, IBM/Lenovo, Fujitsu, Inspur, Blackcore, Ciara, and Asus.

Still, thanks for the in-depth review.

To confirm – did the server barebones ship with the rackmount rail kit? I can’t see mention of it either on the product page at Supermicro’s website or in the review.

Thank you.