We have looked at several single-node servers from Inspur, and now we have our first multi-node server review from the company. The Inspur i24 is designed to compete in the 2U 4-node, or 2U4N, market, which is a popular sweet spot for mainstream compute density. Since we review so many 2U4N systems at STH, we have a set testing methodology to evaluate these servers in a more realistic environment. This is the first 2U4N system from Inspur that we are reviewing, so we are excited to see how it performs in our testing.

Inspur i24 2U4N Server Overview

Since this is a more complex system, we are first going to look at the system chassis. We are then going to focus our discussion on the design of the four nodes. As a quick note to our readers, this is the Inspur NS5162M5 system, but Inspur has a friendlier product name, the “i24.” As a result, we are going to use i24 in this review.

Inspur i24 Chassis Overview

The Inspur i24 is a 2U 4-node system. Fitting four nodes in a single 2U enclosure effectively doubles rack compute density compared with four 1U servers. On the front of the chassis, one gets one of two options: either 12x 3.5″ drives or 24x 2.5″ drives. With 24x 2.5″ drives, each node gets six bays in either a 6x SATA/SAS or a 2x SATA/SAS plus 4x U.2 NVMe configuration. Currently, there is not an all-NVMe solution. Since 2.5″ storage is popular in these platforms, that is the configuration we are testing.
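To make the density claim concrete, here is a minimal sketch of the arithmetic. The 42U rack height is our assumption for illustration and does not come from Inspur's specifications. The helper name `nodes_per_rack` is likewise hypothetical.

```python
def nodes_per_rack(rack_units: int, chassis_units: int, nodes_per_chassis: int) -> int:
    """Number of whole chassis that fit in the rack, times nodes per chassis."""
    return (rack_units // chassis_units) * nodes_per_chassis


RACK_UNITS = 42  # assumption: a common full-height rack, not a figure from the review

# 2U4N chassis such as the i24 versus traditional 1U single-node servers
dense = nodes_per_rack(RACK_UNITS, chassis_units=2, nodes_per_chassis=4)
classic = nodes_per_rack(RACK_UNITS, chassis_units=1, nodes_per_chassis=1)
print(f"2U4N: {dense} nodes, 1U: {classic} nodes, ratio: {dense / classic:.1f}x")

# Front drive bays split evenly across the four nodes
for total_bays in (12, 24):
    print(f"{total_bays} bays -> {total_bays // 4} per node")
```

This ignores rack space lost to switches and PDUs, so real-world node counts will be lower, but the 2x ratio between the two form factors holds.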

There are two other features we wanted to point out on the front of the chassis. First, each node gets power and reset buttons along with LED indicators, all mounted on the rack ears. This is fairly common on modern 2U4N servers to increase density. The other item of note is that the drive bays are not 24 bays spaced evenly as we often see on single-node 2U servers. Instead, we see three air vents in the front of the chassis to help airflow and cooling. We have seen similar designs from other vendors, especially when they are looking to improve cooling in these platforms.

A small feature we like is that Inspur labels the nodes “A, B, C, D” and has labels above the drive slots on the front of the chassis marking A1-A6, B1-B6, C1-C6, and D1-D6. This is a small detail, but in older-generation 2U4N servers it was not always so easy to tell which drive corresponded with which node.
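The labeling scheme is simple enough to express in a few lines, which can be handy when mapping drives to nodes in an inventory script. This is only a sketch: it assumes the 24 bays are indexed 0 to 23 and grouped contiguously by node, which may not match the physical left-to-right order on the chassis. The function name `bay_label` is hypothetical.

```python
NODES = "ABCD"       # node letters as printed on the chassis
BAYS_PER_NODE = 6    # 24x 2.5" bays shared by four nodes


def bay_label(index: int) -> str:
    """Map a zero-based bay index (0..23) to a label such as 'A1' or 'D6'.

    Assumption: bays are grouped contiguously per node in index order.
    """
    total = len(NODES) * BAYS_PER_NODE
    if not 0 <= index < total:
        raise ValueError(f"bay index must be in 0..{total - 1}, got {index}")
    node, slot = divmod(index, BAYS_PER_NODE)
    return f"{NODES[node]}{slot + 1}"


print([bay_label(i) for i in range(24)])
```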

In the rear of the chassis, we have power supplies in the middle with two nodes on either side. These redundant hot-swap 80Plus Platinum power supplies are Great Wall units that can provide 1.7kW-2kW of power at higher voltages or up to 1.08kW at 100-120V. Inspur has other power supply options including 80Plus Titanium units and single PSU configurations.

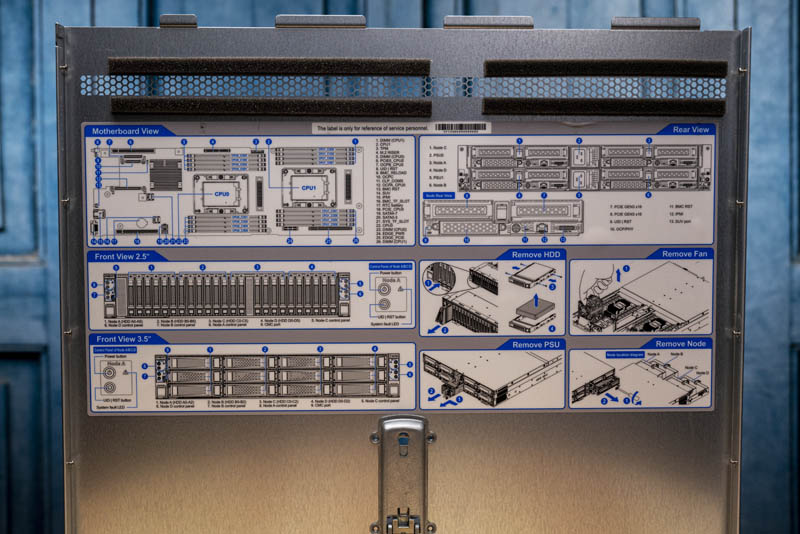
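As a rough illustration of what those power supply ratings mean per node, the sketch below divides a single PSU's capacity across the four nodes. It assumes a redundant pair where one PSU must be able to carry the whole chassis, and the `chassis_overhead_w` parameter for fans, drives, and the backplane is a hypothetical placeholder rather than a measured figure.

```python
def per_node_budget_w(psu_watts: float, nodes: int = 4, chassis_overhead_w: float = 0.0) -> float:
    """Power available per node if a single PSU has to carry the full chassis.

    Assumption: redundant operation, so only one PSU's rating counts.
    chassis_overhead_w is a hypothetical allowance for shared components.
    """
    if nodes <= 0:
        raise ValueError("nodes must be positive")
    return (psu_watts - chassis_overhead_w) / nodes


# PSU ratings quoted in the review
for label, watts in (("100-120V", 1080), ("high-line, low end", 1700), ("high-line, high end", 2000)):
    print(f"{label}: {per_node_budget_w(watts):.0f} W per node")
```

With no overhead allowance, this works out to 270W per node at 100-120V and 425W to 500W per node at higher voltages, which is why dense dual-socket configurations generally want the higher-voltage input.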

Inspur has a service cover that runs along the middle of the top of the system. This is useful as one can service components like the backplane without having to fully remove the chassis from its rack. The cover even has basic field service information.

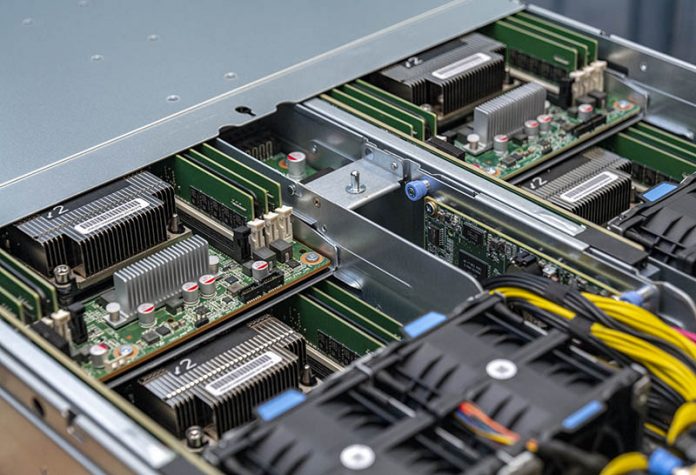

Inside, one can see four pairs of 80mm fans. We will see how these perform in our efficiency testing. The other common design for 2U4N systems is to put smaller 40mm fans on each node. Typically, arrays of larger fans like the ones the i24 uses translate to lower power consumption.

Here is a view of the chassis airflow looking from the rear of the chassis with the power supplies and nodes removed to give you another view of the internal layout.

Inside the chassis, one can see the AST1250 chassis management controller or CMC. Inspur is able to collect chassis-level data. A few vendors are making these chassis management modules available through a single management NIC to further reduce cabling, but that is not what Inspur is doing here. We are going to discuss the CMC more in our management section.

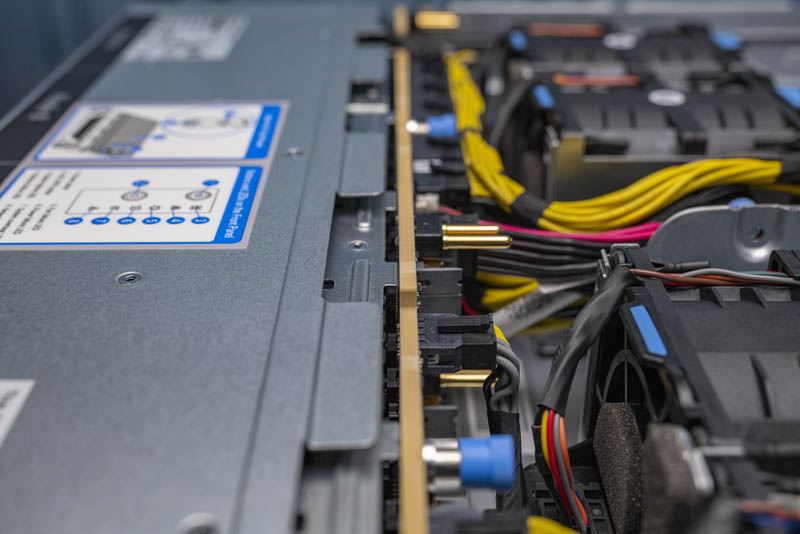

Another item we wanted to point out is the backplane/midplane PCB. This is a very thick PCB and the nodes use high-density connectors to directly connect to the drive backplane and get power. This is a streamlined design compared to some other solutions we have seen.

One other small item on the chassis is that the 2.5″ model we are reviewing is 31.7 inches deep. The 3.5″ model is actually deeper at 33.3 inches.

Now that we have looked at the exterior, it is time to look at what each node in the i24 offers.

