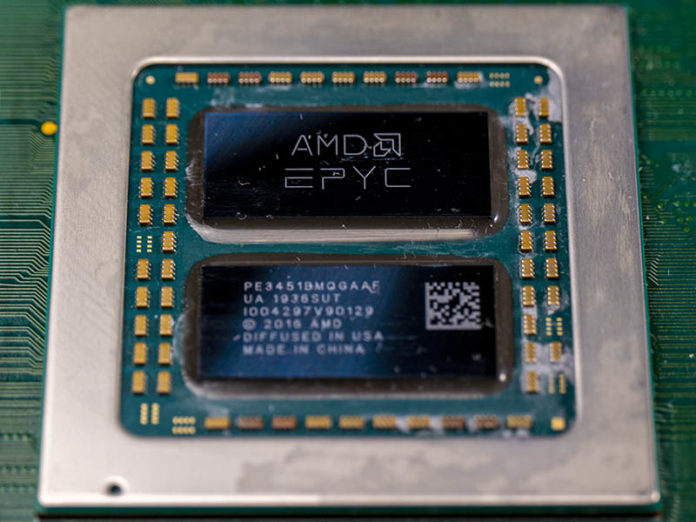

It was a cold day in London in 2018 when we witnessed the AMD EPYC Embedded 3000 Series launch. While we were able to test the AMD EPYC 3251 a few months later, that was an 8-core part, not one of the 12- or 16-core parts at the top of the stack. Our readers had questions, as did we. Starting in late November 2019, the top-end dual-die EPYC 3000 parts began shipping in volume, and we have been working to get one ever since. We can now bring you the fruits of those efforts: a review of the AMD EPYC 3451 16-core embedded processor. This is a dual-die EPYC design from the Naples generation with several features that we wanted to cover in our review.

AMD EPYC 3451 and EPYC 3001 Series Platform Video

Since this review is part of a push in the new year, we also have a video discussing a good portion of what is covered here.

Check it out if you just want to learn about the EPYC 3451 and the EPYC Embedded 3001 series. Of course, we go into more detail in this piece.

AMD EPYC 3451 Overview

Key stats for the AMD EPYC 3451: 16 cores / 32 threads with a 2.14GHz base clock, a 2.45GHz all-core boost, and a maximum 3.0GHz turbo boost. There is 32MB of onboard L3 cache. The CPU features a configurable 80-100W TDP. List price is $778.

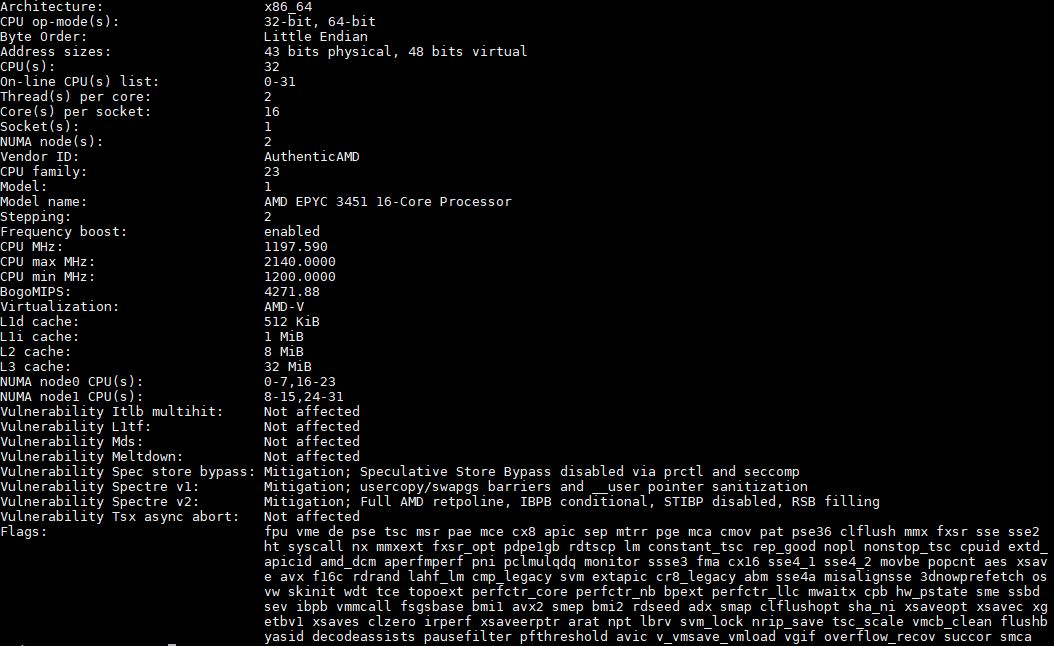

Here is the lscpu output for the AMD EPYC 3451:

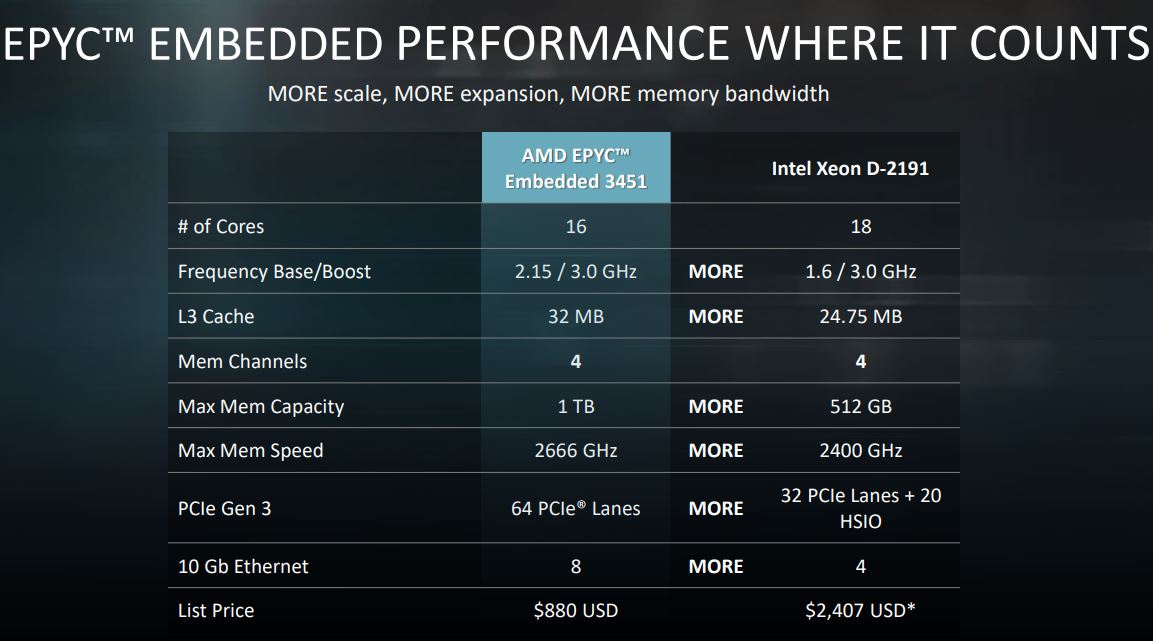

You will notice a few changes since the part launched. The 2.14GHz base clock is 10MHz lower than the original spec. We covered how this became a configurable TDP part in our AMD EPYC 3000 Line Gets Updated Adding and Dropping Models piece. We also see a list price that is $102 lower than the one on the original slide.

Most of those changes amount to a refinement of the stack, especially since the “Rome” generation has launched on the socketed server side in the interim.

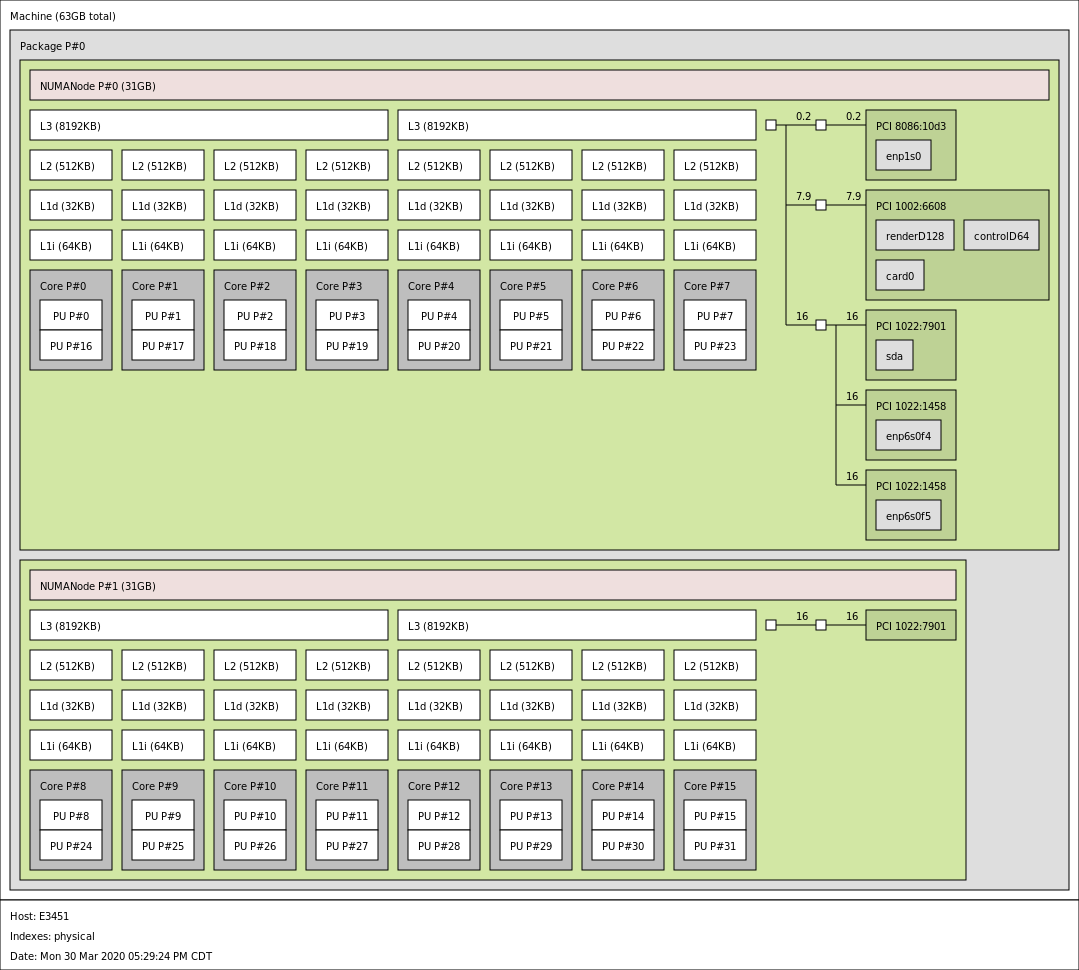

Since the AMD EPYC 3451 has a “1” as the last digit, we know that it is a “Naples” generation part. We also know that this is a dual-die part with two 8-core dies. As a result, this system has two distinct NUMA nodes, much like a two-socket 8-core system.

On one hand, Intel Xeon D parts in this space currently have only a single NUMA node. On the other hand, the two-die design is a much more familiar setup for many segments than the four-NUMA-node EPYC 7001 CPUs.
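On Linux, the two NUMA nodes should be visible without special tooling: `lscpu` and `numactl --hardware` both report them, and the same data lives in sysfs. Below is a minimal Python sketch, assuming a Linux host that exposes the standard `/sys/devices/system/node/nodeN/cpulist` files; the function names `parse_cpulist` and `numa_nodes` are our own, hypothetical helpers. On a 16-core / 32-thread EPYC 3451 with SMT enabled, we would expect two nodes with 16 logical CPUs each.

```python
from __future__ import annotations

import re
from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a Linux cpulist string such as '0-7,16-23' into CPU IDs."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # memory-only nodes report an empty cpulist
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_nodes(root: str = "/sys/devices/system/node") -> dict[int, list[int]]:
    """Map each NUMA node ID to the logical CPUs Linux reports for it."""
    nodes: dict[int, list[int]] = {}
    base = Path(root)
    if not base.is_dir():
        return nodes  # not Linux, or sysfs is not mounted
    for node_dir in base.glob("node*"):
        match = re.fullmatch(r"node(\d+)", node_dir.name)
        cpulist = node_dir / "cpulist"
        if match and cpulist.is_file():
            nodes[int(match.group(1))] = parse_cpulist(cpulist.read_text())
    return nodes


if __name__ == "__main__":
    # Print one line per NUMA node with its logical CPU count.
    for node, cpus in sorted(numa_nodes().items()):
        print(f"node{node}: {len(cpus)} logical CPUs")
```

Reading sysfs directly avoids depending on `numactl` being installed, which is not a given on minimal embedded images.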

Having a two-die solution is extremely important because it gives the system up to 64x PCIe Gen3 lanes. The Intel Xeon D-1500 / Xeon D-1600 has 24x PCIe Gen3 lanes and 8x Gen2 lanes, while the Intel Xeon D-2100 series gets 32 dedicated PCIe Gen3 lanes plus 20 HSIO lanes, some of which can be configured for PCIe in a system. While the single-die EPYC 3000 series competes directly with the Xeon D-1500 / D-1600 and offers more performance, the dual-die parts offer something even bigger. Indeed, the dual-die AMD EPYC 3451 offers more PCIe lanes than a single-socket Intel Xeon Scalable server (48), which is great when one needs NICs, accelerators, and storage.
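To make the lane arithmetic explicit, here is a small Python tabulation using only the figures quoted in this review: 32 PCIe Gen3 lanes per EPYC 3000 die, plus the Intel lane counts above. The `Platform` structure and helper names are illustrative. The sketch counts only dedicated Gen3 lanes, so the Xeon D-1500 / D-1600 Gen2 lanes and the Xeon D-2100 HSIO lanes (only some of which can be PCIe) are noted but not added.

```python
from __future__ import annotations

from dataclasses import dataclass

LANES_PER_EPYC_3000_DIE = 32  # PCIe Gen3 lanes per die, as quoted in this review


@dataclass(frozen=True)
class Platform:
    name: str
    gen3_lanes: int  # dedicated PCIe Gen3 lanes only
    extra: str = ""  # lanes that are not dedicated Gen3, noted but not counted


def epyc_3000_lanes(dies: int) -> int:
    """Total Gen3 lanes scale with the number of dies in the package."""
    return dies * LANES_PER_EPYC_3000_DIE


PLATFORMS = [
    Platform("AMD EPYC 3451 (dual-die)", epyc_3000_lanes(2)),
    Platform("Intel Xeon D-1500 / D-1600", 24, "plus 8x PCIe Gen2"),
    Platform("Intel Xeon D-2100", 32, "plus 20 HSIO, some configurable as PCIe"),
    Platform("Intel Xeon Scalable (1 socket)", 48),
]


if __name__ == "__main__":
    epyc = PLATFORMS[0]
    for p in PLATFORMS:
        note = f" ({p.extra})" if p.extra else ""
        print(f"{p.name:32}{p.gen3_lanes:3d}x Gen3{note}")
    # Dedicated Gen3 lane advantage of the dual-die EPYC over each Intel option.
    for p in PLATFORMS[1:]:
        print(f"EPYC 3451 margin over {p.name}: {epyc.gen3_lanes - p.gen3_lanes} lanes")
```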

We previously tested the Intel Xeon D-2183IT and found it to be a very capable part with 16 cores, quad-channel DDR4-2400, and a 100W TDP. That makes it a direct competitor to the EPYC 3451 across many features. There is one big difference: price. The MSRP for the Intel Xeon D-2183IT is $1764, roughly $1000 more than the EPYC 3451, or about a 127% premium. In our review, we are going to see if it is worth that kind of premium.
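The premium math is simple, but worth spelling out with the list prices quoted above: $1764 − $778 works out to $986, or roughly a 127% premium. Since both parts are 16-core, the same gap also shows up per core. A minimal sketch; the helper name `premium` is ours.

```python
from __future__ import annotations

EPYC_3451_LIST = 778.0       # USD, current list price quoted above
XEON_D_2183IT_MSRP = 1764.0  # USD, MSRP quoted above
CORES = 16                   # both parts are 16-core


def premium(base: float, other: float) -> tuple[float, float]:
    """Return (dollar difference, percent premium) of `other` over `base`."""
    diff = other - base
    return diff, diff / base * 100.0


if __name__ == "__main__":
    diff, pct = premium(EPYC_3451_LIST, XEON_D_2183IT_MSRP)
    print(f"Xeon D-2183IT premium: ${diff:,.0f} ({pct:.0f}%)")
    print(f"Per core: ${EPYC_3451_LIST / CORES:.2f} vs ${XEON_D_2183IT_MSRP / CORES:.2f}")
```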

Test Configuration

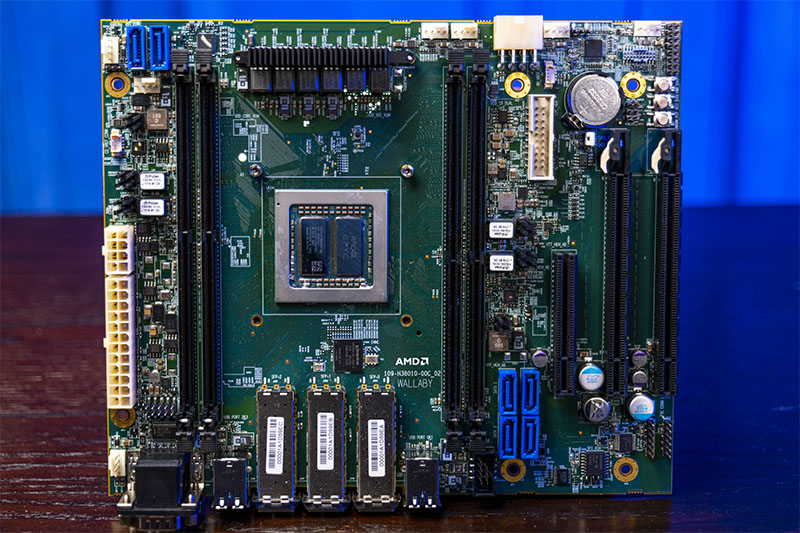

For this testing we are using the updated AMD Wallaby platform:

- System: AMD Wallaby

- CPU: 1x AMD EPYC 3451 16-core

- Memory: 4x 16GB DDR4-2666 DRAM

- OS SSD: 1x Intel DC S3710 400GB Boot

Some of the major updates here are that we get more PCIe and network slots. With the dual-die configuration, we get up to 64 PCIe lanes and up to 8x 10GbE ports: 32 lanes and 4 ports per die.

The Wallaby platform exposes some, but not all, of those features. It does, however, expose more than the version we used for the EPYC 3251 testing and, importantly, has the dual-die part along with quad-channel DDR4-2666 memory support. We usually prefer using production boards from a partner, but for embedded products, we often have to use reference platforms.

Next, it is time to get to our benchmarks.

Reader Comments

The market analysis vs Intel is great. I’d skip the benchmarks and go there after the first page.

Love to see these. Like you said, there need to be more platforms, and someone to actually take advantage of all the expansion capability. I’d like to see Supermicro do a v.2 that does that…

I have wanted to see these AMD EPYC 3000 SoCs in products like Supermicro Flex-ATX boards since I first read about them in 2018.

We especially use the X10SDV-4C-7TP4F (4-core Xeon-D) for edge storage servers, because these boards are small enough for short-depth 19″ cases that fit into short-depth network gear cabinets.

Furthermore, I like them for their low power consumption.

I know there are cheap NAS boxes that also fit into small racks; however, these Xeon-D Flex-ATX boards are far more flexible, reliable, and powerful (think of clients working while a RAID set needs to be scrubbed, rebuilt, backed up, and so on). It is possible to connect up to 16 SAS drives without requiring a SAS expander.

We also run mail servers, Samba as a domain controller, and FreeRADIUS for VPN and WiFi access management on them.

Why not mini-ITX? Because it is not possible to have a SAS controller and an SFP+ NIC on a mini-ITX board (only one PCIe slot!).

The Supermicro X10SDV-4C-7TP4F has both onboard and provides 2 PCIe slots for further expandability!

I have already seen mini-ITX boards with 10GBase-T interfaces. While this would allow a 10Gbit/s network connection together with a SAS HBA on a mini-ITX board, it is usually not very practical, because a lot of 1G edge switches have 2 or 4 SFP+ ports, but I don’t know of a single 24-port 1G switch with a 10GBase-T interface. Furthermore, I think SFP+ is in general more flexible and cheaper than 10GBase-T. Therefore, I would really like to see mainboards for AMD EPYC 3000 series CPUs that expose the integrated 10Gbit/s networking as SFP+ ports.

I often think about using Supermicro Flex-ATX boards with higher core counts for small 3-node Proxmox HA clusters. However, the boards with 16-core Xeon-D CPUs are too expensive compared to single-socket EPYC 7000 servers. Furthermore, they are hard to find. The EPYC 3451 could be a game changer for this. We could probably fit 3 servers with EPYC 3451 CPUs in one short-depth rack. With at least 4 SFP+ interfaces, we wouldn’t even need a 10Gbit switch for the cluster network, because we could simply connect every node directly to all the other nodes!

I really hope I can read a review of a small AMD EPYC 3000 mainboard with SFP+ ports on STH soon.

Thanks to Patrick for his great work!

Why use such an old kernel (4.4) rather than a newer 5.x kernel that may have optimizations?

Rob, that is just the source code that we are compiling, not the kernel the system runs. We are more likely to add another project, such as Chromium, as a second compile task. We are getting to the point where the kernel compile is so fast that we need a bigger project, even though we compile more in our benchmark than many do.

EPYC3451D4I2-2T

https://www.asrockrack.com/general/productdetail.asp?Model=EPYC3451D4I2-2T#Specifications

Nearly one year since this post and we’re unable to buy any platform rocking a 3451 :(

Getting close to 2 years, and I still cannot get a system with the 3451, or even with other 3000 series parts, that exposes all the 10Gbps PHYs and all the PCIe lanes in a small form factor (two or three OCP 3.0 connectors for 25Gbps NICs would be a nice option, for example). All the solutions I found expose only a very small subset of this SoC’s functionality and are super overpriced for what they provide. There is technically the ASRock Rack board, but I am still not able to order it, and it uses an Intel NIC for networking, which is silly. I asked ASRock Rack and Supermicro, and they said they are “working” on it, but there is still nothing in sight.