Some time ago we were asked to review a unique system from International Computer Concepts (ICC). The ICC Vega R-116i is a custom server designed to run an 8-core CPU at high clock speeds for the high-frequency trading (HFT) market. When it arrived in the lab, we immediately noticed something was different. This was not simply a cobbled-together solution: ICC has customized just about every aspect of the R-116i to meet the needs of its customers. In this review, we are going to show you around the ICC Vega R-116i and explain what makes it unique. Specifically, we are going to point out what ICC does that differentiates this solution from something you could build yourself.

ICC Vega R-116i Hardware Overview

Before we get started with our overview, a note: this server’s photos were shot on the first operational day of our new photo studio, before the new lights and other equipment arrived. If some of the shots are a bit off, apologies in advance.

Looking at the front of the chassis, the ICC Vega R-116i is a 1U server with a distinctive front end. Instead of offering a plethora of storage options, the front holds two 2.5″ hot-swap bays and two power supplies.

In practice, this means that once the server is installed, the primary hot-swap components can all be serviced from the cold aisle. Here you can see the dual 2.5″ SSDs and one of the 600W redundant hot-swap PSUs pulled out of the chassis.

Moving to the rear, we see a fairly typical server rear I/O layout. There are legacy serial console and VGA ports. There are four USB Type-A ports, all for local management if one needs to plug in a KVM cart for servicing. There is a dedicated IPMI port, which one may not have expected based on the processor alone (more on that shortly). One can also find two 1GbE LAN ports powered by Intel i210-AT controllers. These are well-regarded basic 1GbE NICs that have been a mainstay of servers for years, which means they are supported out-of-the-box in virtually every major OS.

A subtle note here: the labeling on this chassis is relatively good. For example, one can see LAN 0 and LAN 1 labeled on the system. That is a very small detail, but one that shows the system designer was thorough.

Moving to the other side of the chassis rear, at the far right one can see two AC power inputs. These inputs are wired through the power distribution board to feed the front-mounted power supplies. This arrangement is what allows all of the hot-swap components to be serviced from the cold aisle.

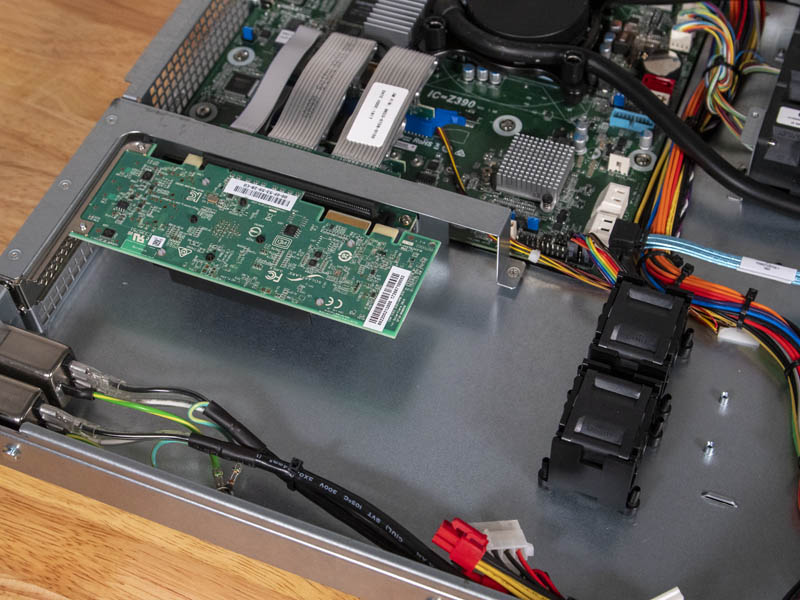

One can see the SolarFlare NIC sitting in the PCIe 3.0 slot. ICC offers either a single x16 slot or two x8 slots, depending on what you want to configure. SolarFlare’s NICs are well regarded by many HFT shops, so this is a common sight. To cool the PCIe cards, ICC uses not just PSU exhaust but also two fans that blow air directly over the PCIe card heatsinks.

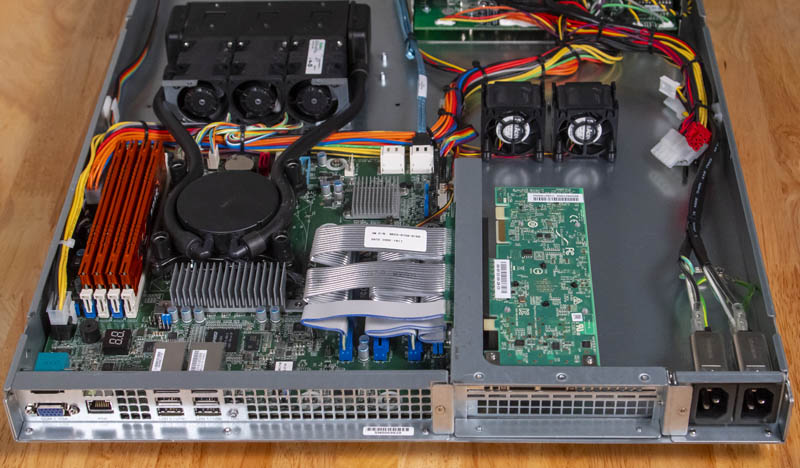

Taking a look from above and to the rear, one can see the general layout of the server’s primary components.

ICC told us, prior to this review, that it custom-designed the chassis for this application. This is not a simple Supermicro rebrand; actual engineering effort went into this solution. The hot-swap bays and PSUs sit forward of the PSU power distribution, so here we are focusing on the meat of the server.

There are three features that will jump out at you immediately:

- The memory is G.Skill Ares with fancy heat spreaders;

- The CPU is water-cooled; and

- The motherboard is based on an Intel Z390 chipset but is oriented like a server.

The bright G.Skill Ares heat spreaders carry some additional significance. The G.Skill F4-3200C14D-32GAO set (a 2x 16GB DDR4-3200 CL14 kit) is not one we could easily find online. It appears that ICC had G.Skill use lower-profile heat spreaders than it normally would so that the modules fit in the chassis. To the casual observer, they may look like off-the-shelf DIMMs, but they appear to be customized. These are not ECC DIMMs, since the CPU does not support ECC, but they are DIMMs designed for memory speeds beyond what current Intel Xeon CPUs offer.
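For context on the DDR4-3200 CL14 rating, a common rule of thumb converts CAS latency from clock cycles into nanoseconds. Because DDR moves data on both clock edges, the memory clock is half the data rate, so one cycle lasts 2000 / (data rate in MT/s) ns. The minimal Python sketch below applies that rule; the DDR4-2666 CL19 comparison is a hypothetical reference point chosen for illustration, not a module we tested.

```python
def cas_latency_ns(cas_cycles: int, data_rate_mts: float) -> float:
    """First-word CAS latency in nanoseconds (rule of thumb).

    DDR transfers data on both clock edges, so the memory clock in MHz is
    half the data rate in MT/s; one clock cycle therefore lasts
    2000 / data_rate_mts nanoseconds.
    """
    return cas_cycles * 2000.0 / data_rate_mts


# The kit in this review: DDR4-3200 at CL14.
kit_ns = cas_latency_ns(14, 3200)

# Hypothetical comparison point, not a tested part: DDR4-2666 at CL19.
reference_ns = cas_latency_ns(19, 2666)

print(f"DDR4-3200 CL14: {kit_ns:.2f} ns")        # 8.75 ns
print(f"DDR4-2666 CL19: {reference_ns:.2f} ns")  # roughly 14.25 ns
```

The rule only covers CAS latency; real-world memory latency also includes other timings and the memory controller, so treat it as a quick comparison rather than a measurement.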

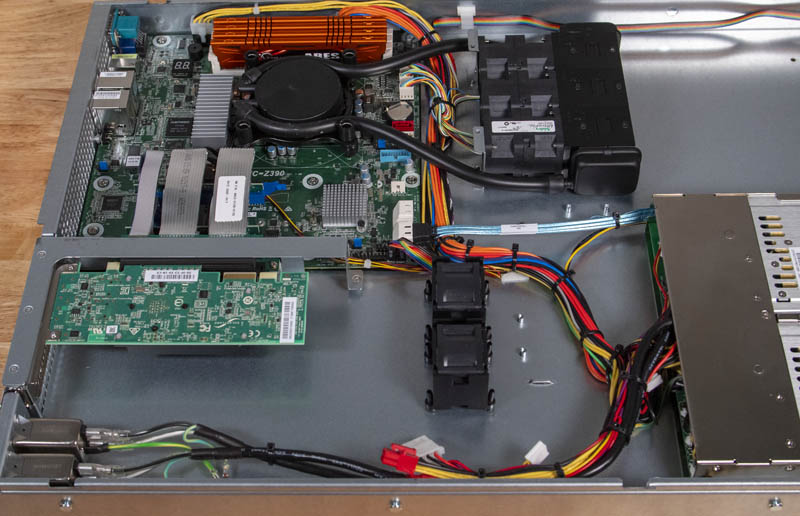

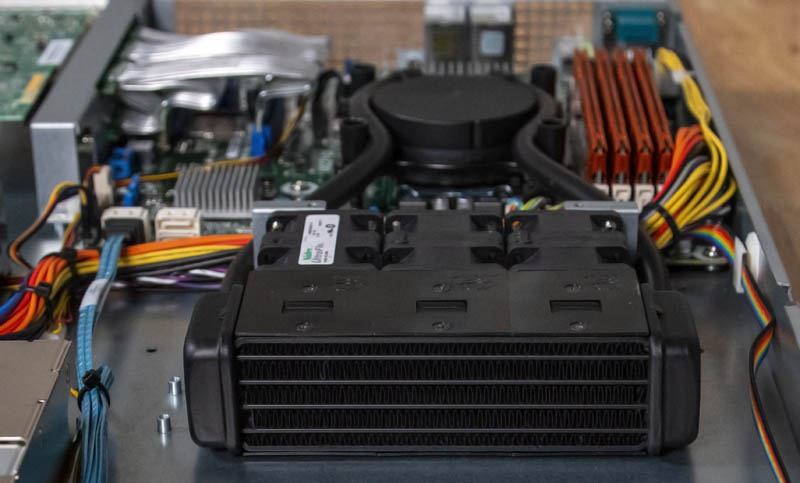

Perhaps the bigger feature is the all-in-one water cooling solution. A water block/pump unit sits on the LGA1151 socket and connects to a 1U radiator with three sets of counter-rotating 1U fans for redundancy. This is ICC’s custom design for the chassis, and it uses high-quality Nidec fans.

Details such as the tube lengths in this sealed all-in-one loop are just right, so the tubing does not interfere with other components. The closed-loop solution does not need facility water and does not require servicing.

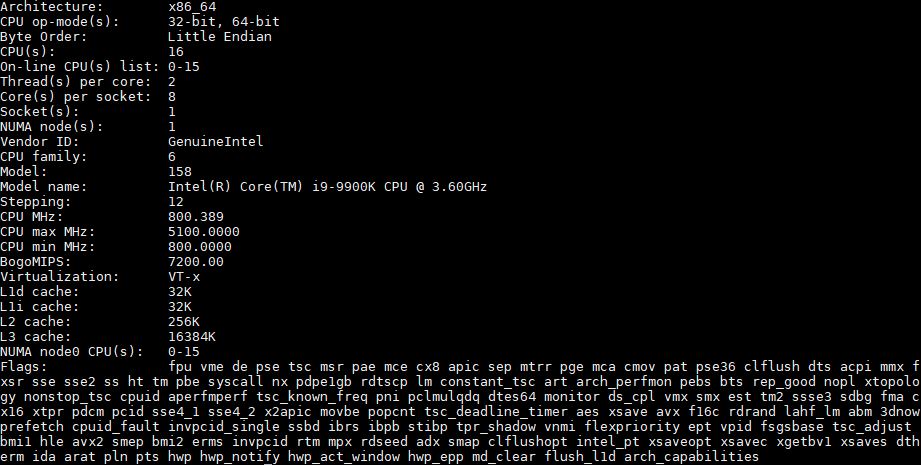

That water cooler is needed because it is cooling an Intel Core i9-9900K processor. This 8-core processor is capable of hitting over 5GHz in this 1U server, making it one of the highest clock speed CPUs you can get in a 1U form factor. In some markets, eight cores are enough, because the fastest clock speeds are what deliver the lowest-latency calculations.

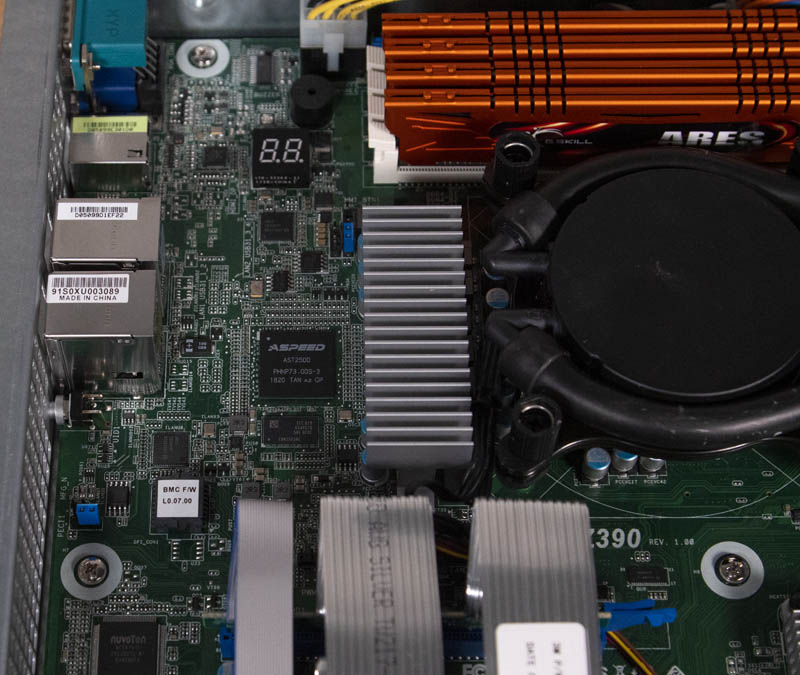

Part of the reason that the water-cooling solution does not interfere is that the motherboard is laid out to accommodate it. This is not an off-the-shelf motherboard. Instead, the IC-Z390 motherboard has features such as a server-style DIMM layout for proper airflow. It also has an Aspeed AST2500 BMC for out-of-band IPMI management via its dedicated NIC.

The ICC Vega R-116i uses a custom-designed motherboard that allows ICC to package an overclocking Intel chipset and CPU into a 1U chassis with management features. Our best guess is that ASRock Rack is the ODM/OEM and that this board is the product of a custom engagement for ICC. Do not quote us on that: some of the markings simply look like ASRock Rack’s, but these boards are not sold publicly.

At a conceptual level, putting a high-clock speed, overclocked desktop CPU into a server has been done in many ways before. What ICC has done is go beyond simply taking consumer parts and fitting 1U coolers. Instead, the company has engineered the system’s components to work well together. When we were first asked to review the system, I was skeptical about how much was custom versus off-the-shelf. After tearing the system apart, I can say that ICC truly went the extra mile to customize it. It shows in the serviceability and usability of the platform.

Next, we are going to look at the Vega R-116i topology, management, and BIOS before we get to our performance testing.

Yes, I’m sure the buy/sell orders get sent out MUCH quicker than with some loser 4 GHz processor when both are on common 1 GbE networks….

(This is a moronic concept, IMO; catering to stupid traders thinking their orders ‘get thru quicker’? LOL!)

It’s for environments where latency matters, and the winner (i.e. the fastest one) takes all. And as you say, if 1 GbE is “common”, then there are certainly other factors that could be differentiators. That 25% clock advantage could shave 20% off decision time, or let you run that much more involved/complex algorithms in the same timeframe as your competitors.
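The 25%/20% figures above follow from the fact that clock rate is cycles per second: the time to execute a fixed number of cycles scales as 1/f, so moving from 4 GHz to 5 GHz cuts that time by 1 − 4/5 = 20%, even though the clock is 25% higher. A minimal Python sketch, assuming an idealized, purely CPU-bound workload whose cycle count does not change with frequency (real trading code also waits on memory and the network); the 10,000-cycle decision loop is a hypothetical figure chosen for illustration.

```python
def time_reduction(f_old_ghz: float, f_new_ghz: float) -> float:
    """Fraction of run time saved for a fixed cycle count.

    Idealized assumption: the work is purely CPU-bound and needs the same
    number of cycles at either frequency (no memory or network stalls).
    """
    return 1.0 - f_old_ghz / f_new_ghz


def runtime_ns(cycles: int, freq_ghz: float) -> float:
    """At f GHz, one cycle lasts 1/f ns, so run time is cycles / f."""
    return cycles / freq_ghz


clock_gain = 5.0 / 4.0 - 1.0      # 0.25, i.e. a 25% higher clock
saved = time_reduction(4.0, 5.0)  # about 0.20, i.e. 20% less time

# Hypothetical 10,000-cycle decision loop, for illustration only.
print(runtime_ns(10_000, 4.0))  # 2500.0 ns at 4 GHz
print(runtime_ns(10_000, 5.0))  # 2000.0 ns at 5 GHz
```

The asymmetry (25% more clock, 20% less time) is simply the reciprocal relationship between frequency and cycle time.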

Calling people whose reality you don’t understand stupid reveals quite a bit about yourself.

Does the CPU water cooler blow hot air over the motherboard towards the back of the case?

Mark, this is a very healthy industry that pushes computing. There are firms happy to spend on exotic solutions because they can pay for themselves in a day.

@Marl Dotson – for use in a latency-sensitive situation, you would install a fast InfiniBand card in one of the PCIe expansion slots. You certainly would not use the built-in Ethernet.

That cooler looks to be very similar to Dynatron’s, probably an Asetek unit.

https://www.dynatron.co/product-page/l8

@David, I’m not familiar with the specific properties of the radiator used here; however, the temperature of the liquid in the closed-loop system should not exceed a +4-8°C delta T.

@Dan. Wow. Okay. At least one 10Gb card would be the basic standard here: 1Gb LAN is a bottleneck, as @hoohoo pointed out. Kettle or pot?

Follow-up: is this an exciting product? 5GHz compute on 8 cores is great, but there are several other bottlenecks in the hardware in addition to the NIC.

Hi,

I would like to make a recommendation concerning the CPU charts for the future.

It would be helpful if you could add the core and thread counts next to each CPU name, for example EPYC 7302P (C16/T32). That would make the differences easier to see, since it is a little hard to keep track of each model’s specs in our heads.

Thank you

A couple of comments here assume that the onboard 1GbE is the main networking. You’ve missed the Solarflare NIC. As shown, it’s a dual-SFP NIC in a low-profile PCIe x8 format. That makes it at least a 10GbE card, according to their current portfolio. It may even be a 10/25GbE card: https://solarflare.com/wp-content/uploads/2019/09/SF-119854-CD-9-XtremeScale-X2522-Product-Brief.pdf

For those commenting on the network interface: this is the entire point of the SolarFlare network adapter. This card will leverage RDMA to ensure the lowest latency across the fabric. The Intel adapters will either go unused or be there for boot/imaging purposes, thanks to their native driver support, which avoids the need to inject SolarFlare drivers into the image.

It is unlikely you would see a trading system use anything that doesn’t support RDMA (be it RoCE or some ‘custom’ implementation). You need the low latency such solutions provide; otherwise, all the local gains from a high-clock CPU, fast RAM, etc. are lost across the fabric.

For some perspective here, I think HedRat, Alex, and others are spot on. You would expect a specialized NIC to be in a system like this and not an Intel X710 or similar.

I actually like the dual 1GbE a lot: one port as an OS NIC to move all of the non-essential services off the primary application adapter, and one for a potential provisioning network. That is just an example, but I think this system has the NIC configuration I would expect.

There is a specialized NIC and even room for another card, be it an accelerator or a different, custom NIC. If you have to hook up your system to a broker or an exchange, you’ll have to use standard protocols. The onboard Ethernet may still be useful for management or Internet access.

Does the storage support NVMe SSDs?