The AMD EPYC 7642 has 48 cores and 96 threads per socket. The Intel Xeon Scalable LGA3647 lineup on the market during the second half of 2019 and much of 2020 tops out at 28 cores. For general-purpose workloads that do not take advantage of some of the bleeding-edge Xeon features, AMD is matching and sometimes beating Intel’s per-core performance. With 48 cores from AMD against at most 28 from Intel, that should give you some sense of how this review is going to go.

Key stats for the AMD EPYC 7642: 48 cores / 96 threads with a 2.3GHz base clock and 3.3GHz turbo boost. There is 256MB of onboard L3 cache. The CPU has a 225W TDP, and cTDP can be configured up to 240W. List price is $4475.

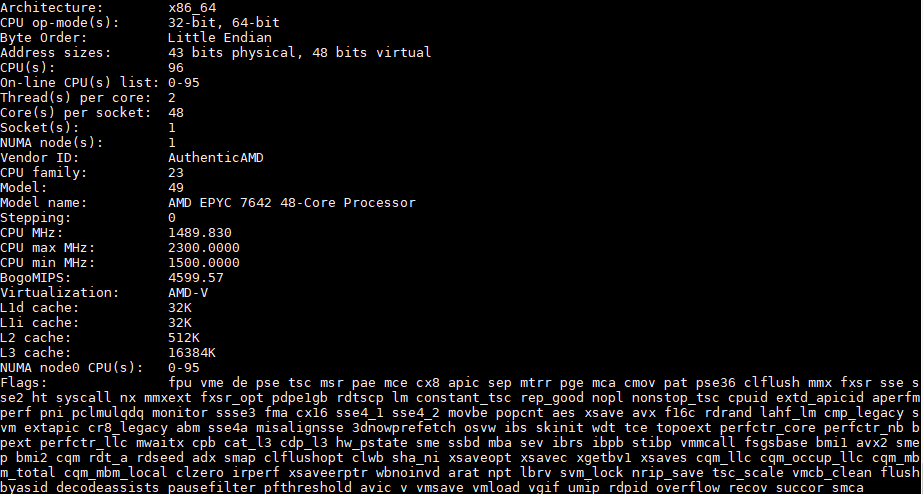

Here is what the lscpu output looks like for an AMD EPYC 7642:

256MB of L3 cache is an enormous number in the current x86 ecosystem. The 28-core Intel Xeon Platinum 8280 has 66.5MB of L2 plus L3 cache, while L2 plus L3 on the AMD EPYC 7642 totals 280MB. That works out to about 5.83MB of L2 + L3 cache per core on the AMD EPYC 7642 versus 2.375MB per core on the Intel Xeon Platinum 8280. Having more than four times the total cache, and roughly 2.5 times as much per core, is a big deal.
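The cache arithmetic above can be checked with a short sketch. It assumes the published per-core L2 sizes (512KB per Zen 2 core on the EPYC 7642 and 1MB per core on the Xeon Platinum 8280), which the text does not state; the Xeon's 38.5MB L3 is simply the stated 66.5MB total minus its 28MB of L2. The helper name `cache_per_core` is illustrative, not from any tool.

```python
# Cache-per-core arithmetic for the two CPUs compared above.
# Assumption: per-core L2 is 0.5MB (EPYC 7642) and 1.0MB (Xeon Platinum 8280).

def cache_per_core(cores, l2_per_core_mb, l3_total_mb):
    """Return (total L2+L3 in MB, L2+L3 MB per core)."""
    total = cores * l2_per_core_mb + l3_total_mb
    return total, total / cores

# EPYC 7642: 48 cores, 256MB L3 (stated in the text)
epyc_total, epyc_per_core = cache_per_core(48, 0.5, 256)
# Xeon Platinum 8280: 28 cores, 38.5MB L3 (66.5MB total minus 28MB of L2)
xeon_total, xeon_per_core = cache_per_core(28, 1.0, 38.5)

print(f"EPYC 7642: {epyc_total:.1f}MB total, {epyc_per_core:.3f}MB/core")
print(f"Xeon 8280: {xeon_total:.1f}MB total, {xeon_per_core:.3f}MB/core")
print(f"Total ratio: {epyc_total / xeon_total:.2f}x")
print(f"Per-core ratio: {epyc_per_core / xeon_per_core:.2f}x")
```

Run as written, it shows the total-cache gap landing just over 4x while the per-core gap is closer to 2.5x.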

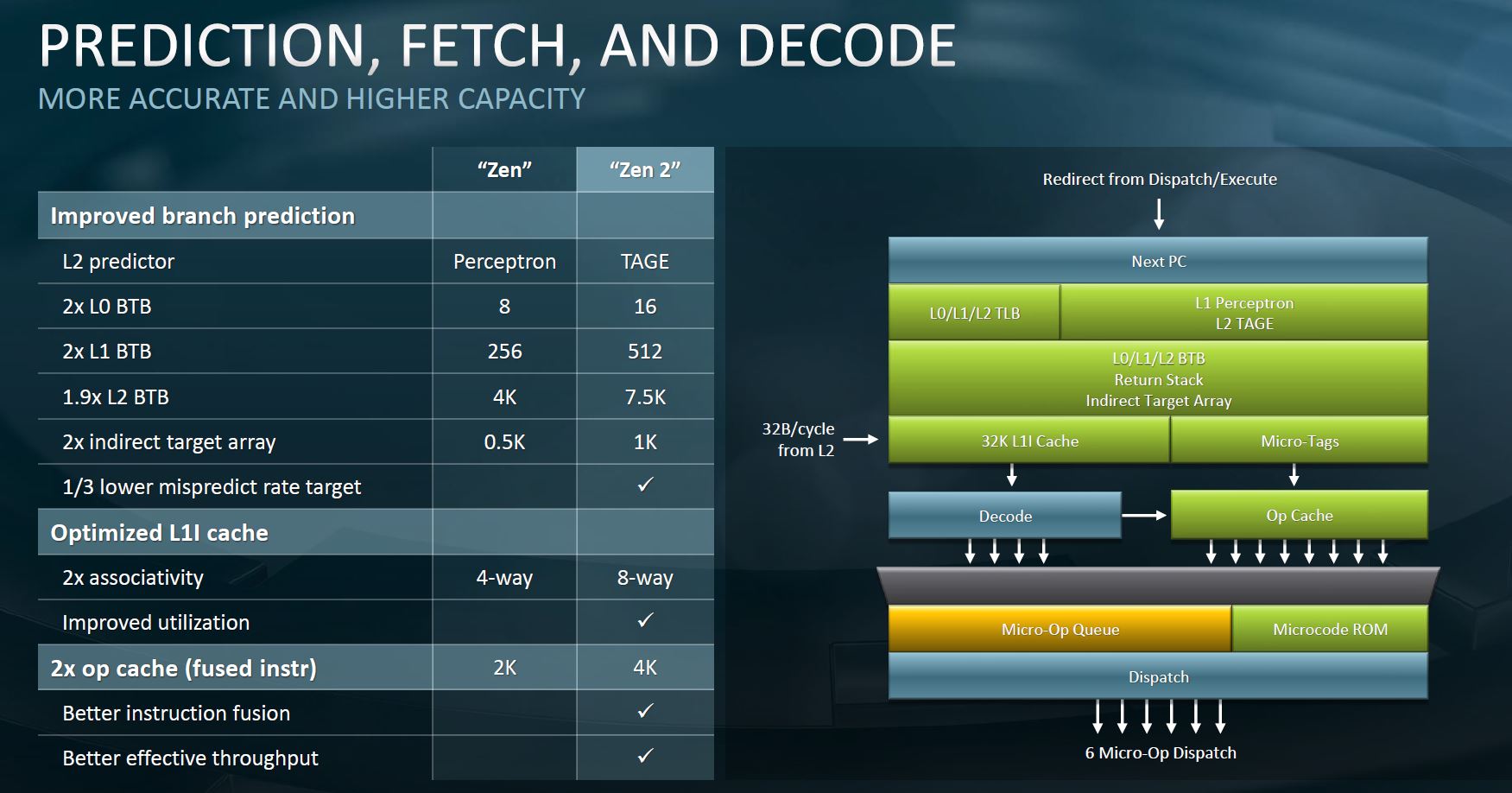

Aside from the enormous caches, AMD made improvements to how it fills caches with the new generation, as we covered in our AMD EPYC 7002 Series Rome Delivers a Knockout piece.

The large and more efficient caches are a big part of what makes AMD’s CPUs perform so well. Even though Intel generally has a clock speed advantage, AMD is able to hold turbo clocks under load and keep its cores fed through its enormous caches. If you look at state-of-the-art AI accelerator designs, one of the key principles companies are gravitating toward is keeping as much data as possible cached next to the execution engines. Moving data is very expensive from both a power and a performance perspective, so keeping calculations operating on data in local caches is a common design principle in the AI accelerator market. AMD is effectively following that trend and reaping the benefits.
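One way to see why keeping data in cache pays off is the textbook average memory access time (AMAT) model, where each level's effective latency is its hit time plus its miss rate times the effective latency of the next level out. The sketch below is illustrative only: the function `amat` is hypothetical, and every latency and miss rate in it is a made-up placeholder rather than a measurement of any EPYC or Xeon part.

```python
# Average memory access time (AMAT) model for a multi-level cache.
# ALL numbers below are HYPOTHETICAL placeholders, not measured values.

def amat(levels, memory_latency_ns):
    """levels: list of (hit_time_ns, local_miss_rate), ordered L1 outward.

    Applies AMAT = hit_time + miss_rate * (AMAT of next level),
    starting from main memory and working back toward L1.
    """
    time = memory_latency_ns
    for hit_time, miss_rate in reversed(levels):
        time = hit_time + miss_rate * time
    return time

# Same hypothetical L1/L2; the "larger L3" case assumes a lower L3 miss rate.
small_l3 = [(1.0, 0.10), (4.0, 0.40), (15.0, 0.50)]
large_l3 = [(1.0, 0.10), (4.0, 0.40), (15.0, 0.25)]

print(f"smaller L3: {amat(small_l3, 100.0):.2f} ns")
print(f"larger L3:  {amat(large_l3, 100.0):.2f} ns")
```

With these placeholder numbers, halving the L3 miss rate cuts the average access time from 4.0ns to 3.0ns, even though nothing else changed.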

A Word on Power Consumption

We tested these in a number of configurations. The lowest-spec configuration we used was a Supermicro AS-1014S-WTRT with two 1.2TB Intel DC S3710 SSDs and 8x 32GB DDR4-3200 RAM. Power consumption could go a bit lower still, since this system used an onboard Broadcom BCM57416-based 10GBase-T connection, but there were no add-in cards.

Even so, here are a few data points for the AMD EPYC 7642 in this configuration, with the sliders pushed all the way to performance mode and cTDP set to 225W:

- Idle Power (Performance Mode): 115W

- STH 70% Load: 278W

- STH 100% Load: 304W

- Maximum Observed Power (Performance Mode): 322W

As a 1U server, this system does not have the most efficient cooling; still, these are excellent power figures. The impact is simple: if one can consolidate smaller nodes onto an AMD EPYC 7642 system, there are power efficiency gains to be had as well.
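To put the measured figures in per-core terms, the sketch below divides each system-level reading by the 48 cores and 96 threads of the single EPYC 7642 in this 1U test system. These are whole-system numbers (SSDs, RAM, and NIC included), so they overstate the CPU's own share. The helper `consolidation_savings_w` is hypothetical, and its example inputs (three older 16-core nodes drawing 200W each, assumed to match the EPYC's per-core performance) are invented for illustration; they are not results from this review.

```python
# Per-core and per-thread view of the measured system power figures above.
CORES, THREADS = 48, 96
measured_w = {"idle": 115, "sth_70": 278, "sth_100": 304, "max": 322}

for label, watts in measured_w.items():
    print(f"{label:>8}: {watts}W system, "
          f"{watts / CORES:.2f}W/core, {watts / THREADS:.2f}W/thread")

def consolidation_savings_w(old_nodes, old_node_w, new_node_w):
    """Watts saved by replacing old_nodes servers with one new server.

    old_node_w is a HYPOTHETICAL input; it was not measured in this review.
    """
    return old_nodes * old_node_w - new_node_w

# Hypothetical: three 16-core nodes at 200W each under load, replaced by
# one EPYC 7642 node at the measured 304W STH 100% load figure.
print(consolidation_savings_w(3, 200, measured_w["sth_100"]))
```

With those hypothetical inputs, consolidating onto the one EPYC 7642 node saves 296W; real savings depend entirely on what the older nodes actually draw and how their performance compares.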

Next, let us look at our performance benchmarks before getting to market positioning and our final words.

“Instead, with Intel’s 10nm delays this AMD EPYC 7642 is essentially like later next year’s enormous performance jump halo Xeon, except it is available today from AMD and at a lower price.”

I think that’s the first Intel 10nm dig I’ve read on STH. That paragraph was great.

When is it better than the 7702P if used in 1-CPU servers?

Brian, you must have missed the glued-together cores article. I find Patrick is very fair with his digs on all sides. lol

I almost feel like AMD opened a wormhole with these EPYCs and processors have leaped a decade into the future. It’s very overwhelming, to the point of almost feeling unreal.

But I am still with Intel, unfortunately. I have more choices of dual-socket motherboards, can easily get a pair of cheap 8280L or 8276L QS (nah, no ES thx) CPUs to build my “poorman” home lab servers, and later, when those parts see big price drops, I can replace those CPUs with the proper retail versions. So EPYC looks good, but the price and current choice limitations also look “Epic” to me. BTW I don’t need PCIe 4.0 for now – I am still trying hard to saturate all 96 of my available PCIe 3.0 lanes per server. That’s about 80GBytes/s of data bidirectional in real life I think? And I have 2 servers… Well, when I say “cheap”, each CPU is actually cheaper than the motherboard… you get the idea…