Our review of the Inspur Systems NF5280M5 has special significance. This is the system that we have been using in our dual-socket 2nd generation Intel Xeon Scalable reviews. You can see examples in our Intel Xeon Gold 5220 Benchmarks and Review and Intel Xeon Gold 6230 Benchmarks and Review, among others. This dual-socket 2U LGA3647 platform is designed to be highly configurable within its form factor.

Inspur Systems NF5280M5 Overview

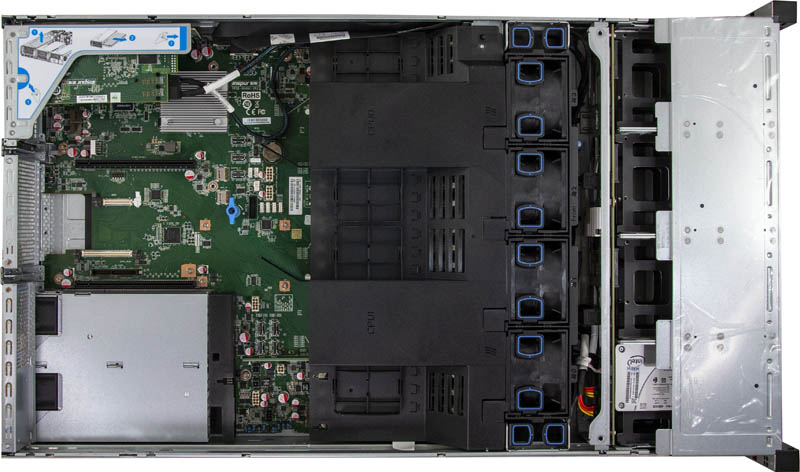

As you can see from the cover image, our test configuration utilized a 12x 3.5″ front panel hot-swap storage array. Inspur also offers 24x 2.5″ bay options for SATA, SAS, and NVMe storage needs. One of the overall themes of the Inspur NF5280M5 is the flexibility to outfit the unit with various configurations to meet specific needs, and the front storage configuration is one example.

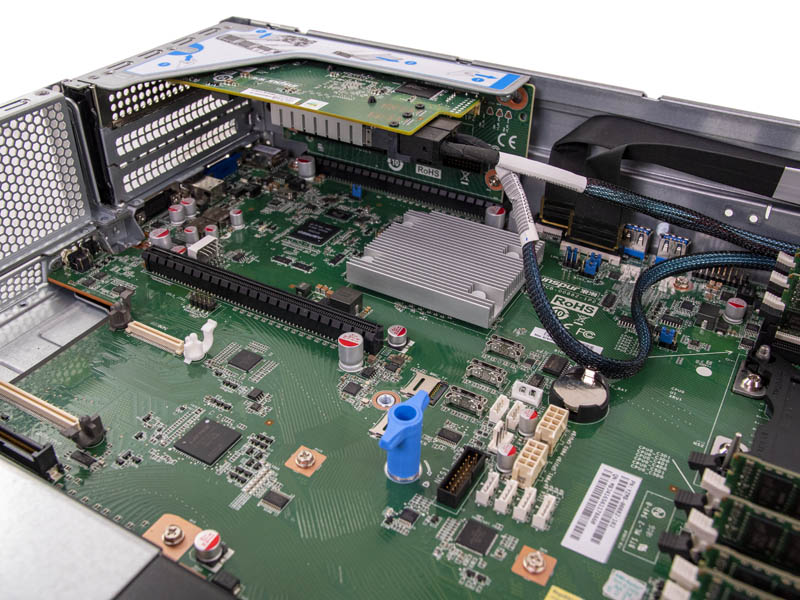

Inside the Inspur Systems NF5280M5 is a fairly standard layout for 2U servers. Storage is at the front of the chassis, followed by a fan partition in the middle, then the CPUs, and finally I/O and power supplies at the rear.

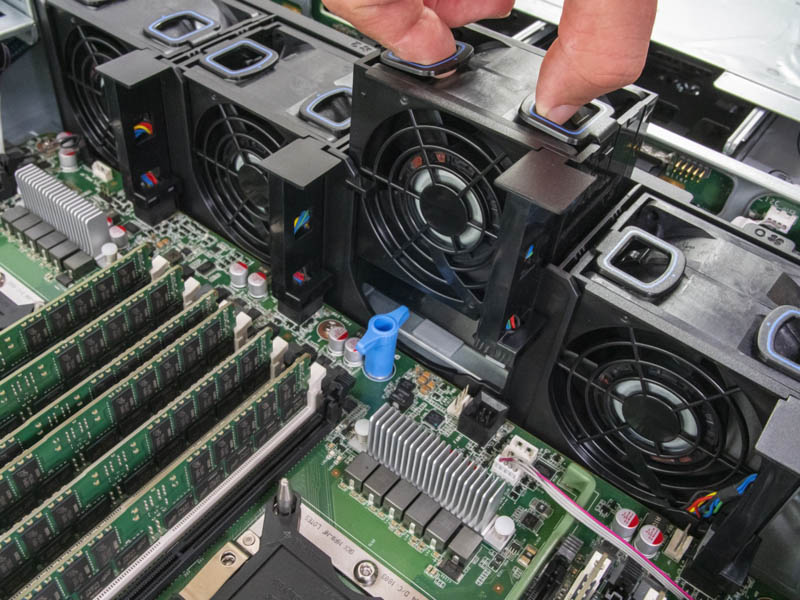

Inspur uses four large 80mm fans in the NF5280M5. These are tool-less hot-swap units that can be replaced quickly.

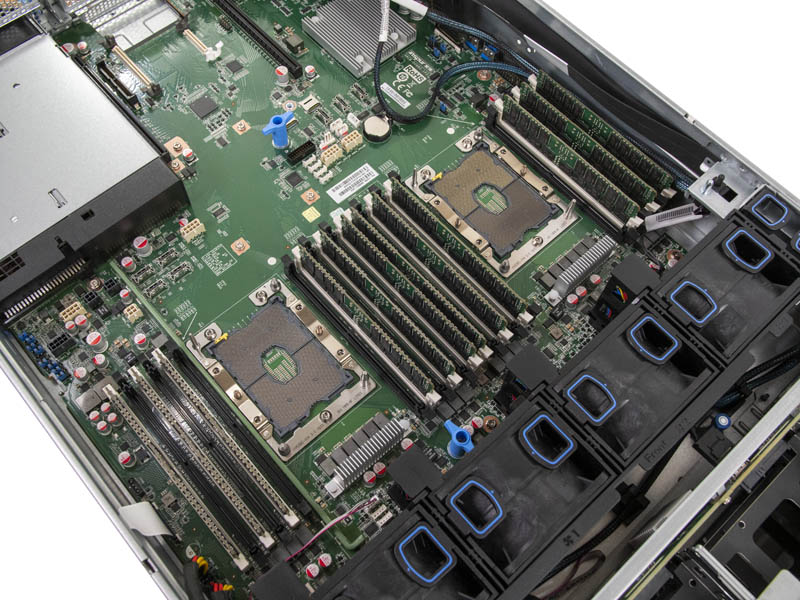

Inspur uses a hard air baffle with features like DIMM slot labeling to help ensure proper installation and operation. This is a higher-end and more effective solution than the soft or absent air baffles we see from some other vendors. Underneath this air baffle are two Intel Xeon Scalable sockets, each flanked by twelve DDR4 DIMM slots (twenty-four total). These DIMM slots can be filled with either traditional DDR4 memory or a mix of DDR4 memory and Intel Optane DCPMM, which allows the Inspur NF5280M5 to handle over 6TB of memory.
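As a rough illustration of how a mixed configuration gets past 6TB, here is the arithmetic assuming 512GB Optane DCPMM modules in half of the 24 slots and 128GB DDR4 RDIMMs in the other half. These module sizes are our own illustrative assumption, not the tested configuration, and the supported capacity also depends on the CPU SKU:

$$
\underbrace{12 \times 512\,\text{GB}}_{\text{Optane DCPMM}} + \underbrace{12 \times 128\,\text{GB}}_{\text{DDR4}} = 6144\,\text{GB} + 1536\,\text{GB} = 7680\,\text{GB} = 7.5\,\text{TB}
$$

That works out to 3.75TB per socket, which on 2nd generation Intel Xeon Scalable requires the higher memory capacity "L" SKUs.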

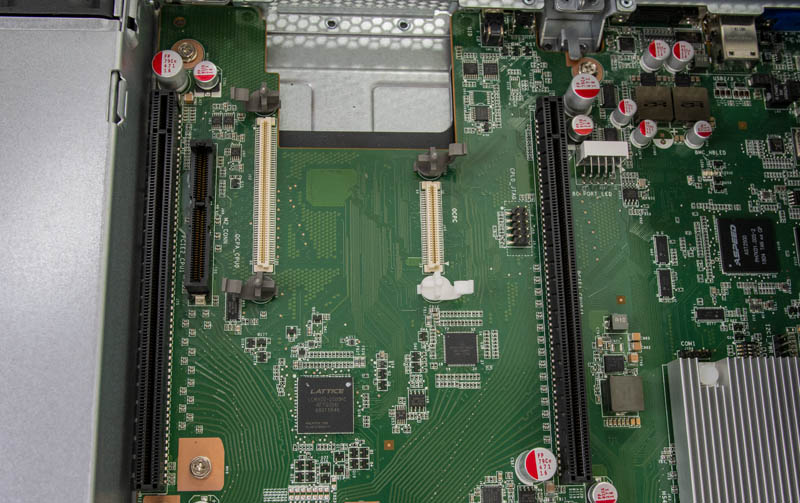

Looking at the rear I/O section, there is an OCP 2.0 slot. We like the fact that Inspur is using an open standard NIC form factor. That is a more flexible option than many proprietary LOM designs. The small black connector to the left of the OCP 2.0 connector in this picture is for a dual M.2 drive riser.

You can see two PCIe riser slots flanking the OCP NIC slot. Our test system did not have risers installed in these locations. The Inspur NF5280M5 can utilize risers with different slot configurations to support up to four GPUs, or different storage/accelerator combinations.

Our Inspur NF5280M5 had a single PCIe riser populated, carrying a Broadcom SAS controller, in the third of these three riser locations.

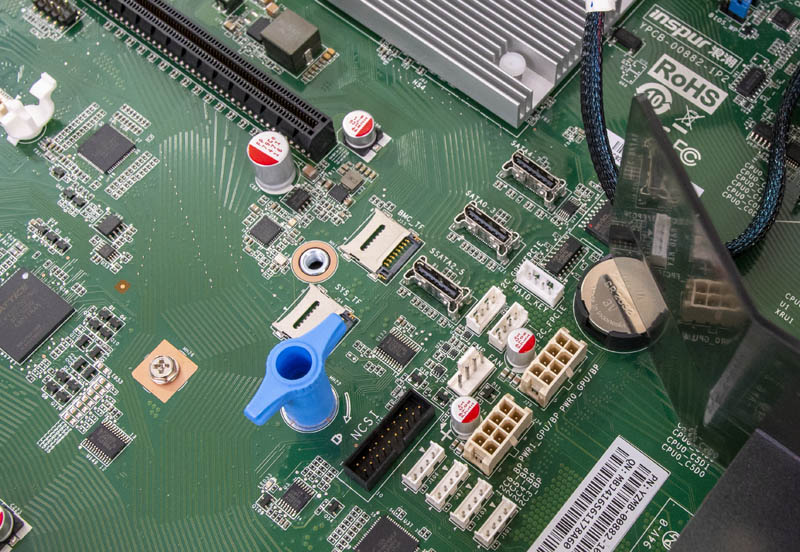

The motherboard has features such as dual USB 3.0 Type-A headers, along with additional connectors such as high-density OCuLink SATA connectors and microSD slots.

One can also see headers for GPU power. Our system did not have risers for GPUs, but if it did, these are the types of power connectors that provide auxiliary power to up to four NVIDIA Tesla V100 GPUs. Inspur has other acceleration options, such as NVIDIA Tesla T4s and FPGAs for inference acceleration.

The rear of the chassis has customizable blocks for PCIe expansion and/or rear storage (2.5″ or 3.5″) options. The base-level functionality consists of the management port and legacy I/O blocks, with an OCP NIC for networking. As one would expect, there are also dual power supplies that can be configured to meet specific needs.

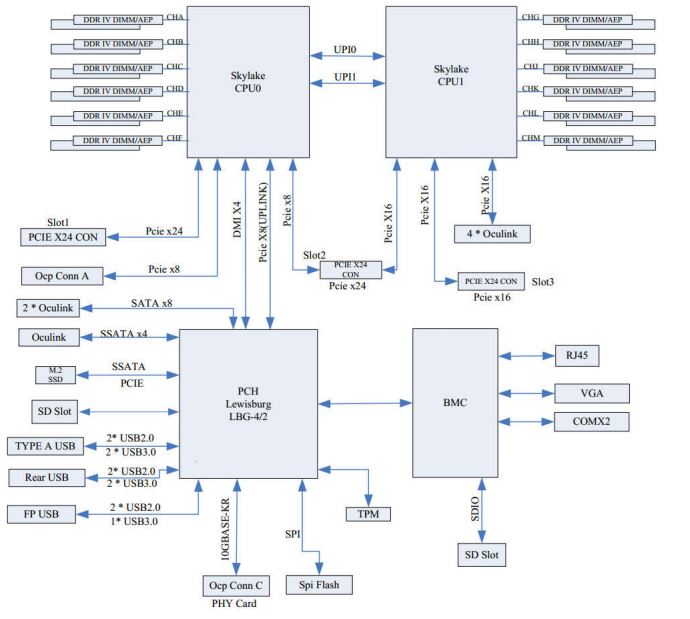

The block diagram shows the basics of how the system is architected. As one can see, the motherboard connects the two CPUs with two UPI links.

Next, we are going to take a look at the Inspur Systems NF5280M5 management.
