Continuing our Deep Learning and AI Q3 2019 Interview Series, Scott Tease from Lenovo is running through our gauntlet of questions. Scott is the Executive Director, HPC & AI, Lenovo Data Center Group. The last time I saw Scott, he was taking me on a virtual reality tour of the Barcelona Supercomputing Center. Lenovo is a large HPC and AI vendor selling to companies ranging from startups to research organizations and to some of the largest organizations using AI today. We wanted to get his insights into the market and where we are headed.

Lenovo Discusses Deep Learning and AI Q3 2019

In this series, we sent major server vendors several questions and gave them carte blanche to interpret and answer them as they saw fit. Our goal is simple: provide our readers with unique perspectives from the industry. Each person in this series is shaped by their background, company, customer interactions, and unique experiences. The value of the series lies both in the individual answers and in what they collectively say about how the industry views its future.

Training

Who are the hot companies for training applications that you are seeing in the market? What challenges are they facing to take over NVIDIA’s dominance in the space?

There are a number of new startups emerging in the space including Graphcore, Habana, Cambricon and many more. The challenge they will have to overcome is building the ecosystem around the chip itself. NVIDIA has spent more than a decade developing the software ecosystem and community around accelerated computing. They have also built long-term relationships with OEMs and software vendors and it is going to be an uphill battle for any startup attempting to disrupt this space.

How are form factors going to change for training clusters? Are you looking at OCP’s OAM form factor for future designs or something different?

We believe the mass market will stay on standard PCIe form factors for the foreseeable future due to their openness and interoperability. Proprietary form factors and interconnects like NVLink/SXM, and new open standards like OAM (whose specification Lenovo is contributing to), will see growth in high-end AI training to provide high-speed throughput of the data to the accelerators.

What kind of storage back-ends are you seeing as popular for your deep learning training customers? What are some of the lessons learned from your customers that STH readers can take advantage of?

All-Flash/NVMe, file system-based storage subsystems are growing in importance as AI is incredibly data-hungry. The challenge we are seeing is keeping the accelerators fed for deep learning workloads. It is becoming increasingly difficult to host sufficiently fast storage close enough to the accelerators. From this challenge, we will see innovative solutions such as NVMe over Fabrics or other advances in the I/O space. It is important to keep a system-level perspective on the hardware to get the maximum ROI.
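To make the "keeping the accelerators fed" point concrete, here is a minimal back-of-the-envelope sketch. It is not from Scott or Lenovo. The function name and every input figure (accelerator count, samples per second, sample size) are hypothetical placeholders chosen only for illustration; substitute measurements from your own training jobs.

```python
def required_read_gbytes_per_s(num_accelerators: int,
                               samples_per_s_per_accelerator: float,
                               bytes_per_sample: float) -> float:
    """Aggregate read rate (gigabytes per second) storage must sustain
    so that no accelerator waits on input data."""
    bytes_per_s = (num_accelerators
                   * samples_per_s_per_accelerator
                   * bytes_per_sample)
    return bytes_per_s / 1e9


if __name__ == "__main__":
    # Hypothetical example: 8 accelerators, each consuming 1,000 samples/s,
    # with an average sample size of 150 KB. None of these are measured values.
    demand = required_read_gbytes_per_s(8, 1_000, 150_000)
    print(f"Storage must sustain about {demand:.1f} GB/s of reads")
```

The estimate scales linearly with accelerator count, which is why a storage tier that is adequate for a single node can become the bottleneck as a cluster grows.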

What storage and networking solutions are you seeing as the predominant trends for your AI customers? What will the next generation AI storage and networking infrastructure look like?

For enterprise customers that have a big data environment, we’re typically seeing new solutions embedded into their existing infrastructure. Our advice to customers is to start with the assets/infrastructure that you already have. In many cases, customers can start with common networking that they already have in their environment, from 10/25Gb Ethernet to high-speed fabrics including InfiniBand, 100Gb Ethernet and OPA. We have recently announced a liquid-cooled 200Gb/s HDR adapter with Mellanox for the high-end HPC clusters.

Over the next 2-3 years, what are trends in power delivery and cooling that your customers demand?

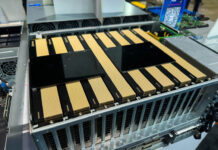

With the increasing TDP for CPUs and accelerators, we believe the future will include liquid cooling or some hybrid liquid/air-cooled systems. This trend has already taken over in large HPC clusters and we are looking at ways to enable liquid cooling for the full spectrum of HPC & AI customers. We recently launched our Lenovo Neptune™ portfolio which aims to bring the advantage of liquid cooling to customers of all sizes across multiple industries.

What should STH readers keep in mind as they plan their 2019 AI clusters?

The world is changing quickly, and we recommend buying IT infrastructure that can support multiple business initiatives such as AI, HPC, VDI and Big Data to increase utilization and, ultimately, ROI on your IT investments. We recommend keeping your AI training close to where your data resides. It can be costly and time consuming to move large amounts of data in and out of different environments.
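As a rough illustration of why data movement is time consuming, the sketch below estimates the wire time for a bulk copy at the link speeds mentioned earlier in this interview. It is an editorial sketch, not Lenovo's guidance. The 100 TB dataset size, the function name, and the `efficiency` parameter are assumptions for illustration, and real transfers will be slower because of protocol overhead and storage limits.

```python
def transfer_hours(dataset_terabytes: float,
                   link_gbit_per_s: float,
                   efficiency: float = 1.0) -> float:
    """Hours needed to move a dataset over a network link.

    dataset_terabytes: dataset size in decimal terabytes (1 TB = 1e12 bytes).
    link_gbit_per_s:   nominal link speed in gigabits per second.
    efficiency:        fraction of nominal speed actually achieved (assumed).
    """
    total_bits = dataset_terabytes * 1e12 * 8
    usable_bits_per_s = link_gbit_per_s * 1e9 * efficiency
    return total_bits / usable_bits_per_s / 3600


if __name__ == "__main__":
    dataset_tb = 100  # hypothetical dataset size
    for link in (10, 25, 100, 200):
        hours = transfer_hours(dataset_tb, link)
        print(f"{link:>3} Gb/s: {hours:5.1f} hours at ideal line rate")
```

Even at ideal line rate, the hypothetical 100 TB copy occupies a 10Gb link for most of a day, which is the practical argument for training where the data already lives.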

Inferencing

Are you seeing a lot of demand for new inferencing solutions based on chips like the NVIDIA Tesla T4?

We have already seen adoption for acceleration at the edge and in the data center leveraging the NVIDIA Tesla T4 chip. As we see more organizations roll out inferencing solutions, especially in edge applications, it could drive demand for other types of accelerators including FPGAs and custom ASICs, once customers begin optimizing for price, performance and power consumption. CPUs will also continue to play a major role in AI inferencing workloads.

Are your customers demanding more FPGAs in their infrastructures?

We have seen interest in FPGAs in niche markets and one-off Proofs of Concept (PoCs), but not yet for mass adoption. We could see this trend quickly changing as customers move from PoCs to actual implementations.

Who are the big accelerator companies that you are working with in the AI inferencing space?

Today, NVIDIA is dominating the conversation around AI and our GPU platforms have been well received for AI training workloads. For inference workloads, it is definitely a mix of Intel Xeon CPUs and NVIDIA T4s. As we have seen with Google’s release of TPUs in Hyperscale and Tesla’s HW3 announcement, GPUs may not be optimal for all workloads. We may see purpose-built AI chips emerge for specific workloads. To see adoption in the mass market, accelerators will need to be supported by the open-source community and software ecosystem. This is where incumbents like Intel and NVIDIA will maintain an advantage.

Are there certain form factors that you are focusing on to enable in your server portfolio? For example, Facebook is leaning heavily on M.2 for inferencing designs.

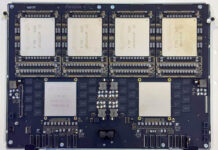

Lenovo’s hyperscale business works with many of the large hyperscale providers globally, building unique boards for their specific requirements including things like M.2 & OAM designs. We also expect similar custom-built designs coming out of our OEM team for edge/IoT applications. There are unique form factors for inferencing chips and we may see the market fragment a bit in hyperscale and edge, but for the mass market and data center applications, we expect PCIe to be dominant for quite some time, especially with PCIe 4.0 on the horizon, offering higher throughput.

What percentage of your customers today are looking to deploy inferencing in their server clusters? Are they doing so with dedicated hardware or are they looking at technologies like 2nd Generation Intel Xeon Scalable VNNI as “good enough” solutions?

The majority of our customers are still in the Proof of Concept stage today with a handful rolling out large implementations. The choice of architecture depends on the unique customer needs and those conversations are typically around Intel Xeon or NVIDIA T4 for inferencing applications. We have also had some interest in Intel Movidius chips for edge inferencing applications. The compute power needed for inferencing workloads will grow as data sets such as 4K video get larger and AI models become more complex.

What should STH readers keep in mind as they plan their 2019 server purchases when it comes to AI inferencing?

Similar to AI training, data locality will be key, driving more compute to the edge for IoT/edge workloads. For large rollouts, price, performance and density will be important factors.

Application

How are you using AI and ML to make your servers and storage solutions better?

We are working on several internal use cases at Lenovo, from improving customer service through virtual assistants and improving quality control using computer vision to logistics and scheduling optimization.

Where and when do you expect an IT admin will see AI-based automation take over a task that is now so big that they will have a “wow” moment?

I think the “wow” moment will come when we see AI being used to accomplish tasks that were not possible before, for instance, performing quality inspections with computer vision systems on every product on an assembly line or predicting system failures before they happen. These will be lightbulb moments for most people. Lenovo’s AI Innovation Centers serve this purpose and demonstrate to clients how AI can be leveraged to improve their business processes.

Final Words

Thanks again to Scott for taking the time to answer our questions. I know you are constantly traveling to Lenovo’s customers and suppliers all over the world, so taking the time to share your perspective with our readers is greatly appreciated.

For our readers, Lenovo has a long heritage in the HPC and AI spaces. For me, one of the most interesting parts was Scott’s discussion of OAM and inferencing, where he said to “expect similar custom-built designs coming out of our OEM team for edge/IoT applications.” The edge is the new AI battleground, and this shows how much innovation is happening there even at some of the most basic levels.