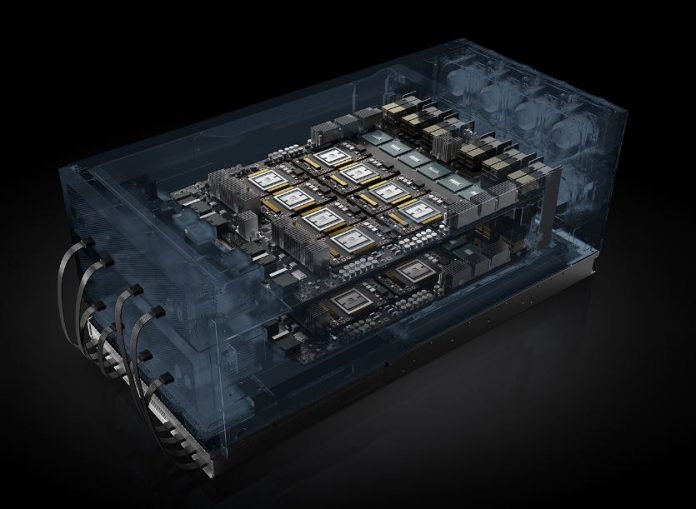

At GTC in Taiwan this week, NVIDIA launched the HGX-2, a cloud-focused update to its HGX-1 architecture. The HGX-2 is aimed at giving cloud infrastructure players a path to 16-GPU systems. It is not entirely new, as the architecture was first seen in the NVIDIA DGX-2 launched earlier in 2018. You can read more about the DGX-2 in our piece: NVIDIA DGX-2 Pushing Limits at 16 GPUs and 512GB of HBM2 RAM.

NVIDIA HGX-2 Architecture

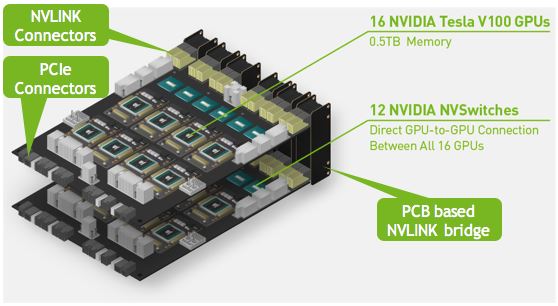

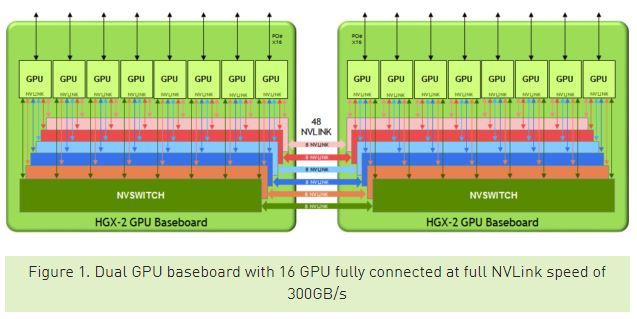

The base architecture calls for up to 16 NVIDIA Tesla V100 GPUs connected in two sets of eight. The two sets are connected to each other through 12 NVIDIA NVSwitches, which allow the full 300GB/s of bandwidth between any two GPUs. GPU-to-GPU bandwidth and latency are important for current deep learning model training tools.
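To put those figures in perspective, here is a quick back-of-the-envelope calculation. The GPU count, the 300GB/s per-GPU figure, and the 512GB HBM2 total come from the text above. The cross-baseboard estimate is our own arithmetic and assumes that 300GB/s is a bidirectional total (150GB/s each way); treat it as a sketch, not a quoted specification.

```python
# Back-of-the-envelope figures for a fully populated HGX-2.
NUM_GPUS = 16               # from the article
GPUS_PER_BASEBOARD = 8      # from the article
PER_GPU_NVLINK_GBS = 300    # GB/s per GPU, from the article
TOTAL_HBM2_GB = 512         # from the DGX-2 headline figure

# Assumption: the 300GB/s figure is bidirectional, so half of it each way.
PER_GPU_ONE_WAY_GBS = PER_GPU_NVLINK_GBS / 2


def summarize():
    """Return derived memory and bandwidth figures as a dict."""
    # Traffic crossing between the two baseboards when all eight GPUs
    # on one side talk to GPUs on the other side at full rate.
    cross_one_way = GPUS_PER_BASEBOARD * PER_GPU_ONE_WAY_GBS
    return {
        "hbm2_per_gpu_gb": TOTAL_HBM2_GB / NUM_GPUS,
        "aggregate_gpu_bandwidth_gbs": NUM_GPUS * PER_GPU_NVLINK_GBS,
        "cross_baseboard_one_way_gbs": cross_one_way,
        "cross_baseboard_bidirectional_gbs": 2 * cross_one_way,
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value}")
```

The point of the exercise is that the switch fabric has to carry terabytes per second between the two baseboards if every GPU is to see its full bandwidth to every other GPU.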

Each set of eight GPUs is connected to a baseboard with six NVSwitches onboard. These NVSwitches provide GPU-to-GPU bandwidth on the same baseboard and also provide connectivity to the other baseboard. From what we are told, the NVSwitches use a lot of power, which makes sense given the bandwidth they are pushing.
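The two-baseboard layout can be modeled as a small graph. The sketch below is illustrative only: the counts (two baseboards, eight GPUs and six NVSwitches each) come from the text above, but the wiring is our assumption. We assume every GPU has one link to each switch on its own baseboard, and that each switch pairs with one counterpart on the other baseboard. Node names such as `("gpu", 0, 3)` are hypothetical labels.

```python
from collections import deque

BOARDS = 2
GPUS_PER_BOARD = 8
SWITCHES_PER_BOARD = 6


def build_topology():
    """Return an adjacency dict for the assumed NVLink wiring."""
    adj = {}

    def link(a, b):
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)

    for board in range(BOARDS):
        for gpu in range(GPUS_PER_BOARD):
            for sw in range(SWITCHES_PER_BOARD):
                # Assumption: each GPU links to every switch on its board.
                link(("gpu", board, gpu), ("sw", board, sw))
    for sw in range(SWITCHES_PER_BOARD):
        # Assumption: switch N on board 0 pairs with switch N on board 1.
        link(("sw", 0, sw), ("sw", 1, sw))
    return adj


def hops(adj, src, dst):
    """Breadth-first search: number of links on the shortest path."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in adj[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None  # unreachable


if __name__ == "__main__":
    adj = build_topology()
    print("same board:", hops(adj, ("gpu", 0, 0), ("gpu", 0, 7)))
    print("cross board:", hops(adj, ("gpu", 0, 0), ("gpu", 1, 7)))
```

Under these assumptions, GPUs on the same baseboard are two links apart (GPU, switch, GPU), and GPUs on different baseboards are three links apart, since the path crosses one switch on each board.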

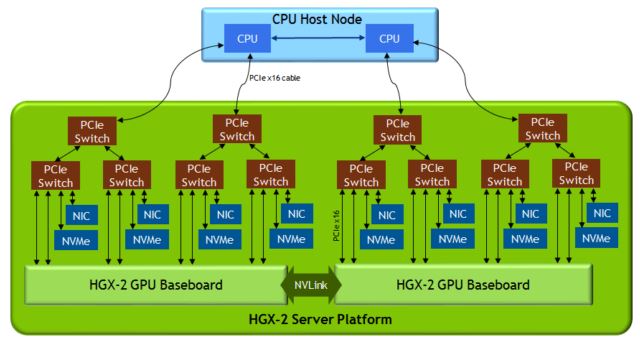

Each baseboard is also connected via a set of PCIe switches. NVIDIA uses these PCIe switches to provide a 100Gbps EDR InfiniBand/Ethernet Mellanox NIC to each pair of GPUs, along with NVMe storage. This architecture means that GPUs do not have to go all the way back to the CPUs to send data and access (some) storage.
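As a rough illustration of what one NIC per pair of GPUs implies, the sketch below counts the NICs in a 16-GPU system and totals their bandwidth. The 100Gbps figure and the one-NIC-per-pair ratio come from the text above. Pairing adjacent GPU indices (0 and 1, 2 and 3, and so on) is a hypothetical choice for illustration, not a documented mapping.

```python
NUM_GPUS = 16       # from the article
GPUS_PER_NIC = 2    # one NIC per pair of GPUs, from the article
NIC_GBPS = 100      # gigabits per second per NIC, from the article


def nic_for_gpu(gpu_index):
    """Map a GPU index to a NIC index (hypothetical adjacent pairing)."""
    if not 0 <= gpu_index < NUM_GPUS:
        raise ValueError(f"GPU index out of range: {gpu_index}")
    return gpu_index // GPUS_PER_NIC


def fabric_summary():
    """Return NIC count and aggregate network bandwidth."""
    num_nics = NUM_GPUS // GPUS_PER_NIC
    total_gbps = num_nics * NIC_GBPS
    return {
        "num_nics": num_nics,
        "total_gbps": total_gbps,
        # 8 bits per byte: convert gigabits/s to gigabytes/s.
        "total_gigabytes_per_s": total_gbps / 8,
        "per_gpu_share_gigabytes_per_s": NIC_GBPS / GPUS_PER_NIC / 8,
    }


if __name__ == "__main__":
    print(fabric_summary())
    print("GPU 5 uses NIC", nic_for_gpu(5))
```

Even with a NIC for every two GPUs, each GPU's share of network bandwidth is a small fraction of its 300GB/s NVLink figure, which is why keeping GPU-to-GPU traffic on the NVSwitch fabric matters.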

Overall, this is high-end connectivity, but the DGX-2 is rated at up to 10kW, which is a massive jump over the DGX-1 and is likely due to the NVSwitch array.

NVIDIA HGX-2 Vendor Support

Major OEM players such as Lenovo, Supermicro, QCT, and Wiwynn will support the HGX-2 architecture in systems designed for their major customers.