At GTC 2018, Baidu brought some serious hardware. The Baidu X-MAN is a liquid-cooled 8-way NVIDIA Tesla V100 shelf that shows how the company is grappling with the power consumption of massive GPU farms for deep learning and AI. Companies like Facebook have opened their deep learning GPU shelves to the public, and now Baidu is showing off its design.

In the world of machine learning and AI, Baidu has been forging ahead as a leader for years. Unlike the average startup, Baidu operates on a massive scale. The company both uses deep learning and AI in its core business today and operates a research arm focused on new frameworks and innovations for the future. Like Google and Facebook, it also provides much of this work to the community. One example is PaddlePaddle, Baidu's open source framework for massively parallel training.

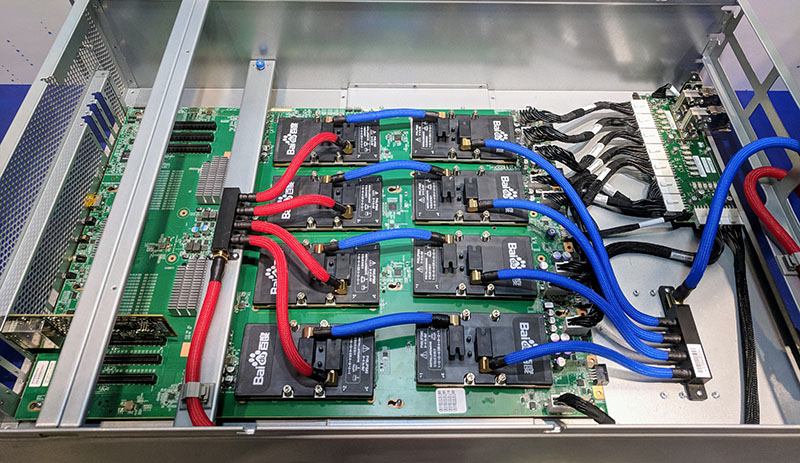

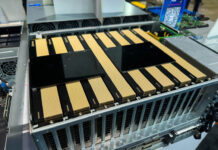

Baidu X-MAN 8-Way Liquid Cooled GPU Shelf

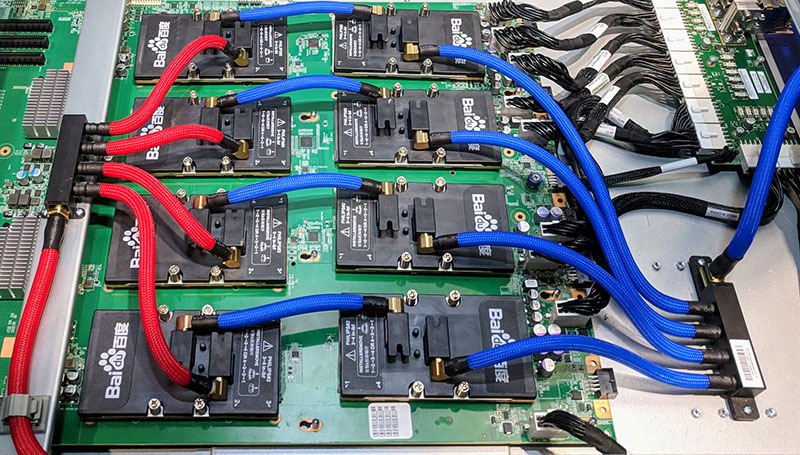

Baidu processes over 100PB of data per day, so it requires clusters of GPUs and FPGAs to handle the training and inferencing workloads. On the GPU side, the Baidu X-MAN shelf houses eight NVIDIA Tesla V100 or P100 GPUs.

The blue and red tubing is for liquid cooling. Cold liquid is pumped over the SXM2 packages using four parallel paths. You can see Baidu branding clearly on the water blocks.

Like many SXM2 designs, the Baidu X-MAN GPU box utilizes NVLink to speed communication between the GPUs. Unlike servers such as the NVIDIA DGX-1 and DGX-2, this is a PCIe-based system: instead of being a standalone server, it is designed to be operated on a switched PCIe network. That is why we see PCIe x16 slots available for external cabling.

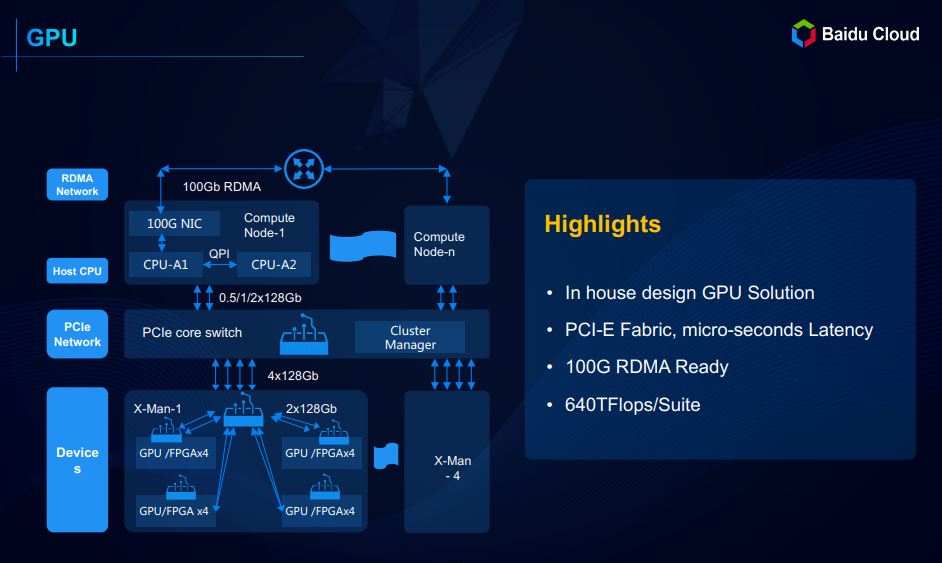

Here is a quick look at the Baidu Cloud infrastructure that utilizes the Baidu X-MAN GPU boxes. You can see that it is using a switched PCIe fabric to deliver low latency between the GPU boxes.

Baidu X-MAN Impact

The GPU architecture of Baidu Cloud is interesting enough on its own, but we are going to focus on the X-MAN. Liquid cooling large numbers of GPUs has an appreciable impact. Baidu Cloud claims it is running at a PUE (power usage effectiveness) of 1.11 or lower. Liquid cooling at this scale allows a 45% reduction in cooling power. Across large numbers of GPU boxes, this yields immense power savings.
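To put those figures in perspective, here is a rough sketch of the arithmetic. PUE is total facility power divided by IT equipment power, so a PUE of 1.11 means about 0.11W of overhead (cooling, power delivery, and so on) for every 1W delivered to the IT gear. The 1,000kW IT load and the 200kW baseline cooling figure below are hypothetical numbers chosen only for illustration; Baidu did not publish them, and the 45% reduction is applied to that made-up baseline rather than to any real Baidu data.

```python
def overhead_fraction(pue: float) -> float:
    """Non-IT power (cooling, power delivery, etc.) as a fraction of IT power."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return pue - 1.0


def facility_power_kw(it_power_kw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE value."""
    return it_power_kw * pue


def cooling_after_reduction(cooling_kw: float, reduction: float = 0.45) -> float:
    """Cooling power left after a fractional reduction (45% per the article)."""
    return cooling_kw * (1.0 - reduction)


if __name__ == "__main__":
    it_load_kw = 1000.0          # hypothetical IT load, not a Baidu figure
    baseline_cooling_kw = 200.0  # hypothetical air-cooled baseline, not a Baidu figure

    total = facility_power_kw(it_load_kw, 1.11)
    print(f"Facility power at PUE 1.11: {total:.0f} kW")
    print(f"Overhead per IT watt: {overhead_fraction(1.11):.2f} W")
    print(f"Cooling after 45% cut: {cooling_after_reduction(baseline_cooling_kw):.0f} kW")
```

Since the savings are proportional to the IT load, the absolute number grows with every GPU box added, which is why this matters so much at hyper-scale.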

At GTC 2018 we saw a number of four, eight, and now sixteen GPU servers. The Baidu X-MAN is a great example of how the hyper-scale players are innovating on a completely different scale.

Cool stuff. Why do they use such a tall case? This could be 1-2U easy

where to buy this cooling system?