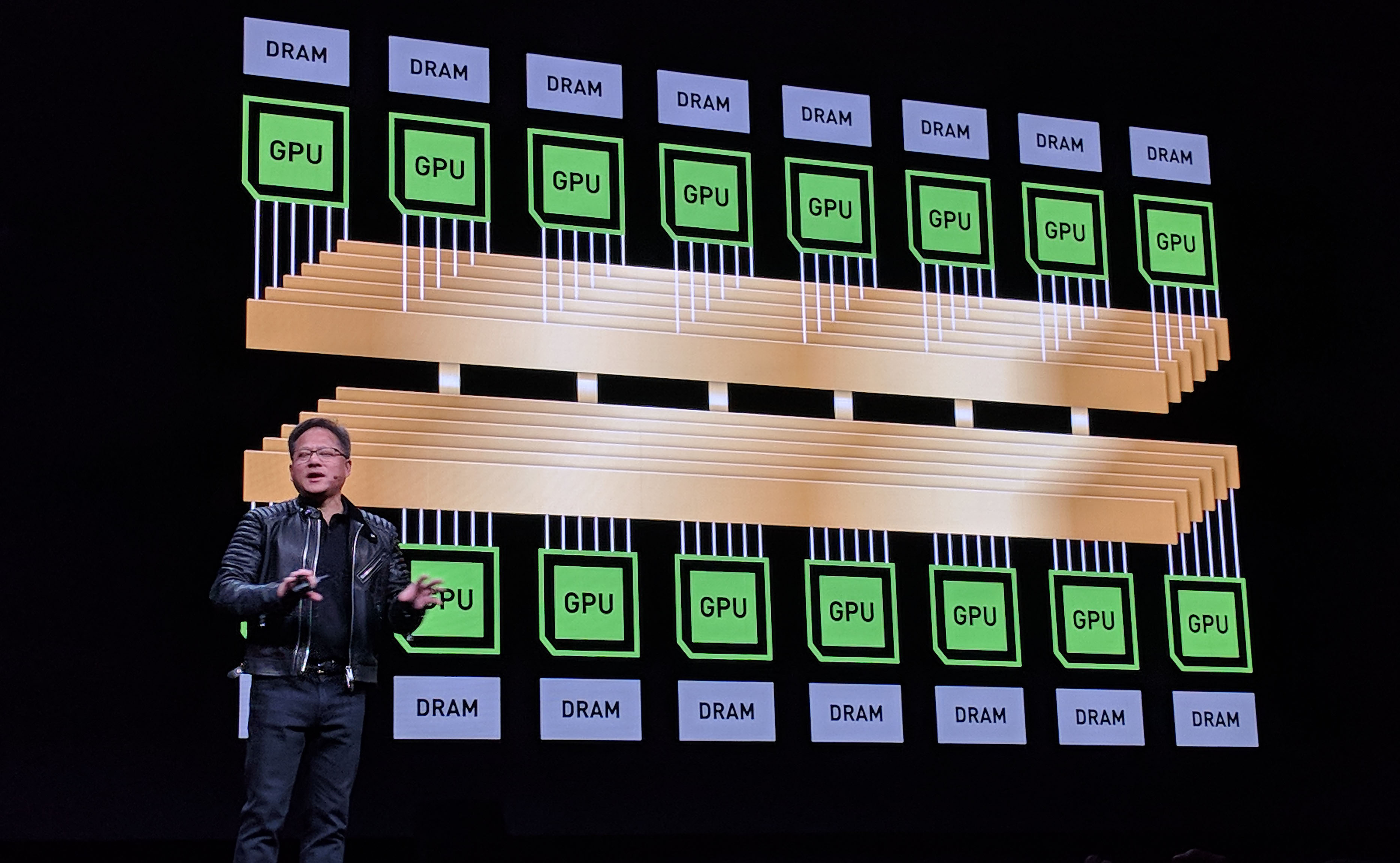

NVIDIA may have announced that all new DGX-1 servers and DGX Station workstations have been upgraded to 32GB NVIDIA Volta V100s, but that was the smaller of the data center announcements. The bigger one is a new next-level scale-up deep learning and AI training machine called the NVIDIA DGX-2. The NVIDIA DGX-2 uses a new NVLink switch architecture, more RAM, and up to 16 NVIDIA Tesla V100 GPUs to achieve up to 2 petaflops of performance in a single machine.

Scaling-up with NVSwitch

Key to the DGX-2 is the NVSwitch. This is a technology the company desperately needs in order to achieve scale-up architectures. The background on this is threefold:

- NVLink is essentially a faster-than-PCIe 3.0 interconnect that allows for faster GPU-to-GPU communication, which is imperative for fast model training

- NVIDIA saw the PCIe 3.0 to PCIe 4.0/5.0 transitions taking too long, so it needed to act quickly to address its increasingly fast GPUs' need for more interconnect bandwidth. Over the years, NVIDIA has been scaling NVLink to faster speeds and larger architectures

- With the threat of scale-up solutions like Intel Nervana chips coming in 2018, NVIDIA needed to scale up its training machines. Generally, the companies we work with in the deep learning / AI space prefer to scale up before scaling out, as there are limits to InfiniBand infrastructure speeds and latency

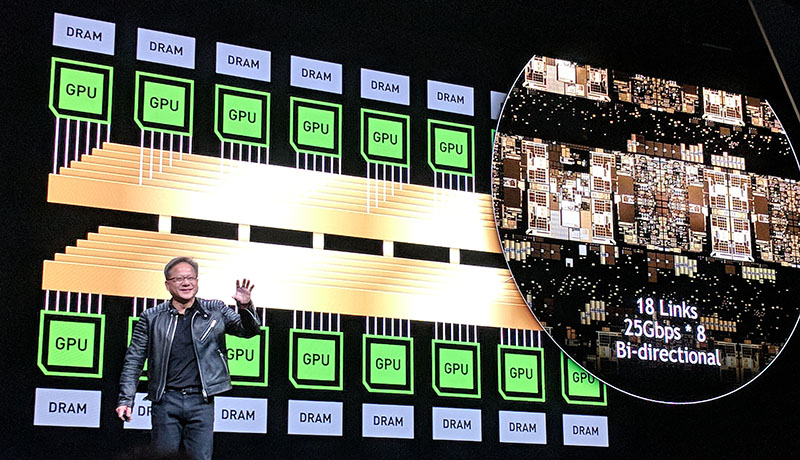

Although NVIDIA will be quick to point out that NVLink is not PCIe and the NVSwitch is not a PCIe switch, conceptually the architecture is similar. Adding an interconnect switch fabric that can support multiple layers of switches allows NVIDIA and its partners to create larger systems that scale up to more GPUs. Every GPU-to-GPU connection has up to 300GB/s of bandwidth using the NVSwitch.
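To put the 300GB/s figure in context, here is a rough back-of-the-envelope comparison against a PCIe 3.0 x16 slot. This is only a sketch under stated assumptions: we assume the 300GB/s number is a bidirectional total, and we use the PCIe 3.0 signaling rate (8 GT/s per lane with 128b/130b encoding) to estimate the theoretical x16 peak. Real-world throughput on either interconnect will be lower.

```python
# Rough theoretical comparison; not a measured benchmark.

PCIE3_GT_PER_S_PER_LANE = 8.0   # PCIe 3.0 signaling rate per lane
PCIE3_ENCODING = 128 / 130      # 128b/130b line-encoding efficiency
PCIE3_LANES = 16                # a full x16 slot

# 300GB/s GPU-to-GPU figure quoted in the article.
# Assumption: this is the bidirectional total.
NVSWITCH_GB_PER_S = 300.0


def pcie3_x16_one_way_gb_per_s() -> float:
    """Theoretical one-direction PCIe 3.0 x16 bandwidth in GB/s."""
    gigabits_per_s = PCIE3_GT_PER_S_PER_LANE * PCIE3_ENCODING * PCIE3_LANES
    return gigabits_per_s / 8  # bits to bytes


pcie_bidir = 2 * pcie3_x16_one_way_gb_per_s()
ratio = NVSWITCH_GB_PER_S / pcie_bidir

print(f"PCIe 3.0 x16 (bidirectional, theoretical): {pcie_bidir:.1f} GB/s")
print(f"NVSwitch GPU-to-GPU vs PCIe 3.0 x16: {ratio:.1f}x")
```

On these assumptions, the NVSwitch figure works out to roughly an order of magnitude more bandwidth than a single PCIe 3.0 x16 link.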

From a competitive side, it is not lost on us that this also means the CPU will be further de-emphasized in deep learning and AI systems. With the DGX-2, and presumably larger systems going forward, NVIDIA has lowered the CPU-to-GPU ratio. In the DGX-1 there are two CPUs for eight GPUs. In the DGX-2 there are two CPUs for 16 GPUs. That means Intel will sell fewer chips into these systems.
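The ratio shift is simple arithmetic, sketched below using only the CPU and GPU counts quoted above. The dictionary layout and function name are our own illustration.

```python
# CPU and GPU counts as quoted in the article.
systems = {
    "DGX-1": {"cpus": 2, "gpus": 8},
    "DGX-2": {"cpus": 2, "gpus": 16},
}


def gpus_per_cpu(config: dict) -> float:
    """Number of GPUs served by each CPU socket."""
    return config["gpus"] / config["cpus"]


ratios = {name: gpus_per_cpu(cfg) for name, cfg in systems.items()}

for name, value in ratios.items():
    print(f"{name}: {value:.0f} GPUs per CPU")
```

Each CPU in the DGX-2 sits alongside eight GPUs instead of four, so for a given number of GPUs sold, half as many CPUs go into the system.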

NVSwitch is another way for NVIDIA to differentiate itself from PCIe switch architectures like the dual-root DeepLearning10 and single-root DeepLearning11 reference systems we contributed in 2017. What is most intriguing about NVSwitch is that we do not know what the scaling limits are. We know that the latest switches are 18-port designs, but what topologies can that enable?

Next, we will talk more about the NVIDIA DGX-2 and how it uses the NVSwitch architecture.