Adding a large number of GPUs to a system is a major design focus in the industry at this point. AI/deep learning companies have been working with single-root servers with 8-10 GPUs, and we showed examples of these in our DeepLearning10 and DeepLearning11 builds. For password cracking and the new hot market, crypto mining, PCIe bandwidth is less of a concern, and the focus shifts to minimizing non-GPU component costs. When the Ethereal Capital 16x GPU rig entered the lab, we were impressed. It came equipped with 16x NVIDIA P106-100 6GB GPUs, Delta-sourced fans and power supplies, and a server-class embedded motherboard.

A few weeks ago, we published our Ethereal Capital 16x GPU Mining System P106-100-X16 Preview. At that time, some of the IP protection applications were still in progress, so we could not go into full detail. Today, we get to review a well-engineered GPU crypto mining rig designed to be deployed next to inexpensive sources of power.

Ethereal Capital P106-100-X16 16x GPU Overview

Our initial introduction to the Ethereal Capital P106-100-X16 happened before the unit arrived. When the manufacturer was setting up shipping, we received a message to the effect that packaging would take an extra day because they were not used to shipping anything smaller than a pallet.

Since this system is designed to be operated in large mines near cheap power, we tested it in a data center (hence the pictures do not have the normal STH white balance). That gave us the opportunity to try it out in a real-world scenario.

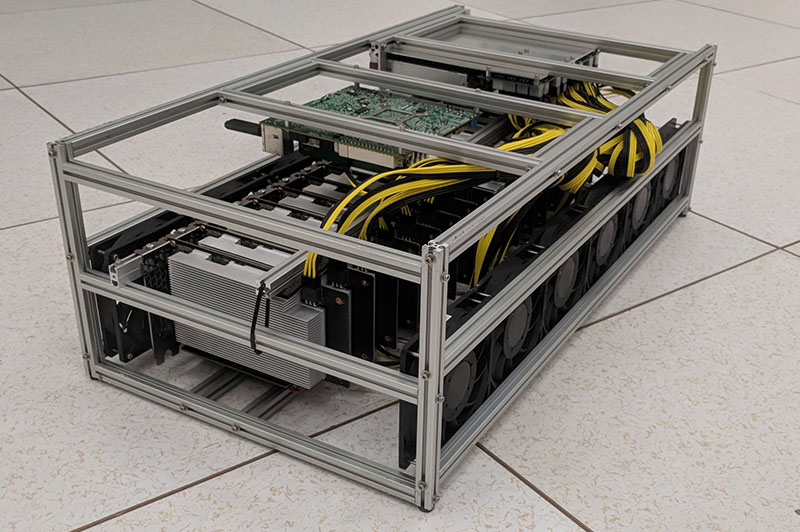

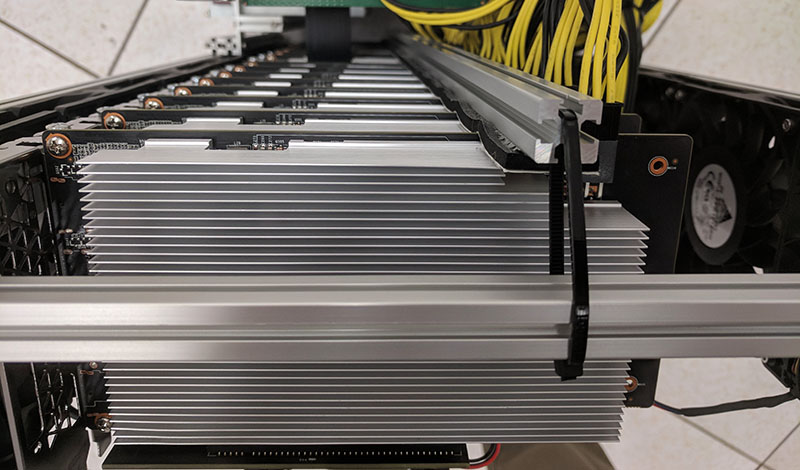

As you might imagine, with 16x GPUs, this is an absolutely massive system. Here is a look at the system from our first angle. You can see the power distribution to each card, the motherboard undercarriage and the high-quality power cables used.

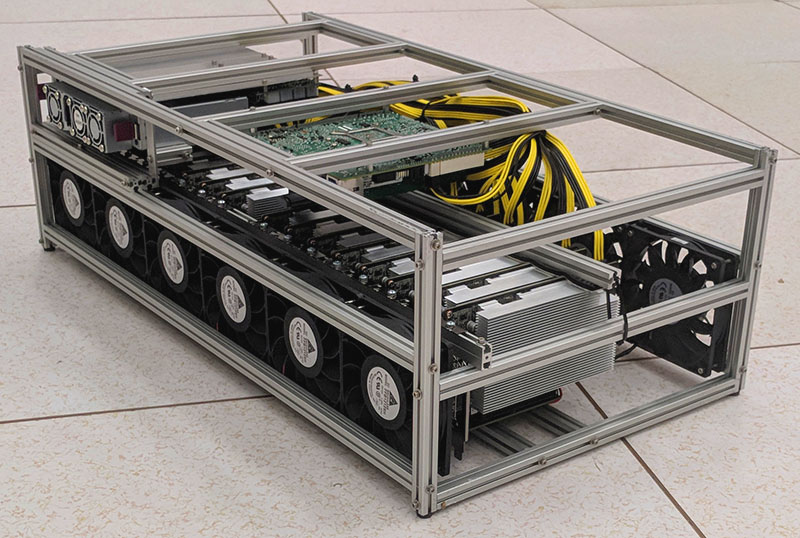

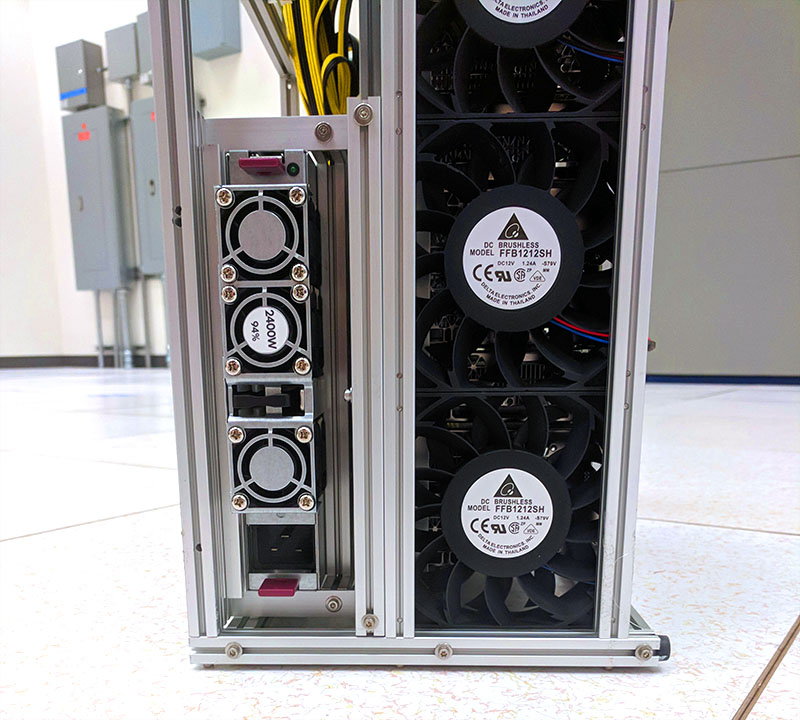

From another angle, you can see the twelve Delta brushless fans that keep the 16x NVIDIA P106-100 GPUs cool. These are the class of fans we commonly see in servers rather than consumer-focused fans, and they move a lot of air. Our GPUs were kept below 70C without issue.

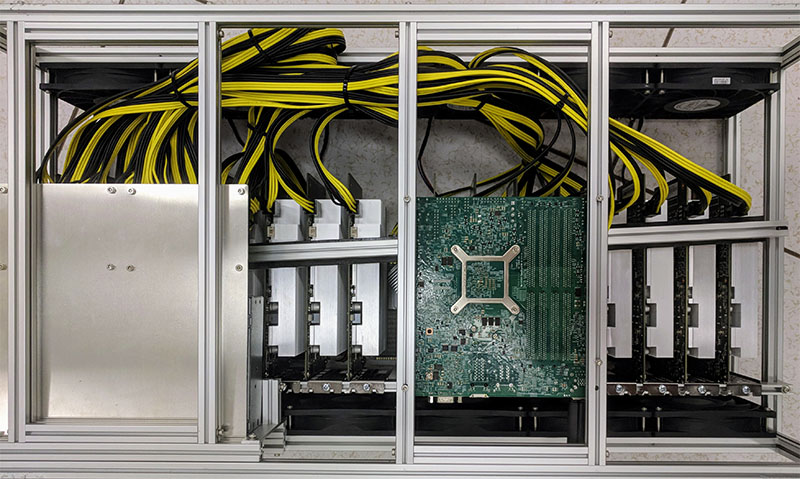

This view highlights the system's I/O and what must be cabled. At minimum, one can connect a single network port and a single power cable and be up and running.

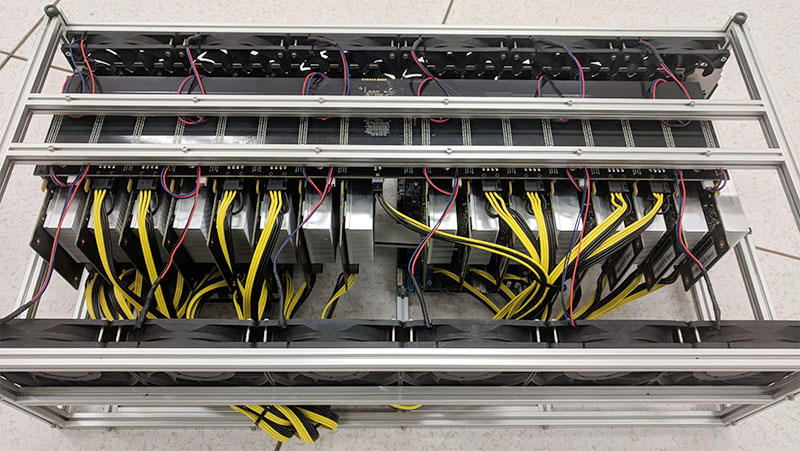

Looking from the top view, we can see that the GPUs stretch across the width of the enclosure.

The motherboard uses a PCB riser to connect its PCIe 3.0 x16 slot to a custom-made PCB that is part of the "special sauce" of the system. This custom PCB provides PCIe x16 physical slots connected through a PLX (Broadcom) PCIe switch. Lower-end mining rigs typically connect GPUs using arrays of small PCB risers linked by USB 3.0 cables and connectors. Here, all of the PCIe wiring is done in PCB, which is a significant upgrade.
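To put the PCIe switch in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes each GPU links to the switch with a single PCIe 3.0 lane and that all 16 GPUs talk to the host at the same time. Neither the per-GPU link width nor the width of the riser to the host is stated in this section, so treat both as assumptions. Only 128b/130b line coding is counted, not packet overhead.

```python
# Back-of-the-envelope PCIe 3.0 bandwidth for a switched 16-GPU backplane.
# Assumptions (not from the article): x1 downstream link per GPU, all GPUs
# transferring simultaneously, only 128b/130b line coding counted.

GT_PER_S = 8.0          # PCIe 3.0 signaling rate per lane (GT/s)
ENCODING = 128 / 130    # PCIe 3.0 line-coding efficiency


def lane_gbytes_per_s() -> float:
    """Usable one-direction bandwidth of one PCIe 3.0 lane, in GB/s."""
    return GT_PER_S * ENCODING / 8  # 8 bits per byte


def per_gpu_share(upstream_lanes: int, gpus: int, downstream_lanes: int = 1) -> float:
    """Worst-case GB/s per GPU: the smaller of its own link and an equal
    slice of the shared upstream link to the host."""
    upstream = upstream_lanes * lane_gbytes_per_s()
    own_link = downstream_lanes * lane_gbytes_per_s()
    return min(own_link, upstream / gpus)


if __name__ == "__main__":
    for lanes in (4, 16):
        print(f"x{lanes} upstream: {per_gpu_share(lanes, 16):.3f} GB/s per GPU")
```

Under these assumptions, an x16 upstream link leaves each GPU limited by its own x1 link (about 0.985 GB/s), while an x4 upstream link cuts the worst-case share to about a quarter of that. Mining workloads move relatively little data across the bus once work is loaded onto the cards, which is consistent with PCIe bandwidth being less of a concern here than in deep learning.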

All 16x NVIDIA P106-100 GPUs are very well secured and did not come loose during shipping. Each card is held in place by two bottom braces, the PCIe switch PCB, and a top brace, along with standard faceplate mounting.

You can also see the walls of massive Delta fans that are at the front and rear of the passively cooled GPUs. Using mining-specific GPUs minimizes headaches with multiple video outputs and saves power since each GPU does not have its own fan(s) nor video out circuitry.

These NVIDIA P106-100 GPUs are essentially mining focused variants of the NVIDIA GTX 1060 6GB cards. They lack video outputs and the design has been simplified and optimized for cryptocurrency mining.

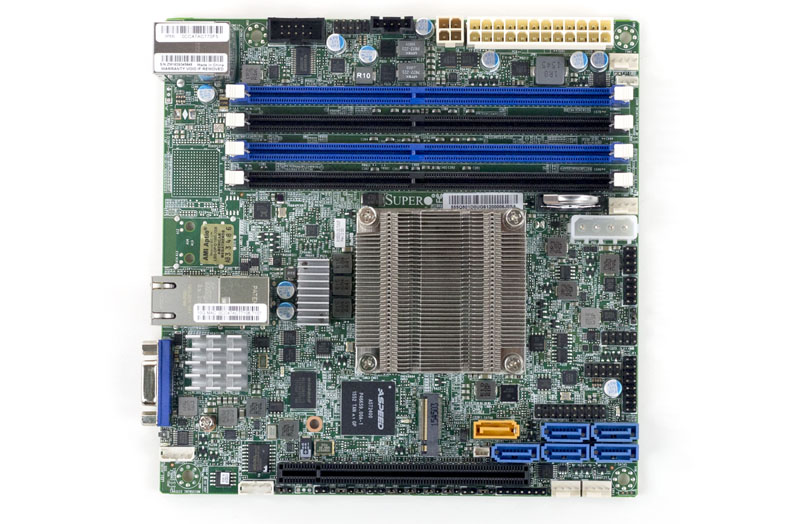

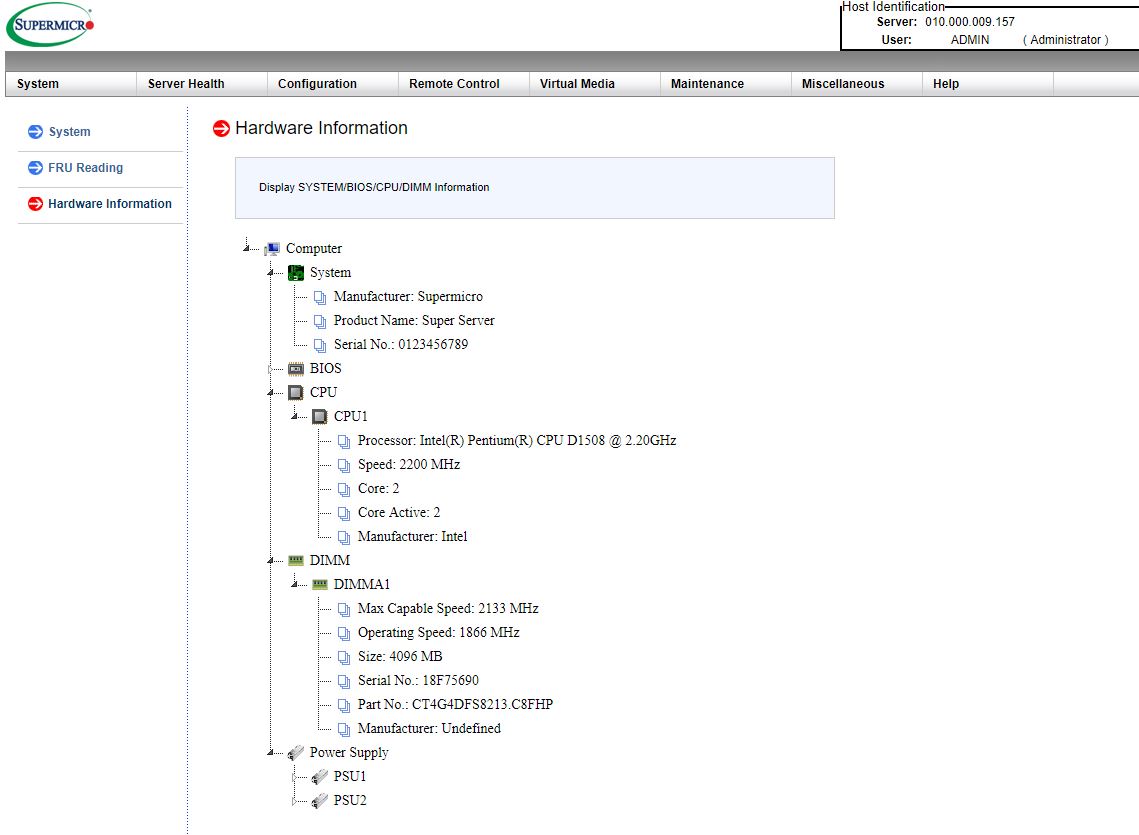

At the heart of this system is a Supermicro X10SDV-2C-TLN2F motherboard with a single 4GB DDR4 stick of RAM. The Pentium D1508 dual-core (four-thread) CPU easily kept our miners fed at single-digit CPU utilization. The ethOS mining OS is loaded onto a USB key, which makes swapping easy and minimizes the cost of storage media. In a larger mine, it is possible to network boot the motherboard. Interestingly, given the X10SDV's embedded Pentium D1508 CPU, this rig actually comes with dual 10GbE networking. That may make it the fastest-networked purpose-built mining rig around, but it also means you can have a second network path for A + B networking. The last thing you want is for a switch in your remote data center to go down and take all of your rigs with it.

At the far right of the picture, you can see a custom PCB riser that connects the switched GPU PCIe PCB to the motherboard. Despite having only a single PCIe slot, this embedded motherboard is able to provide PCIe connectivity to 16x GPUs.

Luckily, since this is STH, we had a photo from our X10SDV-2C-TLN2F review on hand and did not need to pull the motherboard. Feel free to read the review for more on its capabilities.

Power is provided by a Delta 2.4kW, 94% efficient power supply. The PSU is both efficient and a high-quality unit, which we were excited to see. There is only a single PDU connection to keep the wiring as simple as possible.

To sum up the hardware experience, Ethereal Capital is using excellent hardware in the P106-100-X16. We would have liked to see some enhancements to the metal structure. We were told that ours is a prototype and that the production version will have more enclosed sides.

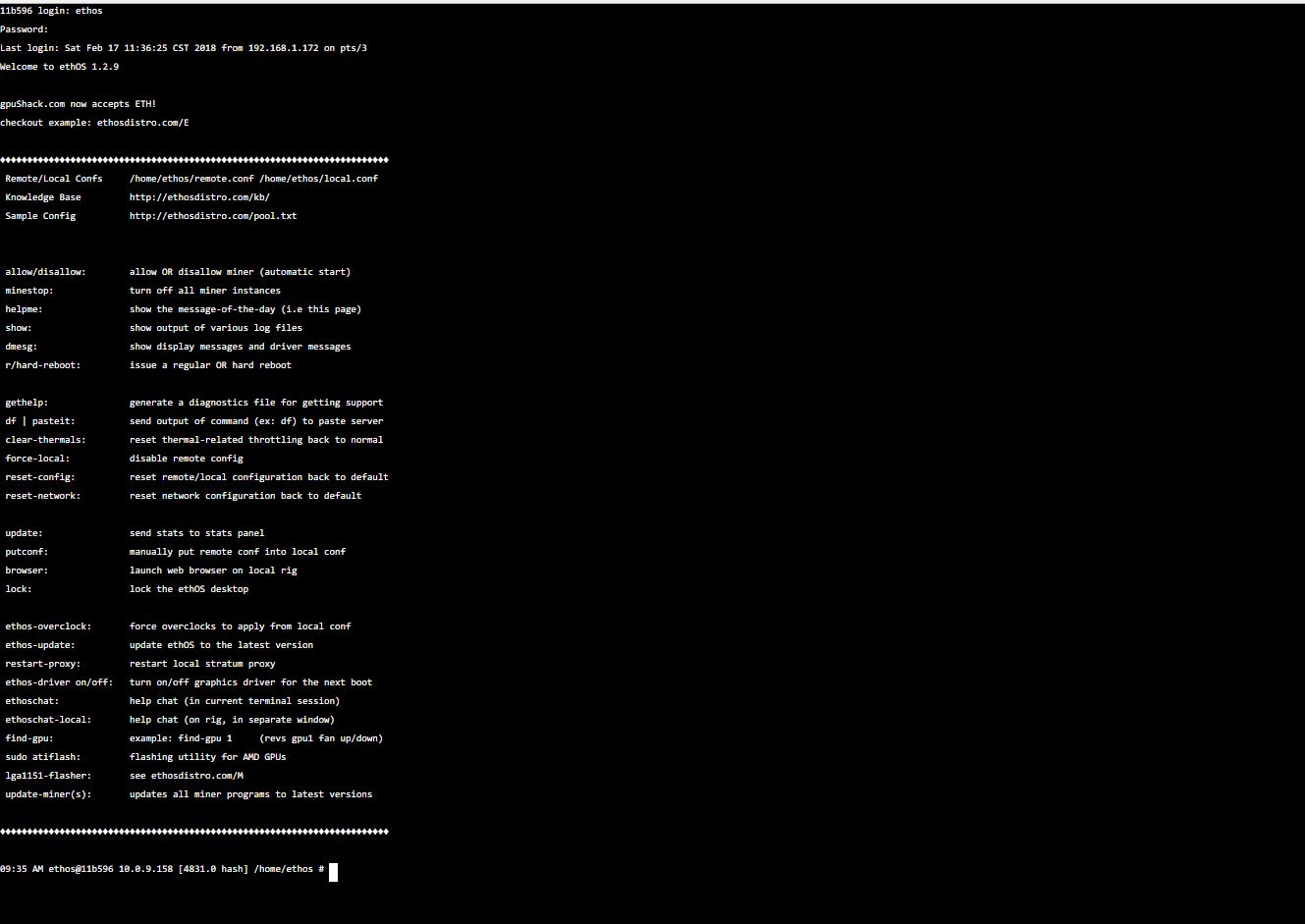

Ethereal Capital P106-100-X16 ethOS and Management

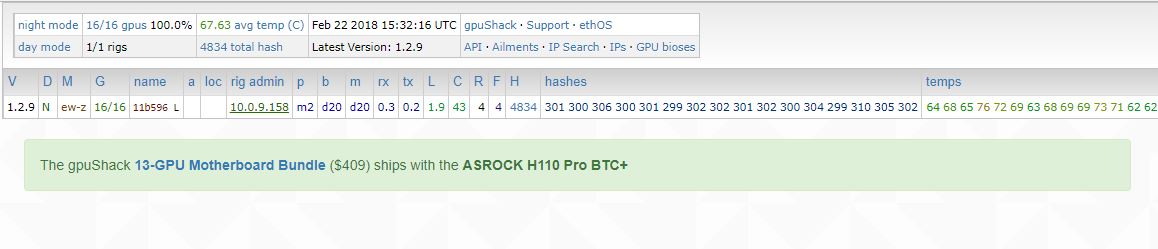

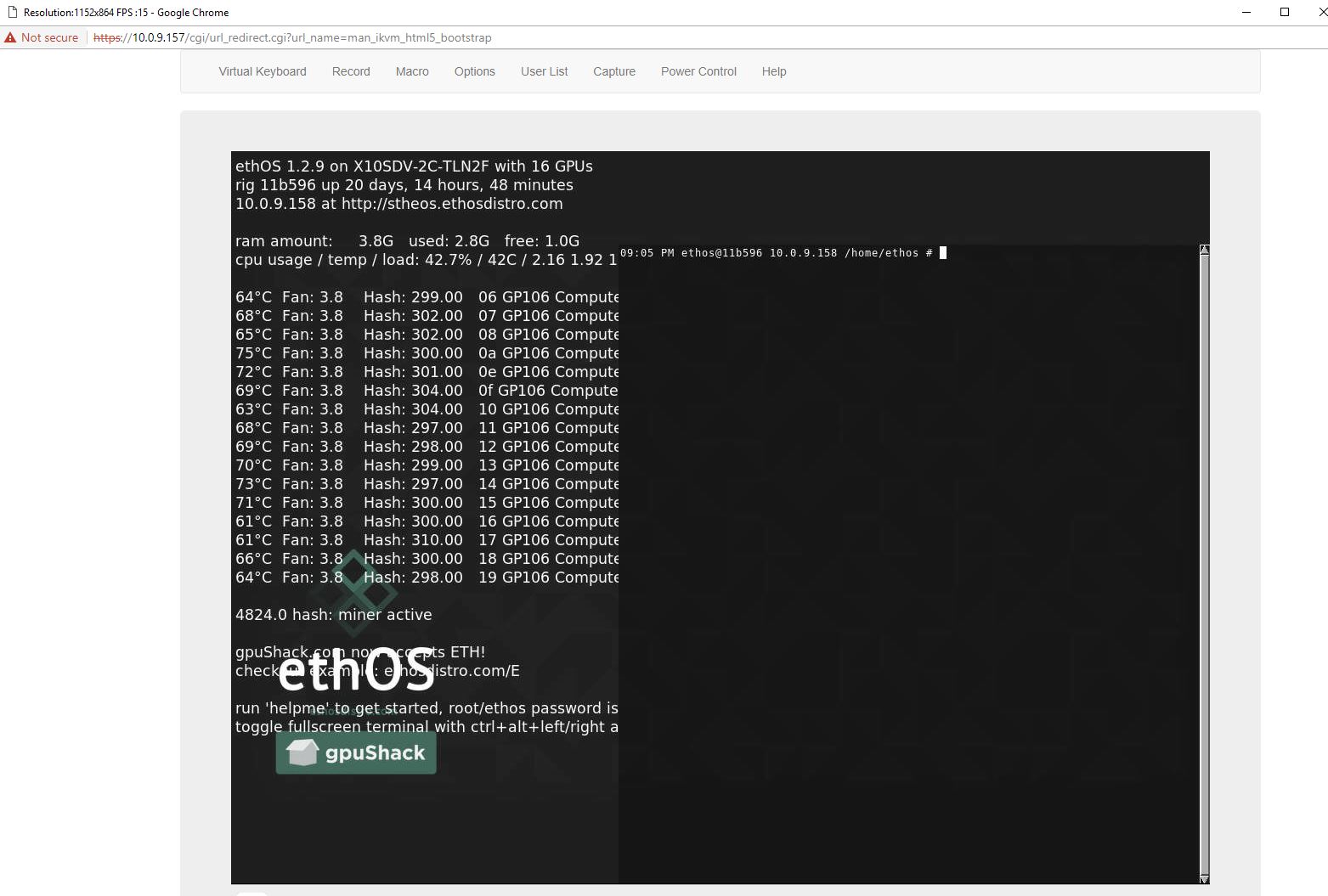

The Ethereal Capital team worked with the ethOS developers to ensure their solution supported 16 GPUs. Realistically, there are 17 GPUs in this system, since there is a small GPU dedicated to remote management. Many OSes struggle with this many GPUs plus an additional BMC GPU, so the extra work by ethOS is appreciated.

Once the solution is up and running, you get a management dashboard. We only had one system online; however, one can have many running simultaneously, all managed from the same centralized dashboard, where you can see stats like power consumption and temperatures.

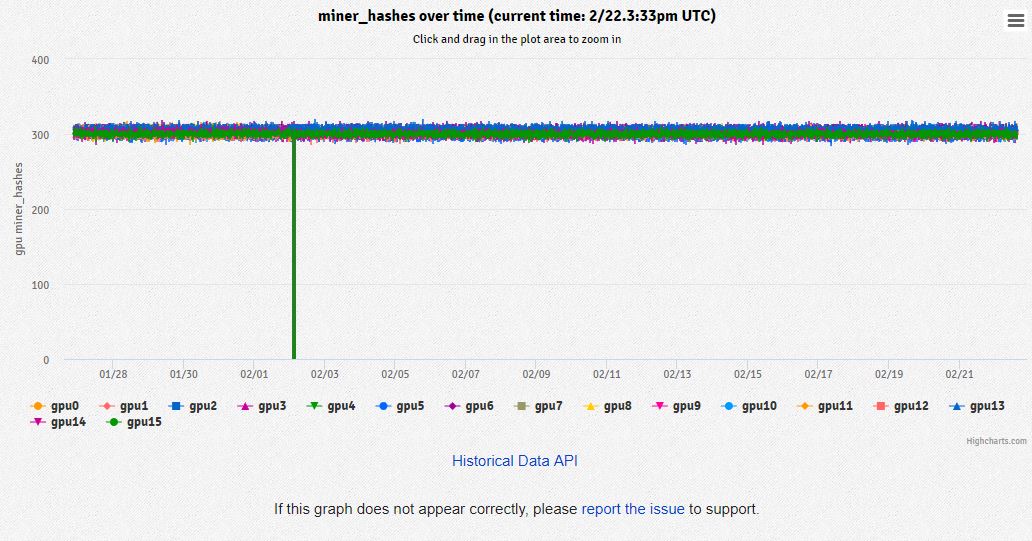

There are also graphs that show individual GPU hashrates over time, temperatures and power consumption over time, and similar metrics.

Another nice feature of ethOS is that the developers added a web-based console application. You can click a link to the system, and a new browser window opens that allows you to log in and work remotely. We are not doing a full ethOS review in this article, but ethOS can pull a remotely managed configuration file, so if you want to change what the rig is mining, you can do so without logging into the system at all. This is especially important for managing fleets of these systems.

Another key feature of the Ethereal Capital P106-100-X16 is that, by virtue of using a Supermicro server-class embedded motherboard, it has IPMI and remote management capabilities. This is something that you do not get on consumer-based platforms. It also leads to some excellent functionality. For example, there are both Java-based and HTML5 iKVM features. With this, you can troubleshoot your rig remotely or perform low-level maintenance such as changing BIOS settings.

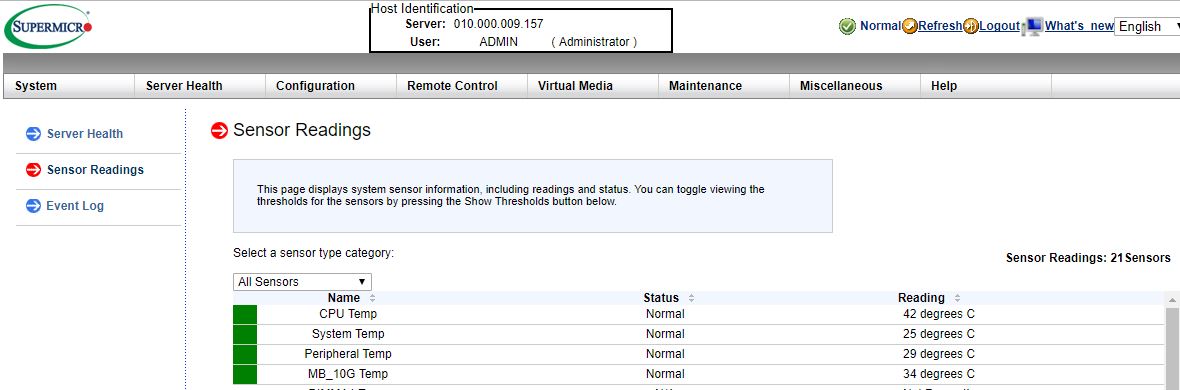

The IPMI functionality also provides features such as the ability to remotely mount CD/ISO images and to monitor the system. If you want to see motherboard and CPU temperatures, for example, there are sensor readings for that.

One also has access to features such as event logging, remote power cycling, and watchdog features. For those times when you need to perform system inventories remotely, one can pull basic hardware information even when the system is powered off.
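For fleets, these IPMI functions are usually scripted rather than clicked through. Here is a minimal Python sketch, assuming the standard open-source `ipmitool` CLI is installed on a management host and the BMC is reachable over the network with IPMI v2.0 (`-I lanplus`). The host address and user name in the usage line are hypothetical placeholders. The `-E` flag tells ipmitool to read the password from the `IPMI_PASSWORD` environment variable so it does not appear on the command line.

```python
import shlex
import subprocess

# Friendly task names mapped to standard ipmitool subcommands.
TASKS = {
    "power_status": ["chassis", "power", "status"],
    "power_cycle": ["chassis", "power", "cycle"],  # remote power cycling
    "sensors": ["sdr", "list"],                    # temperature/voltage/fan readings
    "event_log": ["sel", "list"],                  # system event log
    "inventory": ["fru", "print"],                 # FRU hardware inventory
    "watchdog": ["mc", "watchdog", "get"],         # BMC watchdog timer state
}


def ipmi_argv(host: str, user: str, task: str) -> list[str]:
    """Build the ipmitool argument list for one task against one BMC."""
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *TASKS[task]]


def run_ipmi(host: str, user: str, task: str) -> str:
    """Run the task and return ipmitool's stdout (raises on a non-zero exit)."""
    result = subprocess.run(
        ipmi_argv(host, user, task), capture_output=True, text=True, check=True
    )
    return result.stdout


if __name__ == "__main__":
    # Hypothetical BMC address and user; prints the command instead of running it.
    print(shlex.join(ipmi_argv("192.0.2.10", "admin", "sensors")))
```

Building the argument list separately from running it keeps the command easy to log and test, and looping `run_ipmi` over a list of BMC addresses is one simple way to poll a whole fleet.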

The key here is that this solution is extremely easy to manage remotely. After we locked the cabinet, we never had to go back to service the mining rig. If you are deploying these in remote data centers where you do not have employees, this type of capability is a must-have.

Ethereal Capital P106-100-X16 Performance, Power Consumption, Profit, and Stability

From a mining perspective, the system provided approximately 305 Sol/s per GPU in Equihash mining, or around 4,880 Sol/s in aggregate. We were able to push this to 325 Sol/s per GPU, or about 5,200 Sol/s in aggregate, without much effort. On the Ethereum side, we saw 24-25 MH/s per GPU. During our testing, Equihash coins (including Zcash, Zclassic, Bitcoin Gold, and Zencash, among others) were more profitable than Ethereum on most days, but the two were very close.
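The aggregate figures are simply the per-GPU rates multiplied by 16 cards. Here is a trivial Python sketch that reproduces them, assuming every GPU hashes at the same rate:

```python
GPUS = 16  # P106-100 cards in the rig


def aggregate(per_gpu: float, gpus: int = GPUS) -> float:
    """Total rig hashrate, assuming all GPUs run at the same per-GPU rate."""
    return per_gpu * gpus


def tuning_gain_pct(before: float, after: float) -> float:
    """Percentage improvement from tuning."""
    return (after - before) / before * 100


if __name__ == "__main__":
    print(f"Equihash stock: {aggregate(305):,.0f} Sol/s")
    print(f"Equihash tuned: {aggregate(325):,.0f} Sol/s")
    print(f"Ethereum: {aggregate(24):,.0f}-{aggregate(25):,.0f} MH/s")
    print(f"Tuning gain: {tuning_gain_pct(305, 325):.1f}%")
```

In practice, individual cards rarely hash at identical rates, so measured totals can differ slightly from this idealized product.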

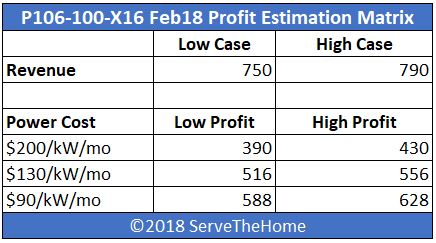

During our testing, the overall mining market saw a major downtrend. We were also mining relatively larger, more liquid coins rather than something less liquid and potentially more profitable. Even so, we still yielded about $25/day in proceeds before optimization and about $26.25/day after a bit of optimization, or $750-$788 per 30-day month in crypto revenue.

In an expensive mining data center, we would expect $200/kW per month for electricity and rack space. At up to 1.8kW, this means up to $360/month for power, or a $390-$428/month profit. In less expensive areas, such as Northern Virginia, USA, with $0.055/kWh power, you can find data centers for $120-$140/kW, which means a monthly profit of around $500-$550. These systems are also suitable for some of the lower-cost power installations at $100/kW per month or less, which can bring monthly profits into the $600/month range.
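The profit matrix is straightforward arithmetic. Here is a minimal Python sketch of it, assuming a 30-day month (which is what the $750-$788 revenue range implies), the 1.8kW worst-case draw, and an all-in $/kW rate that covers both power and rack space. Revenue is held flat at the test-period figures, which moved considerably over time.

```python
DAYS_PER_MONTH = 30  # implied by $25/day -> $750/month
RIG_KW = 1.8         # worst-case power draw used in the text


def monthly_profit(usd_per_day: float, usd_per_kw_month: float, kw: float = RIG_KW) -> float:
    """Monthly mining revenue minus hosting billed per kW per month
    (the $/kW rate is assumed to cover both power and rack space)."""
    revenue = usd_per_day * DAYS_PER_MONTH
    hosting = usd_per_kw_month * kw
    return revenue - hosting


if __name__ == "__main__":
    for rate in (200, 140, 120, 100):  # $/kW-month scenarios from the text
        low = monthly_profit(25.00, rate)   # before optimization
        high = monthly_profit(26.25, rate)  # after optimization
        print(f"${rate}/kW-month: ${low:,.0f} to ${high:,.0f} per month")
```

Computed this way, the $120-$140/kW scenarios span roughly $498 to $572 per month, depending on which revenue and rate figures are paired. The $500-$550 figure is therefore a midrange approximation.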

We do want to note that had we run this same matrix using January 2018 mining revenue, the profit figures would be much higher. There are also opportunities to explore additional coins and optimize power, which can again increase profit. What this matrix should clearly show is why large-scale mining operations chase low-cost power: it has a dramatic impact on profit. The Ethereal Capital P106-100-X16 is designed to operate in those low-cost power data centers where remote management and high degrees of reliability are a must.

The system performed extremely well during a few weeks of testing. We did not see any issues during our testing in a 72F / 22.2C data center.

Final Words

At the end of the day, this is the type of system you would want to deploy in a remote data center. The fans and power supply are Delta components made for 24×7 operation. The Supermicro motherboard is an embedded design that provides out-of-band management capabilities that consumer-based solutions simply cannot match. This was our first experience with ethOS, and it did have a learning curve. After an hour or two, we had everything up and running, and the system never looked back from there. Stability was top-notch. We also showed this to some of the data center operators we work with and heard comments like “mining rigs usually scare us, but this does not look scary.” During our evaluation, we provided feedback to the company, namely that enclosed sides would be great. We heard that this was planned for a future revision, along with the ability to utilize even more power.

The downside of this type of arrangement is that costs will be higher than with a scary mess of PCIe/USB cables and consumer components. If you are in the same room as the mining rig, servicing it is easy. If you have these rigs chasing cheap hydroelectric/geothermal power in far-flung corners of the world, the extra management and monitoring capabilities are exactly what you want. Further, the ability to do this remote troubleshooting will likely save money in the long run compared to hiring remote hands for diagnostics, just as it does with traditional servers.

If I were building out a mine with several thousand GPUs, this is the type of solution I would want.

If you are looking to build out a mine for yourself, here is the company’s website.

Not a good system, really: hacked together with good intentions, but with no clear design idea or research to base it on.

1. The PLX switch is underconnected (even at PCIe 3.0); the riser is clearly only x4. A mining system with cheap risers but a dedicated lane for each GPU will therefore outperform it, as would a better (but WAY more expensive) PLX chip. This is clearly a cost decision: 4-lane PLX chips are cheap, while 8- and 16-lane SKUs cost far more, even at PCIe 2.0.

2. Your point about redundant networking makes little sense overall, considering this has a single PSU in its stock configuration, and the PSU is the component most likely to fail, not a switch or the internal components. (Compared to Supermicro units, which conform to the ATX standard, HP units, which do not, or any consumer/workstation PSU, it is also extremely expensive to replace and hard to source.)

3. 2400W is underpowered for anything above these 1060-class cards; it delivers only 150W per GPU, leaving zero upgrade path (not even realistically to 1070s, due to system power consumption and especially the fans).

As someone responsible for multiple mining farms, I will absolutely not use this, nor will I sell it to my customers.

There are better and cheaper options, even if you do not want to (or cannot) build one yourself, both PCIe-native and switched.

Hi William, thanks for your comment.

1. We have not been seeing the impact of the PLX chip for mining performance. Did you have another application in mind? If we were doing deep learning/ AI work, certainly larger PLX chips would be in order.

2. These days, networking vendors tell us that the #1 cause of network failure is human error, whether in cabling or configuration. As a result, we look at failure as more than simply hardware. Likewise, on point 1, the PCB system reduces the number of cables and risers, again minimizing potential failure points. At around $190 retail to replace, the PSU seems reasonably priced compared to consumer alternatives at 2400W, especially given the retail supply constraints caused by mining. Also, compared to a consumer PSU, these are extremely easy to service.

3. Completely agreed that P106-100 6GB cards are not too far from the maximum you may want in this configuration. We did not have P104-100s to test, nor 16x AMD cards. We were shown another PSU option being prototyped.

On your choice not to deploy, it sounds like you are invested in a competing solution. There are many solutions out there and it is fascinating to see them. We are happy to review your solution if you think it is better.

We have recently completed enhancements to the system that allow us to run up to 5x 1500W power supplies in an N+1 configuration, to cater for higher-wattage GPUs and provide PSU redundancy.

The Broadcom PLX PEX 8636 PCIe switch chip was chosen for its 24 downstream PCIe x1 ports and upstream x16 port. We tested x16 upstream to the host, but it made no difference for the crypto mining application.

We can certainly provide an x16 bridge board and change the PCIe switch chip to another PLX model to suit other custom requirements.

An enclosed sheet metal chassis is under development and should be completed within the next six weeks.

Interesting machine and layout. A nice alternative to all the Frankenstein rigs I’ve seen out there. I hope you can source parts cheaply; otherwise, I see your margins being a little thin. But it’s a great prototype.

I would like to comment on William’s 3rd point: “3. 2400W is underpowered for anything above this 1060s; it delivers 150W per GPU only leaving zero upgrade path ”

It’s more accurate to say the GPUs pull power from the PSU. I’m running multiple rigs of 1060 Ti and 1070 Ti cards power-limited to 120W per GPU using nvidia-smi. If you are pulling 150W per GPU, you are doing something wrong.

So that’s 16 × 120W = 1920W, which leaves a decent margin for the other components.
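This power-budget arithmetic can be checked alongside the nvidia-smi calls it refers to. Here is a minimal Python sketch. `nvidia-smi -i <index> -pl <watts>` sets a per-GPU power limit; it needs root, and the value must fall within the card's supported range. The leftover watts still have to cover the motherboard, fans, and PSU derating, none of which were measured in this thread.

```python
def power_limit_argv(gpu_count: int, watts: int) -> list[list[str]]:
    """One nvidia-smi invocation per GPU index, each setting the power limit."""
    return [["nvidia-smi", "-i", str(i), "-pl", str(watts)] for i in range(gpu_count)]


def headroom_watts(psu_watts: int, gpu_count: int, watts_per_gpu: int) -> int:
    """PSU capacity left for non-GPU components at the given GPU power limit."""
    return psu_watts - gpu_count * watts_per_gpu


if __name__ == "__main__":
    for limit in (120, 140, 150):
        print(f"{limit}W/GPU: {headroom_watts(2400, 16, limit)}W left of 2400W")
    print(" ".join(power_limit_argv(16, 120)[0]))
```

At 150W per GPU, nothing is left for the rest of the system. At 120W per GPU, 480W remains.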

Haters gonna hate, I guess.

The only thing I see “wrong” with this design is that it would be more power-efficient with ducting for all those fans over the GPUs and motherboard. Using PWM control on the fans would save power too.

Kilcy: You’re right. I have 16x P104-100s running now, power-limited to 140W each, which is pretty much all you would want to run them at anyhow. The Power Backplane solves the single PSU limitation and will enable up to 5x Common Slot PSUs of up to 1500W each to be used.
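For the multi-PSU backplane, usable capacity under N+1 redundancy is the capacity that survives the loss of one supply. Here is a small Python sketch of that arithmetic, assuming identical supplies and ignoring load-sharing inefficiencies. The 2240W load in the usage line is only the 16 x 140W GPU figure and excludes the rest of the system.

```python
def usable_watts(psu_count: int, psu_watts: int, spares: int = 1) -> int:
    """Capacity still available after `spares` supplies fail (N+1 when spares=1)."""
    if psu_count <= spares:
        raise ValueError("need more supplies than spares")
    return (psu_count - spares) * psu_watts


def min_psus(load_watts: int, psu_watts: int, spares: int = 1) -> int:
    """Fewest identical supplies that carry load_watts with `spares` redundancy."""
    needed = -(-load_watts // psu_watts)  # integer ceiling division
    return needed + spares


if __name__ == "__main__":
    print(usable_watts(5, 1500))      # five 1500W supplies in N+1
    print(min_psus(16 * 140, 1500))   # GPU load only, no platform overhead
```

Five 1500W supplies in N+1 leave 6000W usable. The GPU load alone would need three supplies to stay redundant.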

Steve Ming: We are developing the enclosed sheet metal chassis now. The recently completed GPU Backplane v2 includes PWM fan control. New versions of the system will ship with 6x larger fans, PWM-controlled to achieve the target GPU temperature.
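The thread does not describe how the v2 backplane's PWM controller works. The following is only a hypothetical Python sketch of one common approach: proportional control on the hottest GPU's temperature. The target, gain, and duty-cycle limits are illustrative values, not Ethereal Capital's settings. A production controller would typically add hysteresis or an integral term to keep the fans from hunting.

```python
def fan_duty(gpu_temps_c: list[float], target_c: float = 65.0, gain: float = 5.0,
             min_duty: float = 30.0, max_duty: float = 100.0) -> float:
    """Hypothetical proportional fan curve.

    Duty cycle (percent) rises by `gain` points per degree that the hottest
    GPU sits above `target_c`, clamped to [min_duty, max_duty].
    """
    error = max(gpu_temps_c) - target_c
    duty = min_duty + gain * error
    return max(min_duty, min(max_duty, duty))


if __name__ == "__main__":
    for temps in ([60, 62, 61], [64, 70, 66], [72, 81, 75]):
        print(temps, "->", fan_duty(temps), "% duty")
```

Keying on the hottest card rather than the average means no single GPU is allowed to run hot just because its neighbors are cool.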

Nice machines; I would like to start selling them on my website :)

Over-engineered, probably overpriced, and without a view toward the big picture.

A nice 4U rack-mount case from Gray Matter, a good 7-slot motherboard, and 7x 1080 Ti reference cards will not only outperform this on most algorithms, but do it with far less complexity.

Don’t like risers? Understandable. Octominer makes a fine 8-slot board that works well with 1080 Ti blower cards.

And with only 7-8 cards, runs easily under Windows.