Proxmox VE has a killer feature for a Debian-based hypervisor: it can boot from a ZFS zpool. That means you can configure a bootable ZFS mirror right from the initial installer, something very few Linux distributions offer at this point. The installer makes this exceedingly easy, but sometimes the installed system stops at an error screen and fails to boot. In this article, we show how to fix this issue.

The Proxmox VE Cannot Import rpool ZFS Boot Issue

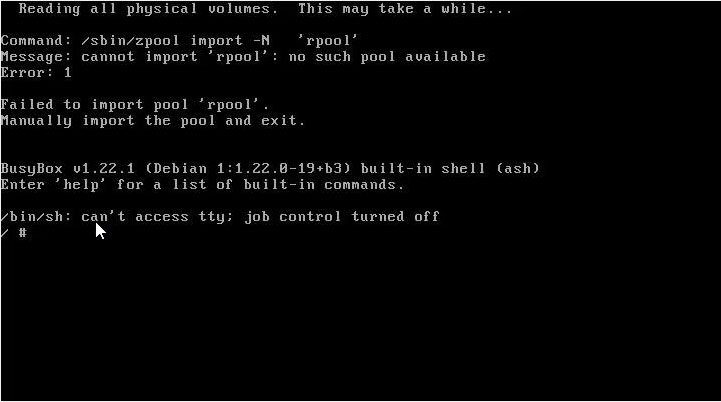

When this issue occurs, the console output generally looks like this:

Command: /sbin/zpool import -N "rpool"

Message: cannot import 'rpool' : no such pool available

Error: 1

Failed to import pool 'rpool'.

Manually import the pool and exit.

The screenshot above shows this error.

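The problem seems to occur most often with fast SSDs, but we have seen it on a few different machines, some with LSI/Avago/Broadcom HBAs and some using Intel PCH SATA, with different types of SSDs. It appears to be a timing issue: the boot process tries to import rpool before the disks are ready, which is why adding a delay helps. Luckily, the fix is easy.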

The basic steps to fix this are:

- Import rpool manually at the prompt so the system can continue booting (a temporary fix)

- Add a boot delay (for example, rootdelay=10) to the kernel command line in your GRUB configuration

- Update GRUB

- Reboot to confirm that the pool now imports on its own
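For the temporary fix, the error message itself points the way: at the (initramfs) prompt, running `zpool import -N rpool` and then `exit` lets the boot continue. The permanent fix can be sketched as a couple of shell helpers. This is a minimal sketch under stated assumptions, not the exact procedure from our video: it assumes the delay is set with the kernel's `rootdelay=` parameter (10 seconds is an illustrative value, not a tuned one), that the GRUB defaults live in `/etc/default/grub` with `GRUB_CMDLINE_LINUX_DEFAULT` on one line in double quotes, and that GNU sed is available. The helper names `add_grub_rootdelay` and `has_rootdelay` are hypothetical.

```shell
# Sketch only: hypothetical helpers for the GRUB-based fix.
# Assumes GRUB_CMDLINE_LINUX_DEFAULT is set on a single line in double quotes,
# e.g. GRUB_CMDLINE_LINUX_DEFAULT="quiet", and that GNU sed (sed -i) is present.

# Insert rootdelay=<seconds> at the start of GRUB_CMDLINE_LINUX_DEFAULT.
# Leaves the file unchanged if rootdelay= is already on that line, and does
# nothing if the variable is not set in the file at all.
add_grub_rootdelay() {
    _file=${1:-/etc/default/grub}
    _delay=${2:-10}
    if grep -q '^GRUB_CMDLINE_LINUX_DEFAULT=.*rootdelay=' "$_file"; then
        return 0
    fi
    sed -i "s/^GRUB_CMDLINE_LINUX_DEFAULT=\"/&rootdelay=${_delay} /" "$_file"
}

# Succeed if a kernel command line file (default: the running kernel's
# /proc/cmdline) contains a rootdelay=<number> parameter.
has_rootdelay() {
    grep -Eq '(^| )rootdelay=[0-9]+( |$)' "${1:-/proc/cmdline}"
}

# Typical use on the affected node, as root:
#   add_grub_rootdelay /etc/default/grub 10
#   update-grub
#   reboot
# After the reboot, "has_rootdelay && echo ok" confirms the delay is active.
```

Checking the idempotence in the helper means rerunning it after a failed attempt will not stack several rootdelay= entries on the command line.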

For those who want the full directions, here is a video we made on a Proxmox VE 5 node that was exhibiting this issue.

As you can see, it is an easy fix, but until it is applied the issue can drive you crazy.

Final Words

On our last dozen or so Proxmox VE machines, we have hit this issue twice. Booting from a ZFS mirror is a great feature of the platform, so we still advise using it. At the same time, since we hit this issue with different SSDs on different platforms (one Intel, one Supermicro), we felt it was worth posting a fix. It may not help you today, but bookmark it in case the issue strikes you in the future.

Note: with Proxmox VE 5.1, we have seen a Docker installation change that can cause an error when booting from the ZFS root zpool. If you instead see a bunch of strings that look like Docker container IDs, that is a different issue; we have a video coming on how to properly install Docker on Proxmox VE 5.1 with a ZFS rpool. Stay tuned.

I had what I think was the same problem not too long ago on a PVE 5.1x node. It started after I moved the system disks from one motherboard to another, so I assumed it had something to do with that, but the same fix you came up with worked for me as well. It was really frustrating trying to figure out why the import at boot wouldn't work when the command-line import I could do 10 seconds later worked absolutely fine... every time!

But I don't actually think it's a fast-SSD problem. The motherboard I moved from only had basic on-board SATA and a single add-in (AIB) SAS controller. The new motherboard has an extra SATA controller giving more on-board SATA ports, and I think the problem is simply that the kernel takes longer to enumerate the disks and controllers, and the controller my boot drives are plugged into is probably last. So when ZFS tries to import the rpool, the kernel hasn't finished enumerating the boot controller and its disks, and ZFS can't find them at that exact moment. By the time you get to the rescue prompt a few seconds later, the kernel has finished enumerating the controllers and disks, and the pool import works fine.

Thank you! This worked! I just installed Proxmox on my new R720 and was getting this exact same issue.

Thank you, your advice helped me.

Thanks, your tutorial helped me.

I also have this issue, but Proxmox VE 7.0 on my R720 is using systemd-boot. Is there a similar option for systemd-boot?

@Dan, yes, there is! Here's how to do it on Proxmox VE 7 and later:

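Edit /etc/kernel/cmdline and add the following to the end of the existing line (the whole kernel command line must stay on a single line):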

rootdelay=10

Then, save the file and run:

proxmox-boot-tool refresh

This copies the kernel images to the configured ESPs and regenerates the boot loader entries with the updated kernel command line.
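The same edit can be scripted so that the parameter always lands on the existing line. A minimal sketch, assuming `/etc/kernel/cmdline` already holds the current command line on its first (and only) line and that GNU sed is available; `add_cmdline_rootdelay` is a hypothetical helper name, and 10 seconds is simply the value used above.

```shell
# Sketch only: hypothetical helper for systemd-boot installs managed by
# proxmox-boot-tool. The kernel command line lives on ONE line in
# /etc/kernel/cmdline, so the parameter is appended to line 1 rather than
# written as a new line. Requires GNU sed for in-place editing (sed -i).
add_cmdline_rootdelay() {
    _file=${1:-/etc/kernel/cmdline}
    _delay=${2:-10}
    # Already present? Leave the file alone so repeated runs are harmless.
    if grep -Eq '(^| )rootdelay=[0-9]+( |$)' "$_file"; then
        return 0
    fi
    # Replace any trailing whitespace on line 1 with " rootdelay=<seconds>".
    sed -i "1s/[[:space:]]*\$/ rootdelay=${_delay}/" "$_file"
}

# Typical use, as root:
#   add_cmdline_rootdelay /etc/kernel/cmdline 10
#   proxmox-boot-tool refresh
```

After rebooting, cat /proc/cmdline should show rootdelay=10 among the boot parameters.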