We recently attended the launch of two new products, the AMD EPYC Embedded 3000 series and the AMD Ryzen Embedded V1000 series. This piece focuses on the AMD EPYC Embedded 3000 series, which has the technical features that should make the Intel Xeon D team nervous.

In terms of where these chips fit, AMD positions the EPYC Embedded 3000 series for applications that need more CPU compute, more memory, more storage, and more networking than display devices do. Essentially, this product line is the competitive answer to the Intel Xeon D-2100 series, which we have started testing and have covered extensively.

With that introduction, let us look at the EPYC Embedded 3000 series.

AMD EPYC Embedded 3000 Series – Get Excited

If you want to make a statement in the embedded market, to say AMD is back, this is the slide you need.

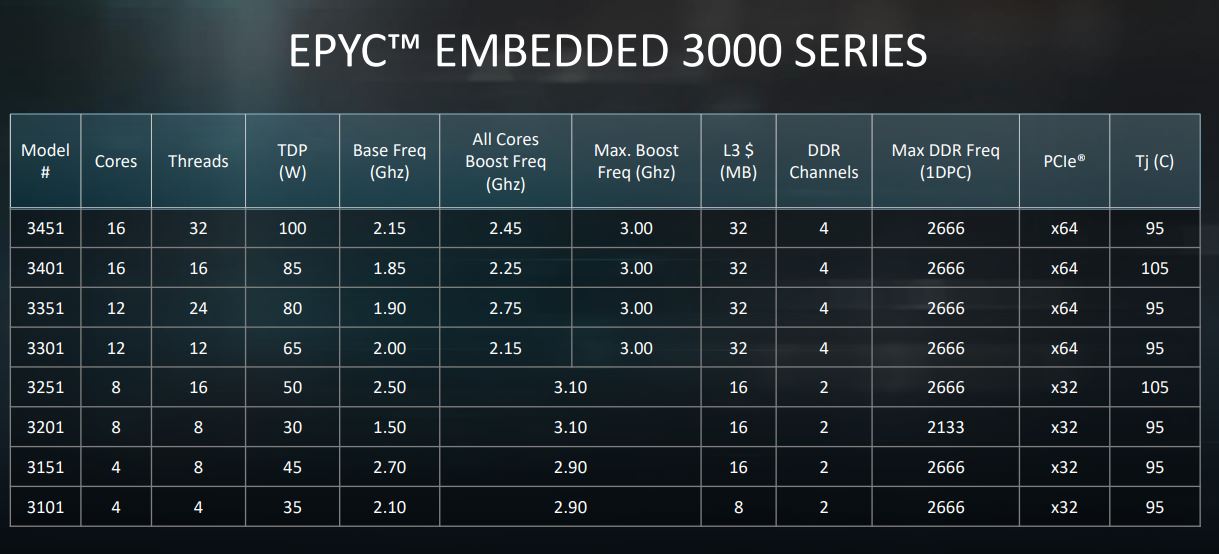

The AMD EPYC Embedded 3000 Series spans from 4 to 16 cores. While the Xeon D-2191 is an 18 core part, it is listed as limited availability. In practice, AMD’s range comes very close to the new Xeon D-2100 series in raw cores and clock speeds. Here is the list:

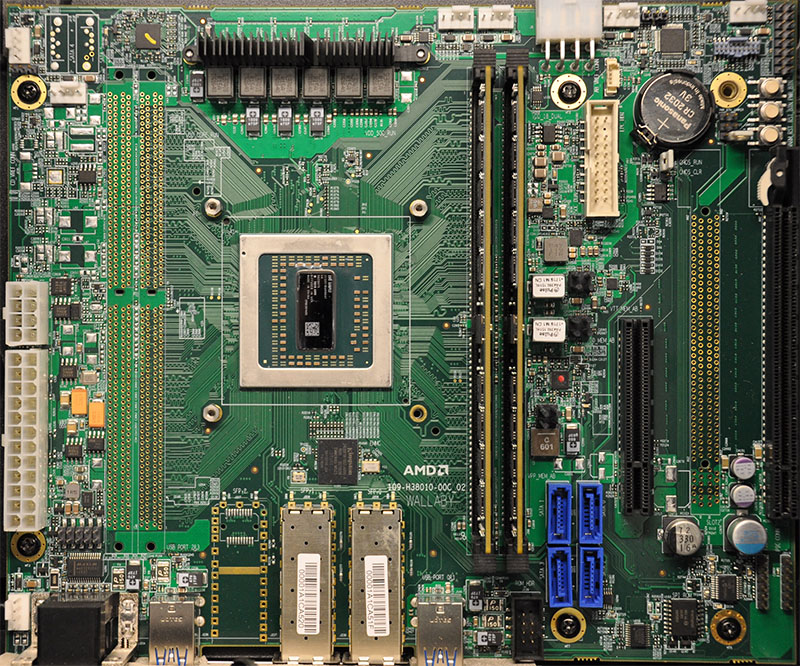

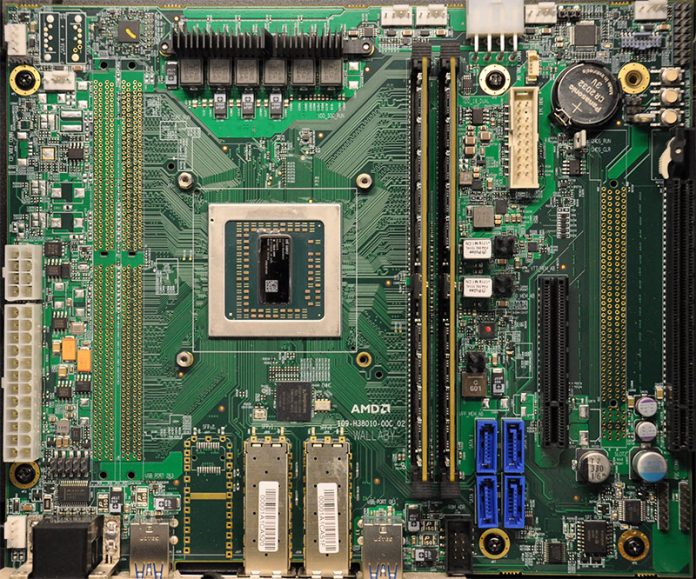

Astute STH readers will notice a difference on the 4 and 8 core parts versus the 12 and 16 core parts. The lower-end parts have half the DDR4 channels, two instead of four. There are also half the number of PCIe 3.0 lanes, 32 instead of 64. Essentially AMD has a single die on the smaller package which lowers costs. Here is the single die AMD Wallaby platform.

As you can also see, there are two DIMM slots not placed and a PCIe x16 slot not placed on this reference platform.

The larger 12 and 16 core count chips utilize a dual-die package. Here is Scott Aylor, Corporate VP and GM of AMD’s enterprise business with a high core count package at the London, England launch event.

This dual die part is built in a similar way to the AMD EPYC 7000 series, scaled down to two dies and packaged for embedded. Check out our AMD EPYC 7000 Series Architecture Overview for Non-CE or EE Majors to learn more about how AMD’s modular architecture works and how AMD scales features with each die.

AMD’s competitive positioning is focused on more cache, more PCIe 3.0, and up to 8x 10GbE. AMD also highlights its security technologies, like Secure Memory Encryption, that can be used to secure vulnerable edge devices. One example is a lone 5G LTE tower in a rural area that has a server below the antenna array. AMD’s value proposition is that these security technologies can make those devices more secure.

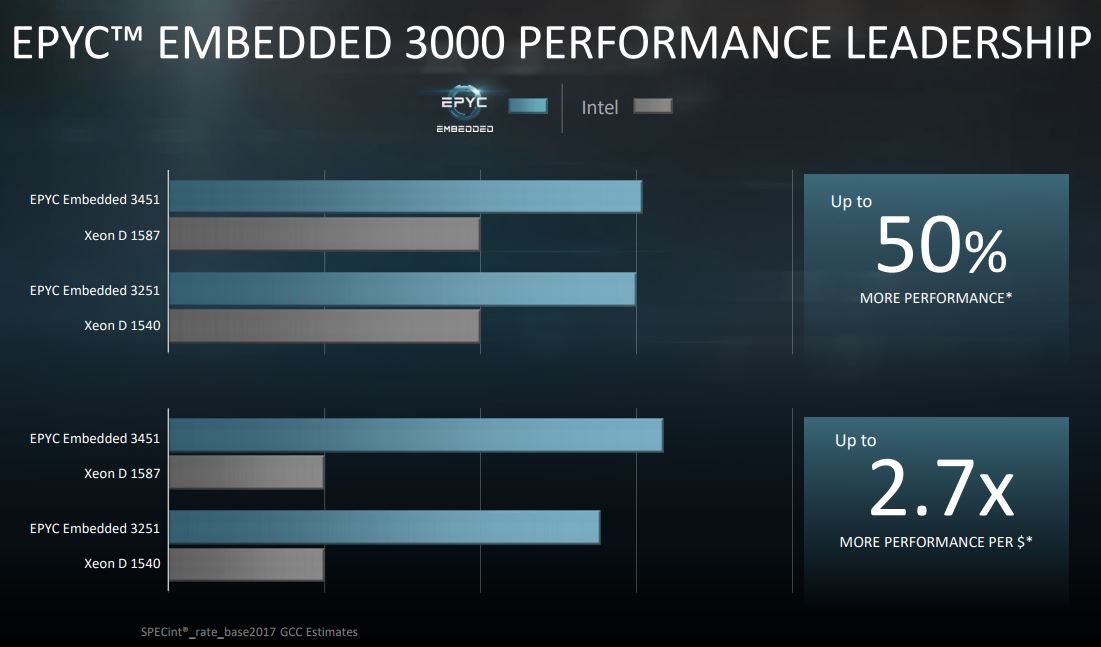

AMD used the Intel Xeon D-1587 and Xeon D-1540 as comparison points. We discussed these two chips and the evolution of the Intel Xeon D series from the launch in Q1 2015 to the Xeon D-2100 series launch a few weeks ago. You can read about that in our Exploring Intel Xeon D Evolution from Xeon D-1500 to Xeon D-2100.

While the D-1500 was focused on low power operation, the D-2100 series is focused on speed. The Intel Xeon D-1587 was known to be a memory bandwidth constrained part with only dual channel memory feeding 16 cores. That has been rectified in the D-2100 series.

This slide is the jaw-dropper, and the one that should have Intel nervous:

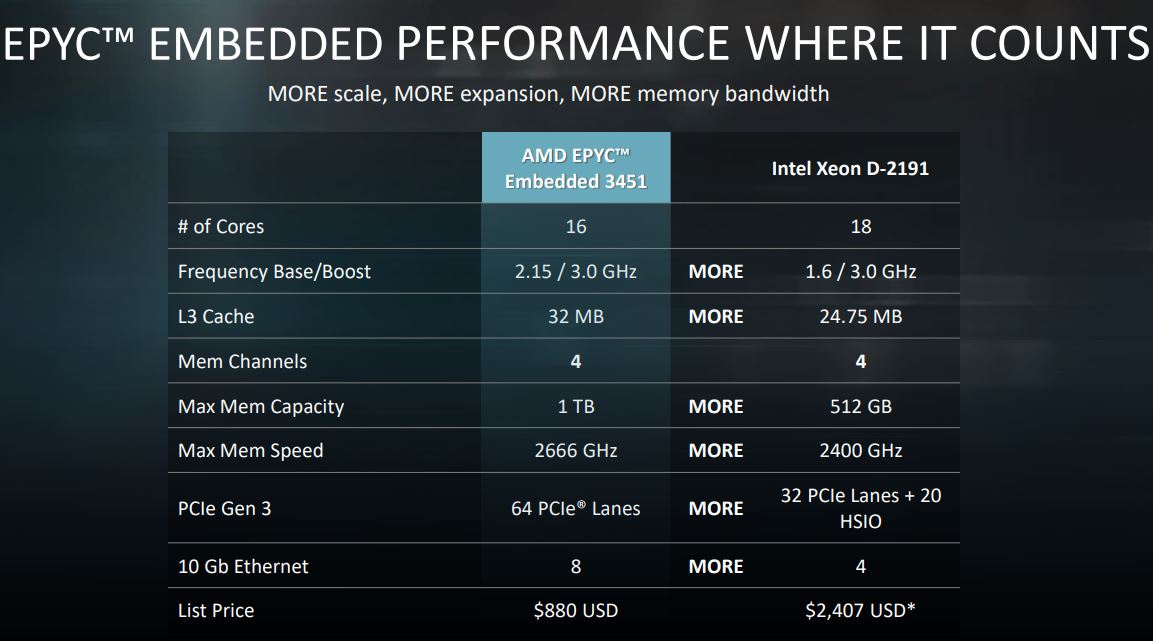

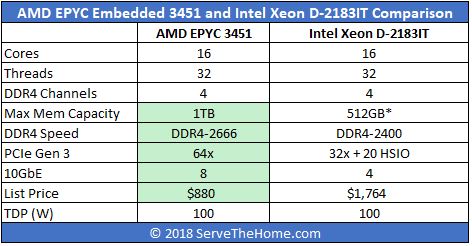

AMD made the comparison to the Xeon D-2191, which is a low production volume part. AMD is also comparing L3 cache to L3 cache, which is not apples to apples since it compares an inclusive cache to an exclusive one. If we were making this slide, we might have compared against the Intel Xeon D-2183IT we tested, a higher-volume compute part.

Let us examine that list. First, AMD has twice the memory capacity support. We put an asterisk next to the 512GB support since there is something strange on the Intel spec sheet. Normally Intel supports DIMMs of a certain size and then a number of DIMMs to reach a total capacity. We have seen 4 slot Xeon D-2100 platforms claim to support 128GB LRDIMMs, for 512GB of RAM. By the same logic, one would expect 8 slot Xeon D-2100 platforms with 128GB LRDIMMs to reach a maximum capacity of 1TB. That is strange because either this is a new method of de-tuning memory capacity for Intel (e.g. only 1DPC 128GB LRDIMM support), or it means that a Xeon D-2100 series part could actually support 1TB of RAM. Still, going by the official spec sheets, AMD has a 2x advantage and also higher memory speeds.
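To make the arithmetic behind that asterisk concrete, here is a minimal sketch. It assumes that maximum capacity is simply the number of DIMM slots multiplied by the largest supported DIMM, which ignores any per-channel limits a vendor may impose. The function name `max_capacity_gb` is ours for illustration and does not come from any vendor tool.

```python
def max_capacity_gb(dimm_slots: int, dimm_size_gb: int) -> int:
    """Naive platform memory ceiling: every slot filled with the largest DIMM.

    Assumption: no vendor-imposed limit such as one-DIMM-per-channel-only
    support for the largest LRDIMMs.
    """
    if dimm_slots < 0 or dimm_size_gb < 0:
        raise ValueError("slot count and DIMM size must be non-negative")
    return dimm_slots * dimm_size_gb


# The two Xeon D-2100 platform layouts discussed above, with 128GB LRDIMMs.
four_slot_gb = max_capacity_gb(dimm_slots=4, dimm_size_gb=128)
eight_slot_gb = max_capacity_gb(dimm_slots=8, dimm_size_gb=128)

print(f"4 slot platform: {four_slot_gb} GB")
print(f"8 slot platform: {eight_slot_gb} GB ({eight_slot_gb / 1024:.0f} TB)")
```

If the 512GB spec sheet figure holds for 8 slot platforms, the naive calculation overshoots by a factor of two, which is the discrepancy behind our asterisk.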

On the PCIe lanes, the higher-core count EPYC 3000 series clearly wins. That is important if you want NVMe storage at the edge without using an expensive and power-hungry PCIe expander. It also means that the AMD EPYC 3451 has more PCIe connectivity at a lower TDP than the Qualcomm Centriq 2400 series.
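As a rough illustration of why lane count matters for NVMe at the edge, the sketch below counts how many drives fit in a lane budget. It assumes each NVMe SSD takes a PCIe 3.0 x4 link, which is typical but not universal. The `reserved_lanes` parameter is a hypothetical stand-in for lanes a design spends on other devices. The 64 and 32 lane figures are the dual die and single die EPYC 3000 counts cited earlier.

```python
LANES_PER_NVME = 4  # assumption: each NVMe SSD uses a PCIe 3.0 x4 link


def max_nvme_drives(total_lanes: int, reserved_lanes: int = 0) -> int:
    """Number of x4 NVMe drives that can attach directly, with no PCIe switch.

    reserved_lanes is a hypothetical allowance for NICs, accelerators,
    or other devices that also need CPU lanes.
    """
    if reserved_lanes < 0 or reserved_lanes > total_lanes:
        raise ValueError("reserved_lanes must be between 0 and total_lanes")
    return (total_lanes - reserved_lanes) // LANES_PER_NVME


dual_die = max_nvme_drives(64)     # 12 and 16 core EPYC 3000 parts
single_die = max_nvme_drives(32)   # 4 and 8 core EPYC 3000 parts
dual_die_with_x16 = max_nvme_drives(64, reserved_lanes=16)

print(f"64 lanes: up to {dual_die} x4 NVMe drives")
print(f"32 lanes: up to {single_die} x4 NVMe drives")
print(f"64 lanes with an x16 slot reserved: up to {dual_die_with_x16} drives")
```

Real designs will not dedicate every lane to storage, so treat these as upper bounds rather than platform specifications.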

AMD also has up to 8x 10GbE on its dual die parts, twice as much as the new Intel Xeon D-2100 series. One may argue they prefer the Intel 700 series NIC IP over AMD’s 10GbE MAC, but the option is there for embedded platform design teams.

On the price, AMD is offering the 16 core / 32 thread model at $880, or about half of what the Intel Xeon D-2183IT carries as a list price. You can argue who has faster cores, but Intel positions the Intel Xeon D-2163IT with 12 cores / 24 threads at $930 and with only DDR4-2133 support.
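For readers who like to see the math, here is a minimal price-per-core sketch using only the two list prices quoted above. The `Sku` class and the label strings are ours for illustration. Price per core says nothing about per-core performance, so this is a rough framing rather than a benchmark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sku:
    """Illustrative container for the figures quoted in this article."""
    name: str
    cores: int
    list_price_usd: float

    @property
    def usd_per_core(self) -> float:
        return self.list_price_usd / self.cores


# Figures as quoted above; the AMD label is descriptive, not an official name.
amd_16c = Sku("AMD EPYC Embedded 3000 (16C/32T)", cores=16, list_price_usd=880)
intel_12c = Sku("Intel Xeon D-2163IT (12C/24T)", cores=12, list_price_usd=930)

for sku in (amd_16c, intel_12c):
    print(f"{sku.name}: ${sku.usd_per_core:.2f} per core")
```

On those numbers, AMD comes in at $55 per core versus $77.50 per core for the Intel part.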

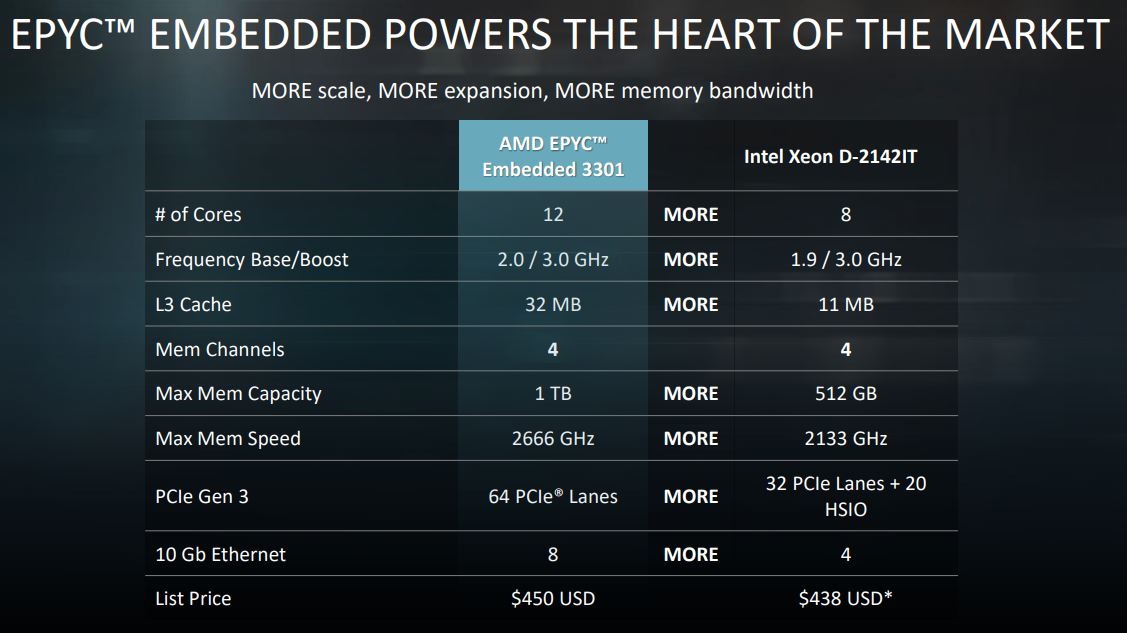

Beyond the top end, at around $430-450 AMD has a 12 core part versus 8 for Intel. Again, that L3 cache line is not comparable since they are different types of cache.

What we did not get is the pricing for the single die parts. For anything with 4 or 8 cores, Intel has a clear advantage: it has more HSIO lanes and QAT variants, NIC counts are equal, and Intel has double the RAM channels. These slides are asymmetrical warfare, but at the higher end, on price and platform resources, AMD has a clear winner.

Patrick, the L3 on SKL-X/SP/D is no longer inclusive, it’s mostly exclusive just like Zen’s.

Nanachi, I read this as L3 is non-inclusive. AMD’s is inclusive, right? That’s why STH is saying comparing L3 cache size isn’t right, because with SKL-D you’d need to include the L2 as well.

When are Supermicro and HPE gonna come out with microservers for these? Imagine an HPE ProLiant MicroServer Gen10 based on this. It’d take 80% of the SMB market overnight. 12-16 cores and 128 to 256 GB will service just about any mom’n’pop business like a judo studio or cleaners without needing the cloud, and it’d be great for SMB SPs to sell.

AMD is also exclusive.

L3$ is filled with L2$ victims.

So they are more or less comparable, L3-wise.

Patrick, do you think ASRock and Supermicro will be launching 3251 ITX boards? I suspect this processor could be exceptional for running FreeNAS (with a lot of room to move, hopefully).

Also, when will pricing come out? Frustrating to see nothing. I’d like to think it’s a sub $350 US CPU.

Will there be some out-of-band management available (like iLO or iKVM)? This feature is a must, and I can’t find any information about it.

OOB management is typically provided via an external BMC (e.g. the AST2500), so it will be up to the ODMs/OEMs to spec OOB management into their solutions.

Any idea on pricing for all models?

I don’t understand why Xeon-D went with an 18 core max and Zen has 16 cores as well. What are the use cases where these are a better fit than Xeon-E5?

In the previous gen, Xeon-D was a perfect fit for cache and reverse proxy, basically Redis, or things that need lots of memory but not so much computation power.

And why are we still stuck with 10Gbps and not moved to 25Gbps Ethernet?

Ed – I think the number of 10GbE switch ports is still high right now, and companies like Intel are making 25GbE add-in adapters.

cpu socket ??