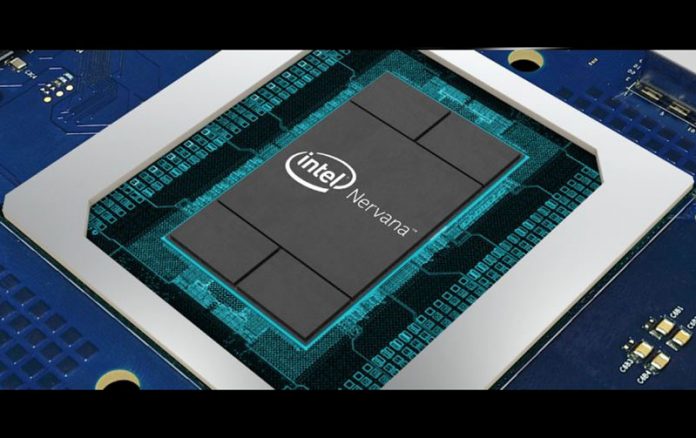

Intel has a major product portfolio hole at the moment, and they know it. NVIDIA has taken the AI/deep learning training market by storm and has essentially run away with the silicon and software market for the past few years. Intel positions its Xeon CPU line, its IoT edge silicon, and its FPGAs more as inferencing engines than as training platforms. We keep hearing “Xeons and FPGAs can train deep learning models too,” but we know that Intel is just buying time. Instead, the Intel Nervana Neural Network Processors are the company’s big bet in the deep learning space. While we have heard them referenced for some time, Intel is now saying they will ship this year.

Intel Nervana Neural Network Processors Ship to Facebook

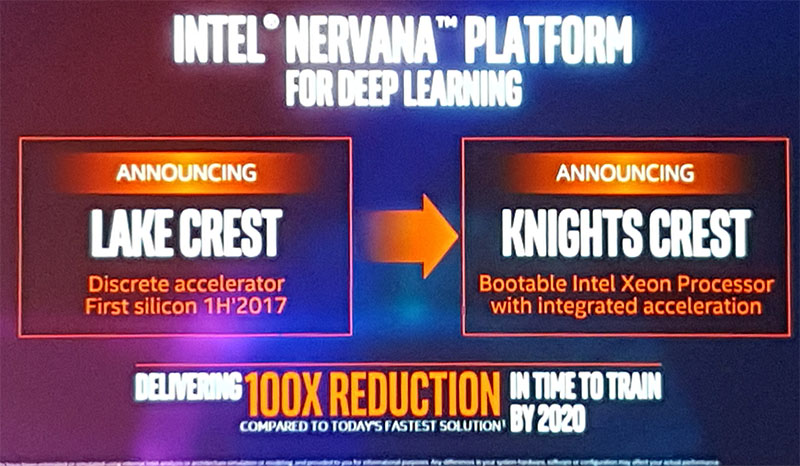

The Intel Nervana Neural Network Processor (Intel Nervana NNP), formerly known as “Lake Crest”, is the product of an acquisition and three years of R&D. We knew that Lake Crest was coming, and Intel said last year that the first silicon was slated for the first half of the year. Now, Intel is committing to shipping to customer(s) by the end of 2017.

Intel did a great job summarizing the key (publicly disclosed) features and benefits in the press release, so this is one of the few times we are going to copy/paste a large section of a press release at STH. Key to the announcement is a hardware accelerator that is optimized for memory bandwidth (something Google found to be important with the TPU) and matrix multiply (which NVIDIA is targeting in Volta V100 silicon), as well as a new numeric format (something Microsoft found useful). From what we have heard, Nervana is made to be a scale-up architecture, scaling to many Intel Nervana NNPs. In a few weeks we will be at Supercomputing (SC17), where we expect to see more details on Intel Knights Mill, which is also deep learning optimized but intended for scale-out architectures based on an existing x86 design.

Here is an excerpt from the Intel release:

New memory architecture designed for maximizing utilization of silicon computation

Matrix multiplication and convolutions are a couple of the important primitives at the heart of Deep Learning. These computations are different from general purpose workloads since the operations and data movements are largely known a priori. For this reason, the Intel Nervana NNP does not have a standard cache hierarchy and on-chip memory is managed by software directly. Better memory management enables the chip to achieve high levels of utilization of the massive amount of compute on each die. This translates to achieving faster training time for Deep Learning models.

Achieve new level of scalability AI models

Designed with high speed on- and off-chip interconnects, the Intel Nervana NNP enables massive bi-directional data transfer. A stated design goal was to achieve true model parallelism where neural network parameters are distributed across multiple chips. This makes multiple chips act as one large virtual chip that can accommodate larger models, allowing customers to capture more insight from their data.

High degree of numerical parallelism: Flexpoint

Neural network computations on a single chip are largely constrained by power and memory bandwidth. To achieve higher degrees of throughput for neural network workloads, in addition to the above memory innovations, we have invented a new numeric format called Flexpoint. Flexpoint allows scalar computations to be implemented as fixed-point multiplications and additions while allowing for large dynamic range using a shared exponent. Since each circuit is smaller, this results in a vast increase in parallelism on a die while simultaneously decreasing power per computation.

Meaningful performance

The current AI revolution is actually a computational evolution. Intel has been at the heart of advancing the limits of computation since the invention of the integrated circuit. We have early partners in industry and research who are walking with us on this journey to make the first commercially neural network processor impactful for every industry. We have a product roadmap that puts us on track to exceed the goal we set last year to achieve a 100x increase in deep learning training performance by 2020.

In designing the Intel Nervana NNP family, Intel is once again listening to the silicon for cues on how to make it best for our customers’ newest challenges. Additionally, we are thrilled to have Facebook* in close collaboration sharing their technical insights as we bring this new generation of AI hardware to market. Our hope is to open up the possibilities for a new class of AI applications that are limited only by our imaginations. (emphasis added)

[Source: Intel]
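To make the Flexpoint description in the excerpt a bit more concrete, here is a minimal sketch of the general shared-exponent idea: a block of values is stored as integer mantissas plus one exponent for the whole block, so the inner loop of a dot product is integer multiply-add only. Intel has not disclosed Flexpoint's bit widths or how the exponent is managed in the text above, so the 16-bit mantissa default and the function names (`flex_encode`, `flex_decode`, `flex_dot`) are our own assumptions for illustration, not Intel's implementation.

```python
import math


def flex_encode(values, mantissa_bits=16):
    """Encode floats as signed integer mantissas that share one exponent.

    mantissa_bits=16 is an assumed width for illustration only.
    """
    limit = 2 ** (mantissa_bits - 1) - 1  # largest signed mantissa
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return [0] * len(values), 0
    # Smallest exponent for which the largest value still fits in the mantissa.
    exp = math.ceil(math.log2(max_abs / limit))
    scale = 2.0 ** exp
    # Round to the nearest integer and clamp as a guard against edge cases.
    mantissas = [max(-limit, min(limit, round(v / scale))) for v in values]
    return mantissas, exp


def flex_decode(mantissas, exp):
    """Recover approximate floats from mantissas and the shared exponent."""
    return [m * 2.0 ** exp for m in mantissas]


def flex_dot(a, a_exp, b, b_exp):
    """Dot product of two encoded blocks.

    The accumulation is pure integer arithmetic; the two shared
    exponents are applied once at the very end.
    """
    acc = sum(x * y for x, y in zip(a, b))
    return acc * 2.0 ** (a_exp + b_exp)


if __name__ == "__main__":
    a, a_exp = flex_encode([0.5, -0.25, 0.125])
    b, b_exp = flex_encode([1.0, 2.0, 4.0])
    print(a, a_exp)
    print(flex_dot(a, a_exp, b, b_exp))
```

The trade-off is visible in `flex_encode`: the largest value in the block sets the exponent, so much smaller values in the same block lose precision. In exchange, the per-element hardware only needs integer multipliers and adders, which is the "smaller circuit" argument made in the excerpt.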

Blablabla… we are losing everywhere, blablabla…

“My CPU is a neural net processor: a learning computer….”.

-The Terminator

I can’t wait to try a deep learning benchmark with Intel :)