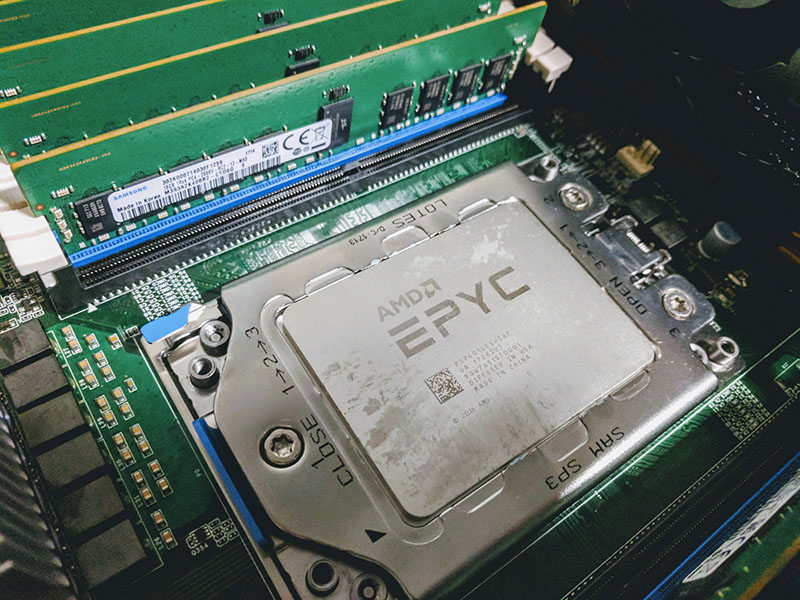

Today we have something we have been waiting some time to share: a special review of the 24-core AMD EPYC 7401P in a single-socket configuration. The AMD EPYC 7401P offers value that is largely unprecedented in the server CPU space: pricing under $45 per core and $23 per thread on a high core count part. Beyond that, the CPU delivers 24 cores and 48 threads in a single socket at a price point under $1100, or about what Intel will sell you a 12-core Skylake-SP part for.
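To make the per-core and per-thread figures concrete, here is a minimal sketch of the arithmetic. The function names are hypothetical and the only inputs are the numbers quoted above (24 cores, 48 threads, the $45 and $23 caps, and the $1100 upper bound); no exact list price is assumed.

```python
def price_per_unit(price_usd: float, cores: int, threads_per_core: int = 2):
    """Return (price per core, price per thread) for a given CPU price."""
    threads = cores * threads_per_core
    return price_usd / cores, price_usd / threads


def max_price_for_claim(cores: int, threads: int,
                        per_core_cap: float, per_thread_cap: float) -> float:
    """Highest price that still satisfies both the per-core and per-thread caps."""
    return min(per_core_cap * cores, per_thread_cap * threads)


CORES = 24
THREADS = 48

# Price ceiling implied by "under $45 per core and $23 per thread".
ceiling = max_price_for_claim(CORES, THREADS, per_core_cap=45.0, per_thread_cap=23.0)

# What the per-unit cost would be at the article's $1100 upper bound.
per_core_at_1100, per_thread_at_1100 = price_per_unit(1100.0, CORES)

print(f"Implied price ceiling: ${ceiling:.0f}")
print(f"At $1100: ${per_core_at_1100:.2f}/core, ${per_thread_at_1100:.2f}/thread")
```

The per-core cap is the tighter of the two constraints, so the "under $45 per core" figure implies a price somewhat below the rounded $1100 mark.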

Let us be clear: if you are putting an EPYC 7401 series CPU in a single-socket-only server, get the AMD EPYC 7401P, not the 7401. AMD has extraordinarily aggressive pricing on the EPYC 7401P. If you are buying a dual-socket server with a single CPU and plan to add a second CPU later, then the EPYC 7401 may make more sense.

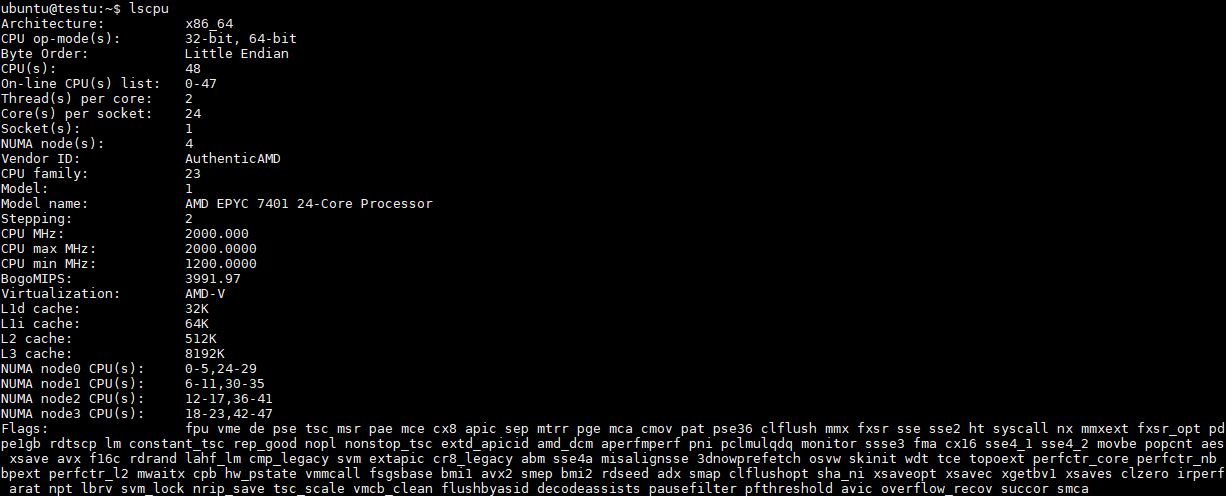

Key stats for the AMD EPYC 7401P (and EPYC 7401): 24 cores / 48 threads, 2.0GHz base and 3.0GHz turbo with a whopping 64MB L3 cache. The CPU features a 170W TDP. Here is the AMD product page with the feature set. Here is the lscpu output for the processor:

As part of our work in the sub-$1100 CPU space, we have now benchmarked every Intel Xeon Silver and Bronze CPU, and all dual-socket Intel combinations under $2000. We have also completed work with every AMD EPYC performance variant from the EPYC 7251 to the EPYC 7601. This review is part of a massive project to deliver a complete set of results for STH readers, our data subscribers, and the companies we are advising. During this review, we will be discussing comparisons between different options. These discussions are informed by running 6kW of systems constantly for months across a variety of workloads, including many we do not publish on STH.

Test Configuration

We are using the AMD EPYC 7401 for our benchmark numbers today. We validated with AMD the assumption that the EPYC 7401P and EPYC 7401 should be identical, or nearly identical, in terms of performance. We were told that the AMD EPYC 7401P may be negligibly faster because its dual-socket circuitry is disabled, leaving ever so slightly more power available for the rest of the package. We are going to label the results in our charts EPYC 7401P to make it clear that they are single-socket results.

We now have every AMD EPYC SKU tested on a common Tyan EPYC platform, and work has started on another platform. Here is the base hardware configuration we are using:

- CPU: AMD EPYC 7401

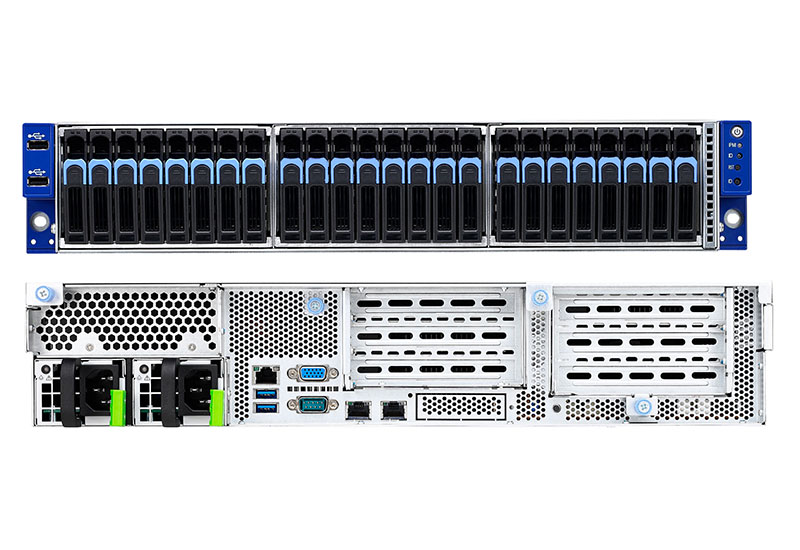

- Server Barebones: Tyan Transport SX TN70A-B8026 (B8026T70AE24HR)

- RAM: 8x 16GB (128GB total) DDR4-2666 RDIMMs (Samsung)

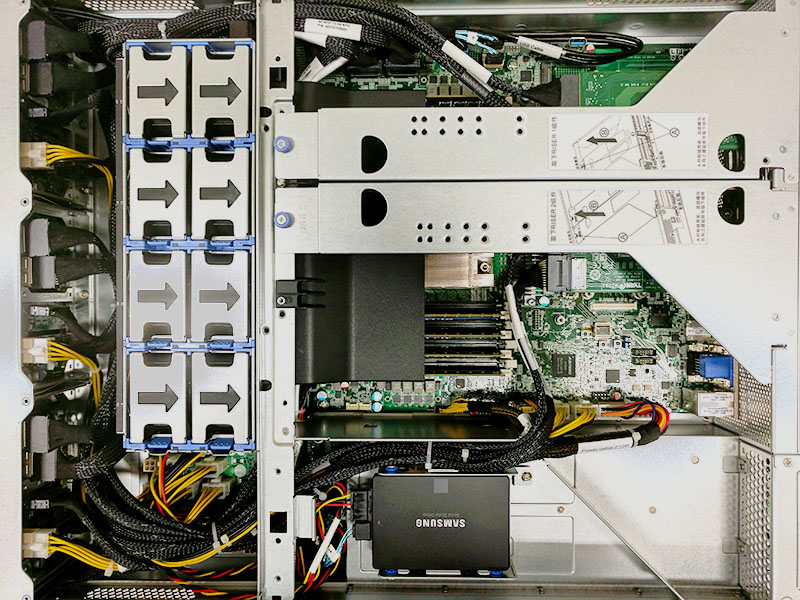

- SSD: 1x Intel DC S3710 400GB SATA SSD

- NIC: 1x Mellanox ConnectX-3 Pro EN VPI

Key to this system is that it supports 24x U.2 NVMe SSDs without using Broadcom PLX PCIe expanders. That is 96 lanes of PCIe 3.0 (24 drives at four lanes each) directly from a single CPU. One of the key advantages AMD EPYC has is that a single EPYC CPU can use 128 PCIe lanes, the same number as the dual-socket configuration. Tyan has responded to this opportunity by offering a single-socket system that can handle 24x NVMe drives and still have I/O available for 10/25/40/50/100GbE.
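The lane math behind that claim can be sketched as follows. This is only an illustrative budget, assuming each U.2 NVMe drive uses a PCIe x4 link; the function and constant names are hypothetical, and a real board will reserve some of the remaining lanes for onboard devices.

```python
EPYC_SINGLE_SOCKET_LANES = 128  # PCIe lanes available from one EPYC CPU (per the article)
LANES_PER_U2_NVME = 4           # assumption: each U.2 NVMe SSD uses a x4 link


def lane_budget(total_lanes: int, drives: int,
                lanes_per_drive: int = LANES_PER_U2_NVME):
    """Return (lanes used by storage, lanes left over) for direct-attached drives."""
    used = drives * lanes_per_drive
    if used > total_lanes:
        raise ValueError(
            f"{drives} drives need {used} lanes, only {total_lanes} available"
        )
    return used, total_lanes - used


storage_lanes, spare_lanes = lane_budget(EPYC_SINGLE_SOCKET_LANES, drives=24)

print(f"Storage lanes: {storage_lanes}")  # 24 drives * 4 lanes
print(f"Spare lanes:   {spare_lanes}")    # left for NICs and other I/O
```

Under these assumptions the 24 drives consume 96 lanes and leave 32, which is why the platform can still offer high-speed networking without a PCIe switch in front of the drives.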

AMD and Tyan originally suggested that we use a Samsung SSD (as pictured), however, to aid in consistency, we are using our lab standard Intel DC S3710 400GB SSDs.

In our forthcoming system review, we will have data on every CPU from the AMD EPYC 7251 to the EPYC 7601 for those looking at different options. We are going to try to keep our comparisons as relevant as possible from a price/performance standpoint, but we will also bring in additional data points as needed.

Patrick,

It seems numbers for Dual Silver 4116 are not present for all benchmarks, any reason for that?

Great review. I don’t see how Intel can ignore this, but there will probably have to be a hit to the OEM channel before they act, and product lines change slowly.

FYI, the Linux kernel compile chart has the Xeon Gold 6138 twice with two different results

Thanks for the review. I think this processor will be used for the next video editing workstation I am going to build. With this workstation I will follow the GROMACS advice and use GPU power to do most of the heavy lifting.

It’s good to see that the GROMACS website advises using GPUs to speed up the process. GPUs are relatively cheap and fast for these kinds of calculations. Blackmagic Design DaVinci Resolve follows the same philosophy: use GPUs where possible. AMD EPYC has enough PCIe lanes for the maximum of 4 GPUs supported in Windows (Linux supports up to 8 GPUs in 1 system).

Micha Engel, where do you get 4? The single socket Epyc has 128 PCIe lanes.

@Bill Broadley

DaVinci Resolve supports up to 4 GPUs under Windows (4x 16 PCIe lanes). I will use 8 PCIe lanes for the DeckLink card (10-bit color grading) and 2x 8 PCIe lanes for 2 HighPoint SSD7101A in RAID 0 for realtime rendering/editing etc. (14GB/s sustained read and 12GB/s sustained write; the 960 Pro SSDs will be 25% overprovisioned).

@BinkyTO – The Xeon Silver 4116 dual socket system still has a few days left of runs. Included what we had.

@TR – Fixed. The filter was off.

We need 4 nodes in 2U or 8 nodes in 4U of this. We can use them for web hosting.

OpenStack work on these?

I still want to test it in our environment before buying racks of them. This is still helpful. Maybe we’ll buy a few to try

@Girish

Have a look at the supermicro website (4 node in 2U).

Maybe 1 node in 1 U is already fast enough.

I see no reason why AMD EPYC wouldn’t work with OpenStack, AMD is one of the many companies supporting the OpenStack organizations (and they have the hardware to back it up).

Does anyone know when these will be in stock? I’ve tried ordering from several companies, including some that listed them as in stock, but they appear to be backordered.

Andrew – We were able to order recently through a server vendor.

Do you have any information on thermals? Our EPYC 7401s run 24/7 rendering and seem to be throttling down to 2.2GHz instead of staying at the 2.8GHz boost. Any thoughts on that? Thermals are reading a package temp of 65C.