Ever since the Intel Xeon Scalable Processor family launched, we have been fielding questions asking for comparisons to AMD EPYC. This week we posted some initial results showing the differences between DDR4-2400 and DDR4-2666 on AMD Infinity Fabric. Expect a larger set of benchmarks over the next few days. We feel the Supermicro test bed we are using is close to shipping spec.

In the market today, there is a ton of fear, uncertainty, and doubt (aka FUD) that needs to be addressed. Furthermore, many commentators who have neither hands-on experience with the platforms nor much architectural understanding are already crowning a winner. We have had test systems for some time, so we wanted to share a few myths we consistently hear and get questions about. While we do not do a ton of these pieces, we also do not have the bandwidth to respond to individual requests, so this format is easier on the STH team.

Myth 1: AMD EPYC Was Available Before Intel Xeon Scalable

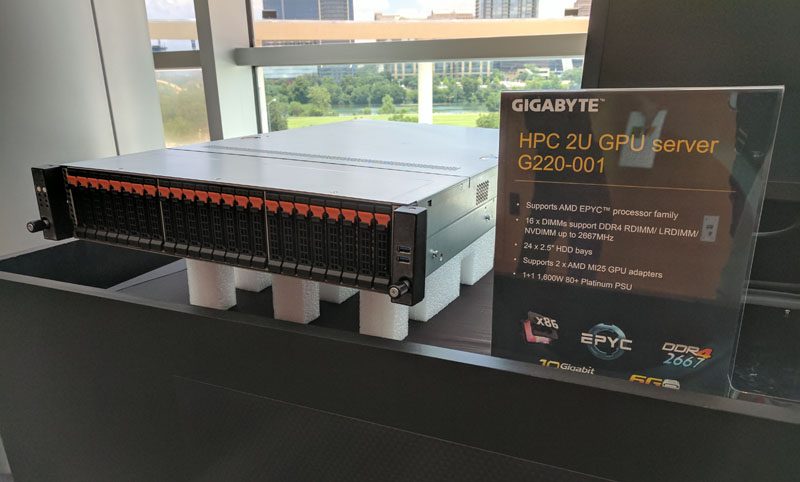

In terms of public launch events, we were at the AMD EPYC launch on June 20, 2017 in Austin, TX. We wrote about how pleasantly surprised we were to see the flourishing AMD EPYC ecosystem. It is clear that the industry is behind AMD EPYC. Before we proceed, we do think AMD EPYC will capture noticeable market share in this generation.

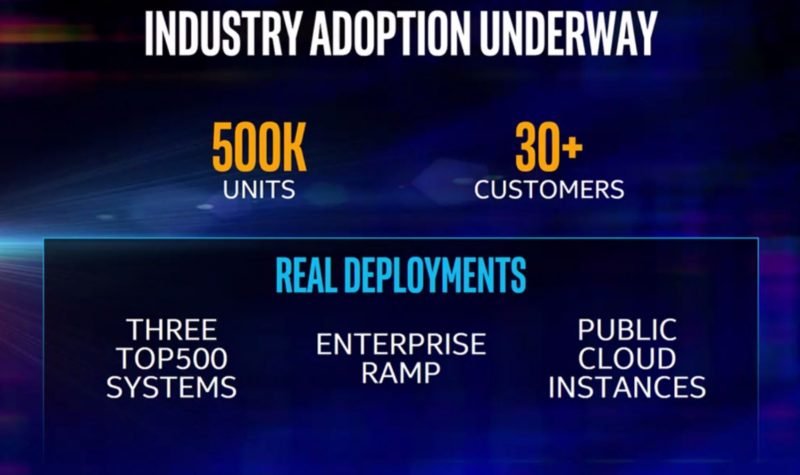

Launches are important. CPUs generally have only five or six quarters on the market before new generations arrive, so launch timing often sets the clock for when we can expect the next generation. While AMD “launched” EPYC on June 20, 2017, commercially available systems are not expected until August 2017. Conversely, Intel “launched” Xeon Scalable on July 11, 2017, but in reality it had already shipped significant volume (around 500K units) before that date.

While that may not be a full quarter worth of shipments, it is enough to see public cloud deployments, TOP500 supercomputers, and other organizations not just buy but test and deploy systems well before launch. Essentially, Intel’s launch was a product that had been shipping and deployed for months while AMD EPYC was something that would be shipping a half quarter after launch.

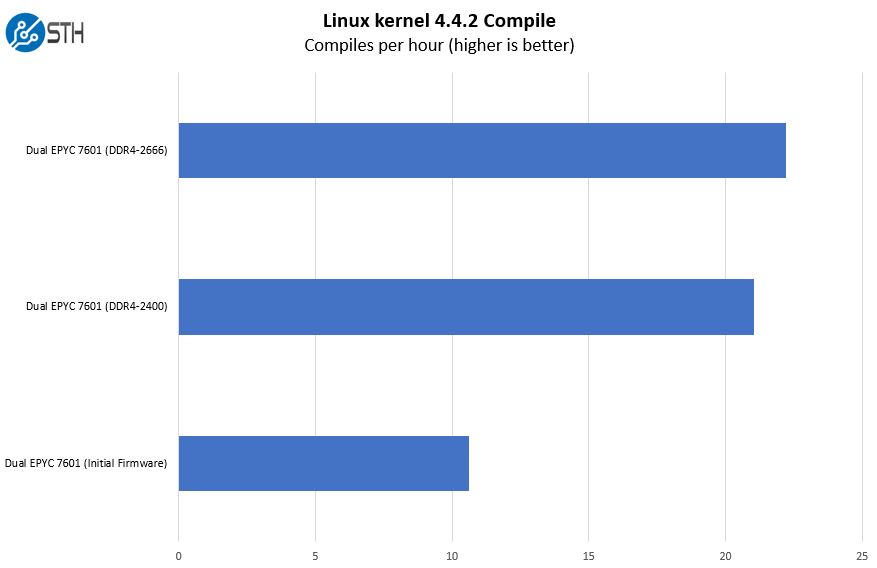

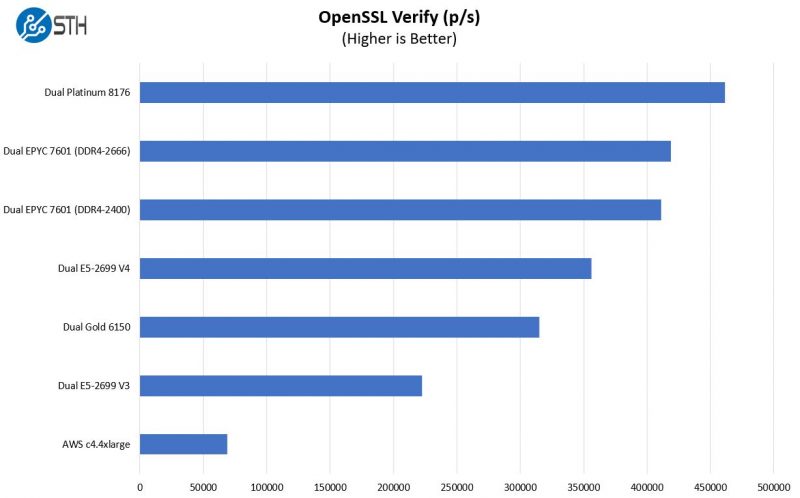

I received quite a few comments asking why we have not yet published a full suite of competitive benchmarks for AMD EPYC, given that we have had a test system since before the June 20th launch. One of the biggest reasons is that we have watched AMD performance improve substantially. When we publish numbers, readers see them for months and years. We were the first major publication with a test system, but we deemed publishing results from crippled, pre-production AMD AGESA firmware and DDR4-2400 to be irresponsible. Here is one glimpse of the performance gains we have seen moving from woefully pre-production firmware to production firmware, and then to production firmware with DDR4-2666.

Those gains are enormous. Our test suite takes many days to run, but it now appears that we have a commercial system (i.e., one you can buy, not an AMD test rig) with usable commercial firmware.

To be clear, we think the first Supermicro firmware we would call OK to ship arrived on July 18. That came after a motherboard upgrade (to shipping spec), multiple firmware upgrades, and an upgrade to DDR4-2666 RAM.

AMD EPYC is getting close to where we think the platform is manageable. However, as of July 24, we had contacted our Dell EMC, HPE, Lenovo, and Supermicro reps and resellers with cash in hand, and nobody would sell us an EPYC system. Further, some of the early vendors have told us to expect the initial systems to be available in early August 2017.

With its July 24, 2017 earnings announcement, AMD reported that revenue for its Enterprise, Embedded and Semi-Custom segment was down slightly compared to the same quarter a year ago. Once AMD EPYC starts shipping, we expect this to change drastically because EPYC will sell.

The bottom line is that AMD EPYC is not something we can buy off the shelf today. By contrast, we bought an Intel Xeon Silver dual socket machine last week, and we have also bought a number of Intel Xeon Scalable CPUs at retail for testing.

It seems that AMD EPYC will ship some time after Intel Xeon Scalable. Our sense is that through July 2017, there is no real Intel Xeon Scalable v. AMD EPYC contest, because AMD EPYC is not shipping in commercial systems outside of, potentially, a very limited early ship program. If AMD’s early ship program were comparable to Intel’s 500K units, AMD would likely have at least doubled its enterprise revenue for the last quarter.

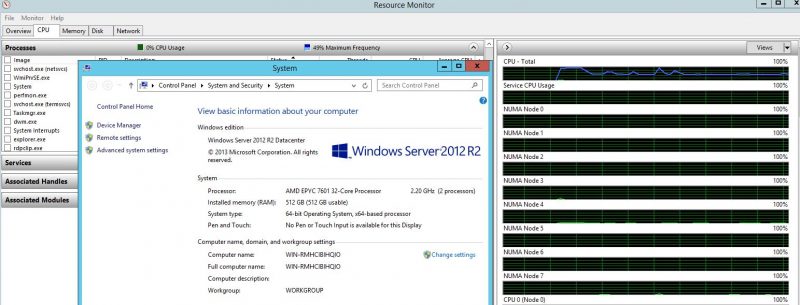

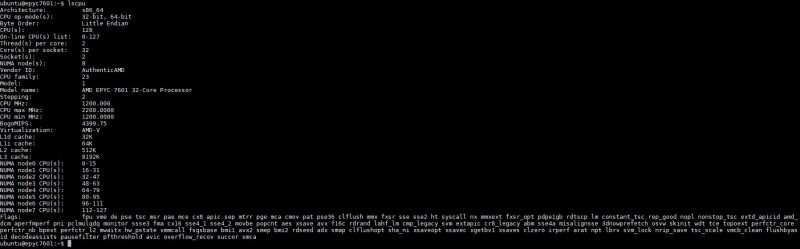

Myth 2: An AMD EPYC Chip is a Single NUMA Node

We have seen this one even from prominent industry analysts. Here is what a dual socket AMD EPYC looks like in Windows and Linux (Ubuntu):

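NUMA is not a new concept. Each AMD EPYC package contains four dies, each with its own memory controllers, so each die is its own NUMA node and a dual socket system reports eight nodes by default. In our initial latency testing, we see a significant difference between on-die, on-package, and cross-socket Infinity Fabric latencies. As a result, you want software and OSes to be aware of this topology.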

While we may be moving to a world of multi-chip modules, we need accurate architectural reporting to the software layers.
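On Linux, the kernel reports NUMA topology under `/sys/devices/system/node`, where each `nodeN/cpulist` file lists that node's logical CPUs in a range format such as `0-7,64-71`. The sketch below is one minimal way to count nodes and CPUs per node; `parse_cpulist` and `read_numa_topology` are hypothetical helper names, and on a dual socket EPYC we would expect eight nodes rather than two, though the exact CPU numbering varies with kernel and BIOS settings.

```python
import glob
import os
import re


def parse_cpulist(text: str) -> list[int]:
    """Expand a Linux cpulist string such as "0-7,64-71" into CPU ids."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty node has an empty cpulist
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return cpus


def read_numa_topology(root: str = "/sys/devices/system/node") -> dict[int, list[int]]:
    """Map each NUMA node id to the logical CPUs the kernel reports for it."""
    topology: dict[int, list[int]] = {}
    # Only directories named node0, node1, ... describe NUMA nodes.
    for path in glob.glob(os.path.join(root, "node[0-9]*", "cpulist")):
        node_dir = os.path.basename(os.path.dirname(path))
        node_id = int(re.sub(r"\D", "", node_dir))
        with open(path) as f:
            topology[node_id] = parse_cpulist(f.read())
    return dict(sorted(topology.items()))


if __name__ == "__main__":
    for node, cpus in read_numa_topology().items():
        print(f"node{node}: {len(cpus)} logical CPUs")
```

Without writing any code, `numactl --hardware` and `lscpu` report the same information. On Windows, processor groups hold at most 64 logical processors each, so a 128-thread system needs at least two groups; the group count is a separate thing from the NUMA node count.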

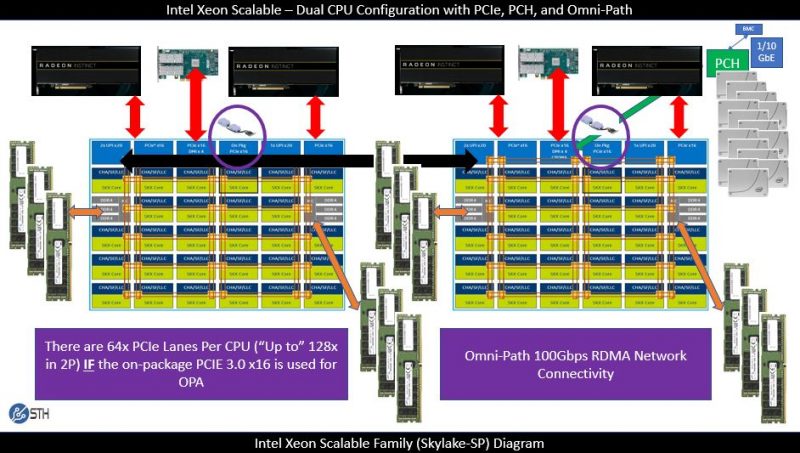

Myth 3: AMD has more PCIe lanes than Intel Xeon Scalable in Dual Socket

AMD v. Intel in the single socket arena is no contest: AMD has more lanes, hands down, thanks to its innovative architecture. It is very strange that Intel has not been interested in ramping up PCIe lanes and in recent years has been reducing them in some key product lines. For example, the Intel Xeon E7 chips had only 32x PCIe 3.0 lanes while the E5 lines had 40x lanes. The actual PCIe controllers on the Broadwell-EX die could support extra lanes; Intel made a product definition decision to limit its previous-generation CPUs to 32 instead of 40 lanes. As a result, four socket Xeon E5 V4 systems had more PCIe lanes than four socket Xeon E7 V4 systems. We have no idea why there are so few lanes on the Intel Xeon Scalable processor family. Our speculation is that Intel set its LGA3647 socket details before NVMe became a major push.

Looking at the spec sheets, you may assume that a dual socket configuration has 128 lanes for AMD EPYC and 96 for Intel. That is not entirely true. Intel actually has 64x PCIe 3.0 lanes per CPU on the XCC die (48 standard lanes plus an on-package x16 that can be used for Omni-Path); they are just not exposed in most use cases.

On the AMD side, there are 128 lanes, but some are lost to the functions needed for a usable server platform, such as SATA. Most vendor implementations we have seen expose 96-112 PCIe 3.0 lanes to add-in devices.

On balance, we do (strongly) prefer AMD’s implementation with EPYC. For example, system vendors can re-allocate SATA lanes to PCIe through a BIOS change. However, any claim that Intel has a huge effective PCIe lane deficiency in dual socket configurations should always carry a disclaimer. If you want to read more, see our AMD EPYC and Intel Xeon Scalable Architecture Ultimate Deep Dive piece.

When it comes to single socket configurations, AMD has the advantage hands down, which is why we see single socket AMD platforms being touted by major vendors such as HPE.
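The lane arithmetic above can be made explicit. This minimal sketch uses the figures discussed here (64 lanes per Xeon Scalable XCC die, with an on-package x16 not routed to slots in most systems, and 128 lanes per EPYC package), plus the fact that a 2P EPYC system repurposes 64 lanes per socket for socket-to-socket Infinity Fabric. `CpuLanes` and `exposed_lanes` are hypothetical names, and board-level reservations such as SATA are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuLanes:
    """Per-CPU PCIe 3.0 lane budget (illustrative model, not a vendor spec)."""
    name: str
    in_silicon: int       # lanes physically present per CPU
    not_exposed: int      # lanes present but not routed to slots in most systems
    socket_link_2p: int   # lanes repurposed for CPU-to-CPU links in a 2P system


def exposed_lanes(cpu: CpuLanes, sockets: int) -> int:
    """Lanes a platform could route to devices, before board-level reservations."""
    if sockets not in (1, 2):
        raise ValueError("this sketch only models 1P and 2P systems")
    link = cpu.socket_link_2p if sockets == 2 else 0
    return sockets * (cpu.in_silicon - cpu.not_exposed - link)


# Xeon Scalable XCC: 48 standard lanes + on-package x16 (e.g. Omni-Path).
# UPI links are separate from PCIe, so no PCIe lanes go to socket links.
XEON_XCC = CpuLanes("Xeon Scalable XCC", in_silicon=64, not_exposed=16, socket_link_2p=0)
# EPYC 7000: 128 lanes per package; in 2P, 64 per socket carry Infinity Fabric.
EPYC = CpuLanes("EPYC 7000", in_silicon=128, not_exposed=0, socket_link_2p=64)

if __name__ == "__main__":
    for cpu in (XEON_XCC, EPYC):
        for sockets in (1, 2):
            print(f"{cpu.name} {sockets}P: {exposed_lanes(cpu, sockets)} lanes")
```

This reproduces the spec-sheet view (96 for Intel 2P, 128 for EPYC 1P and 2P) while keeping Intel's 128 lanes in silicon visible. The board-level reservations it omits are why vendors expose 96-112 of EPYC's 128 lanes, i.e. roughly 16-32 lanes go to platform functions.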

PCIe is Not Always the PCIe You Expect

On the subject of PCIe lanes, when is a PCIe lane not a PCIe lane? That may sound like a trick question, but it is far from trivial. Serious analysts and journalists need to know the difference because it matters a great deal to many companies that may be evaluating the AMD EPYC platform.

At the AMD EPYC launch event, we saw an HPE system that fit 24x NVMe devices into a 1U form factor. That is absolutely cool!

When we inspected the server internals, something about the design struck us: the U.2 drives were not hot swap.

We wondered why this might be and asked the HPE representatives presenting the system. At the event, HPE told us that hyper-scale customers use a fail-in-place model and do not hot swap drives. HPE also noted that SSDs do not fail that often anyway.

That still seemed like an answer that could be improved upon, and it turns out there is a fairly significant feature missing from AMD EPYC platforms at present. Five different server vendors have confirmed this to us, and it seems to be a known challenge that server vendors are working around.

At present, AMD EPYC servers do not support industry standard hot plug NVMe

U.2 NVMe SSDs are popular because they have the ability to hot swap much like traditional SAS drives. For data center customers, this means that failed drives can be replaced in the field using hot swap trays, even by unskilled data center remote hands staff without taking systems down.

If one thinks back to the early days of U.2 NVMe SSDs on Intel and ARM platforms, not every server vendor supported NVMe hot swap. It seems that AMD and its system partners are going through the same support cycle with EPYC. We hear that workarounds are coming, but they are still a few months to quarters away.

While PCIe lanes may be PCIe lanes, the inability to hot plug an NVMe SSD on an AMD EPYC platform may be a non-starter for enterprise software defined storage vendors until this functionality comes to EPYC.

We have little doubt that there will be some sort of fix for this generation of AMD EPYC. Five different vendors have confirmed to us that they are aware of the issue and are working on workarounds. Until that fix is out, the “holy grail” single socket AMD EPYC system that directly connects 24x NVMe drives will remain unsuitable for many enterprise customers.

Edit 2017-07-31: AMD asked us to reiterate that EPYC supports hot plug NVMe. The discussion above reflects that some OEMs may decide not to support the feature, while other OEMs are still working on enabling it on their platforms. As of this update, we have not heard of a shipping EPYC system that supports hot plug NVMe.
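Because hot plug depends on hardware, firmware, and OS support together, one rough way to see what Linux thinks of a given box is to inspect `/sys/bus/pci/slots`. This sketch assumes, as a heuristic rather than a guarantee, that slots managed by a PCIe hotplug driver such as `pciehp` expose a `power` attribute there, while slots without one are not hotplug-managed. `hotplug_slots` is a hypothetical helper name.

```python
import os


def hotplug_slots(root: str = "/sys/bus/pci/slots") -> dict[str, str]:
    """Return {slot_name: pci_address} for slots that look hotplug-managed.

    Heuristic (an assumption): a "power" attribute in the slot directory
    indicates that a hotplug driver controls the slot.
    """
    slots: dict[str, str] = {}
    if not os.path.isdir(root):
        return slots
    for name in sorted(os.listdir(root)):
        slot_dir = os.path.join(root, name)
        if not os.path.isfile(os.path.join(slot_dir, "power")):
            continue  # slot is listed but not hotplug-managed
        address = ""
        address_file = os.path.join(slot_dir, "address")
        if os.path.isfile(address_file):
            with open(address_file) as f:
                address = f.read().strip()
        slots[name] = address
    return slots


if __name__ == "__main__":
    found = hotplug_slots()
    if not found:
        print("No hotplug-managed PCIe slots reported")
    for name, address in found.items():
        print(f"slot {name}: {address or 'unknown address'}")
```

An empty result would be consistent with firmware not advertising hot plug slots, but it does not prove that; the vendor's platform documentation is the authority.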

Myth 4: AMD EPYC is a Bad Server Platform

Just as we see folks spreading misinformation about the Intel Xeon Scalable platform, we see it about AMD EPYC too, and the EPYC platform is surprisingly good. As noted under Myth 1, we have been trying to purchase AMD EPYC systems: after testing one, we are looking to add AMD EPYC to our hosting infrastructure.

There are applications where AMD EPYC will be very good. For example, EPYC can be great in storage servers (especially once NVMe hot plug is available). Furthermore, for a web hosting tier or as a VPN server, AMD EPYC is likely to be an excellent solution. Here is an example of the preliminary results we are seeing in one of AMD EPYC’s better workloads:

There is certainly a ton of merit to the platform even though it is decidedly less mature.

We do see the single socket platforms as potential standouts, especially for containerized workloads. Docker and AMD EPYC have been working very well together in our initial testing: we have seen around 0.5% variance between Dockerized and bare metal benchmark results, which is within normal test variation for these workloads.
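A gap like the ~0.5% above only means something relative to run-to-run noise. One simple way to check, shown here as an assumption rather than STH's actual methodology, is to compare the relative difference of the means against the coefficient of variation of repeated bare metal runs. `compare_runs` and the sample scores are hypothetical.

```python
import statistics


def compare_runs(bare_metal: list[float], container: list[float]) -> tuple[float, float, bool]:
    """Compare container results against bare metal runs of the same benchmark.

    Returns (relative gap between the means, run-to-run coefficient of
    variation on bare metal, whether the gap is within that noise).
    """
    if len(bare_metal) < 2 or not container:
        raise ValueError("need at least two bare metal runs and one container run")
    bare_mean = statistics.mean(bare_metal)
    gap = abs(statistics.mean(container) - bare_mean) / bare_mean
    noise = statistics.stdev(bare_metal) / bare_mean  # sample standard deviation
    return gap, noise, gap <= noise


if __name__ == "__main__":
    # Hypothetical scores (higher is better), not STH results.
    bare = [100.0, 100.6, 99.4]
    docker = [99.5, 99.9, 99.7]
    gap, noise, ok = compare_runs(bare, docker)
    print(f"gap {gap:.2%}, bare metal noise {noise:.2%}, within noise: {ok}")
```

With only a handful of runs this is a coarse check; a proper significance test needs more samples per configuration.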

Final Words

AMD EPYC is a great product, but AMD is fighting some uphill battles. While we expect to be able to purchase AMD systems in August, the market is deploying Xeon Scalable today. A vendor told us this week that two of our Skylake-SP review systems were being delayed because “we are still trying to meet customer demand for Skylake orders and cannot manufacture systems fast enough.”

At the same time, we have watched AMD EPYC go from a product with a lot of rough edges in June to a product in late July that we gave our stamp of approval to and are willing to deploy in our production cluster. Current challenges like NVMe hot plug will get fixed because there will be customer demand for the feature, and we know several vendors are working on it.

Looking forward to December 2017 and into 2018, we think many of these early shipping challenges will be ironed out, and we will see more enterprises start buying test systems or clusters. For enterprises that want an alternative architecture, adding EPYC is a 2-3 on a 10-point difficulty scale, while adding ARM or POWER can be a 7-10.

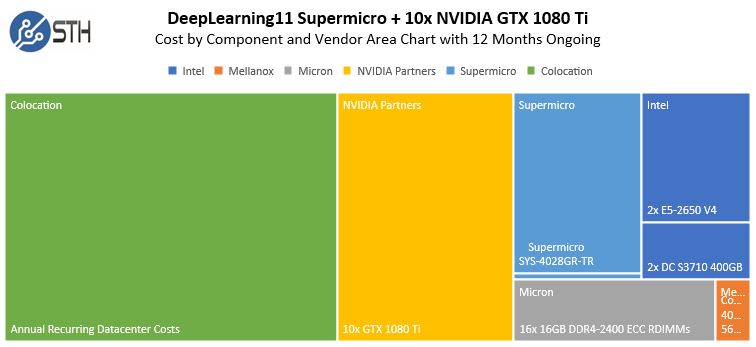

Finally, we have heard requests to add more TCO analysis like we have done with our GPU compute DeepLearning10 and DeepLearning11 reference builds and with the Intel Xeon E3-1200 V6 series. We will be heeding that request once pricing is finalized. In the enterprise server space, CPU costs are usually a relatively minor component. Here is an example from DeepLearning11 using a 12-month cost for a server with relatively little RAM and storage that uses completely open source software and management tools. Over 3-5 years, especially with per-core licensed software, CPU costs can be negligible.
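To illustrate why the CPU shrinks as a share of TCO over longer terms and with per-core licensing, here is a minimal sketch. Every input (CPU price, other hardware, a monthly operating cost standing in for items like power and colocation, and the license rate) is a made-up placeholder, not STH pricing data, and `cpu_share_of_tco` is a hypothetical helper.

```python
def cpu_share_of_tco(
    cpu_cost: float,
    other_hardware_cost: float,
    monthly_opex: float,
    months: int,
    license_per_core_year: float = 0.0,
    cores: int = 0,
) -> float:
    """Fraction of total cost of ownership attributable to the CPUs."""
    license_cost = license_per_core_year * cores * months / 12
    total = cpu_cost + other_hardware_cost + monthly_opex * months + license_cost
    return cpu_cost / total


if __name__ == "__main__":
    # All figures below are hypothetical placeholders, not STH pricing data.
    scenarios = {
        "12 months, open source stack": cpu_share_of_tco(2000, 3000, 200, 12),
        "36 months, open source stack": cpu_share_of_tco(2000, 3000, 200, 36),
        "36 months, $100/core/year licenses, 32 cores": cpu_share_of_tco(
            2000, 3000, 200, 36, license_per_core_year=100, cores=32
        ),
    }
    for label, share in scenarios.items():
        print(f"{label}: CPU is {share:.1%} of TCO")
```

With these invented inputs, the CPU share falls from 27.0% at 12 months to 16.4% at 36 months, and to 9.2% once per-core licenses are added, which is the direction of the argument above rather than a real-world figure.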

Hawt stuff. I’d have assumed that EPYC was already shipping like crazy.

The new TCO is useful for us.

I believe Google and Amazon had early access to Skylake SP last year, however the CPUs they got were different to the ones shipping today. According to Semiaccurate some feature was missing, although they don’t specify which one.

Regarding PCIe lanes, Skylake has 48 + 4 for chipset – that’s 52. Then there’s 16 extra on some SKUs, if you use the special Omnipath connection (but you have to pay extra for that)

Ed I don’t think OPA is shipping but it’s dirt cheap under $160 for 100gb network. Intel’s also dumping low speed 1 and 10gb networks over DMI plus SATA disks so it’s getting lots of utilization there. I watched the video.

Good article. Nobody else has talked about NVMe hot insert issues?

Our Dell EMC rep didn’t even know they were going to have EPYC systems and couldn’t order us any.

is hot swappable capability enabled by cpu, chipsets or software?

thanks.

@robret

All of the above. It is a combination of hardware, firmware, and OS/software.

Something to consider: In the AMD NUMA setup, are the PCIe lanes spread out between the CPUs or are they all attached to the first CPU? I would say Intel has a huge advantage here with all lanes connected to a single CPU.

We covered this in detail both in our architecture pieces on the new chips as well as our Architecture Ultimate Deep Dive video. https://www.servethehome.com/amd-epyc-and-intel-xeon-scalable-architecture-ultimate-deep-dive/

Entire article spreading Intel FUD, complains about Epyc rumors, reeks of past Intel transgressions.

You spend your time listing all the downsides, with none of spots AMD has Intel beat, you even make up excuses and flat out lie(PCIE lanes).

1) Did Intel write that for you? People don’t plan supercomputer deployments by calling dell up randomly. Bios issues are common on initial releases, trying to say otherwise is bs.

Epyc is worth waiting for, lower TDP, more pcie lanes(end of story).

2) Numa nodes ? what wait, you are talking about 2-4 cpu intel nodes and you bring up nonsense about numa-nodes.

3) Flat out lie, there are not 64-pcie lanes available on the CPU , end of story 48 is the max. What are you counting qpi lanes?

Hi ben – appreciate the comments. Discussed this with the AMD team and the update requested was clarifying the platform NVMe hot plug.

Also, you may want to check on XCC Skylake. We have an architecture piece that shows 64 PCIe including the on-package x16 that can be used for OPA (today) or others in the future.

The 16 extra lanes you are talking about are used up for chipset, UPI etc and NEVER can be made available by a motherboard manufacturer.

AMD is using Infinity Fabric for CPU-CPU etc, so the ZERO lanes are taken up by the CPU architecture.

You are comparing apples to oranges, one is a limitation by the CPU arch, one is sometimes a limitation by motherboard manufacturers.

A 2P AMD EPYC has more available bandwidth across all buses compared to an Intel Xeon Scalable Processor arch.

Finally release more about what software load you are benchmarking on.

ben it sounds like you’re just trying to AMD troll? I mean EPYC literally re-allocates PCIe for IF, SATA and so on. And the x16 is confirmed dedicated for Omni path not for UPI.

I like STH because of its lack of trolls. Looks like AMD is bringing them.

And this article I’d say is positive for my impression of EPYC. New tech always has bugs. It’s all about gen2.

SleveOclock not going to get into, was bit grumpy and tired so apologies to Patrick for my harshness, love the site but this article needs some touch ups.

The information about PCI-E lanes is still incorrect because it makes it sound like the PCIe lanes would be available if the motherboard manufacturer decided to expose them; they never are. There are really 256 lanes in a 2P EPYC system, if only they didn't use 128 lanes for CPU-to-CPU links, or there are 8 fewer lanes with an AMD APU, or fewer lanes with an Intel CPU with an integrated GPU. No one ever brings up points like that. There are never 64 lanes available for 1P, which is the point I am making, and trying to make it sound otherwise is just wrong.

Nor does it bring up the issue of trying to ram a proprietary solution, Omnipath (re-branded QLogic TrueScale), down everyone’s throat. InfiniBand is an open technical standard that anyone can make cards for.

With EPYC you can make the decision for yourself and not lose 16 lanes to an inferior network interconnect. InfiniBand is still the go-to interconnect, as shown even by STH dropping ConnectX-3 cards into their new Intel systems.

Finally the most glaring error is that 1P epyc systems have 128 lanes , the P series part numbers and systems will be available with 124 lanes available for you to mix and match the way you want to. A much better solution if you are looking to build primarily a gpGPU box.

You need a 3-4P Xeon system just to get close to the number of lanes, which will be vastly more expensive.

https://www.amd.com/en/products/cpu/amd-epyc-7401p

http://www.tyan.com/Motherboards=S8026=S8026GM2NRE=description=EN

Are you sure those are NUMA nodes? When you have more than 64 logical CPUs in Windows, it breaks them into processor groups which appear as NUMA nodes in Task Manager etc.

I think this setup has 128 logical CPUs, assuming HT is enabled?

Yes. These are NUMA nodes due to the physical architecture.