As NAND moves to even smaller process nodes and TLC becomes standard, we thought it was time to do a modern SSD endurance experiment. We wanted to see the impact of a (mostly) full SSD on overall drive lifespan. While enthusiasts and even IT shops tend to overspend on SSD endurance ratings, top-volume products are often the lower-cost, lower-capacity models. For many (not all) business and home users a 120GB SSD is commonplace. We are taking two brand new Samsung 750 EVO 120GB drives and writing data to them until they fail. The Samsung 750 EVO uses 16nm planar TLC NAND.

At STH we primarily use data center SSDs. Since we have very few SSDs with under 1 petabyte of write endurance in the lab, we purchased two sacrificial SSDs. You can see recent statistics on the hundreds of SSDs in our production population here: Used enterprise SSDs: Dissecting our production SSD population. Aside from those drives, we also have another 100-200TB of data center SSDs in the DemoEval lab for machines being used for benchmarking or proof of concept builds. We have tried using consumer SSDs in some of our Ceph storage cluster nodes as OS boot drives (with minimal logging) but experienced three nodes failing within 72 hours during burn-in of the cluster. The idea of using small 120GB consumer drives is really intriguing due to the pricing. We wanted to see how they would perform if we loaded them up.

After our recent Firefox (and subsequently Chrome and Edge) article on writes to SSDs, there were a lot of questions around endurance. This is primarily because of the Tech Report’s awesome SSD endurance experiment, which used what are now older generation drives (and drives with a larger capacity). We wanted to try a more modern planar TLC NAND drive at a smaller capacity. In high-volume lower-end systems pricing is a major constraint. For example, we found a sub-$300 touchscreen convertible Lenovo laptop with a 120GB SSD on Amazon with free one-day Prime shipping. That is a good representation of where the low cost SSD market is targeted.

Meet the Samsung 750 EVO Sacrifices

Earlier this week we headed over to Amazon to pick our sacrifices for the SSD endurance testing. (Prime shipping is addictive.) After some debate we decided to purchase two Samsung 750 EVO 120GB SSDs. Their published 35 TBW warrantied endurance rating is likely fine for many users, but it is also one of the lowest in the industry. Here is what we purchased for around $53 each.

Samsung 750 EVO 120GB Amazon

We did not ask Samsung or any other vendor for assistance with this article. We just decided to break out the credit card and get drives as fast as possible. Shortly thereafter we were greeted by two new retail boxed SSDs:

It is time to get started with the SSD endurance test.

Why two drives?

We are using two Samsung 750 EVO 120GB SSDs because we wanted to see what would happen if we simulated a mostly full SSD and wrote only to the remaining area versus writing across the entire drive. If you have ever had a laptop where there was at most a few GB available after the OS and programs were installed, then you have experienced the frustration of constantly writing and deleting files on a system that is mostly full all the time. The answer, of course, is to buy a larger SSD, but that is sometimes not possible.

In the last few days I have spoken to enterprise storage folks at four of the top server vendors as well as performance testing folks at two large client notebook manufacturers. I asked this question: does a mostly full SSD struggle more with endurance than a drive being written across the entire NAND space somewhat evenly? My assumption was yes. All eight folks that were asked the question said “of course.” I asked if anyone had shareable data and the best I received was “we do but we cannot share.”

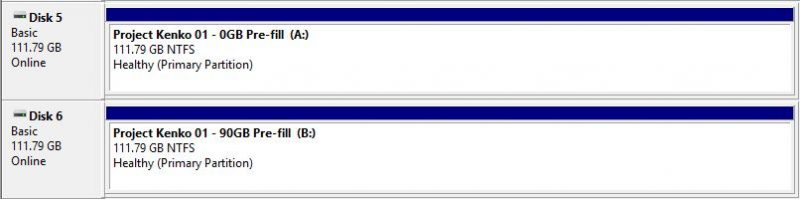

As a result we have two SSDs: one where we will write across the entire drive, and another that we will fill with 90GB of static data and then write only to the remaining portion.
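To give a rough sense of why the mostly full drive might wear faster, here is a deliberately naive back-of-the-envelope sketch. It assumes the controller performs no static wear leveling, so all writes land only on the free area, and it treats the full 120GB as writable NAND. Real controllers generally do relocate static data, so treat this as an upper bound on the gap rather than a prediction. The function name and the `write_amplification` parameter are our own illustrative choices.

```python
def avg_pe_cycles(host_writes_gb, writable_gb, write_amplification=1.0):
    """Average program/erase cycles per cell if writes spread evenly over writable_gb.

    Naive model: assumes no static wear leveling and ignores spare area.
    """
    return host_writes_gb * write_amplification / writable_gb


host_writes_gb = 35_000  # the drive's 35 TBW rating, expressed in GB

# Drive written across the whole 120GB
full_drive = avg_pe_cycles(host_writes_gb, 120)

# Drive with 90GB of static data, writes confined to the remaining 30GB
mostly_full = avg_pe_cycles(host_writes_gb, 120 - 90)

print(f"Whole drive:  {full_drive:.0f} average P/E cycles")
print(f"30GB free:    {mostly_full:.0f} average P/E cycles")
print(f"Ratio:        {mostly_full / full_drive:.1f}x")
```

Under these assumptions the same host writes concentrate into a quarter of the space, so the free area cycles four times as often. How close a real drive comes to that depends on how aggressively its firmware moves the static data around.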

The Test System: Kenko

Our test system was the only dual Intel Xeon E5 V3 system we still had in the lab. We had the opportunity to take our ASUS RS520-E8-RS8 server out of service while we upgrade it to 40GbE.

After installing Windows it was time to give the system a hostname. A few months ago I was in Peru and saw a sacrificial altar of the Incas at Kenko. It was dark inside but one of the more interesting places of the trip.

The ASUS server would be where we sacrificed our two Samsung 750 EVO 120GB SSDs. We had the advantage of being able to remotely reboot the system using IPMI which made life easier for testing drives after power cycles.

Getting Underway

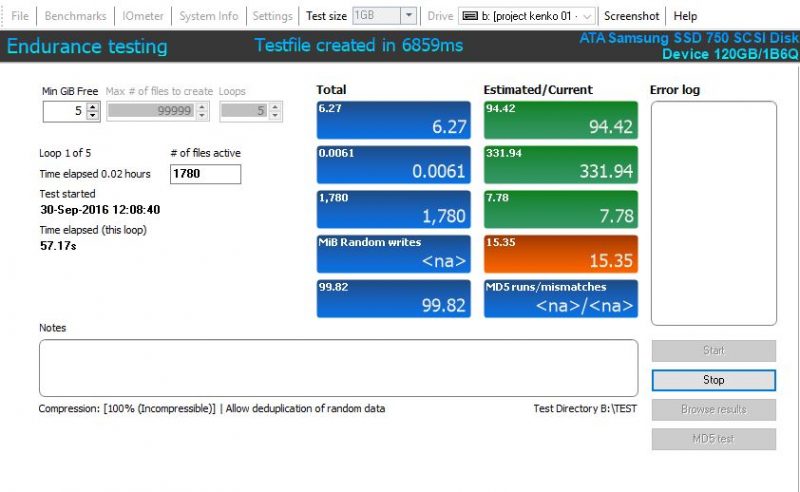

We are using a methodology similar to the one the Tech Report team used, with a few tweaks. First, we pre-filled our first drive with a 90GB desktop backup image. Second, we used a smaller “Min GiB Free” level because these are smaller drives and small drives often run at a higher fill ratio. Here is our Anvil’s Storage Utilities setting using a third drive (that we used purely to test settings):

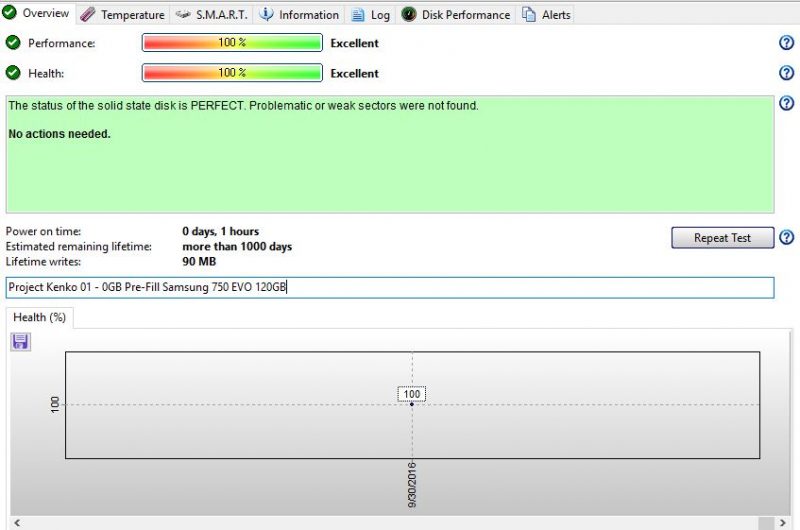
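For readers who want a feel for what a fill-then-delete endurance pass looks like, here is a minimal Python sketch. It is not how Anvil's Storage Utilities is implemented (we do not know its internals). It only illustrates the general idea behind a "minimum free space" setting. The function `write_cycle`, its parameters, and the `max_bytes` safety cap are all hypothetical names introduced for this example.

```python
import os
import shutil
import tempfile


def write_cycle(target_dir, min_free_bytes, file_size=1 << 20,
                free_bytes=None, max_bytes=None):
    """Write random files until free space would drop below min_free_bytes
    (or max_bytes is reached), then delete them. Returns bytes written."""
    if free_bytes is None:
        # Default: ask the OS how much space is free on the target volume
        free_bytes = lambda: shutil.disk_usage(target_dir).free
    limit = float("inf") if max_bytes is None else max_bytes

    written = 0
    paths = []
    while (written + file_size <= limit
           and free_bytes() - file_size >= min_free_bytes):
        path = os.path.join(target_dir, f"fill_{len(paths):06d}.bin")
        with open(path, "wb") as f:
            f.write(os.urandom(file_size))  # incompressible data
            f.flush()
            os.fsync(f.fileno())            # push the data to the device
        paths.append(path)
        written += file_size

    # Delete everything so the next cycle can rewrite the same free space
    for path in paths:
        os.remove(path)
    return written


if __name__ == "__main__":
    # Tiny demo in a temporary directory, capped at 1 MiB so it is safe to run
    with tempfile.TemporaryDirectory() as demo_dir:
        total = write_cycle(demo_dir, min_free_bytes=0,
                            file_size=64 * 1024, max_bytes=1 << 20)
        print(f"Wrote {total} bytes in one capped cycle")
```

A real endurance run would repeat such cycles against the drive under test and track cumulative bytes written. Always keep a cap or a sensible minimum-free value in place, since an uncapped loop will fill whatever volume it is pointed at.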

Here is Project Kenko 01 Drive A – 90GB pre-filled and verified via hdsentinel pro:

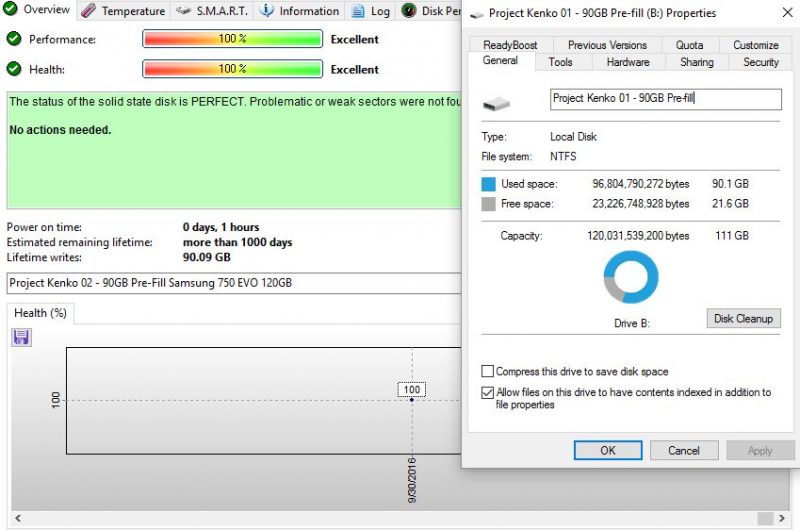

We wrote our 90GB of test data and verified that hdsentinel was reporting usage accurately. As you can see, Windows thinks we have 90.1GB used and hdsentinel pro has us at 90.09GB. This was a good check to run before starting the experiment.

Samsung’s specs on the drive list a 3-year, 35 TBW warranty. We have now seen that Firefox and Chrome can write 20-30GB/day or more to drives, so a drive rated for just about 32GB/day of writes over its warranty period is getting close to that level. Of course, very few systems run 24×7 for three years straight, and browser writes are a different pattern than most endurance specs assume.
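The 32GB/day figure follows directly from the warranty numbers. Here is a short sketch of the arithmetic, using decimal units (1 TB = 1000 GB) and a 365-day year. The helper names are ours, for illustration only.

```python
def daily_write_budget_gb(tbw, warranty_years, days_per_year=365):
    """GB/day that would use up the rated TBW exactly at the end of the warranty."""
    return tbw * 1000 / (warranty_years * days_per_year)


def years_to_exhaust(tbw, gb_per_day, days_per_year=365):
    """Years until the rated TBW is reached at a constant daily write rate."""
    return tbw * 1000 / gb_per_day / days_per_year


print(f"{daily_write_budget_gb(35, 3):.1f} GB/day")       # 32.0 GB/day
print(f"{years_to_exhaust(35, 20):.1f} years at 20GB/day")  # 4.8 years
print(f"{years_to_exhaust(35, 30):.1f} years at 30GB/day")  # 3.2 years
```

In other words, a browser writing 30GB every single day would reach the rated 35 TBW only slightly after the three-year warranty ends. The rating is a warranty limit, not the point at which the drive is expected to fail.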

We will report back on our results. We are extremely excited to see how long these drives last. Stay tuned for more updates and subscribe to our RSS feed.

“We will report back on our results”

Well? We’re waiting! (I can’t post the meme so you’ll have to imagine it)

Even if you don’t have the data, it’ll still be nice to read a summary or at least a comment.

Thank you!

Seriously! WTH happened here…?