Several months ago we had pre-release benchmarks of the Cavium ThunderX. The company promptly contacted us and wanted to show what its hardware can do. Those benchmarks were done on an older OS with older software. Over the past few weeks we have been working with both the single and dual socket (48 core and 96 core) variants of the Cavium ThunderX part and what struck us is how fast the software side is maturing in key areas. We will have more in-depth benchmarks of the platforms running real world workloads soon.

Prior to the release of the Cavium ThunderX, most 64-bit ARM development, even for server applications, was done on low-priced ARM development boards. There, the core count and memory capacity are both fixed and low. Networking is often provided by a USB to Ethernet adapter. This is a scene of typical ARM development hardware to date at many Silicon Valley startups:

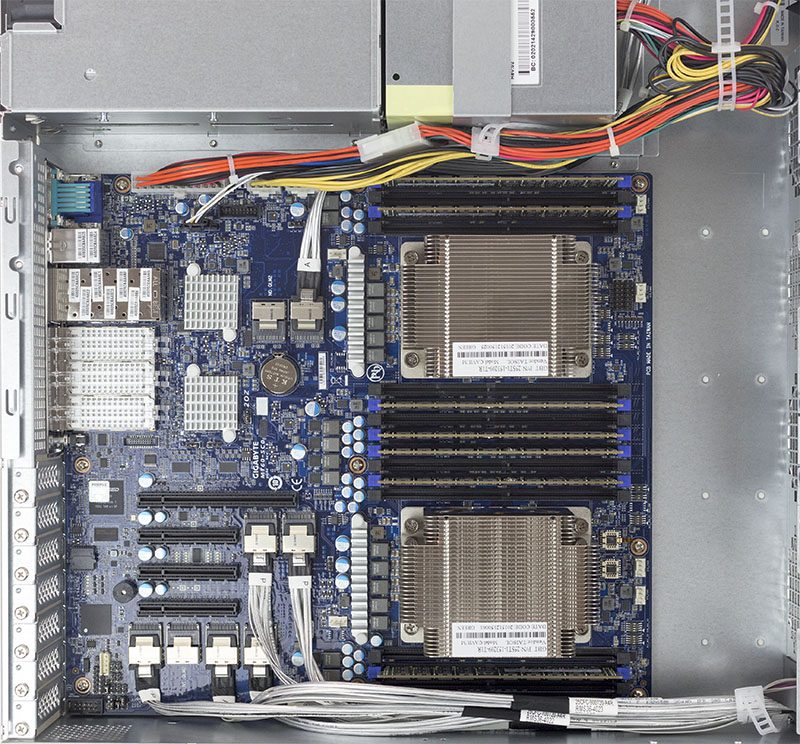

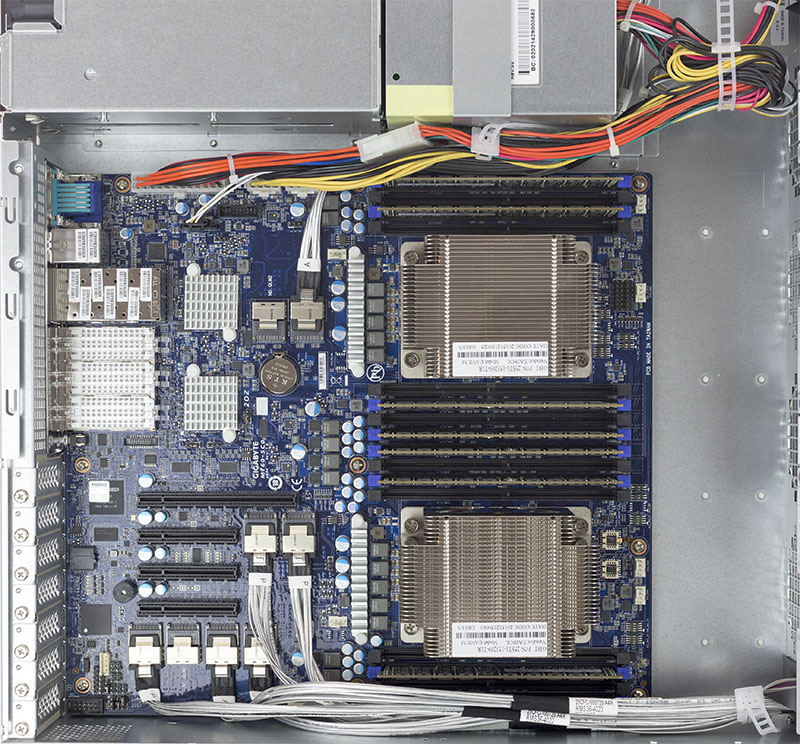

While that is great for IoT development, the Cavium ThunderX platform is completely different. There are both single and dual processor configurations scaling up to 96 64-bit ARM cores. Memory capacities can scale into the TB range, or about 1000x a typical IoT development board. Networking provided on our test platforms is 80Gbps for our single processor system and 160Gbps on our dual processor system. Onboard storage can support more than a dozen SSDs or hard drives. Here is what our dual Cavium ThunderX 96 core test platform (a Gigabyte R270-T61) looks like inside:

The bottom line is, if you are developing for ARM in the data center, you need to get a Cavium ThunderX platform as it is the best data center ARM platform generally available today. In the remainder of this article, we are going to show some benchmarks around the evolving software and development ecosystems. These benchmarks will show how the Cavium ThunderX is a competitive server platform. After a few weeks of working with the hardware and software, and given that we manage both lab and production servers with Ubuntu, we are going to share some anecdotal experiences as well.

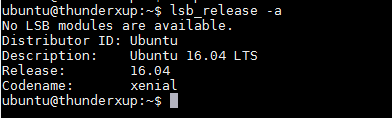

The Ubuntu 16.04 LTS Update

We originally received our single processor, 48 core Cavium ThunderX system, the Gigabyte R120-T30 that we reviewed here. It had Ubuntu 14.04 LTS pre-installed from Cavium. After poking around with the machine running in our data center, we found a few nuances to the setup and ARM platforms:

- Using Ubuntu 14.04 LTS required quite a bit of patching to get great performance

- Pulling working software via “apt-get install” did not always work if the package resided in universe. Sometimes packages were just not present. Those that did install were not optimized.

- As Cavium pointed out, using newer gcc versions and building applications from the latest source was often the way to get good performance out of ARM platforms.

We updated the 1S ThunderX platform to Ubuntu 16.04 LTS the same week we received the 2S ThunderX platform in our data center. It was immediately clear that the experience was much better. Software that required patching instead worked out of the box. Packages installed from repos almost every time with even many multiverse packages working without having to custom compile software. This was a completely different experience.

The update had two implications. First, unlike Ubuntu 14.04 LTS, 16.04 LTS felt more like it just worked. Second, out of box performance was much better than in 14.04.

In a recent meeting, I told Cavium that with Ubuntu 16.04 LTS I would feel comfortable using and administering Cavium ThunderX in our web hosting architecture. We are not hyperscale by any means. STH, even with the lab, is only in 4 data centers and has between 100 and 200 physical servers under management. Ubuntu 16.04 LTS is where we would expect most new customers to start.

Hierarchy of ARM Software Maturity

With x86 dominating the server market over the past few years, many developers take for granted that most software runs on x86 and that some degree of performance tuning has already occurred in whatever package is in repositories or on GitHub. Folks in the industry usually cite figures like 97% of servers shipped being x86 based. As 64-bit ARM platforms come to market, software gets ported, and as we saw with the 14.04 to 16.04 generation difference, there are some staggering advancements being made.

Here is a framework for understanding software maturity for ARM systems:

If you think about our experiences with some Web 2.0 software under 14.04 and our early attempts at benchmarking pre-release Cavium ThunderX, we were dealing mostly with Stage 1 or Stage 2. If you think about software written for ARM on the mobile side (e.g. an Android smartphone), that software is generally at Stage 3 or above, with many parts at Stages 4 and 5.

As we have seen over the past few months, ARM software running on the Cavium ThunderX single and dual processor machines has made the major jump from Stage 1 to Stage 2 and we are seeing software easily reaching into Stage 4 maturity in specific areas.

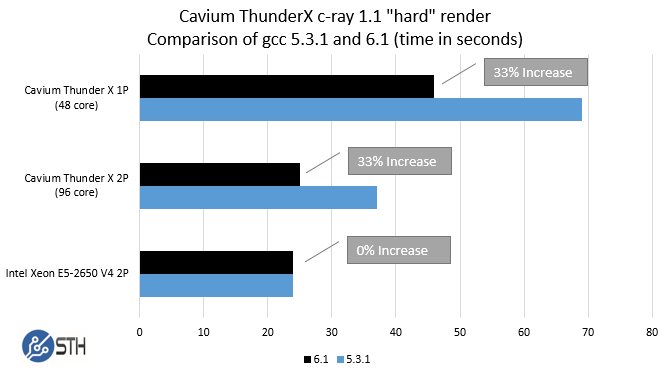

c-ray 1.1 – An example of how ARM compilers are improving

We have been using c-ray 1.1 for years. The benchmark essentially tries to simulate ray tracing calculations. Although this is not the target market of the first generation Cavium ThunderX parts, it scales extremely well from single/ dual core systems all the way up to the 192 thread Intel Xeon E7 systems we test at STH.

We used two compilers: the “stock” gcc 5.3.1 compiler that is in Ubuntu 16.04 as well as the newer gcc 6.1 compiler that will be part of the family of compilers included in upcoming Linux distributions. Note: we are not using the Cavium optimized gcc 5.3 compiler for this test. We use the same -O3 and -ffast-math optimizations we use in our standard test and use the more difficult sphfract scene with the full number of threads for the systems tested. Our test scene size is 3840×2160, which we use to simulate a 4K test.

Here is the key takeaway: just moving to the newer gcc 6.1 version released in April 2016 gave the Cavium part a 33% performance improvement. On the Intel side, gcc 6.1 did not provide significant improvements in the tests we ran. That delta brought the Cavium ThunderX 96 core part to approximately the same performance level as the Intel Xeon E5-2650 V4 on this test.

The explanation here is simple: compilers are more mature on the Intel side. If we look back to the maturity curve, the Cavium ThunderX is benefiting from compilers getting better over time.

For the remainder of the tests we are going to use the Ubuntu 16.04 LTS default gcc 5.3.1 unless otherwise noted, but we did want to show a test case here.

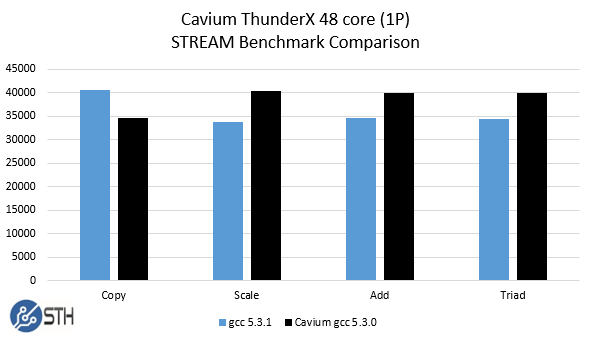

STREAM Testing

STREAM is perhaps the seminal memory bandwidth benchmark and has been in use for well over a decade. The benchmark was created and is maintained by Dr. John D. McCalpin. Essential information can be found here. We saw some intriguing results published elsewhere on STREAM, and in our previous “not-optimized” benchmark round we saw lower STREAM performance numbers than we would have expected.

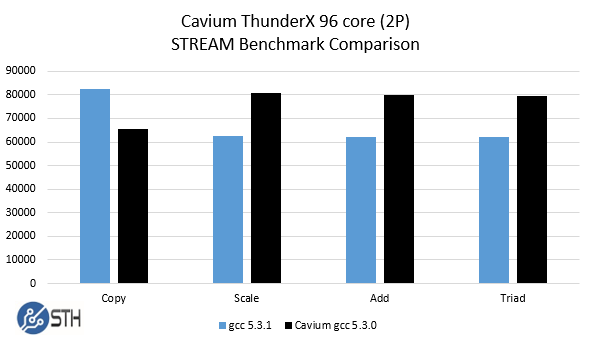

We used both the stock Ubuntu 16.04 LTS gcc 5.3.1 compiler as well as the “Cavium optimized” gcc 5.3.0 compiler that Cavium provided for its review systems. As we have seen previously, compilers have a major impact on performance. In our tests using Ubuntu 14.04 LTS especially, one needed a patched gcc to get better performance from the system.

Here are the results for the single processor, 48 core Cavium ThunderX system:

Here are the results for the dual processor, 96 core Cavium ThunderX system:

As you can see, the stock gcc 5.3.1 version performed better in the Copy test while the Cavium optimized version performed better in Scale, Add and Triad. We generally only publish the Triad figures, but wanted to show this difference.
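For readers unfamiliar with the four kernels named above, here is a minimal Python sketch of what each one does and how a bandwidth figure is derived from it. This is not the STREAM source and not what we ran; the function name `run_stream`, the array length and the scalar are illustrative assumptions. Because the Python interpreter dominates the runtime, the MB/s values it prints say nothing about the hardware; the point is only the arithmetic of each kernel and the bytes-moved accounting.

```python
import time


def run_stream(n=200_000, scalar=3.0):
    """Run Copy, Scale, Add and Triad once; return MB/s per kernel and final values."""
    a, b, c = [1.0] * n, [2.0] * n, [0.0] * n
    bytes_per_word = 8  # STREAM works on double-precision elements
    rates = {}

    def timed(name, arrays_touched, kernel):
        # Bandwidth = (arrays read + arrays written) * element size * length / time
        start = time.perf_counter()
        kernel()
        elapsed = max(time.perf_counter() - start, 1e-9)
        rates[name] = arrays_touched * bytes_per_word * n / elapsed / 1e6

    def copy():   # c = a            (1 read, 1 write)
        c[:] = a

    def scale():  # b = q * c        (1 read, 1 write)
        b[:] = [scalar * x for x in c]

    def add():    # c = a + b        (2 reads, 1 write)
        c[:] = [x + y for x, y in zip(a, b)]

    def triad():  # a = b + q * c    (2 reads, 1 write)
        a[:] = [y + scalar * z for y, z in zip(b, c)]

    timed("Copy", 2, copy)
    timed("Scale", 2, scale)
    timed("Add", 3, add)
    timed("Triad", 3, triad)
    return rates, (a[0], b[0], c[0])


if __name__ == "__main__":
    rates, _ = run_stream()
    for name, mb_per_s in rates.items():
        print(f"{name:6s} {mb_per_s:10.1f} MB/s")
```

Triad touches three arrays and combines a multiply with an add, which is one reason it is the figure most often quoted.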

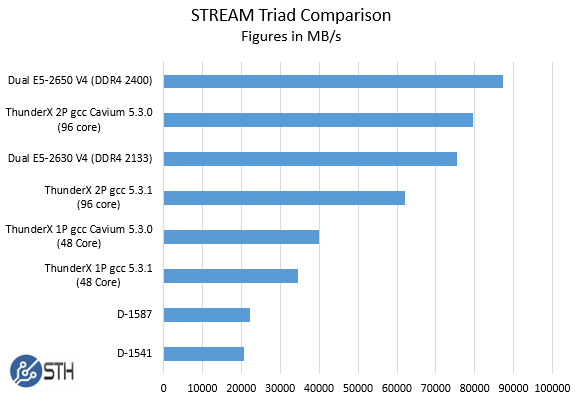

When we compare these to our selected Intel set here is what we get:

This is certainly another example where an evolving software ecosystem is helping ARM performance. What this means is that we expect the platform to perform fairly well in our upcoming benchmarks using web stacks, as memory caching is a very common performance technique.

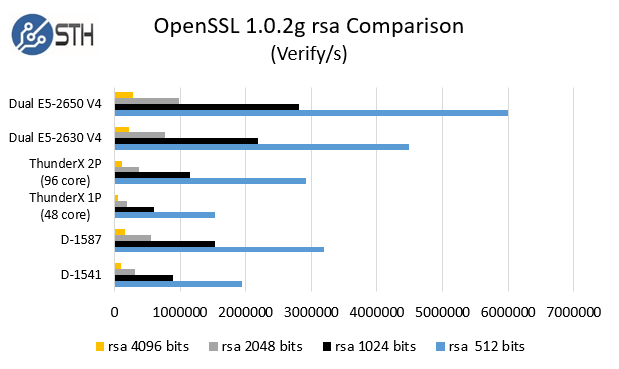

OpenSSL Performance

We again used gcc 5.3.1 but we wanted to show how much OpenSSL performance is being increased through software optimization. This is due to the software moving to much higher levels of the optimization maturity curve we introduced earlier. OpenSSL is a foundational security technology that underpins data center software. As such, optimizing OpenSSL can have a significant impact on software ranging from HTTPS termination for web servers and proxies, networking appliances, VPN appliances and much more.

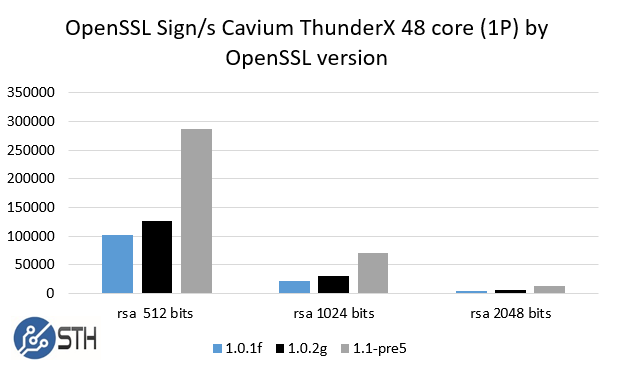

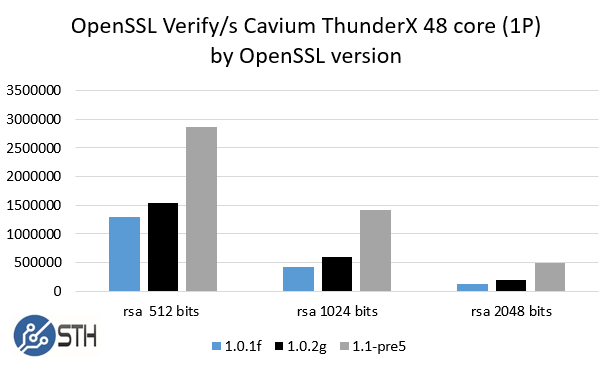

We used three versions of OpenSSL for our testing:

- OpenSSL 1.0.1f – as the default OpenSSL package in a clean Ubuntu 14.04 LTS installation

- OpenSSL 1.0.2g – the default OpenSSL package in a clean Ubuntu 16.04 LTS installation

- OpenSSL 1.1-pre5 – the release candidate for OpenSSL 1.1. OpenSSL 1.1 is a major new release that was slated for mid-May 2016 debut. As of this writing it is still not released but we are publishing figures for the beta 2 (pre-release 5) version.

Intel and Cavium have different optimizations for different AES algorithms. For our results, however, we are using the RSA tests run with -multi $numthreads so that every thread on the system is loaded.
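As a rough illustration of that invocation, the sketch below builds an `openssl speed` command line with `-multi` set to the number of hardware threads. It only assembles and prints the command; it does not run it. The helper name `openssl_speed_command` and the choice of `rsa2048` alongside `rsa4096` are assumptions for the example, not a record of the exact command we used.

```python
import os
import shlex


def openssl_speed_command(threads=None, algorithms=("rsa2048", "rsa4096")):
    """Build an `openssl speed` command that runs `threads` benchmarks in parallel."""
    if threads is None:
        # Default to every logical CPU the OS reports (48 or 96 on the ThunderX systems)
        threads = os.cpu_count() or 1
    return ["openssl", "speed", "-multi", str(threads), *algorithms]


if __name__ == "__main__":
    # Example for the single processor, 48 core system
    print(shlex.join(openssl_speed_command(threads=48)))
```

The resulting list can be passed to `subprocess.run` on a machine where the `openssl` binary under test is first on the `PATH`.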

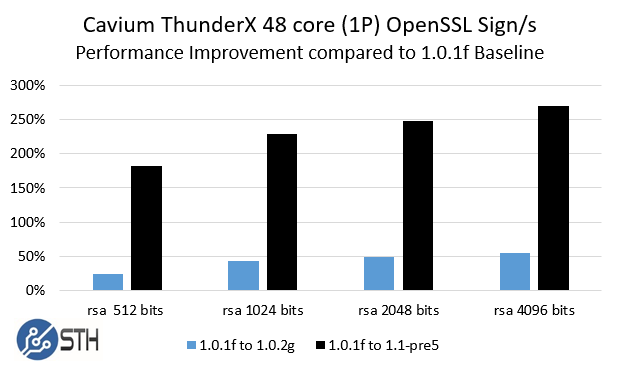

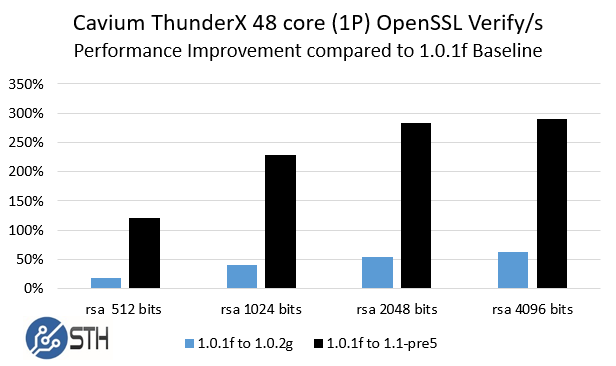

If you want to see the Software Maturity Model at play, here is an excellent test case using our single socket Cavium ThunderX 48 core (1S) system:

As you can see, the performance improvement between OpenSSL versions is quite striking. Since the rsa 4096 figures did not fit well on the chart given scaling, we created a derivative chart to show improvement compared to an OpenSSL 1.0.1f baseline:

As you can see, while moving to 1.0.2g gave 50% or more improvement in many cases, OpenSSL 1.1 is poised to give 200-300% improvements over the 1.0.1f baseline. That is a striking example of where optimization work can pay enormous dividends.
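The derivative chart is simply each result expressed as a percentage improvement over the 1.0.1f figure for the same test. A minimal sketch of that normalization follows. The numbers in `sample` are made-up placeholders chosen to make the arithmetic easy to follow, not our measured results, and the function name is hypothetical.

```python
def improvement_vs_baseline(results, baseline):
    """Return percent improvement of every version over `baseline`, per test.

    `results` maps version -> {test name -> throughput (higher is better)}.
    """
    base = results[baseline]
    return {
        version: {
            test: (value / base[test] - 1.0) * 100.0
            for test, value in tests.items()
        }
        for version, tests in results.items()
    }


if __name__ == "__main__":
    # Placeholder throughput values (e.g. sign operations per second), NOT measured data
    sample = {
        "1.0.1f": {"rsa2048": 100.0, "rsa4096": 10.0},
        "1.0.2g": {"rsa2048": 150.0, "rsa4096": 15.0},
        "1.1-pre5": {"rsa2048": 300.0, "rsa4096": 40.0},
    }
    for version, tests in improvement_vs_baseline(sample, "1.0.1f").items():
        print(version, {t: f"{pct:+.0f}%" for t, pct in tests.items()})
```

Normalizing this way puts the small rsa 4096 figures on the same scale as the faster tests, which is why they fit on the derivative chart.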

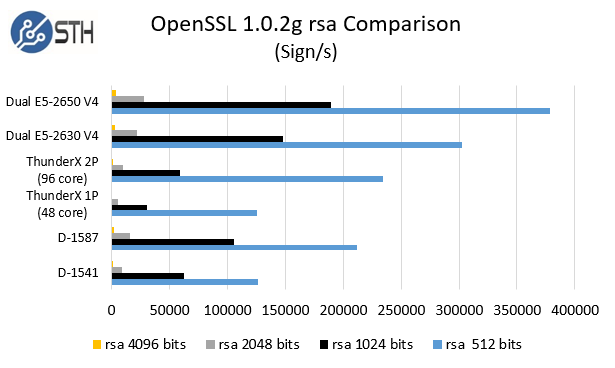

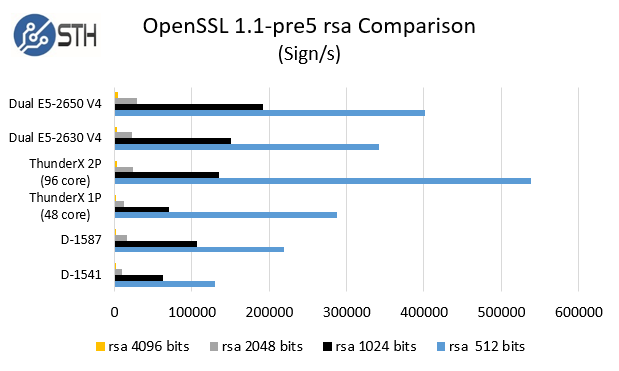

When we compare these to our selected Intel set the results are solid:

This is overall a very competitive result and appears to be getting more competitive with OpenSSL 1.1 based on the beta version we tried. Again, 1.0.2g is the shipping version with Ubuntu 16.04 LTS while OpenSSL 1.1 is a beta version so when OpenSSL 1.1 is finally released, we may update these figures since performance can change.

Beyond these figures, there are other factors at play with OpenSSL. Cavium has a secure compute function with hardware OpenSSL acceleration that we will be testing in the future. Our two test systems did not have these functions active, as they are part of the Cavium ThunderX product family strategy. We also have Intel QuickAssist acceleration cards and platforms from Intel awaiting the final OpenSSL 1.1 release, when their QAT will be supported.

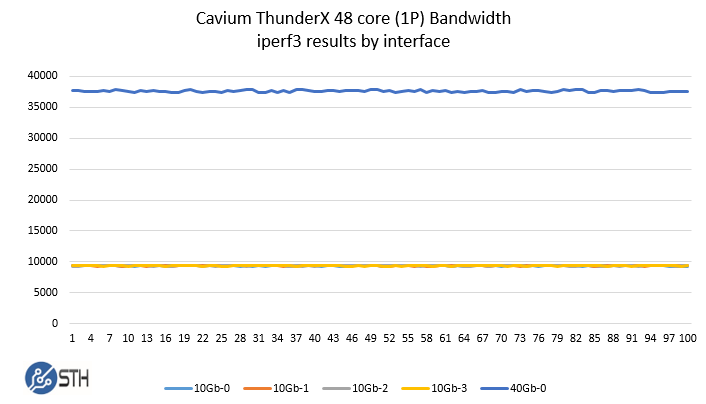

iperf3 – Cavium Networking Performance

We set up a very simple test with our Cavium ThunderX 48 core machine, and will be doing so with the 96 core machine in a future piece. We used iperf3 to measure network performance. Our Gigabyte R120-T30 test platform had a 40GbE QSFP+ network port along with four 10GbE SFP+ network ports.

Intel has a large Ethernet controller business, so getting that much networking on one of their current generation platforms would cost at least $400 for an Intel XL710-QDA2 adapter, which we first saw some time ago. Granted, there are options that are both much less expensive (embedded network controllers) and much more expensive on the Intel side, but networking is a clear point of differentiation for the Cavium ThunderX platform. Our dual socket test bed has an absolutely monstrous 160Gbps of network ports without using an add-in card.

We have been using iperf3 for years. Under Ubuntu 14.04 LTS on the Cavium system, it was a package that we could not simply “apt-get install -y iperf3” and have working. Using Ubuntu 16.04 LTS, iperf3 installed immediately. Again, this is a good example of the maturing software ecosystem.

When we did get the system set up, network performance was excellent after we tweaked our lab network to handle this bandwidth. We used a dual Intel Xeon E5-2699 V4 system as the test target for the 40GbE NIC and four Intel Xeon D-1541 systems, one as the test target for each of the 10Gb SFP+ interfaces. We then plotted the results on a chart:

Results were as expected. As you can see, there is a lot of networking bandwidth on the platform. Our two socket system has twice as much networking capability onboard.
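When several iperf3 clients run at once, one per port, the per-port results need to be summed to get a platform total. The sketch below assumes each client was run with iperf3's JSON output option (`-J`) and reads the receiver-side rate from `end.sum_received.bits_per_second`, which is where iperf3 reports it for TCP tests. The helper names are hypothetical, and the sample reports use nominal port speeds (one 40GbE plus four 10GbE) as placeholders rather than our measured throughput.

```python
import json


def received_gbps(iperf3_json_text):
    """Extract the receiver-side throughput in Gbps from one `iperf3 -J` report."""
    report = json.loads(iperf3_json_text)
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def total_gbps(reports):
    """Sum throughput across reports from tests that ran concurrently."""
    return sum(received_gbps(text) for text in reports)


def placeholder_report(bits_per_second):
    """Build a stripped-down stand-in for an iperf3 JSON report (illustration only)."""
    return json.dumps({"end": {"sum_received": {"bits_per_second": bits_per_second}}})


if __name__ == "__main__":
    # Nominal line rates, NOT measurements: 1x 40GbE port and 4x 10GbE ports
    reports = [placeholder_report(40e9)] + [placeholder_report(10e9)] * 4
    print(f"Aggregate: {total_gbps(reports):.1f} Gbps")
```

Real reports will come in below line rate because of protocol overhead, so a measured aggregate will sit somewhat under the nominal 80Gbps for the single processor system.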

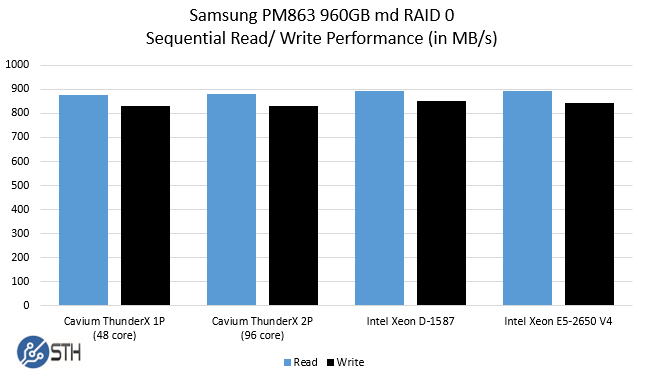

Storage Performance

As one looks at the storage configurations of our two Gigabyte Cavium test platforms, storage is clearly a key focus area. While Intel has 10 SATA III 6.0Gbps ports on the Intel Xeon E5 platform (single or dual processor) and six on their Intel Xeon D SoC, Cavium has up to 16 on the single processor platform and 32 on the dual processor platform. We added two Samsung PM863 960GB SSDs in an md RAID 0 configuration in each system and ran a simple sequential read/ write test on the drives:

While one could point to the low single digit performance advantage in the Intel Xeon systems, the point here is that the results were very close even in a slightly more complex setup than with just a single drive.

Moving to a standard 2U 24x 2.5″ bay chassis requires an add-on HBA or two on the Intel side (e.g. a Broadcom/ Avago/ LSI SAS 3008 HBA) while the Cavium dual processor part was able to handle this with ease. Depending on the vendor and setup, these HBAs can cost a few hundred dollars each. On the other hand, we do have 24-bay 2U NVMe Intel based systems in the lab where Intel has more PCIe 3.0 lanes available for NVMe drives. Still, SATA hard drives and SSDs are the prevalent storage medium as of today.

Final Words

There is a lot more content coming on the Cavium side. At the end of the day, the x86 software ecosystem still has many more man hours behind optimizations of compilers and software. On the other hand, there are some big applications out there (nginx, memcached, redis, HAProxy, Ceph and others) that already have a lot of ARM optimization work behind them.

If you are a data center ARM developer in the summer of 2016, the Cavium ThunderX machines are the 64-bit ARM platform to get. While there are less expensive options out there, those do not compete with the Intel Xeon line-up at this point. In the STH lab we are seeing the impact of developers’ efforts bringing huge performance increases to the Cavium platform. The Cavium ThunderX is the only game in town when it comes to having a generally available data center ARM platform that not only meets Intel’s platform in some price/ performance areas but exceeds it in several.

Very interesting read!

Could you give a price indication for such a 48 core and 96 core Cavium ThunderX based server? (without memory and disks)

Would these machines make an interesting choice as high performance BGP core routers?

Hope systems like this will give motivation to ensure OpenZFS on Linux works on 64bit ARM – it would be a nice data storage platform if it did!

I have seen Ceph being optimized on the platform. Since there is a lot of storage (e.g. enough to fill a 2U 24 bay chassis with SATA ports) and network connectivity (160Gbps on the 2P system) the platform is certainly intriguing as a Ceph and/ or hyper converged node.

The problem with ThunderX-based servers is that they are simply not available on the general market, so interested developers/individuals can’t get them. Let’s see if the situation changes with ThunderX2; perhaps some company is going to push that to the cloud and sell it as a PaaS.

If you are looking for them, happy to make an introduction. Shoot me an e-mail at patrick at this domain if you are looking for them but cannot find them.

That’s kinda part of the problem that you need an introduction and send out e-mails to get a quote. Isn’t really commodity hardware so far if I can’t just use my usual x86 vendor.

There are a few vendors. asacomputers sells them in the 3k range.