Cavium sent us a Gigabyte R120-T30 about three weeks after the production-scale 48-core ThunderX ARM processor began shipping. We will have a much more comprehensive view of performance over the next few weeks, and so far we are seeing much better performance out of this system than we initially expected. In the meantime, after spending a few weeks with the platform, we wanted to provide an overview of what we received.

The impact of the platform cannot be overstated. This is production 48-core, 64-bit ARMv8 silicon, and it is the first direct competitor to the Intel Xeon E5-1600 and Xeon E5-2600 lines we have seen since AMD effectively exited the market. The Cavium ThunderX takes a very different approach to a server processor. While we will explore the performance of the 48-core Cavium ThunderX in a series of upcoming pieces, the Gigabyte R120-T30 1U server already provides insight into some of the biggest selling points of the ThunderX platform.

Test Configuration

Cavium supplied the following test configuration:

- Server: Gigabyte R120-T30 (4x 3.5″ hot swap bay 1U)

- Motherboard: Gigabyte MT30-GS0

- CPU: Cavium ThunderX 48-core ARMv8

- RAM: 128GB (4x 32GB DDR4 2133MHz RDIMMs) (1TB max capacity using 8x 128GB DIMMs)

- SSDs: 2x SanDisk CloudSpeed Ultra 800GB SATA

- PSU: 400W 80 Plus Gold

What this list does not show is that, from an I/O perspective, this system is far more capable than a comparable Intel platform. The Cavium ThunderX integrates significantly more standard I/O, such as SATA and Ethernet, than Intel's Xeon E5 architecture.

Gigabyte R120-T30 1U server with Cavium ThunderX Overview

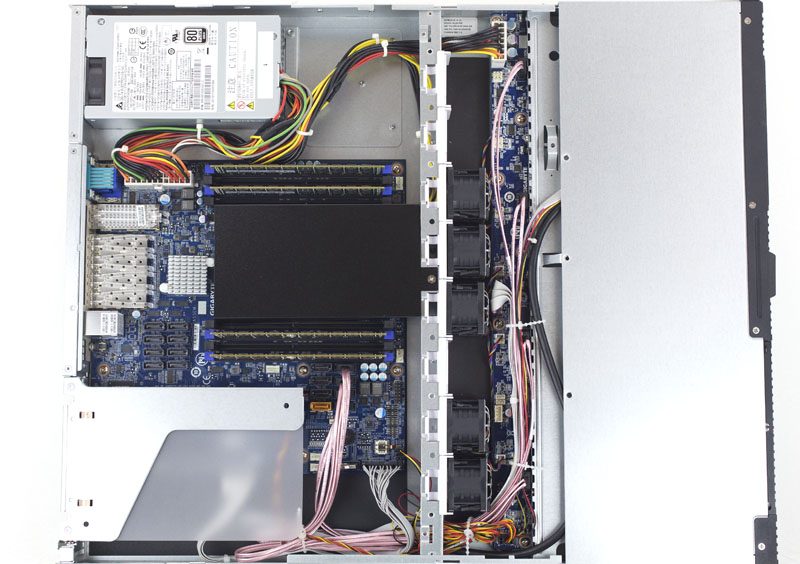

From the outside, the Gigabyte R120-T30 1U looks much like a standard 1U 4-bay 3.5″ server. What is inside the machine is different from what almost anyone who has purchased a server over the past 7 years has experienced.

Opening the top cover, we see what appears to be a standard form factor motherboard with a large CPU heatsink. The Gigabyte R120-T30 has 5x hot swap fans and a PCIe riser slot as well.

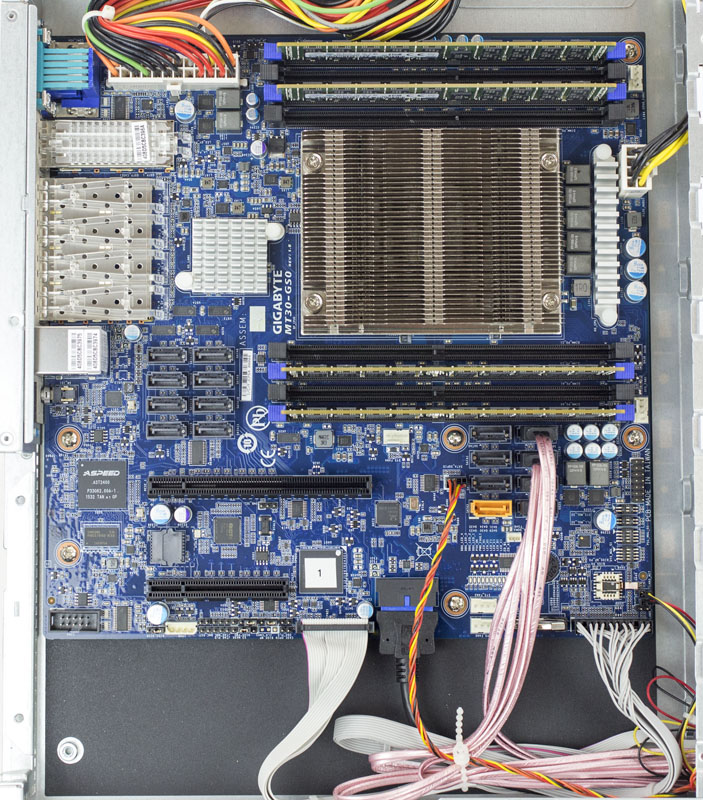

On either side of the CPU we have 4 DIMM slots. Each can take DDR4 RDIMMs to fill the ThunderX’s quad channel memory design with up to 2 DIMMs per channel (DPC.) We had 4x 32GB DDR4 2133MHz RDIMMs installed but we did also add a set of 8x 32GB (256GB) DDR4 RDIMMs just to validate all eight DIMM slots could be populated. The ThunderX supports up to 128GB DDR4 DIMMs so with 8x 128GB we get a total of 1TB of memory in a single CPU platform. Here is what the Gigabyte MT30-GS0 motherboard looks like without the fan shroud and PCIe riser:

While the newest Intel Xeon E5-1600 and E5-2600 V4 systems can utilize 3DPC for 1.5TB of RAM per CPU, 1TB is still an impressive total. For comparison, the Intel Xeon D SoC Intel produces tops out at 128GB (dual channel memory and 2 DPC.) Moving down the Intel stack further the Xeon E3 V5 lineup tops out at a paltry 64GB max. We have verified via the STREAM benchmark that there is significantly more bandwidth than the Intel Xeon D platform, even using faster DDR4-2400 RAM, but not as much as the Intel Xeon E5-2600 V4 platform. More on this in a future piece.

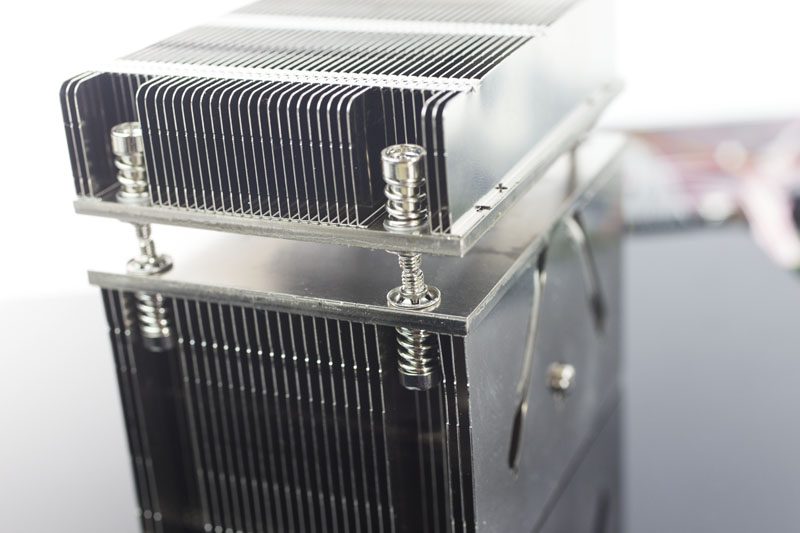
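The capacity figures above come from a simple product: channels x DIMMs per channel x largest supported DIMM. A similar product gives each platform's theoretical peak DRAM bandwidth: channels x transfer rate x 8 bytes per 64-bit DDR4 channel. The ordering of those peaks matches the STREAM ranking we describe. The sketch below is back-of-the-envelope math only. It assumes DDR4-2133 for the ThunderX, as in our test system, and 32GB DIMMs for the Xeon D (128GB across four slots). It also assumes DDR4-2400 for the Xeon E5-2600 V4. Real systems often run memory slower at higher DPC, and theoretical peaks are not STREAM results. The helper names are hypothetical.

```python
# Hypothetical helpers for back-of-the-envelope memory math (not measured results).

def max_capacity_gb(channels: int, dimms_per_channel: int, dimm_gb: int) -> int:
    """Maximum memory per CPU: channels x DIMMs per channel x largest DIMM size."""
    return channels * dimms_per_channel * dimm_gb


def peak_bandwidth_gbs(channels: int, mega_transfers: int) -> float:
    """Theoretical peak DRAM bandwidth in decimal GB/s.

    Each DDR4 channel is 64 bits (8 bytes) wide, so it moves 8 bytes per transfer.
    """
    return channels * mega_transfers * 8 / 1000


# (channels, DIMMs per channel, max DIMM size in GB, memory speed in MT/s)
# Memory speeds are assumptions: DDR4-2133 as in our ThunderX system, DDR4-2400 for Intel.
PLATFORMS = {
    "Cavium ThunderX": (4, 2, 128, 2133),
    "Intel Xeon D": (2, 2, 32, 2400),
    "Intel Xeon E5-2600 V4 (per CPU)": (4, 3, 128, 2400),
}

if __name__ == "__main__":
    for name, (ch, dpc, dimm_gb, mts) in PLATFORMS.items():
        print(f"{name}: {max_capacity_gb(ch, dpc, dimm_gb)} GB max, "
              f"{peak_bandwidth_gbs(ch, mts):.1f} GB/s theoretical peak")
```

Run as is, this prints 1024 GB and 68.3 GB/s for the ThunderX and 128 GB and 38.4 GB/s for the Xeon D. For each Xeon E5-2600 V4 CPU, it prints 1536 GB and 76.8 GB/s.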

As we removed the CPU heatsink, it seemed very familiar. The heatsink mounting points on the Gigabyte platform were almost exactly the width of an LGA2011 narrow ILM. We pulled out an LGA2011 narrow ILM heatsink and, sure enough, the mounting points lined up. We did not try installing a different heatsink because we were unsure of the pressure specifications of the CPU package. Gigabyte did a nice job repurposing existing designs for this new platform.

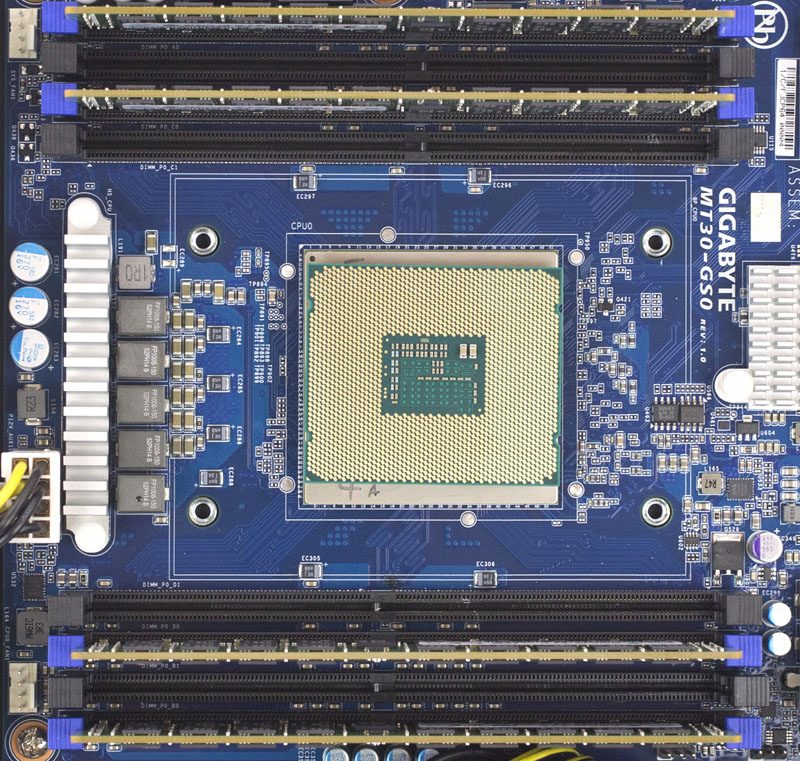

Underneath the heatsink we see a soldered Cavium ThunderX SoC:

The package is soldered rather than socketed, and it is large compared to an LGA2011 chip. Here is a size comparison:

In terms of storage, the Cavium ThunderX provides up to 16 SATA ports per CPU. On the Intel side, an Intel Xeon E5-1600 V3 system, a dual-socket Intel Xeon E5-2600 V4 system, and even a quad-socket Xeon E5-4600 system all rely on a single PCH for SATA connectivity. As a result, the SATA port count does not grow with the number of sockets. Intel also abandoned onboard SAS after Ivy Bridge. With Cavium, each processor can provide up to 16 SATA III ports, though some SKUs offer fewer. Our 1U platform connects only 4 of the 16 SATA III ports to drive bays. Even so, the full port count means a 16-bay 3U chassis would not require an add-on HBA.

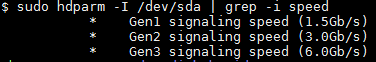

We verified that the platform correctly reports a SATA III 6.0Gbps link to Ubuntu 14.04 LTS.
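A similar check can be scripted. On Linux, the libata driver logs a line for each port whose link comes up, such as `ata1: SATA link up 6.0 Gbps (SStatus 133 SControl 300)`. A small parser over kernel log output can therefore confirm that every connected drive negotiated SATA III. This is a minimal sketch of one approach, not necessarily how we checked. The function names are hypothetical, and the parser assumes the standard libata message format.

```python
import re

# libata prints one line per port when its link comes up, for example:
#   [    2.345678] ata1: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
LINK_UP = re.compile(r"\b(ata\d+): SATA link up ([\d.]+) Gbps")


def sata_link_speeds(log_text: str) -> dict:
    """Map each ATA port in the kernel log text to its negotiated speed in Gbps.

    A later message for the same port (e.g. after a link reset) overwrites an
    earlier one, so the result reflects the most recent link-up per port.
    """
    return {port: float(speed) for port, speed in LINK_UP.findall(log_text)}


def all_links_sata3(log_text: str) -> bool:
    """True if at least one link came up and every link negotiated 6.0 Gbps."""
    speeds = sata_link_speeds(log_text)
    return bool(speeds) and all(speed == 6.0 for speed in speeds.values())
```

In practice, you would pass it the kernel log. For example, `all_links_sata3(subprocess.run(["dmesg"], capture_output=True, text=True).stdout)` after importing `subprocess`. Ports whose link is down never produce a "link up" line, so they are simply absent from the result.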

On the motherboard, the SATA ports are arranged in two sets of eight standard 7-pin SATA III connectors. The dual-socket Gigabyte/Cavium systems appear to use higher-density connectors.

The motherboard also has two PCIe 3.0 x8 slots. The ThunderX supports PCIe slots only up to x8 wide. Since most add-on cards use x1, x4, or x8 interfaces, that limit rarely matters.

The networking side is completely unlike what we see on most Intel platforms. Our test unit has a total of 80Gbps of networking from the SoC: one QSFP+ 40Gb Ethernet port and four SFP+ 10Gb Ethernet ports. This is equivalent to having an Intel Fortville X710-AM2 onboard. On the Intel side, the add-in card equivalent would be an Intel XL710-QDA2 occupying a PCIe 3.0 x8 slot.

For those looking at high network bandwidth, this is a truly awesome setup onboard.
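The 80Gbps figure is simple port arithmetic: one 40GbE QSFP+ port plus four 10GbE SFP+ ports. The add-in card comparison also has a bus limit. A PCIe 3.0 link runs at 8 GT/s per lane with 128b/130b encoding, so an x8 slot carries roughly 63 Gbps in each direction before packet and protocol overhead. The sketch below only does this arithmetic. The helper names are hypothetical.

```python
# Hypothetical helpers for the networking arithmetic above.

def aggregate_port_gbps(ports: dict) -> int:
    """Sum of Ethernet line rates, given {port speed in Gbps: number of ports}."""
    return sum(speed * count for speed, count in ports.items())


def pcie3_gbps_per_direction(lanes: int) -> float:
    """PCIe 3.0 data rate per direction: 8 GT/s per lane with 128b/130b encoding.

    Ignores packet (TLP/DLLP) overhead, so real throughput is lower.
    """
    return lanes * 8 * 128 / 130


THUNDERX_ONBOARD = {40: 1, 10: 4}  # one QSFP+ 40GbE port, four SFP+ 10GbE ports

if __name__ == "__main__":
    print(f"Onboard Ethernet: {aggregate_port_gbps(THUNDERX_ONBOARD)} Gbps")
    print(f"PCIe 3.0 x8: {pcie3_gbps_per_direction(8):.1f} Gbps per direction")
```

Running it prints 80 Gbps for the onboard ports and about 63.0 Gbps for a PCIe 3.0 x8 link.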

Rounding out the list of features are a standard VGA port, a serial port, an IPMI out-of-band management LAN port, and four USB 3.0 connectors (two front, two rear). The second ARM SoC on this platform is the ASPEED AST2400, which is probably the most widely used BMC in the industry. It is great to see Gigabyte and Cavium use the industry-standard BMC.

We will have more on management features in a coming piece in this series. For now, we can share that the experience is an absolute pleasure compared to some ARM development boards. We were pleased to see the AST2400 integration.
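Because the board exposes a standard IPMI LAN port, it should be possible to script out-of-band management with generic tools such as `ipmitool`. The sketch below wraps `ipmitool` from Python. It assumes the BMC accepts IPMI 2.0 (`lanplus`) sessions. It also assumes the password is supplied through the `IPMI_PASSWORD` environment variable, which `ipmitool` reads when given `-E`. We have not yet covered management in detail, so treat the host, user, and helper names as hypothetical.

```python
import os
import subprocess


def ipmi_command(host: str, user: str, *args: str) -> list[str]:
    """Build an ipmitool argv for an IPMI 2.0 (lanplus) session to a BMC.

    -E tells ipmitool to read the password from the IPMI_PASSWORD environment
    variable, which keeps it off the command line and process list.
    """
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *args]


def run_ipmi(host: str, user: str, *args: str) -> str:
    """Run ipmitool against the BMC and return its stdout.

    Raises RuntimeError if IPMI_PASSWORD is unset, and CalledProcessError
    if ipmitool exits with a non-zero status.
    """
    if "IPMI_PASSWORD" not in os.environ:
        raise RuntimeError("set IPMI_PASSWORD before calling run_ipmi")
    result = subprocess.run(ipmi_command(host, user, *args),
                            capture_output=True, text=True, check=True)
    return result.stdout
```

For example, `run_ipmi("192.0.2.10", "admin", "chassis", "power", "status")` would ask the BMC for the chassis power state. The address and user name are placeholders.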

Final Words

If you cannot tell where this is headed, we have some interesting comparisons coming in future pieces. The Intel Xeon D platform still provides lower power consumption and better single-threaded performance. In memory bandwidth, memory capacity, SATA storage expansion, and networking, however, it is thoroughly outclassed by the Cavium ThunderX. From what we have heard about both list and street pricing, we expect Cavium ThunderX platforms to be very price-competitive with the higher-end Xeon D platforms.

Shifting to the Intel Xeon E5-1600 V3 and Intel Xeon E5-2600 V4 side, those platforms have a clear PCIe lane advantage for NVMe storage. Furthermore, we expect Windows and VMware virtualization hosts, HPC servers, and systems that need high single-threaded performance or larger memory capacities to remain Intel strongholds for the near future.

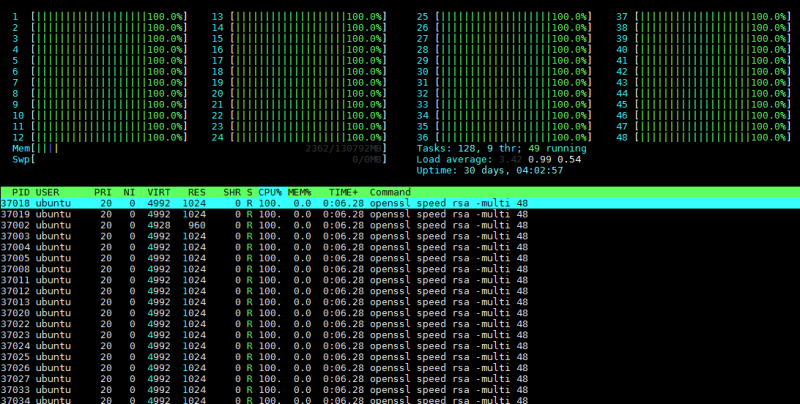

On the other hand, as the software side matures, we are seeing performance in web stack applications improve significantly, especially with releases like the recent Ubuntu 16.04 LTS. For example, our OpenSSL RSA testing on the 48-core ThunderX part showed a ~80% performance increase moving from stock OpenSSL 1.0.1g to 1.0.2g. It looks like OpenSSL 1.1 will bring another solid gain. Increases of this size from software updates alone are unlike what we see on the Intel side, and they show how rapidly the ARM side is maturing.
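Speedups like this can be quantified with `openssl speed`, whose summary table reports RSA sign/s and verify/s for each key size. The sketch below parses that table and computes the percentage change between two runs, for example the same RSA key size under two OpenSSL builds. It assumes the usual `rsa 2048 bits <sign time>s <verify time>s <sign/s> <verify/s>` summary line layout. The function names are hypothetical.

```python
import re

# Matches openssl speed summary lines of the form:
#   rsa <bits> bits <time per sign>s <time per verify>s <sign/s> <verify/s>
RSA_LINE = re.compile(
    r"^rsa\s+(\d+)\s+bits\s+[\d.]+s\s+[\d.]+s\s+([\d.]+)\s+([\d.]+)", re.MULTILINE
)


def rsa_rates(speed_output: str) -> dict:
    """Map RSA key size in bits -> (sign/s, verify/s) from openssl speed output."""
    return {
        int(bits): (float(sign), float(verify))
        for bits, sign, verify in RSA_LINE.findall(speed_output)
    }


def percent_gain(old: float, new: float) -> float:
    """Percentage change from old to new; 80.0 means new is 1.8x old."""
    return (new / old - 1.0) * 100.0


def rsa_sign_gain(old_output: str, new_output: str, bits: int = 2048) -> float:
    """Percentage change in RSA sign/s for one key size between two runs."""
    old_sign = rsa_rates(old_output)[bits][0]
    new_sign = rsa_rates(new_output)[bits][0]
    return percent_gain(old_sign, new_sign)
```

For example, capture the output of `openssl speed -multi 48 rsa2048` from each OpenSSL build, then pass both strings to `rsa_sign_gain`. Using `-multi 48` runs one benchmark process per ThunderX core.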

If your workload can use the onboard I/O or the accelerators in the Cavium ThunderX workload-optimized SKUs, Cavium is going to make a strong case. Based on the pricing we have seen thus far, the Cavium platform is very compelling for anyone who can use its unique combination of 48 ARM cores, high-speed networking, SATA ports, and accelerators.

We will take a more in-depth look at day-to-day management and small-scale operations with the ThunderX platform in the coming weeks. We have also been gathering baseline performance figures using the ThunderX as a web appliance (e.g., as an nginx, Redis, and SSL offload server). Stay tuned to STH for some very interesting tips, tricks, and performance results.

Are they willing to sell direct to small users? Any idea on the cost?

Hi Kevin, I am meeting with Gigabyte at Computex in Taipei this week to discuss. Feel free to send me an e-mail (patrick at this domain) and I can let you know what I find.