The ASUS RS520-E8-RS8 is a 2U dual Intel Xeon E5-2600 V3 server that has a surprising amount of flexibility in terms of newer expansion standards. We have been reviewing ASUS server products for over 5 years now, and in the past those products had a familiar set of features. Unlike many of the previous products, the ASUS RS520-E8-RS8 surprised us with its flexibility, supporting newer standards such as M.2 storage and Open Compute Project networking.

Test Configuration

After acquiring the ASUS RS520-E8-RS8 we outfitted it to be a ZFS storage server. The 3.5″ bays worked well for us. This is an actual configuration we are using in production now.

- Processors: 2x Intel Xeon E5-2650L V3 (12 cores each)
- Memory: 8x 16GB Crucial DDR4 (128GB Total)
- Storage: 4x Western Digital Red Pro 4TB, 2x Intel DC S3700 400GB, 2x Fusion-io ioDrive 320GB (PCIe), 2x Intel 750 400GB 2.5″ using a Supermicro AOC-SLG3-2E4
- Networking: Intel OCP X520 Mezzanine Card (dual SFP+)
- Operating Systems: Ubuntu 14.04.3 LTS and Windows Server 2012 R2

One thing we did “hack” a bit with this server was adding the dual 2.5″ drives. We ended up affixing them in a non-hot swap configuration above the power supplies since there is ample room and airflow. The Supermicro AOC was used to provide the two SFF-8643 connectors we needed. The net result is a very fast mirrored ZIL/SLOG (Fusion-io) and L2ARC (Intel 750) to ensure the storage can saturate the OCP mezzanine card's dual 10 gigabit ports. ASUS also makes X520-based single and dual port OCP mezzanine cards, which we would have requested had ASUS sent us this server (we procured it on our own).
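For readers who want to reproduce a similar layout, here is a minimal sketch of how the log and cache devices described above could be attached to an existing pool. It only builds the `zpool add` command strings and does not run them. The pool name `tank` and all device paths are hypothetical placeholders, not the names used on our server. Note that ZFS mirrors log devices but not cache devices, so the two L2ARC drives are simply added side by side.

```python
import shlex


def zpool_add_commands(pool, slog_devices, l2arc_devices):
    """Build (but do not run) zpool commands for a mirrored SLOG and an L2ARC.

    pool          -- name of an existing pool (hypothetical in this sketch)
    slog_devices  -- two or more devices to mirror as the separate intent log
    l2arc_devices -- devices to add as read cache (ZFS does not mirror these)
    """
    commands = []
    if slog_devices:
        if len(slog_devices) < 2:
            raise ValueError("a mirrored SLOG needs at least two devices")
        commands.append(["zpool", "add", pool, "log", "mirror", *slog_devices])
    if l2arc_devices:
        commands.append(["zpool", "add", pool, "cache", *l2arc_devices])
    # shlex.join quotes each argument so the result is safe to paste in a shell
    return [shlex.join(cmd) for cmd in commands]


if __name__ == "__main__":
    # Placeholder device paths: substitute the ones reported on your system.
    for line in zpool_add_commands(
        "tank",
        slog_devices=["/dev/fioa", "/dev/fiob"],
        l2arc_devices=["/dev/nvme0n1", "/dev/nvme1n1"],
    ):
        print(line)
```

Printing the commands rather than executing them keeps the sketch safe to run on any machine; review the output against your own device list before using it.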

ASUS RS520-E8-RS8 Overview

The ASUS RS520-E8-RS8 is a 2U server chassis that has eight hot-swap 3.5″ bays in its front. Unlike many competing offerings, the server also includes a slim DVD optical drive and front panel USB and VGA video. These can be useful when setting up the server.

Inside the server we have two LGA2011-3 sockets with eight DDR4 DIMMs per socket (2 DIMMs per channel x 4 channels). ASUS uses four hot swap fans with a custom plastic duct to provide some redundancy to the cooling and ensure airflow. Heatsinks were included with our unit.

Looking quickly at the expansion slots, there are two PCIe 3.0 x8 slots and a PCIe 3.0 x16 slot. One can also see an OCP mezzanine connector above the x16 slot (more on that shortly) and an M.2 connector to the right of the PCIe x8 slots. The dual SFF-8087 connectors are driven by the Intel C612 PCH and can provide a total of 8x SATA III 6.0Gbps ports. This is a much cleaner and easier to use setup than having eight 7-pin SATA cables routed through the chassis. A 9th SATA III port is used for the slim optical drive connectivity.

One of the more unique features is the OCP mezzanine card slot. The inclusion of the OCP mezzanine slot is nice because it is not a vendor proprietary design. We were able to choose from several options from ASUS as well as third party vendors such as Intel and Mellanox.

Here is the X520-DA2 OCP mezzanine card installed in the server. It was a very simple affair to install.

As a quick overview of our server prior to adding storage, one can see the custom motherboard works well in this chassis.

Moving to the final part of the motherboard we can see some standard input cables, for example the ATX style power connector. ASUS utilizes a power distribution board (PDB) between the 770W 80Plus Gold redundant power supplies and the motherboard, so there is cabling from the PDB to the motherboard. This cabling sits atop the redundant power supplies but there is a lot of room for the cabling.

One can also see an internal USB Type-A port which can be used to install embedded OSes. We used dual Intel DC S3700s for our OS but one could use this port for FreeNAS or VMware ESXi installed on an inexpensive USB drive. That would free up a drive bay for storage drives.

Looking a bit closer at the ICs here, we can see two Intel i210-AT gigabit Ethernet controllers as well as a Realtek controller for the management NIC. The ASPEED AST2400 is the update to the popular AST2300 BMC and is virtually ubiquitous on today’s servers. This chip and the SK hynix memory chip below it provide the baseboard management and IPMI 2.0 functions.

Taking a quick look at the rear of the unit we can see the dual NICs, dual USB 3.0 ports, a legacy PS/2 port, a management NIC port and a legacy VGA connector. A unique feature is the two digit LCD display which can show POST codes. This is helpful for troubleshooting. One can also see the OCP mezzanine ports in-line with the rest of the rear I/O. The COM port is an optional installation.

ASUS is using only 44 or so of the platform's PCIe lanes, or about half of what the platform can offer. We do wish that there were more PCIe slots available as it would allow us to install more than three add-in cards in addition to the mezzanine card. The chassis has I/O expansion for 7 cards but the motherboard will only let you use 3 of them in practice. Here is what ours looked like when we were testing it in our Fremont, California datacenter lab with an Interface Masters dual 10Gbase-T network card instead of the Supermicro NVMe AOC:

As one can see, there are several slots still open. We would also like to note that the ASUS rail mounting kit was fast to get racked, although it does lack support for the chassis when pulled out of the rack.
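To put the lane count mentioned above in context, here is a rough tally, offered as a sketch rather than a board-level schematic. The three slot widths come from the overview, and 40 PCIe 3.0 lanes per processor is the figure for the Xeon E5-2600 V3 family. The x8 width for the OCP mezzanine connector and the x4 width for the M.2 connector are our assumptions, chosen because they are common widths; we did not confirm how ASUS wires them.

```python
CPU_SOCKETS = 2
LANES_PER_CPU = 40  # PCIe 3.0 lanes per Xeon E5-2600 V3 processor

# Lane consumers on the board. Slot widths are from the review text;
# the OCP and M.2 widths are ASSUMPTIONS made for this illustration.
LANE_CONSUMERS = {
    "PCIe 3.0 x8 slot (first)": 8,
    "PCIe 3.0 x8 slot (second)": 8,
    "PCIe 3.0 x16 slot": 16,
    "OCP mezzanine (assumed x8)": 8,
    "M.2 (assumed x4)": 4,
}


def lane_budget(consumers, sockets=CPU_SOCKETS, lanes_per_cpu=LANES_PER_CPU):
    """Return (lanes used, lanes available, fraction of the platform used)."""
    used = sum(consumers.values())
    total = sockets * lanes_per_cpu
    return used, total, used / total


if __name__ == "__main__":
    used, total, fraction = lane_budget(LANE_CONSUMERS)
    print(f"{used} of {total} CPU lanes in use ({fraction:.0%})")
```

Under these assumptions the tally lands on 44 of 80 lanes, which lines up with the "about half" estimate.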

ASUS RS520-E8-RS8 Management

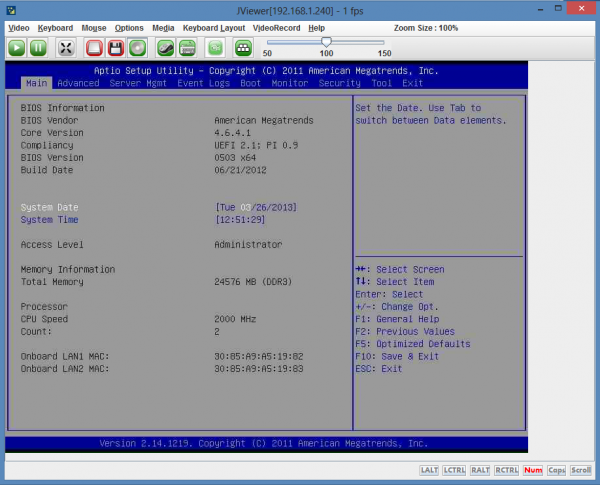

Like many servers today, the ASUS RS520-E8-RS8 provides remote management functionality. We did a deep-dive on the basic ASUS ASMB functionality some time ago, and most of that still applies. ASUS provides iKVM functionality, remote media (e.g. CD) and monitoring in an IPMI 2.0 compliant solution.

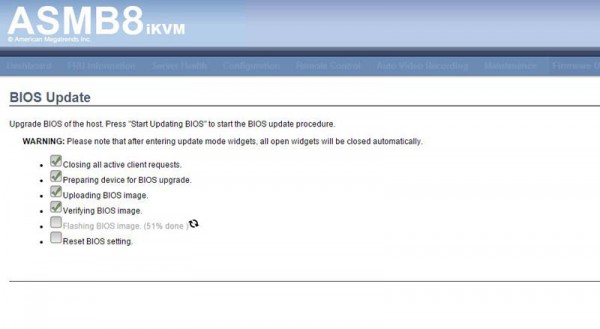

ASUS also allows one to update their BIOS via the WebGUI. This is much easier than having to use a USB drive or boot to UEFI/ DOS.

Overall the solution worked well and we did have to utilize the remote BIOS update feature. We did this over the WAN and VPN and it worked both times we used it.

Conclusion

We have a lot of choices when it comes to the hardware we run STH on. The fact that we procured one of these servers and are using it in production means it is working well for us. The ASUS Z10PR-D16 motherboard in this model is shared with a few of ASUS’ other servers (one 1U review forthcoming). Servicing the motherboard and the system as a whole was easy. We would have liked to have seen two hot swap 2.5″ bays in the front instead of the slim optical drive. Another potential enhancement is to better use the space above the power supplies, possibly as non-hot swap 2.5″ mounting points. We were able to get drives in there using a far from elegant solution. In the end, after its first 45 days in the data center lab, the server is running extremely well and has not had a hiccup. We did have to get on the latest BIOS version, but once that was done during testing the server has been rock solid.

I personally find the Asus servers a lesser option compared to the Intel chassis that come in cheaper when bang-for-buck gets involved. The Asus are available here in Oz but not best supported and thus don’t take off well. Almost as bad as Gigabyte, which is totally unsupported. Intel, Dell, HP and SM have the stakes for us.