Ever since we started our first colocation adventures over two years ago to host STH, there has been the need for a second site. Colocation has an advantage over building your own in-office data center in that you can fairly easily spin up a second location, even for just a few machines. We recently hit a point where we decided to re-evaluate hosting and had a few key findings that led us to expand to a second colocation facility. Another benefit is that we had a list of lessons learned from colocation that made us better buyers the second time around. We already previewed our Las Vegas, Nevada colocation expansion here. As part of this series, we will be discussing the essentials for going colo. This Part 1 will look at how we decided to go with a second site.

The current setup in Las Vegas, Nevada

As a quick bit of background, we now have a half cabinet in Las Vegas, Nevada. It houses a small lab environment, our main backup server and several compute nodes. Here is a quick picture of the servers taken in the middle of setting them up:

While there is absolutely enough hardware there to run STH’s main site, forums, development instances, Linux-Bench.com and several other sites plus a lab, it is still in one cabinet. Las Vegas is an extremely stable area from a natural disaster perspective, as we highlighted before moving in. As STH has grown, it is time for a second site.

Colo (again) or the cloud

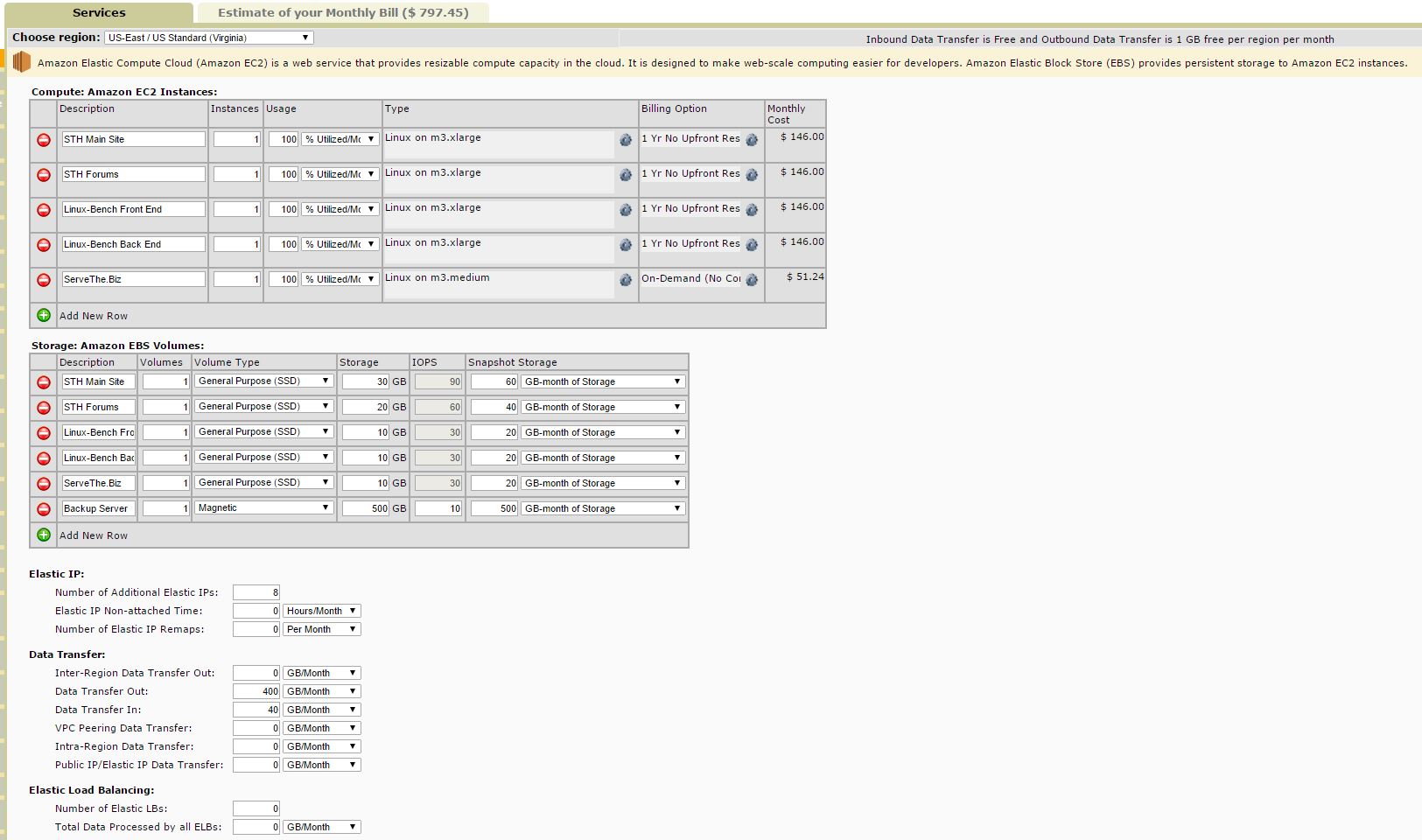

Since we did our initial AWS versus colocation cost comparison piece, Amazon has become significantly less expensive. There is now a No Upfront reserved instance pricing tier as well as lower bandwidth pricing. We did some back-of-the-envelope math using the AWS pricing calculator for just what we would want in a second site. Key VMs would be for STH WordPress, STH Forums, the Linux-Bench front end and back end, and ServeThe.Biz. Usually we have more VMs up for A/B testing, performance tweaks and, at times, HAProxy. We used memory figures for the AWS VMs that are commensurate with what the site actually uses. We also discounted bandwidth because the STH Las Vegas colo would still serve content, so the second site would not serve as much content as our current single site. Here is a picture of a barebones AWS second location setup using 1 yr No Upfront Reserved instances:

The total came to $797.45/month using AWS EC2 m3 instances (link if you want to see the results). The m3.medium and m3.xlarge have less CPU power and less memory than our current VM nodes, but we sized them to what our running VMs consume, including memory caching, after 30 days of uptime. We have experimented with using less RAM on STH, but the net impact was an average of 0.2s longer load times on the main site. While this is not a lot, it is multiplied by millions of page loads, so it does have an impact.

We are already benchmarking well against other hardware review sites, as one can see here, so the goal was not to get the cheapest instances we could. Instead, we were looking for roughly comparable levels of performance. The AWS instances are still likely to be slower, but AWS does have a superior management infrastructure and there are upgrades over time.

The $800/mo does include AWS hardware, but the instances have a combined 72GB of RAM. Compared to what we have been paying for hardware, we could build a single server to handle that load for:

- $930 for 96GB of RAM

- $1,200 for processors

- $600 for a barebones server

- $650 for SSD

- $200 for magnetic storage

That is $3,580 total, based on our recent eBay buys, and it would give us more storage, compute and RAM than we would have on AWS. Over 12 months it works out to around $300/mo (of course, if you kept the server longer than a year, that number goes down quite a bit). Check the Great Deals section of the forums often to save a lot of money on a similar project.
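For anyone who wants to rerun this math with their own prices, here is a minimal sketch of the calculation. The part prices are the ones listed above; the `PARTS` dictionary, the `monthly_hardware_cost` helper and the simple straight-line amortization are our own illustrative assumptions, not a formal depreciation model.

```python
# Hardware line items from the list above (USD).
PARTS = {
    "96GB of RAM": 930,
    "processors": 1200,
    "barebones server": 600,
    "SSD": 650,
    "magnetic storage": 200,
}


def monthly_hardware_cost(total: float, months: int) -> float:
    """Straight-line amortization: spread the purchase price evenly over `months`.

    Assumption: no resale value, no failures, no financing costs.
    """
    if months <= 0:
        raise ValueError("months must be positive")
    return total / months


total = sum(PARTS.values())
print(f"Hardware total: ${total:,}")

# Hypothetical service lifetimes to compare; the article only uses 12 months.
for months in (12, 24, 36):
    print(f"{months} months: ${monthly_hardware_cost(total, months):.2f}/mo")
```

Keeping the same box for 24 or 36 months drops the figure to roughly $149 or $99 per month, which is why the one-year number is the conservative case.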

So compared to AWS’s $800/mo, we would spend $300/mo for roughly equivalent hardware and that leaves $500 for colo. There are plenty of places we could put a 1U server for under $100/mo with all of the power and network we would need but we wanted something else: a local STH data center lab. At the end of the day, if one is savvy with shopping for hardware, and the needs are more static rather than elastic, colocation can save a bundle.

Wrapping up Part 1

We are actually spending under $500/month (with a move-in discount) for our full 42U rack with power and 100Mbps unmetered, but we are using the remainder of the space for a testing lab. Having a lot of hardware on hand made the decision to go colo an easy one for us. After our first experience, scaling up to a second site and getting a functional installation was relatively easy. Even with the regular AWS discounts, it was less expensive to go colo this time around. We are writing this series about a week or two in arrears, so one can see the 9 parts we have planned here or follow the second colo facility’s build log here. There is much more to come as the build has progressed, so stay tuned.

N.B. There are a few big caveats to our calculations above that should be noted:

- First, we are using an absolute bare minimum in terms of nodes. We do not have any of the development instances assumed to be in AWS.

- Second, as we found with our initial calculations, we actually have twice as many production VMs as we originally expected. So we are using a snapshot of today, not an estimate of tomorrow.

- Third, we did not factor in spares, but the $400/mo or $4,800/year we would save in hosting on this base set would be enough to buy a second box and host it if we wanted, and we would still break even in the first year.

- Fourth, we also did not use dynamically expanding memory or memory ballooning in our calculations. We used a static memory figure, so it is likely we could save quite a bit on hardware. A 64GB Xeon D-1540 configuration, for example, would have saved us $1,500 off of our purchase price and likely would save 20% or more on ongoing colocation costs.
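The spares caveat above is easy to sanity check. The sketch below uses the article's rounded figures ($800/mo AWS, $300/mo amortized hardware, a $3,580 server). The $100/mo hosting number is an assumption: it takes the "under $100/mo" 1U quote at its ceiling, which is the reading that reproduces the $400/mo savings figure. The function names are hypothetical helpers for illustration only.

```python
AWS_MONTHLY = 800       # rounded AWS estimate from the article
HARDWARE_MONTHLY = 300  # $3,580 server amortized over 12 months, rounded
COLO_MONTHLY = 100      # assumption: 1U hosting at the "under $100/mo" ceiling
SERVER_PRICE = 3580     # purchase price of one server


def monthly_savings(aws: int, hardware: int, colo: int) -> int:
    """What colo saves per month versus the AWS estimate."""
    return aws - (hardware + colo)


def spare_first_year_cost(server_price: int, colo_monthly: int, months: int = 12) -> int:
    """Cost of buying a second (spare) box and hosting it for `months`."""
    return server_price + colo_monthly * months


savings_per_year = monthly_savings(AWS_MONTHLY, HARDWARE_MONTHLY, COLO_MONTHLY) * 12
spare_cost = spare_first_year_cost(SERVER_PRICE, COLO_MONTHLY)

print(f"Yearly savings vs. AWS: ${savings_per_year:,}")
print(f"Spare box plus a year of hosting: ${spare_cost:,}")
print("Breaks even in year one:", spare_cost <= savings_per_year)
```

Under these assumptions the spare costs $4,780 in its first year against $4,800 in savings, so the break-even claim holds, though only by a slim margin.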

We use AWS at our startup but I really like this idea. I’m going to show my colleagues. I don’t agree with everything, but at least you have a perspective on colo versus the cloud which is not informed by a sheep mentality.

For home use it may be nice, but for businesses you have to calculate in the support contracts as well.

I doubt any business with on-premises hardware would go without a support contract on their servers.

And since those support contracts are rather pricey, an AWS solution might be cheaper.