Recently we began testing an Intel Xeon D-1540 microserver node in a very custom test rig that allowed us to test a single Beverly Cove node outside of its normal chassis. This gave us access to a second platform with some unique features to test, albeit in an engineering test rig instead of a commercial system. After testing the pre-production Broadwell-DE Intel Xeon D-1540 under Ubuntu 14.04 LTS and then VMware ESXi 6.0, we turned next to Windows Server 2012 R2. Today we have an interesting result: an Intel X552 NIC showed up in Windows Server 2012 R2 after drivers were installed, and the best part is that we confirmed working 10Gbps networking.

Test Configuration

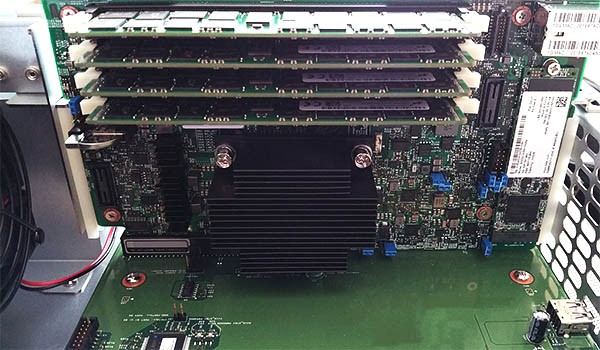

For this test, we utilized a special platform from Intel that allows one to manually configure its microserver nodes. Let us be clear – this is not a platform you want to have at home. It is loud, inefficient from a space perspective, and not overly user-friendly. On the other hand, if you need to get into a microserver node and have no other choice, this is a good option. We had a pre-production Intel Beverly Cove node installed for testing.

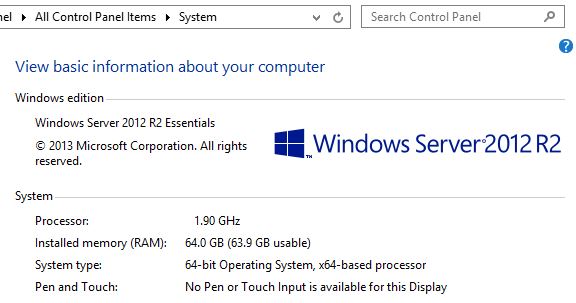

The basic configuration is a single-board microserver node with 4 DIMM slots. This version had 4x 16GB DDR4 RDIMMs and an onboard Intel 530 180GB SATA M.2 SSD installed. Instead of the node being placed into a dense chassis, a custom PCB accepts the node and provides power, fan connectors, 6x SATA 3 ports, a PCIe 3.0 x16 slot, and an SFP+ connection. For developers and admins, this is a nice platform, and it is also great for journalists looking to test the latest hardware! This pre-production unit also appears to be the 1.9GHz version of the Intel Xeon D-1540, with its 8 cores and 16 threads clocked 100MHz below retail speed. Still, this is our first Broadwell-DE part with 10Gbps networking!

Intel Xeon D-1540 running with Windows Server 2012 R2 Essentials

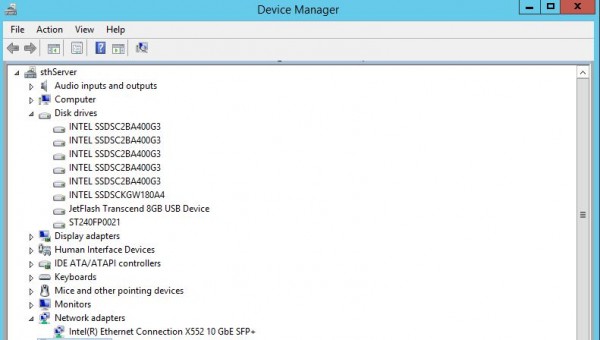

We have a Seagate 600 Pro 240GB in the lab with Windows Server 2012 R2 Essentials installed. Aside from the Intel 530 180GB M.2 drive, our test system has 6x Intel DC S3700 400GB drives. We removed one of those drives and ran the Seagate SSD in its place. The OS had been installed on a dual Haswell-EP system, so this was just a simple test to see what would happen. After a short time, we saw our 1.9GHz CPU and 64GB of DDR4:

Looking at Device Manager on the Supermicro X10SDV-F system we had been using, we saw the dual Intel i350-AM2 gigabit Ethernet ports and something labeled the Intel 82599 Multi-Function Network Device. We also saw the Intel C220 PCH show up yet again, so Windows, Ubuntu, and VMware ESXi 6.0 all point to the integrated PCH being Lynx Point based.

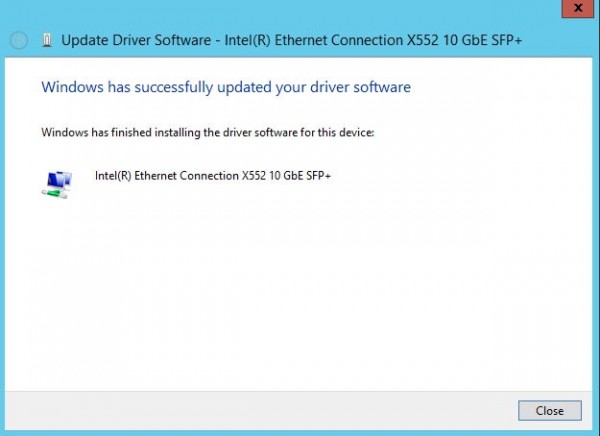

On our Intel development platform, network access was an issue, but we had some special drivers on a USB stick. Once those were installed, we had network access. We did find something very different, though:

At this point, searching for the Intel Ethernet Connection X552 10 GbE SFP+ turned up very little. We did, however, see the Ethernet Connection X552 10 GbE Backplane referenced in some of the ixgbe Linux driver documentation.

Since there is only one network adapter present, this SFP+ version appears to be an implementation of the SoC's 10GbE controller intended for in-chassis backplane networking, modified to make it accessible via an SFP+ port. This is likely something one might see within a microserver chassis, but it is unlikely we will see the X552 in standard 1U servers. Still, this is our first working confirmation of Broadwell-DE 10GbE.

I will again note that this is our second pre-production silicon system using development drivers, so there is a good chance the naming could change.

Having a bit of fun: Gaming on Broadwell-DE

Of course, the Intel Broadwell-DE is a server part and does not have an integrated GPU. It usually relies on the BMC for basic video output. Our Supermicro unit used the ASPEED AST2400 BMC, which is almost ubiquitous at this point. Our microserver test bed did not have a BMC, so we needed a physical keyboard, monitor, and mouse. KVM-over-IP has spoiled us to the point that we had to dig around the lab to find these. In the PCIe slot, we had a passively cooled Sapphire AMD Radeon 5450 PCIe x4 video card. Our setup was an 8-core/16-thread processor, 64GB of RAM, six enterprise SSDs, 10GbE SFP+ networking, and a PCIe video card, and we were testing over the weekend after a long week.

We decided to fire up one of the most popular games out there: League of Legends. We have never done this type of thing before, but we had to prove it could work. After all, if it can play a video game in this pre-production configuration, it should have no problem with VDI using Citrix or VMware Horizon.

Playable, maybe, but not overly enjoyable on the low-end AMD video card at 1920×1200 resolution. OK, this effort ended in defeat, but it was fun anyway. With a faster GPU, this would certainly be a better experience. Of course, since we had (many) cores to spare, we were able to dedicate the <10% of CPU required to also run 10GbE network testing. If one wanted a very low power (45W TDP) SoC to game with while running virtual machines in Hyper-V on the side, this is probably passable for casual gamers. To test this, we started re-running some simple network tests midway through.

Network testing on the 10GbE NIC

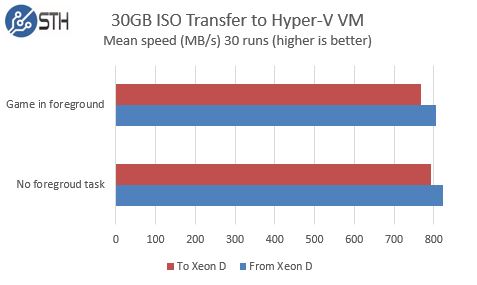

For this test, we also connected our test storage server to our 10Gb switch. In the Broadwell-DE server, we used a RAID 0 array of 4x Intel DC S3700 400GB SSDs, passed through to a Hyper-V VM running Ubuntu 14.04 LTS with ZFS on Linux. On the storage server, we used 4x Toshiba PX02SMF040 400GB SAS3 SSDs connected to an LSI / Avago SAS 3008 controller. That gave us about 2x the disk bandwidth needed on both ends to saturate 10GbE. We used an 8GB RAM disk on both the VM and the storage test server for writes and the RAID 0 SSD arrays for reads, and simply passed a large ISO image file between the machines.
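
As a rough sanity check on the "about 2x" disk bandwidth figure, the arithmetic can be sketched in Python. This is a minimal sketch under stated assumptions: the 500 MB/s per-drive figure is a hypothetical placeholder rather than a measurement from this test, RAID 0 is assumed to scale perfectly linearly, and 10GbE is treated at its raw 10 Gb/s line rate with no protocol overhead.

```python
# Back-of-the-envelope check: can a striped (RAID 0) SSD array feed a 10GbE link?
# Assumptions (not measured in this article): RAID 0 throughput scales
# linearly with drive count, and 10GbE is treated at its raw 10 Gb/s line
# rate, ignoring Ethernet/IP/TCP framing overhead.

LINK_GBPS = 10.0  # nominal 10GbE line rate in gigabits per second


def link_mb_per_s(gbps: float) -> float:
    """Convert a line rate in Gb/s to MB/s (decimal units: 1 MB = 10**6 bytes)."""
    return gbps * 1000 / 8


def raid0_mb_per_s(drives: int, per_drive_mb_s: float) -> float:
    """Ideal RAID 0 sequential throughput: drive count times per-drive speed."""
    return drives * per_drive_mb_s


def headroom(drives: int, per_drive_mb_s: float, gbps: float = LINK_GBPS) -> float:
    """Ratio of ideal array bandwidth to link bandwidth (>1: disks are not the bottleneck)."""
    return raid0_mb_per_s(drives, per_drive_mb_s) / link_mb_per_s(gbps)


if __name__ == "__main__":
    # Hypothetical per-drive figure; substitute measured or vendor-rated numbers.
    per_drive = 500.0
    ratio = headroom(drives=4, per_drive_mb_s=per_drive)
    print(f"link: {link_mb_per_s(LINK_GBPS):.0f} MB/s, "
          f"array: {raid0_mb_per_s(4, per_drive):.0f} MB/s, headroom: {ratio:.2f}x")
```

With the placeholder 500 MB/s per drive, this works out to roughly 1.6x; the ratio moves proportionally with whatever per-drive figure is plugged in.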

Overall, the results are what we would expect for a simple test with no tuning. We saw transfer speeds of around 800MB/s, which is solid for a NAS platform, and there is headroom in this platform to drive more bandwidth. To put this in perspective, we gave the Hyper-V VM four cores while leaving the remainder for playing the game. Massive transfers in the background did not make the game stutter even slightly. This is a 45W TDP SoC playing a 3D game while running an all-SSD NAS that pushes around 800MB/s over a 10GbE NIC. That is fairly impressive for those building converged servers.
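
To put the roughly 800MB/s figure in context, here is a small Python sketch that converts it to a fraction of the 10GbE line rate. It assumes the reported figure is in decimal megabytes (if the tool actually reported binary MiB/s, the result would be roughly 5% higher), and it ignores protocol overhead, so it understates how busy the wire actually was.

```python
# Rough utilization check for an observed transfer rate on a 10GbE link.
# Assumption: the rate is in decimal MB/s (10**6 bytes per second).
# Framing and TCP/IP overhead are ignored, so this is payload utilization only.


def mb_s_to_gbps(mb_per_s: float) -> float:
    """Convert a payload rate in MB/s to Gb/s."""
    return mb_per_s * 8 / 1000


def utilization(mb_per_s: float, link_gbps: float = 10.0) -> float:
    """Fraction of the nominal line rate consumed by the payload."""
    return mb_s_to_gbps(mb_per_s) / link_gbps


if __name__ == "__main__":
    observed = 800.0  # approximate transfer speed reported in this article
    print(f"{mb_s_to_gbps(observed):.1f} Gb/s, {utilization(observed):.0%} of 10GbE")
```

At about 64% of nominal line rate, this is consistent with the observation that an untuned setup still leaves bandwidth headroom on the link.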

Conclusion

Again, this is a pre-production Xeon D-1540 running 100MHz below production speeds in a very custom engineering test rig. Given that we are already seeing great performance from the Xeon D-1540, and the production version should be ~5% faster, these are great results. We still need to dig into the Intel X552 SFP+ networking, as it does appear to be a backplane networking solution modified for this engineering rig. We should be getting our production samples of Broadwell-DE products soon and will continue to verify 10Gbps networking.
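
The "~5% faster" estimate follows directly from the clock figures given above (a 1.9GHz pre-production part, 100MHz below retail). A minimal Python sketch of that arithmetic, assuming CPU-bound performance scales roughly linearly with base clock and ignoring Turbo Boost, memory, and I/O limits:

```python
# Expected clock-speed uplift from the pre-production to the retail Xeon D-1540.
# Figures from the article: 1.9GHz pre-production part, 100MHz below retail.
# Assumption: CPU-bound performance scales roughly linearly with base clock;
# Turbo Boost behavior and memory/I-O bottlenecks are ignored.


def clock_uplift(base_ghz: float, delta_mhz: float) -> float:
    """Relative frequency increase when adding delta_mhz to base_ghz."""
    return (delta_mhz / 1000) / base_ghz


if __name__ == "__main__":
    base, delta = 1.9, 100
    print(f"retail clock: {base + delta / 1000:.1f}GHz, "
          f"uplift: {clock_uplift(base, delta):.1%}")
```

The result is a little over 5%, which is an upper bound for CPU-bound work; I/O-bound tests such as the network transfers above would likely see less.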

Very interesting platform!

Can you please run the PowerShell command below and see if the 10Gb network adapter is RDMA capable?

Get-NetAdapterRdma -Name * | Where-Object -FilterScript { $_.Enabled }

I never thought of using a game to test for VDI before. Good thinking. I like Xeon D. I want more microserver reviews on this website.

Sorry, English is not my primary language.

How do you pass data between the machines (FTP/SMB/NFS/iSCSI/Netcat)?

Baatch – that did not come up as RDMA capable.

Where can one get this board? What's the model? I like the SFP.