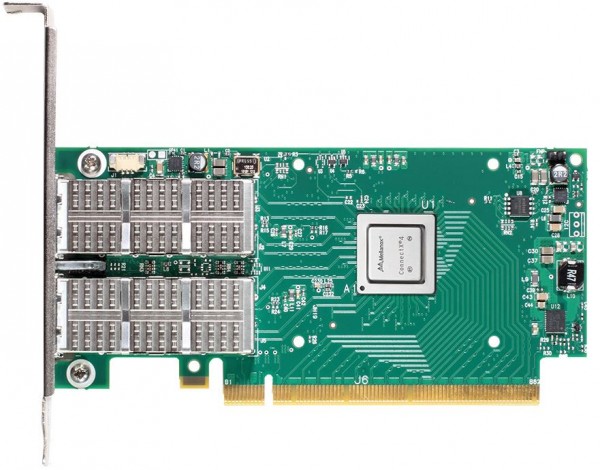

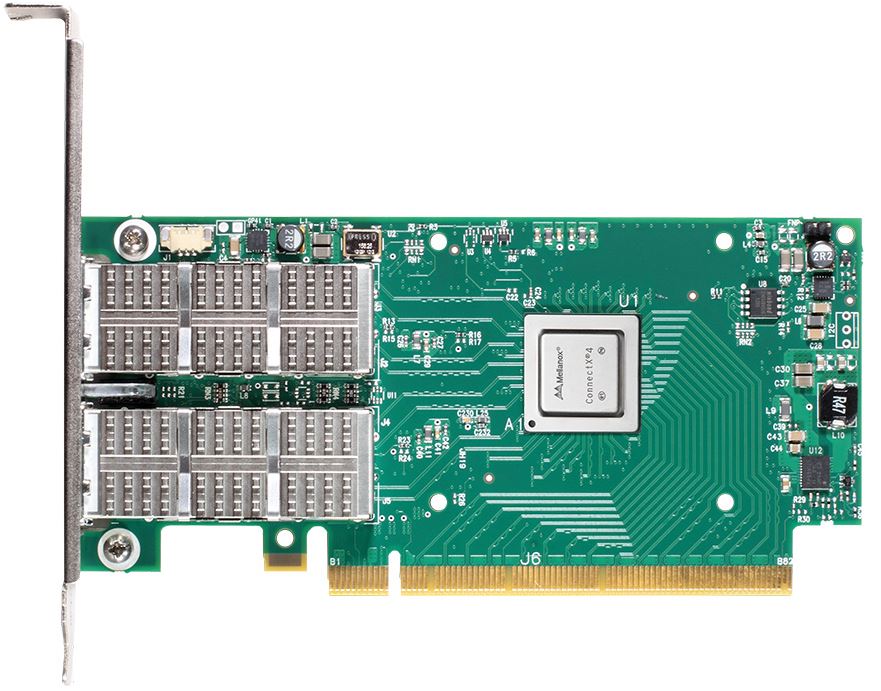

Mellanox recently announced its ConnectX-4 VPI cards. These cards can handle either 100Gbps EDR InfiniBand or 100Gb Ethernet. They can also scale down to lower port speeds, making them extremely fast and versatile. Many STH forum members are running Mellanox ConnectX VPI gear of various generations in InfiniBand or Ethernet mode with much success. Mellanox cards often perform well and reach the market earlier than competing parts at each speed step. As an example, the new ConnectX-4 cards offer 100Gb Ethernet at a time when that speed is still relatively rare even for Ethernet switch stacking/uplink ports. In fact, these cards are so fast they require a PCIe 3.0 x16 slot and are still limited by that slot’s performance.

Compute power is still doubling on a fairly consistent basis. Storage has gone absolutely wild in terms of performance, with 25W PCIe cards now matching the speeds of multi-rack high-end storage systems from six years ago. Yet progress in networking has slowed. 10Gb Ethernet has only just started to become the baseline for most environments. 10Gb switch ports are still relatively expensive, so we have not seen the move down-market that we have seen with other technologies. 40Gb Ethernet is making strides, as we saw with our Intel Fortville piece. Intel is pushing aggressively to make 40Gb Ethernet the next standard, but we are seeing few 40Gb Fortville parts integrated onto motherboards as standard BOM components.

Meanwhile, Mellanox has been on an absolute tear. HPC lists are dominated by Mellanox interconnect technologies. EMC, Oracle and others are using Mellanox InfiniBand/VPI products for fast storage networking. In fact, this site has been replicating copies every five minutes over a 40Gb Ethernet Mellanox solution for four months now, which has had tangible latency benefits.

The 100Gbps VPI products are excellent because a simple driver checkbox change switches a port between InfiniBand and Ethernet. STH published a Mellanox VPI Infiniband-Ethernet guide a few months ago on how to do this with ConnectX-3 generation VPI adapters. Now we are seeing the QSFP+ standard hit even higher speeds with that same functionality.

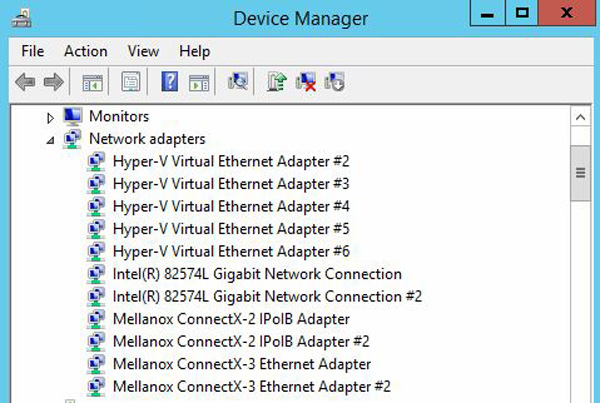

Perhaps the most exciting part about this for readers is that it will push pricing of ConnectX-3 cards down. ConnectX-2 cards are being deprecated in terms of driver support; however, we have already seen many Mellanox ConnectX-3 deals spotted in the forums. Prices of the newer ConnectX-3 cards should come down even further as companies upgrade to the ConnectX-4 generation.

There is one major drawback to this generation of cards: they are limited to the PCIe 3.0 bus. As we saw with the dual-port Intel Fortville 40GbE adapters, a PCIe 3.0 x8 link can only handle throughput in the mid-60Gbps range. So with a PCIe 3.0 x16 link we would expect a maximum below 130Gbps, which still makes Mellanox ConnectX-4 cards blisteringly fast. PCIe x8 slots are “standard” in servers, so x16 slots will require a bit of motherboard hunting. Still, these do provide exciting and extreme performance!
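The x8 and x16 figures above can be roughly reproduced from the PCIe 3.0 link parameters. The following is a minimal sketch, not a benchmark: it assumes the standard PCIe 3.0 signalling rate of 8 GT/s per lane with 128b/130b encoding and ignores packet and protocol overhead, so real-world throughput will land somewhat lower. The function and constant names are made up for illustration.

```python
# Rough PCIe 3.0 link bandwidth estimate (illustrative sketch only).
# Assumptions: 8 GT/s per lane, 128b/130b line encoding, and no
# allowance for packet/protocol overhead, so these are upper bounds.

PCIE3_GT_PER_LANE = 8.0          # gigatransfers per second, per lane
PCIE3_ENCODING = 128.0 / 130.0   # usable fraction after 128b/130b encoding


def pcie3_link_gbps(lanes: int) -> float:
    """Return the theoretical one-direction bandwidth in Gbps for a PCIe 3.0 link."""
    return lanes * PCIE3_GT_PER_LANE * PCIE3_ENCODING


if __name__ == "__main__":
    for lanes in (8, 16):
        print(f"PCIe 3.0 x{lanes}: ~{pcie3_link_gbps(lanes):.1f} Gbps before protocol overhead")
```

Under these assumptions an x8 link tops out at roughly 63Gbps and an x16 link at roughly 126Gbps, which is consistent with the mid-60Gbps and sub-130Gbps estimates in the text.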

Are they shipping yet? I searched around and really cannot find many for sale, and the ones I do find don't seem to actually be in stock.